[Project] [Paper] [Demo] [Dataset (Google)] [Dataset (Baidu)] [Dataset (Ali)]

Guangze Zheng¹, Shijie Lin¹, Haobo Zuo¹, Changhong Fu², Jia Pan¹*

PyTorch implementation of NetTrack. State-of-the-art (SOTA) performance on BFT, TAO, TAO-OW, AnimalTrack, and GMOT-40 without any training or fine-tuning!

- [2024/03/16] 💻 Code has been released.

- [2024/03/01] 📰 NetTrack has been accepted by CVPR 2024.


Prerequisites

```bash
conda create -n nettrack python=3.10  # please use the default version
conda activate nettrack
pip3 install torch torchvision  # optionally add: --index-url https://download.pytorch.org/whl/cu121
pip3 install -r requirements.txt
pip3 install cython
pip3 install 'git+https://github.com/cocodataset/cocoapi.git#subdirectory=PythonAPI'
pip3 install cython_bbox
sudo apt update
sudo apt install ffmpeg
```

Install Grounding DINO and CoTracker:

```bash
pip install git+https://github.com/IDEA-Research/GroundingDINO.git
pip install git+https://github.com/facebookresearch/co-tracker.git@8d364031971f6b3efec945dd15c468a183e58212
```
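Before moving on, you may want to confirm that the command-line tools used in the installation steps are on your `PATH`. The following is a minimal sketch that is not part of the NetTrack repository; the function name `missing_tools` is hypothetical, and it relies only on the POSIX `command -v` builtin.

```bash
# Hypothetical helper (not part of NetTrack): print the name of every tool
# that cannot be found on PATH. Returns 0 if all tools are found, 1 otherwise.
missing_tools() {
    status=0
    for tool in "$@"; do
        # command -v succeeds only if the name resolves to a command
        if ! command -v "$tool" >/dev/null 2>&1; then
            echo "$tool"
            status=1
        fi
    done
    return "$status"
}
```

For example, `missing_tools conda pip3 ffmpeg wget` prints nothing when all four tools are found. To check the Python side inside the activated `nettrack` environment, something like `python3 -c "import torch, groundingdino, cotracker, cython_bbox, pycocotools"` should exit silently; these import names are assumed from the respective packages rather than stated in this README.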


Prepare weights: download the default pretrained Grounding DINO and CoTracker models:

```bash
cd weights
cd groundingdino
wget https://huggingface.co/ShilongLiu/GroundingDINO/resolve/main/groundingdino_swinb_cogcoor.pth
cd ..
mkdir cotracker && cd cotracker
wget https://dl.fbaipublicfiles.com/cotracker/cotracker_stride_4_wind_8.pth
cd ..
```
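To double-check the downloads, a small helper such as the one below can be run from the repository root (an assumption, since the commands above start with `cd weights`). It is a hypothetical sketch, not a NetTrack script, and it only looks for the two checkpoint filenames used above.

```bash
# Hypothetical helper (not part of NetTrack): report which of the two default
# checkpoints are missing under a weights directory (default: ./weights).
# Returns 0 if both are present, 1 otherwise.
check_weights() {
    root="${1:-weights}"
    missing=0
    for f in \
        "$root/groundingdino/groundingdino_swinb_cogcoor.pth" \
        "$root/cotracker/cotracker_stride_4_wind_8.pth"
    do
        # -s is true only if the file exists and is non-empty
        if [ ! -s "$f" ]; then
            echo "missing: $f"
            missing=1
        fi
    done
    return "$missing"
}
```

Typical use from the repository root is `check_weights && echo "weights OK"`, or `check_weights /path/to/weights` if the checkpoints live elsewhere.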


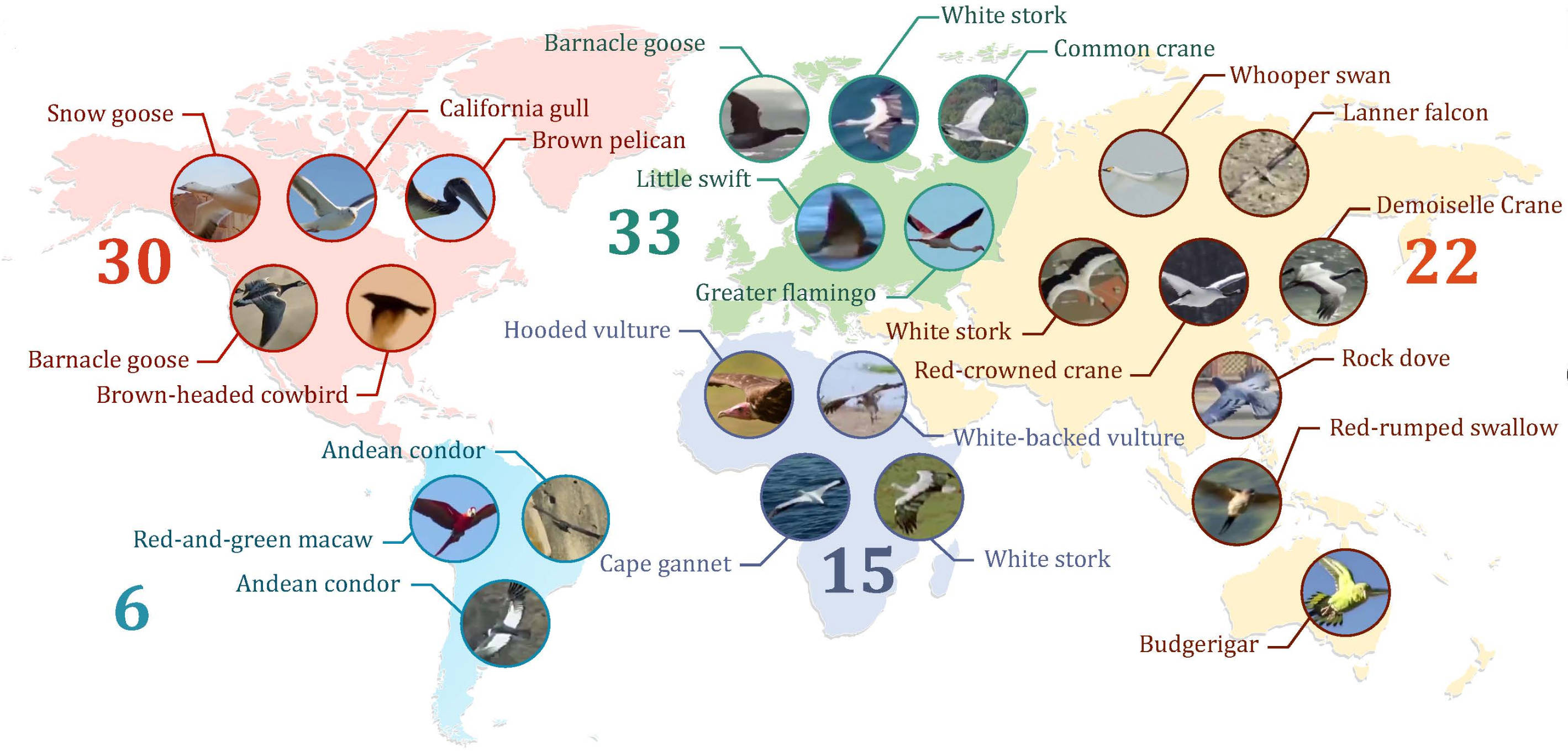

📊 Bird flock tracking (BFT) dataset:

- 🎬 106 bird flight videos covering 22 species and 14 scenes

- 🎯 collected for artificial intelligence and ecological research

- 📈 We provide a Multiple Object Tracking (MOT) benchmark for evaluating open-world MOT of highly dynamic objects.


📥 Download BFT dataset v1.5

- [Recommended] Download with Google Drive

- Download with Baidu Pan

- Download with AliPan

Due to AliPan policy restrictions, please run the .exe file directly to decompress the data.


Run the default demo video:

```bash
sh tools/demo/demo_seq.sh
```

The results will be written to `./output/track_res`.
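If the demo finishes but you are unsure whether anything was written, a check along these lines may help. It is a hypothetical helper, not part of NetTrack, and it only tests that `./output/track_res` exists and is non-empty; it makes no assumption about the result file format.

```bash
# Hypothetical helper (not part of NetTrack): succeed only if the given
# directory exists and contains at least one entry
# (default: ./output/track_res).
has_results() {
    dir="${1:-./output/track_res}"
    [ -d "$dir" ] || return 1
    # ls -A lists every entry except . and ..; empty output means an empty directory
    [ -n "$(ls -A "$dir")" ]
}
```

For example: `sh tools/demo/demo_seq.sh; has_results || echo "no results in ./output/track_res"`.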

Evaluate

Please refer to `./docs/evalutate.md`.

Watch our video on YouTube!

The primary data of the BFT dataset comes from the BBC nature documentary series Earthflight. The code is based on GroundingDINO, CoTracker, and ByteTrack. Dr. Ming-Shan Wang provided valuable biological suggestions for this work. The authors thank them for their excellent work and contributions.

If you find this dataset useful, please cite our work. We look forward to your suggestions for making this dataset better!

```bibtex
@Inproceedings{nettrack,
  title={{NetTrack: Tracking Highly Dynamic Objects with a Net}},
  author={Zheng, Guangze and Lin, Shijie and Zuo, Haobo and Fu, Changhong and Pan, Jia},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
  year={2024},
  pages={1-8}
}
```