This repository hosts the official PyTorch implementation of the paper: "AquaLoRA: Toward White-box Protection for Customized Stable Diffusion Models via Watermark LoRA" (Accepted by ICML 2024).

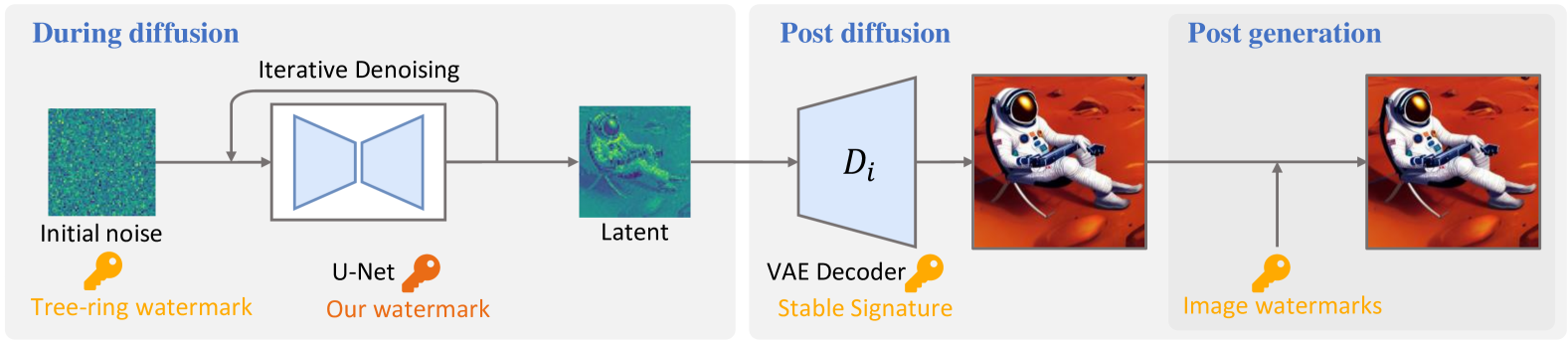

We introduce AquaLoRA, the first method to address white-box protection. Our approach integrates watermark information directly into the U-Net of Stable Diffusion (SD) models through a watermark LoRA module trained in a two-stage process. This module includes a scaling matrix, which allows the embedded message to be updated flexibly without retraining. To maintain image fidelity, we design Prior Preserving Fine-Tuning, which integrates the watermark while keeping its impact on the model's output distribution small. Extensive experiments and ablation studies validate the effectiveness of our design.
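The scaling-matrix idea can be illustrated with a minimal plain-Python sketch. It assumes the watermark LoRA update has the form ΔW = B · diag(s) · A, with one scale per rank dimension, and that s is derived from the secret message bits. The bit-to-scale mapping `message_to_scale` and the other function names are hypothetical and do not reflect AquaLoRA's actual code. Because the trained A and B stay fixed, embedding a different message only changes s.

```python
# Hypothetical sketch of a LoRA update with a message-dependent scaling matrix:
#   delta_W = B @ diag(s) @ A, with s derived from the secret message bits.
# The bit-to-scale mapping below is an illustrative assumption, not AquaLoRA's.
from typing import List

Matrix = List[List[float]]


def matmul(x: Matrix, y: Matrix) -> Matrix:
    """Plain-Python matrix product x @ y."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]


def message_to_scale(bits: List[int]) -> List[float]:
    """Hypothetical mapping: bit 1 -> +1.0, bit 0 -> -1.0."""
    return [1.0 if b else -1.0 for b in bits]


def lora_delta(down: Matrix, up: Matrix, bits: List[int]) -> Matrix:
    """Compute up @ diag(s) @ down for the message encoded by `bits`.

    down (A): rank x d_in, up (B): d_out x rank, len(bits) == rank (assumed).
    """
    s = message_to_scale(bits)
    if len(s) != len(down):
        raise ValueError("message length must equal the LoRA rank in this sketch")
    # diag(s) @ down simply scales row i of `down` by s[i].
    scaled_down = [[s_i * v for v in row] for s_i, row in zip(s, down)]
    return matmul(up, scaled_down)
```

For example, with A and B both set to the 2x2 identity, `lora_delta(A, B, [1, 0])` and `lora_delta(A, B, [1, 1])` yield different updates from the same trained factors, which is the property that lets the message change without retraining.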

Run the following commands to set up the environment:

```bash
git clone https://github.com/Georgefwt/AquaLoRA.git
cd AquaLoRA
conda create -n aqualora python=3.10
conda activate aqualora
pip install -r requirements.txt
```

For training, see train/README.md for details.

For evaluation, see evaluation/README.md for details.

We provide scripts to merge the watermark LoRA into the Stable Diffusion model itself, enabling protection for customized models. First convert the trained LoRA from diffusers format to WebUI format, then merge it into your customized model:

```bash
cd scripts
python diffusers_lora_to_webui.py --src_lora <your train folder>/<secret message>/pytorch_lora_weights.safetensors --tgt_lora watermark.safetensors
python merge_lora.py --sd_model <customized model safetensors> --models watermark.safetensors --save_to watermark_SDmodel.safetensors
```

Trained checkpoints for each stage are available on Hugging Face:

| Stage | Link |
|---|---|
| Pretrained Latent Watermark | huggingface.co/georgefen/AquaLoRA-Models/tree/main/pretrained_latent_watermark |
| Prior-preserving Fine-tuned | huggingface.co/georgefen/AquaLoRA-Models/tree/main/ppft_trained |
| Robustness Enhanced (opt.) | huggingface.co/georgefen/AquaLoRA-Models/tree/main/rob_finetuned |
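For intuition, merging a LoRA into a base checkpoint, as `merge_lora.py` does for the watermark LoRA, amounts to adding the low-rank update to each targeted weight: W' = W + ratio · (alpha / rank) · B A. The plain-Python sketch below shows this for a single linear weight under that standard LoRA convention. The function names are hypothetical, and the exact scaling and layer handling in `merge_lora.py` (which borrows from sd-scripts) may differ.

```python
# Hypothetical sketch of merging one LoRA pair into one linear weight:
#   W' = W + ratio * (alpha / rank) * (up @ down)
# Real merge scripts iterate over every targeted layer; this shows one.
from typing import List, Optional

Matrix = List[List[float]]


def matmul(x: Matrix, y: Matrix) -> Matrix:
    """Plain-Python matrix product x @ y."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]


def merge_lora_weight(
    base: Matrix,
    down: Matrix,
    up: Matrix,
    ratio: float = 1.0,
    alpha: Optional[float] = None,
) -> Matrix:
    """Return base + ratio * (alpha / rank) * (up @ down).

    base: d_out x d_in, down (A): rank x d_in, up (B): d_out x rank.
    If alpha is None, the alpha / rank factor is taken as 1 (an assumption).
    """
    rank = len(down)
    scale = ratio * (alpha / rank if alpha is not None else 1.0)
    delta = matmul(up, down)
    if len(delta) != len(base) or len(delta[0]) != len(base[0]):
        raise ValueError("LoRA update shape does not match the base weight")
    # Element-wise add of the scaled low-rank update to the base weight.
    return [
        [w + scale * d for w, d in zip(w_row, d_row)]
        for w_row, d_row in zip(base, delta)
    ]
```

Once merged, the update lives in the weights themselves, so the saved model no longer needs a separate LoRA file; setting `ratio=0.0` leaves the base weight unchanged.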

Release checklist:

- training code
- evaluation code
- release training dataset
- release trained checkpoints

Download AquaLoRA-Models from Hugging Face into the project's main directory, then launch the Gradio demo by running `python run_gradio_demo.py`.
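One way to fetch the checkpoints is `huggingface_hub.snapshot_download`, sketched below. Assumptions: `huggingface_hub` is installed, the script runs from the project's main directory, and `run_gradio_demo.py` expects the files in a folder named `AquaLoRA-Models` (unverified). The stage keys (`pretrained`, `ppft`, `robust`) and the helper names are hypothetical; only the repository ID and subfolder names come from the checkpoint links above.

```python
# Hypothetical helper for downloading AquaLoRA-Models from the Hugging Face Hub.
# Assumes huggingface_hub is installed and the demo reads ./AquaLoRA-Models.
from typing import Dict, List, Optional

REPO_ID = "georgefen/AquaLoRA-Models"

# Hypothetical short keys mapped to the subfolders listed in the checkpoint table.
STAGE_SUBFOLDERS: Dict[str, str] = {
    "pretrained": "pretrained_latent_watermark",
    "ppft": "ppft_trained",
    "robust": "rob_finetuned",
}


def patterns_for(stages: Optional[List[str]]) -> Optional[List[str]]:
    """Build allow_patterns for the chosen stages; None means download everything."""
    if stages is None:
        return None
    unknown = [s for s in stages if s not in STAGE_SUBFOLDERS]
    if unknown:
        raise ValueError(f"unknown stage(s): {unknown}")
    return [f"{STAGE_SUBFOLDERS[s]}/*" for s in stages]


def download_models(local_dir: str = "AquaLoRA-Models",
                    stages: Optional[List[str]] = None) -> str:
    """Download the repo (or selected stage subfolders) and return the local path."""
    # Imported lazily so the helpers above work without huggingface_hub installed.
    from huggingface_hub import snapshot_download

    return snapshot_download(
        repo_id=REPO_ID,
        local_dir=local_dir,
        allow_patterns=patterns_for(stages),
    )
```

Calling `download_models()` with no arguments mirrors downloading the whole repository; `download_models(stages=["ppft"])` would fetch only the prior-preserving fine-tuned weights.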

This code builds on the diffusers library. In addition, we borrow code from the following repositories:

- sd-scripts for merging the LoRA into the model.
- dreamsim for computing the DreamSim metric.
- pytorch-fid for computing the FID metric.

If you use this code for your research, please cite the following work:

```bibtex
@article{feng2024aqualora,
  title={AquaLoRA: Toward White-box Protection for Customized Stable Diffusion Models via Watermark LoRA},
  author={Feng, Weitao and Zhou, Wenbo and He, Jiyan and Zhang, Jie and Wei, Tianyi and Li, Guanlin and Zhang, Tianwei and Zhang, Weiming and Yu, Nenghai},
  journal={arXiv preprint arXiv:2405.11135},
  year={2024}
}
```