This work is based on this paper. Double Deep Q-learning (DDQN) was introduced to reduce the overestimation of action values observed in the regular DQN algorithm, and it leads to much better performance on several tasks.

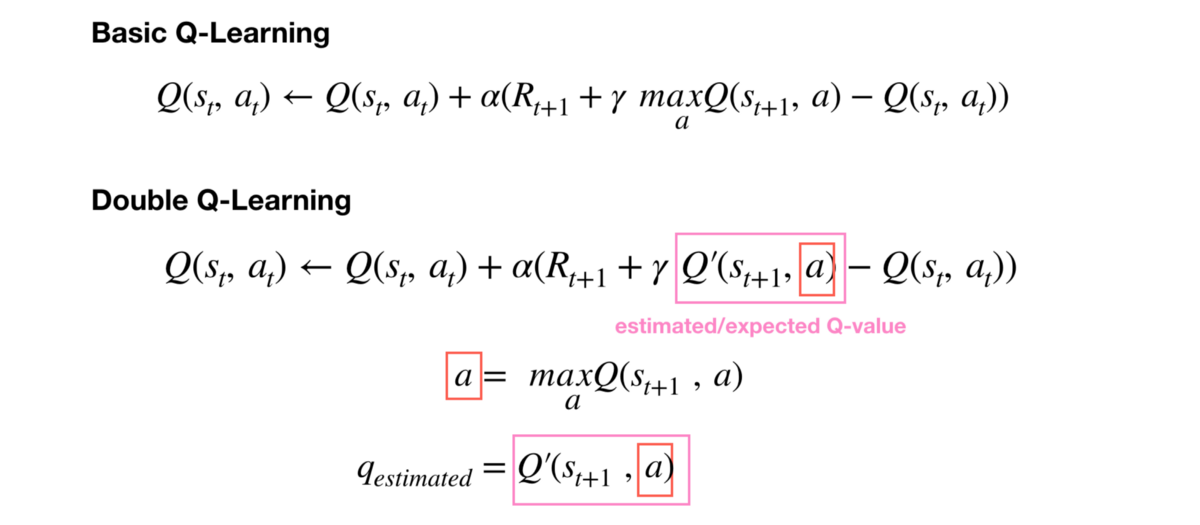

The max operator in standard DQN uses the same values both to select and to evaluate an action. This makes it more likely to select overestimated values, resulting in overoptimistic value estimates. To prevent this, we can decouple the selection from the evaluation. This is the idea behind Double Q-learning.
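The following minimal NumPy sketch contrasts the two bootstrap targets to make the decoupling concrete. It is not taken from `atari.py`. The function names, the array layout (Q-values of shape `(batch, n_actions)` for the next states, from an "online" and a "target" network), and the `done` masking are illustrative assumptions. Standard DQN takes the max of the target network's values. Double DQN lets the online network pick the greedy action and the target network score it.

```python
import numpy as np


def dqn_target(reward, next_q_target, gamma, done):
    # Standard DQN: the same (target-network) values both select and
    # evaluate the next action through the max operator.
    return reward + gamma * (1.0 - done) * next_q_target.max(axis=1)


def double_dqn_target(reward, next_q_online, next_q_target, gamma, done):
    # Double DQN, step 1: the online network *selects* the greedy action.
    best_actions = next_q_online.argmax(axis=1)
    # Step 2: the target network *evaluates* that selected action.
    evaluated = next_q_target[np.arange(len(best_actions)), best_actions]
    # Terminal transitions (done == 1) do not bootstrap.
    return reward + gamma * (1.0 - done) * evaluated


# Hypothetical batch of two transitions with three actions each.
reward = np.array([1.0, 0.0])
done = np.array([0.0, 1.0])
next_q_online = np.array([[1.0, 2.0, 0.5], [0.3, 0.1, 0.2]])
next_q_target = np.array([[1.5, 1.0, 3.0], [0.0, 0.0, 0.0]])
gamma = 0.99

print(dqn_target(reward, next_q_target, gamma, done))
print(double_dqn_target(reward, next_q_online, next_q_target, gamma, done))
```

In the first transition, plain DQN bootstraps from the target network's largest value (3.0). Double DQN instead uses the target network's estimate (1.0) of the action the online network prefers. When one network's maximum is inflated by estimation noise, this gives a less optimistic target.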

To train the network on the Pong Gym environment, run the following command:

```
python atari.py
```

All results will be stored in `checkpoint_dir`.