NLP Python code that calculates stance and classifies Arabic tweets about COVID-19 vaccination.

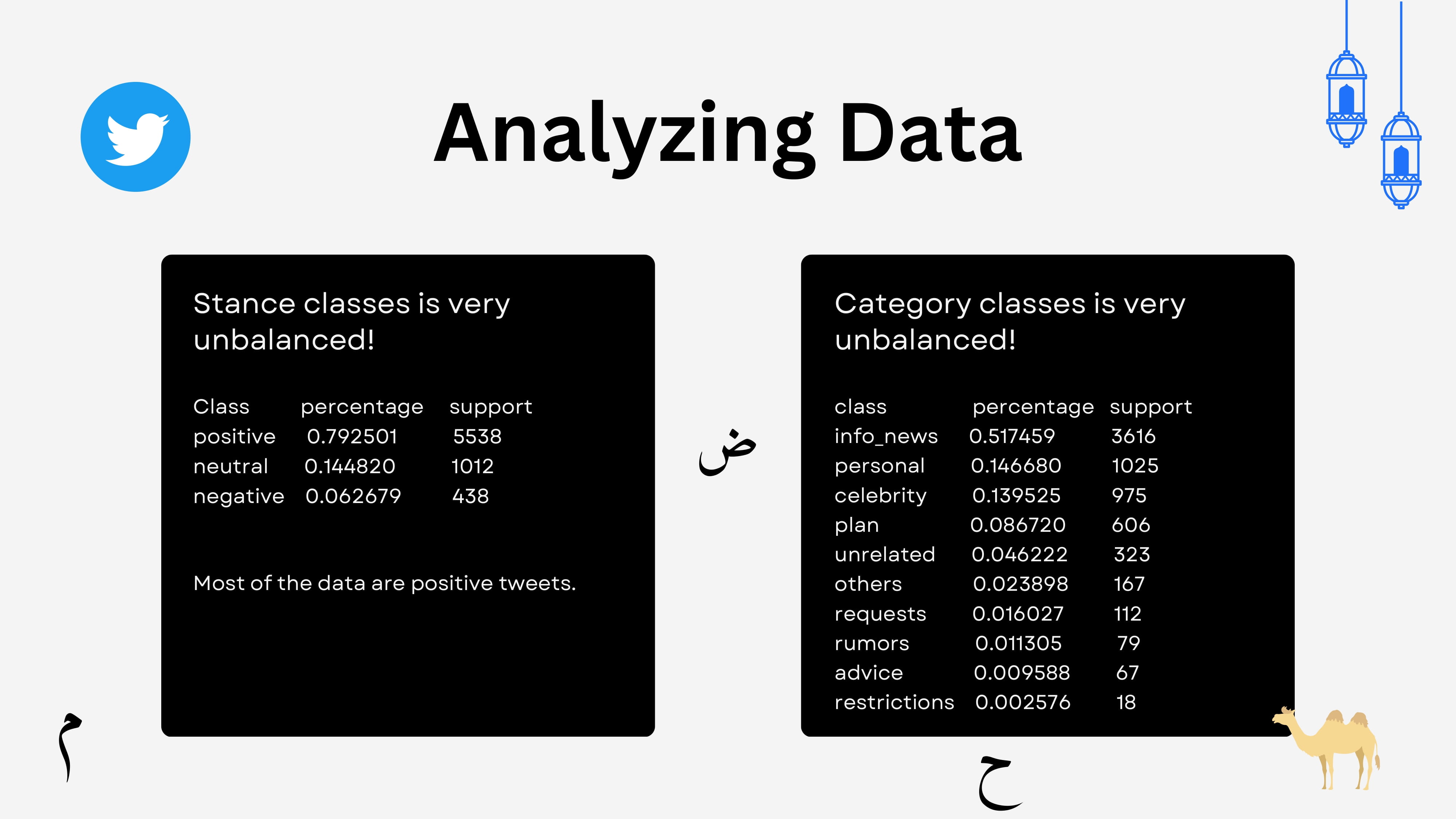

The problem was unavoidable because, unlike 'accuracy', the 'macro F1' score collapses when we ignore some very low-probability classes. We implemented two approaches:

- Oversampling: more samples for minority classes, using `imblearn`.

- Penalizing mistakes: higher penalty for minority classes, using `class_weight='balanced'` in `scikit-learn` classifiers.
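To make the two approaches concrete, here is a minimal sketch in plain Python; it is not the project's actual code. The helper names `balanced_class_weights` and `random_oversample` and the toy labels are hypothetical. The weight formula `n_samples / (n_classes * class_count)` is the one scikit-learn documents for `class_weight='balanced'`, while the oversampler only imitates the idea of random oversampling (duplicating minority samples until every class matches the largest one).

```python
import random
from collections import Counter


def balanced_class_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {cls: n_samples / (n_classes * cnt) for cls, cnt in counts.items()}


def random_oversample(samples, labels, seed=0):
    """Duplicate random minority samples until every class matches the largest one."""
    rng = random.Random(seed)
    by_class = {}
    for sample, label in zip(samples, labels):
        by_class.setdefault(label, []).append(sample)
    target = max(len(items) for items in by_class.values())
    out_samples, out_labels = [], []
    for label, items in by_class.items():
        # Draw the missing samples (with replacement) from the class itself.
        extra = [rng.choice(items) for _ in range(target - len(items))]
        for sample in items + extra:
            out_samples.append(sample)
            out_labels.append(label)
    return out_samples, out_labels


# Toy labels (made up for illustration): 8 majority and 2 minority samples.
labels = ["neutral"] * 8 + ["negative"] * 2
tweets = [f"tweet_{i}" for i in range(len(labels))]

weights = balanced_class_weights(labels)  # {'neutral': 0.625, 'negative': 2.5}
new_tweets, new_labels = random_oversample(tweets, labels)
```

With these weights, a mistake on the minority class costs four times as much as one on the majority class; after oversampling, both classes have 8 samples.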

We used regex, camel-tools, farasapy, Arabic-Stopwords and nltk for preprocessing:

- Removing diacritics (التشكيل) and punctuation.

- Replacing links, numbers and mentions with placeholder tokens.

- Converting emojis to equivalent text. (😂 -> face_tearing_with_joy)

- Normalizing letters. (أ إ آ -> ا)

- Lemmatization using multiple tools.

- Converting English text to lowercase.

- Repeating hashtag words `n` times.

- Removing stopwords (combined `nltk` and `Arabic-Stopwords`), e.g. 'وأيها', 'عندنا', 'معي'.

- Removing duplicate rows.

- Tokenization using the `camel-tools` simple word tokenizer.
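A few of the regex-based steps above can be sketched as follows. This is an illustrative subset under stated assumptions, not the project's pipeline: the function name `preprocess`, the placeholder tokens `LINK`, `MENTION` and `NUM`, and the default repeat count are all hypothetical, and lemmatization, stopword removal, emoji conversion and punctuation removal are left out.

```python
import re

# Arabic diacritics (tashkeel, U+064B..U+0652) plus the superscript alef (U+0670).
DIACRITICS = re.compile("[\u064B-\u0652\u0670]")
# Alef with madda, hamza above, or hamza below; all map to bare alef (U+0627).
ALEF_VARIANTS = re.compile("[\u0622\u0623\u0625]")
URL = re.compile(r"https?://\S+")
MENTION = re.compile(r"@\w+")
NUMBER = re.compile(r"\b\d+\b")
HASHTAG = re.compile(r"#(\w+)")


def preprocess(text, hashtag_repeat=2):
    """Apply a subset of the cleaning steps; placeholder names are assumptions."""
    text = text.lower()  # only affects Latin letters
    text = URL.sub(" LINK ", text)
    text = MENTION.sub(" MENTION ", text)

    def repeat_hashtag(match):
        # Split '#two_words' into words and repeat them to boost their weight.
        words = match.group(1).replace("_", " ")
        return " " + " ".join([words] * hashtag_repeat) + " "

    text = HASHTAG.sub(repeat_hashtag, text)
    text = NUMBER.sub(" NUM ", text)
    text = DIACRITICS.sub("", text)
    text = ALEF_VARIANTS.sub("\u0627", text)
    return " ".join(text.split())  # collapse repeated whitespace


example = preprocess("@user1 https://t.co/x 123 #vaccine_now OK")
# example == "MENTION LINK NUM vaccine now vaccine now ok"
```

Lowercasing runs first so that the uppercase placeholders survive, and links and mentions are replaced before numbers so that digits inside them are not turned into separate tokens.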

- Translate English text.

- Do everything in Arabic. (emoji meanings, tokens, English text translation, …)

- Remove numbers or convert to word representation.

- Better stop words datasets, can also remove too rare / too frequent words.

- Named entity recognition. (NER)

- Bag of words (BOW)

- TF-IDF

  - We used both word `n-grams` and character `n-grams`.

- Continuous BOW Word2Vec

- Skip-gram Word2Vec

- AraBERT embeddings as a feature for SVM

  - We took the `pooler output` from BERT, which resembles an embedding, and fed it to the SVM as a feature.

- SVM

- Naive Bayes

- KNN

- Decision Trees

- Random Forest with `n_estimators = 1000`

- Logistic Regression with `n_iterations = 300`

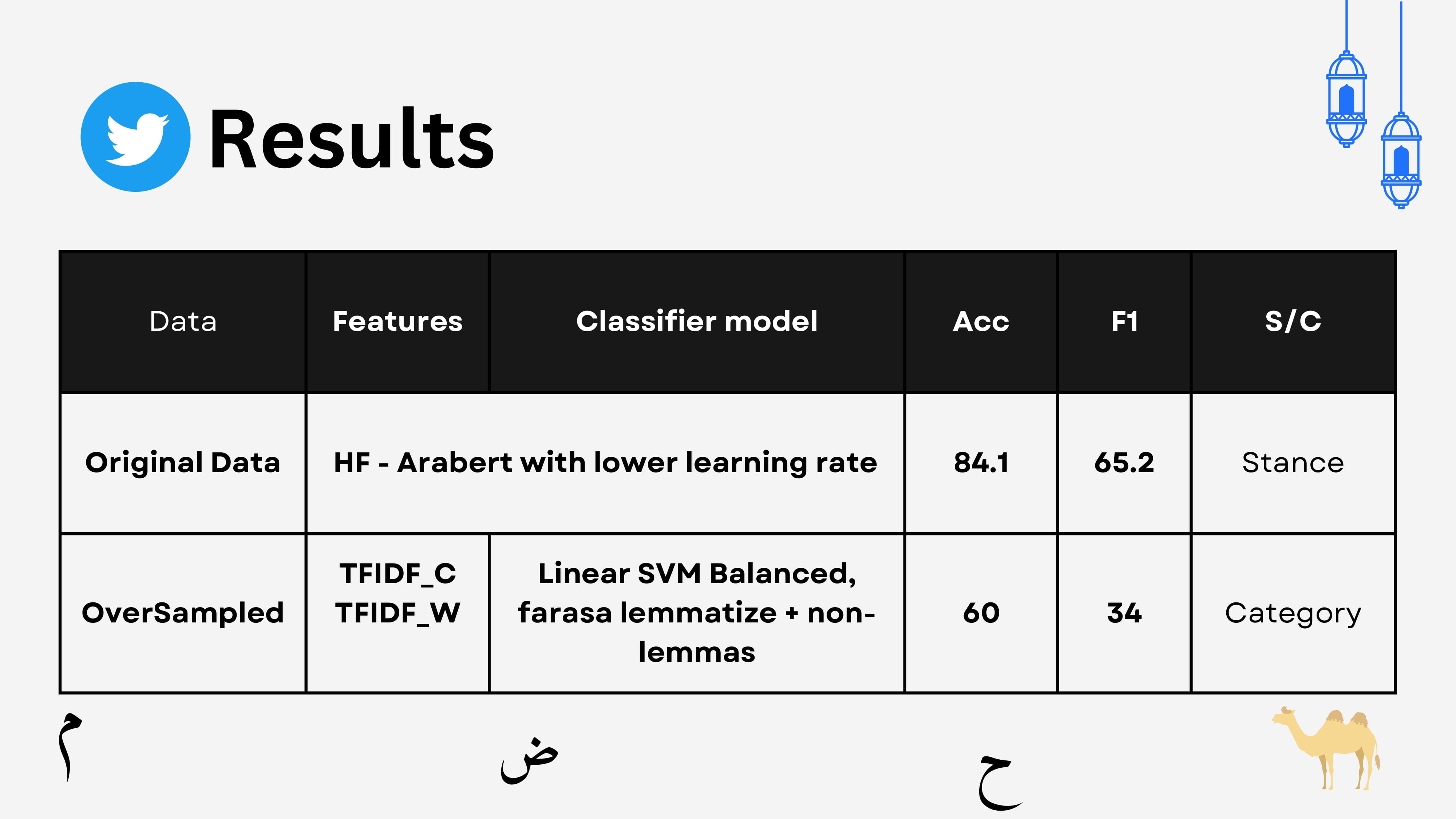

The best result was:

| Preprocessing | Features | Classifier Model | Acc (%) | F1 (%) |
|---|---|---|---|---|
| Farasa lemmatize + dediacritized camel lemmatize + original text | TFIDF char + word | Linear SVM | 80 | 56 |
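The feature and classifier combination in this row can be approximated with scikit-learn as sketched below. This is an assumption-laden illustration rather than the project's code: the n-gram ranges, the `char_wb` analyzer, the use of `class_weight="balanced"` here, and the toy English sentences standing in for preprocessed tweets are all our own choices.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.pipeline import FeatureUnion, Pipeline
from sklearn.svm import LinearSVC

# Concatenate word-level and character-level TF-IDF features.
features = FeatureUnion([
    ("word", TfidfVectorizer(analyzer="word", ngram_range=(1, 2))),
    ("char", TfidfVectorizer(analyzer="char_wb", ngram_range=(2, 5))),
])

model = Pipeline([
    ("features", features),
    ("svm", LinearSVC(class_weight="balanced")),
])

# Toy, made-up data standing in for the preprocessed tweets.
texts = [
    "the vaccine is safe and effective",
    "everyone should take the vaccine",
    "the vaccine is dangerous",
    "people refuse the vaccine",
]
labels = ["positive", "positive", "negative", "negative"]

model.fit(texts, labels)
predictions = model.predict(["the vaccine is safe"])
```

Character n-grams are a common way to stay robust to spelling variation and attached clitics, which is one plausible reason the combined representation works well on tweets.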

Other results:

| Features | Classifier Model | Acc (%) | F1 (%) |
|---|---|---|---|
| BOW | Naive Bayes | 56 | 42 |
| BOW | Logistic Regression | 77 | 55 |
| BOW | Random Forest | 80 | 46 |
| BOW | Linear SVM | 76 | 51 |
| CBOW | Ridge Classifier | 73 | 38 |
| S-Gram | Ridge Classifier | 80 | 40 |
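The gap between accuracy and F1 in these tables is typical of models that favor the majority class. The small illustration below uses made-up labels and two hypothetical helpers, `accuracy` and `macro_f1`, and assumes the reported F1 is macro-averaged as mentioned earlier; it shows how a classifier that always predicts the majority class keeps a high accuracy while its macro F1 drops.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 scores (every class counts equally)."""
    scores = []
    for cls in sorted(set(y_true) | set(y_pred)):
        tp = sum(t == cls and p == cls for t, p in zip(y_true, y_pred))
        fp = sum(t != cls and p == cls for t, p in zip(y_true, y_pred))
        fn = sum(t == cls and p != cls for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        # F1 = 2TP / (2TP + FP + FN); defined as 0 when the class is never hit.
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


# Toy labels: 8 majority samples and one sample for each minority class.
y_true = ["neutral"] * 8 + ["positive", "negative"]
y_pred = ["neutral"] * 10  # a model that ignores the minority classes

acc = accuracy(y_true, y_pred)  # 0.8
f1 = macro_f1(y_true, y_pred)   # about 0.30
```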

In the sequence-model family, we built a 3-layer LSTM followed by a linear neural network layer.

LSTM’s Training Settings:

| Epochs | Batch Size | Learning rate | Embedding Dimension | LSTM Hidden layers Dimension |
|---|---|---|---|---|
| 50 | 256 | 0.001 | 300 | 50 |
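A model with these settings could look roughly like the PyTorch sketch below. The source does not state which framework was used, so PyTorch, the class name `LSTMClassifier`, the Adam optimizer, the vocabulary size, the number of classes and the sequence length are all assumptions made for illustration; only the dimensions, layer count, batch size and learning rate come from the table.

```python
import torch
from torch import nn


class LSTMClassifier(nn.Module):
    """Embedding layer -> 3-layer LSTM -> one linear layer (hypothetical sketch)."""

    def __init__(self, vocab_size, num_classes, embedding_dim=300,
                 hidden_dim=50, num_layers=3):
        super().__init__()
        self.embedding = nn.Embedding(vocab_size, embedding_dim, padding_idx=0)
        self.lstm = nn.LSTM(embedding_dim, hidden_dim,
                            num_layers=num_layers, batch_first=True)
        self.fc = nn.Linear(hidden_dim, num_classes)

    def forward(self, token_ids):
        embedded = self.embedding(token_ids)   # (batch, seq_len, embedding_dim)
        _, (hidden, _) = self.lstm(embedded)   # hidden: (num_layers, batch, hidden_dim)
        return self.fc(hidden[-1])             # logits from the top layer's final state


# vocab_size and num_classes are placeholders, not values from the project.
model = LSTMClassifier(vocab_size=1000, num_classes=3)
optimizer = torch.optim.Adam(model.parameters(), lr=0.001)  # optimizer is assumed

batch = torch.randint(1, 1000, (256, 20))  # one batch of 256 random token sequences
logits = model(batch)                       # shape: (256, 3)
```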

Best result on the categorization problem:

| Data | Features | Classifier Model | Acc (%) | F1 (%) |
|---|---|---|---|---|
| Oversampled data | Embedding Layer | 3-layer LSTM + 1 NN layer | 56.6 | 25.9 |

In the transformers family, we fine-tuned an Arabic BERT model on our dataset.

- The Arabic BERT model used was aubmindlab/bert-base-arabertv02-twitter from Hugging Face. We chose this model because it was trained on ~60 million Arabic tweets.

- As per the documentation, we used the same preprocessing and tokenizer that the model authors used when building their model.

Fine-tuning:

- We use AraBERT as a feature extractor: we first freeze BERT's parameters, then pass the data through the AraBERT model to produce embeddings as output. The sentence embedding is the last-layer hidden state of the first token of the sequence, the `CLS` token.

- The sentence embeddings then enter a classifier head that we built. The classifier head consists of 2 neural network layers, which are trained to adapt the model to our data.
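The frozen-encoder setup described above can be sketched in PyTorch as follows. This is a hedged illustration, not the project's implementation: the class names, the head's hidden width of 128, the ReLU between the two layers and the number of classes are assumptions, and `ToyEncoder` is a stand-in that only mimics the `last_hidden_state` output of a BERT model so the sketch runs offline.

```python
from types import SimpleNamespace

import torch
from torch import nn


class FrozenEncoderClassifier(nn.Module):
    """Frozen encoder + trainable 2-layer head on the first-token (CLS) state."""

    def __init__(self, encoder, hidden_size, num_classes, head_dim=128):
        super().__init__()
        self.encoder = encoder
        for param in self.encoder.parameters():
            param.requires_grad = False  # freeze the encoder's weights
        self.head = nn.Sequential(
            nn.Linear(hidden_size, head_dim),
            nn.ReLU(),
            nn.Linear(head_dim, num_classes),
        )

    def forward(self, input_ids, attention_mask):
        with torch.no_grad():  # the encoder is used as a feature extractor only
            output = self.encoder(input_ids=input_ids, attention_mask=attention_mask)
        cls_embedding = output.last_hidden_state[:, 0]  # first token of each sequence
        return self.head(cls_embedding)


class ToyEncoder(nn.Module):
    """Offline stand-in that mimics a BERT encoder's output attribute."""

    def __init__(self, vocab_size=100, hidden_size=32):
        super().__init__()
        self.embed = nn.Embedding(vocab_size, hidden_size)

    def forward(self, input_ids, attention_mask=None):
        return SimpleNamespace(last_hidden_state=self.embed(input_ids))


model = FrozenEncoderClassifier(ToyEncoder(), hidden_size=32, num_classes=3)
input_ids = torch.randint(0, 100, (4, 10))
attention_mask = torch.ones(4, 10, dtype=torch.long)
logits = model(input_ids, attention_mask)  # shape: (4, 3)

# Only the head's parameters remain trainable.
trainable = [name for name, p in model.named_parameters() if p.requires_grad]
```

With the `transformers` package and network access, the stand-in could be swapped for `AutoModel.from_pretrained("aubmindlab/bert-base-arabertv02-twitter")`, passing `encoder.config.hidden_size` as `hidden_size`.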

AraBERT training settings:

| Epochs | Batch Size | Learning rate |
|---|---|---|
| 50 | 16 | 0.001 |

AraBERT embeddings with SVM classifier:

- Extract sentence embeddings using AraBERT, then train a linear-kernel SVM on these embeddings.

- nltk
- Arabic-Stopwords
- camel-tools==1.2.0
- farasapy
- arabert
- pandas
- scikit-learn
- gensim
- Transformers
- imblearn

| غياث عجم | Noran Hany | Hala Hamdy | Reem Attallah |
|---|---|---|---|