Using Fleet, we're going to deploy an entire stack: clusters and the apps/services that run on them.

- Two harvester nodes (though this can be changed to only need one)

- kubectl with local cluster kubeconfig as current context

- A running Rancher Server

- Patched default ClusterGroup in the fleet-local namespace (see below for how)

- Optional: kapp, ytt, kubecm

Fleet currently has an unfixed bug that prevents us from deploying applications to our local cluster. The workaround is easy and permanent; just run:

kubectl patch ClusterGroup -n fleet-local default --type=json -p='[{"op": "remove", "path": "/spec/selector/matchLabels/name"}]'

Easy mode for deploying is using Carvel's ytt and kapp applications. ytt is a yaml templating and overlay tool as well as a yaml aggregator; we can use it to recursively grab all yaml files in a directory and feed them to kubectl or kapp. kapp works much like kubectl apply, but it creates the objects in order and lets you group and track the deployment under an app name. This grouping gives a higher-level view for tracking changes to the objects we manage manually.

Assuming your kubeconfig context is set, you can kick this whole thing off with a simple command:

ytt -f workloads | kapp deploy -a fleet-workloads -f - -y

Target cluster 'https://rancher.homelab.platformfeverdream.io/k8s/clusters/local' (nodes: rancher-server-node-0c292fd2-jkltk)

Changes

Namespace Name Kind Age Op Op st. Wait to Rs Ri

fleet-default app-platform GitRepo - create - reconcile - -

^ essentials GitRepo - create - reconcile - -

fleet-local dev-cluster-loader GitRepo - create - reconcile - -

Op: 3 create, 0 delete, 0 update, 0 noop, 0 exists

Wait to: 3 reconcile, 0 delete, 0 noop

4:27:24PM: ---- applying 3 changes [0/3 done] ----

4:27:24PM: create gitrepo/app-platform (fleet.cattle.io/v1alpha1) namespace: fleet-default

4:27:24PM: create gitrepo/dev-cluster-loader (fleet.cattle.io/v1alpha1) namespace: fleet-local

4:27:24PM: create gitrepo/essentials (fleet.cattle.io/v1alpha1) namespace: fleet-default

4:27:24PM: ---- waiting on 3 changes [0/3 done] ----

4:27:24PM: ok: reconcile gitrepo/app-platform (fleet.cattle.io/v1alpha1) namespace: fleet-default

4:27:24PM: ok: reconcile gitrepo/dev-cluster-loader (fleet.cattle.io/v1alpha1) namespace: fleet-local

4:27:24PM: ok: reconcile gitrepo/essentials (fleet.cattle.io/v1alpha1) namespace: fleet-default

4:27:24PM: ---- applying complete [3/3 done] ----

4:27:24PM: ---- waiting complete [3/3 done] ----

Succeeded

The alternative, using only kubectl, requires a kubectl apply of all yaml files within workloads.
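A minimal sketch of the kubectl-only route, assuming it is run from the repo root where the workloads directory lives. apply_workloads is a hypothetical wrapper, not something shipped in this repo; it relies on the --recursive flag of kubectl apply, which descends into subdirectories. As far as I know, kubectl only picks up .yaml, .yml, and .json files when given a directory, so monitoring.yaml.disabled should be skipped, but that is worth verifying on your kubectl version.

```shell
# Hypothetical helper: apply every manifest under a directory, including
# subdirectories. Defaults to ./workloads when no argument is given.
apply_workloads() {
  kubectl apply --recursive -f "${1:-workloads}"
}

# Usage (requires a kubeconfig context pointing at the Rancher local cluster):
#   apply_workloads
#   apply_workloads workloads/clusters   # only the cluster GitRepos
```

Unlike kapp, this does not group the created objects under an app name, so later changes have to be tracked per object.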

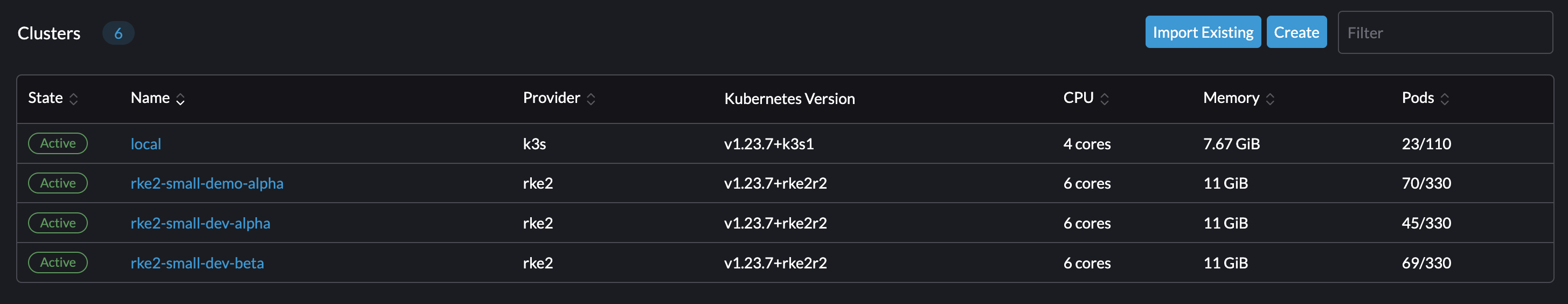

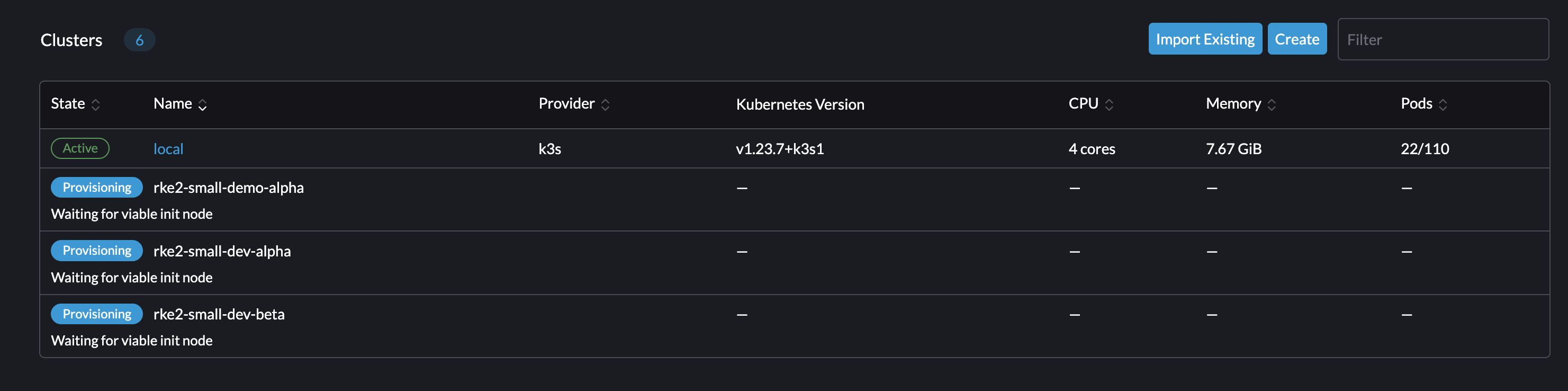

Once this kicks off, we can view the clusters being built within our Rancher Server UI. Congrats, you've just kicked off a full-stack deployment using Fleet! This includes LCM (Lifecycle Management) of the RKE2 clusters themselves. Formerly, you would have had to enter the UI and deploy each of these items manually by clicking through menus. However, through the magic of Fleet and Rancher, your cluster configurations can be boiled down to a simple yaml file like this one!

While that is running, you can view the section below to understand what a Fleet Stack is and why this is cool!

The term stack is well known and fairly self-descriptive: it's a set of software designed and configured to work together to deliver a specific solution. Typically there are multiple layers of dependency involved in a stack, and this particular case is no different.

At the base layer we have our infrastructure, which is Harvester as HCI. Rancher supports provisioning RKE2/K3S clusters directly into Harvester, much as it does for AWS, Azure, vSphere, etc. Here we're using Harvester as part of this demo.

At the platform layer we're going to be using RKE2 cluster instances. The default configuration here is for three: one running on a low-core-count server called harvester1 and two running on a high-core-count server called harvester2.

TODO: Drop in draw.io diagram

At the application layer we're installing services like cert-manager and longhorn as well as an application platform tool called Epinio. These are configured to install on specific clusters using label selectors to demonstrate various methods of managing automation to clusters at scale using Fleet.
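The label-selector targeting described above is expressed in a GitRepo's targets list. The following is a minimal sketch rather than a file from this repo: the object name essentials-example, the repo URL, and the branch are placeholders, and it assumes the environment: dev label set on a cluster is visible to Fleet on the matching Cluster object. It writes the manifest to a local file so it can be inspected before being applied.

```shell
# Write an illustrative GitRepo manifest to the current directory.
# NOTE: the name, repo URL, and branch below are hypothetical placeholders.
cat > essentials-example.yaml <<'EOF'
apiVersion: fleet.cattle.io/v1alpha1
kind: GitRepo
metadata:
  name: essentials-example
  namespace: fleet-default
spec:
  repo: https://example.com/your-org/fleet-stack.git
  branch: main
  paths:
    - gitops/apps/essentials
  targets:
    # Only clusters carrying this label receive the bundle (assumed label).
    - name: dev
      clusterSelector:
        matchLabels:
          environment: dev
EOF
```

Applying it would be the usual kubectl apply -f essentials-example.yaml, or it could be fed through kapp like the other workload files.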

What's really happening before your eyes is the provisioning of a set of RKE2 clusters onto multiple Harvester instances, as well as the installation of the applications that run on top of them! This repo is a model of how a single git repository in production can manage many clusters, and the applications that run on them, at scale. Its structure is tailored to supporting this way of working. Because of its naming conventions, directory structure, and presentation, it is easy to onboard new platform engineers/operators.

The term workloads here stands for the manual tasks/objects that we create inside our Rancher server. This is where the first kick event occurs, and if you check the HowTo section above, you can see we did just that: we fed all the yaml files here into kapp/kubectl so they were created in our local Rancher server.

Clusters and applications are separated here for convenience and for certain playbooks that might manage clusters and apps as separate processes. Keep in mind that these files don't have to be in this directory structure; the workload files could exist anywhere. They are not consumed by a gitops process; they are only applied manually via kapp or kubectl. These files contain only GitRepo objects, as defined in Fleet's docs.

workloads
├── apps
│   ├── app_platform.yaml
│   ├── essentials.yaml
│   └── monitoring.yaml.disabled
└── clusters
    └── dev_clusters.yaml

The gitops directory holds all the code consumed by gitops. This directory structure matters a great deal, because it distinguishes both what is being provisioned (cert-manager, for example) and where it is being installed. Note that the directory structure itself defines first the level of the stack it applies to, and then either which infra or the name of the base app/bundle to be installed. So at a glance, the full path tells the operator what the configuration belongs to without needing any manual inspection. This also allows for recursive inclusion of whole directories into a single GitRepo configuration. For instance, with the clusters we can include the entire harvester1/demo directory and track every single cluster that is part of that environment (the demo environment) as part of a single bundle. If we want to add a new cluster to this environment, it's as simple as creating a new folder, copying the configs in, and doing a git commit. ¯\_(ツ)_/¯
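The add-a-cluster step can be sketched as a small shell helper. This is an illustration, not a script from this repo: new_cluster is a hypothetical function, and its blanket rename assumes the old cluster's short name (alpha, beta, and so on) appears in the copied files only inside names that need to change, so review the diff before committing.

```shell
# Hypothetical helper: clone an existing cluster directory under a new name.
# Usage: new_cluster <existing-dir> <new-dir> <old-name> <new-name>
new_cluster() {
  src=$1 dst=$2 old=$3 new=$4
  if [ ! -d "$src" ]; then
    echo "missing source directory: $src" >&2
    return 1
  fi
  if [ -e "$dst" ]; then
    echo "destination already exists: $dst" >&2
    return 1
  fi
  cp -r "$src" "$dst"
  # Cluster, release, and nodepool names must be unique, so swap the old
  # short name for the new one in both files. The .bak suffix keeps the
  # sed call portable between GNU and BSD sed; the backups are removed after.
  sed -i.bak "s/${old}/${new}/g" "$dst/fleet.yaml" "$dst/values.yaml"
  rm -f "$dst/fleet.yaml.bak" "$dst/values.yaml.bak"
}

# Example, run from the repo root (gamma is a made-up cluster name):
#   new_cluster gitops/clusters/harvester2/dev/beta \
#               gitops/clusters/harvester2/dev/gamma beta gamma
#   git add gitops/clusters/harvester2/dev/gamma
#   git commit -m "Add dev gamma cluster"
```

Values that had to be looked up, such as the cloud credential secret and image name, are copied unchanged and may still need editing for a different Harvester instance.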

See the tree of the gitops directory. Note how cert-manager AND longhorn are colocated under the same parent, allowing them to be part of an essentials bundle that is installed on all clusters!

gitops
├── apps
│   ├── app_platform
│   │   └── epinio
│   │       ├── fleet.yaml
│   │       └── values.yaml
│   ├── essentials
│   │   ├── cert-manager
│   │   │   ├── cert-manager
│   │   │   │   └── fleet.yaml
│   │   │   └── crds
│   │   │       ├── crds.yaml
│   │   │       └── fleet.yaml
│   │   └── longhorn
│   │       ├── fleet.yaml
│   │       └── values.yaml
│   └── monitoring
│       ├── crd
│       │   └── fleet.yaml
│       └── main
│           └── fleet.yaml
└── clusters
    ├── harvester1
    │   └── demo
    │       └── alpha
    │           ├── fleet.yaml
    │           └── values.yaml
    └── harvester2
        └── dev
            ├── alpha
            │   ├── fleet.yaml
            │   └── values.yaml
            └── beta
                ├── fleet.yaml
                └── values.yaml

Admit it, this is the real reason you're here right? I put this section at the bottom because this is honestly the coolest part. A lot of folks still don't understand the magic of the K8S ClusterAPI (or CAPI for short). What it allows us to do is provision resources in a cloud in a cloud-agnostic way. EC2 instances are provisioned in the same way that vSphere and Harvester VMs are.

The difference here is that it is declarative and part of K8S instead of being separated into something clunky like Terraform or (gasp) Ansible playbooks. This allows your K8S management layer to control what resources it needs to provision in order to do LCM operations on the clusters it manages. All of it is centralized into K8S objects, so it can all be managed with a single toolset and within a single workflow! It may not sound all that cool to some, but the time savings alone are pretty spectacular. Ask yourself how things might be if K8S clusters were suddenly easy to create and delete on a whim. That day is now.

Rancher provides a great UI for managing these clusters across many clouds and environments from a single location. What you're seeing here is extending that feature into a GitOps-driven process. Instead of editing configurations within a UI, you're editing them in a git-repo because your clusters literally are now infrastructure-as-code.

Using the official Rancher cluster template helm chart, we can reference this chart within our fleet.yaml files and feed it a single values file defining our cluster.

Here's an example of the demo-alpha cluster referencing its helm chart and its values file. See the Fleet.yaml spec here

defaultNamespace: default
helm:
  chart: https://github.com/rancher/cluster-template-examples/raw/main/cluster-template-0.0.1.tgz
  releaseName: cluster-template-demo-alpha
  valuesFiles:
    - values.yaml
---
cloudCredentialSecretName: cattle-global-data:cc-ltgct #! this value had to be looked up
cloudprovider: harvester
cluster:
  annotations: {}
  labels:
    environment: dev
    cluster_name: alpha
  #! must be a unique name
  name: rke2-small-demo-alpha
kubernetesVersion: v1.23.7+rke2r2
monitoring:
  enabled: false
rke:
  localClusterAuthEndpoint:
    enabled: false
nodepools:
  #! name field must be unique across all clusters
  - name: demo-control-plane-alpha
    displayName: control-plane
    etcd: true
    controlplane: true
    worker: false
    # specify node labels
    labels: {}
    # specify node taints
    taints: {}
    # specify nodepool size
    quantity: 1
    diskSize: 40
    diskBus: virtio
    cpuCount: 2
    memorySize: 4
    networkName: default/workloads
    networkType: dhcp
    imageName: default/image-d4cpg #! this value had to be looked up
    vmNamespace: default
    sshUser: ubuntu
  #! name field must be unique across all clusters
  - name: demo-worker-alpha
    displayName: worker
    worker: true
    # specify node labels
    labels: {}
    # specify node taints
    taints: {}
    # specify nodepool size
    quantity: 3
    diskSize: 40
    diskBus: virtio
    cpuCount: 2
    memorySize: 4
    networkName: default/workloads
    networkType: dhcp
    imageName: default/image-d4cpg #! this value had to be looked up
    vmNamespace: default
    sshUser: ubuntu