“A generative adversarial model is a powerful machine learning technique where two neural networks compete against each other in a zero-sum game, leading to the creation of new, synthetic data that is indistinguishable from real data.”

The objective of this project is to use the following Kaggle dataset to generate anime faces with a Deep Convolutional Generative Adversarial Network (DCGAN).

A Deep Convolutional Generative Adversarial Network (DCGAN) is a generative model with two main components: a generator network and a discriminator network, both built mainly from convolutional layers (transposed convolutions in the generator, strided convolutions in the discriminator). The generator takes a random noise vector as input and outputs a synthetic image. The discriminator takes both real and synthetic images and outputs the probability that its input is real. The two networks are trained against each other in a zero-sum game: the generator tries to produce images that the discriminator cannot tell apart from real ones, while the discriminator tries to correctly classify real and synthetic images.
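To make the architecture more concrete, the sketch below traces how the spatial resolution changes layer by layer in each network. It assumes 64×64 images and the layer layout used in the PyTorch DCGAN tutorial (4×4 kernels; stride 2 and padding 1 for the upsampling and downsampling layers). The layer lists and function names are hypothetical and are not taken from this project's code. The size formulas are the standard ones for convolution and transposed convolution with dilation 1 and no output padding.

```python
# Hypothetical layer layout (kernel, stride, padding), assumed from the common
# 64x64 DCGAN configuration; not read from this project's checkpoint.
GENERATOR_LAYERS = [(4, 1, 0), (4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 2, 1)]
DISCRIMINATOR_LAYERS = [(4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 1, 0)]


def conv_transpose_out(size: int, kernel: int, stride: int, padding: int) -> int:
    """Output height/width of a transposed convolution (dilation 1, no output padding)."""
    return (size - 1) * stride - 2 * padding + kernel


def conv_out(size: int, kernel: int, stride: int, padding: int) -> int:
    """Output height/width of a regular convolution (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


def generator_sizes(start: int = 1) -> list[int]:
    """Spatial size after each generator layer, starting from a 1x1 noise 'image'."""
    sizes = [start]
    for kernel, stride, padding in GENERATOR_LAYERS:
        sizes.append(conv_transpose_out(sizes[-1], kernel, stride, padding))
    return sizes


def discriminator_sizes(start: int = 64) -> list[int]:
    """Spatial size after each discriminator layer, starting from a 64x64 image."""
    sizes = [start]
    for kernel, stride, padding in DISCRIMINATOR_LAYERS:
        sizes.append(conv_out(sizes[-1], kernel, stride, padding))
    return sizes


print(generator_sizes())      # [1, 4, 8, 16, 32, 64]
print(discriminator_sizes())  # [64, 32, 16, 8, 4, 1]
```

Under these assumptions the generator upsamples the noise vector to a 64×64 image, and the discriminator mirrors that path back down to a single score. A different image size would require different layer lists.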

The generator network can be represented mathematically as a function G(z) where z is a random noise vector and G outputs a synthetic image. The discriminator network can be represented as a function D(x) where x is an input image and D outputs a probability that x is a real image. The objective of the DCGAN is to find the Nash equilibrium of the following minimax game:

where
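In practice, each expectation in $V(D, G)$ is estimated with a sample mean over a batch. The plain-Python sketch below computes that estimate from lists of discriminator outputs. The function names are illustrative. The non-saturating generator loss is a common practical substitute suggested in the original GAN paper and is not necessarily what this project uses.

```python
import math


def value_estimate(d_real: list[float], d_fake: list[float]) -> float:
    """Monte Carlo estimate of V(D, G).

    d_real: outputs D(x) for a batch of real images x ~ p_data
    d_fake: outputs D(G(z)) for a batch of noise vectors z ~ p_z
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def discriminator_loss(d_real: list[float], d_fake: list[float]) -> float:
    # D maximizes V, so it minimizes -V. This equals the binary cross-entropy on
    # the real batch (label 1) plus the binary cross-entropy on the fake batch (label 0).
    return -value_estimate(d_real, d_fake)


def generator_loss_nonsaturating(d_fake: list[float]) -> float:
    # Instead of minimizing log(1 - D(G(z))), G is often trained to minimize
    # -log D(G(z)), which gives stronger gradients early in training.
    return -sum(math.log(p) for p in d_fake) / len(d_fake)


# At the theoretical optimum, D outputs 0.5 for every input and V = -log 4.
print(value_estimate([0.5, 0.5], [0.5, 0.5]))  # about -1.386
```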

Pre-trained models are located in the `./checkpoint` folder. They were trained on an NVIDIA GeForce MX110 GPU.

| Name | Training time | Epochs |
|---|---|---|
| afg.pt | 6 hours | 33 |
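For a rough sense of training cost, the table's figures give an average time per epoch. This sketch simplifies by assuming the six hours were spread evenly over the 33 epochs. The helper name is hypothetical.

```python
def minutes_per_epoch(hours: float, epochs: int) -> float:
    """Average wall-clock minutes per epoch, assuming evenly spread training time."""
    return hours * 60 / epochs


print(round(minutes_per_epoch(6, 33), 1))  # 10.9
```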

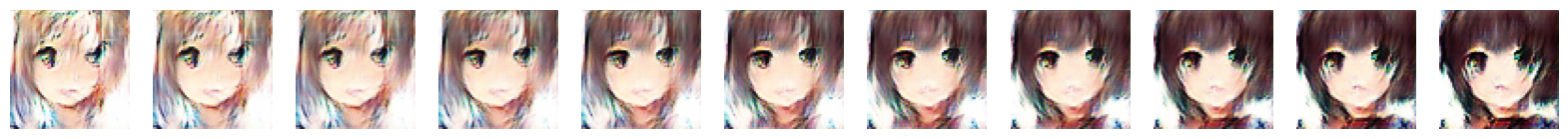

Below are some images generated by a model trained for 33 epochs:

The following is an example of interpolation between two generated images:
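Interpolation of this kind is usually done in latent space. Two noise vectors $z_1$ and $z_2$ are sampled, intermediate vectors are computed between them, and each vector is passed through the generator $G$. The sketch below computes a linear interpolation path in plain Python. The latent dimension of 100 and the function names are assumptions, since the project's actual noise size is not stated here. Spherical interpolation (slerp) is a common alternative for Gaussian latents.

```python
import random


def lerp(z1: list[float], z2: list[float], t: float) -> list[float]:
    """Linear interpolation between two latent vectors: (1 - t) * z1 + t * z2."""
    return [(1.0 - t) * a + t * b for a, b in zip(z1, z2)]


def interpolation_path(z1: list[float], z2: list[float], steps: int) -> list[list[float]]:
    """Evenly spaced latent vectors from z1 to z2, endpoints included (steps >= 2)."""
    return [lerp(z1, z2, i / (steps - 1)) for i in range(steps)]


random.seed(0)
LATENT_DIM = 100  # assumed noise dimension (hypothetical)
z_start = [random.gauss(0.0, 1.0) for _ in range(LATENT_DIM)]
z_end = [random.gauss(0.0, 1.0) for _ in range(LATENT_DIM)]
path = interpolation_path(z_start, z_end, steps=8)
print(len(path), len(path[0]))  # 8 100
```

Feeding each vector in `path` to the trained generator would produce one frame of the interpolation. Smooth transitions between frames suggest that the generator has learned a continuous latent representation rather than memorizing individual training images.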