🧭 To develop your own Executor, please use `jina hub new` and create your own Executor repo.

This repository provides a selection of Executors for Jina.

⚙️ An Executor is how Jina processes Documents. It is the building block of your Jina data pipeline, and each one serves a specific functional need: preparing data, encoding it with your model, storing, searching, and more.

The following are general guidelines. Check each Executor's README for details.

Use the prebuilt image from JinaHub in your Python code:

    from jina import Flow

    f = Flow().add(uses='jinahub+docker://ExecutorName')

Use the source code from JinaHub in your Python code:

    from jina import Flow

    f = Flow().add(uses='jinahub://ExecutorName')

Advanced usage:

- Install the `executors` package:

      pip install git+https://github.com/jina-ai/executors/

- Use `executors` in your code:

      from jina import Flow
      from jinahub.type.subtype.ExecutorName import ExecutorName

      f = Flow().add(uses=ExecutorName)

- Clone the repo and build the docker image:

      git clone https://github.com/jina-ai/executors
      cd executors/type/subtype
      docker build -t executor-image .

- Use `executor-image` in your code:

      from jina import Flow

      f = Flow().add(uses='docker://executor-image:latest')

⚠️ Please do not commit your new Executor to this repository. This repository is only for Jina engineers to better manage in-house executors in a centralized way. You may submit PRs to fix bugs/add features to the existing ones.

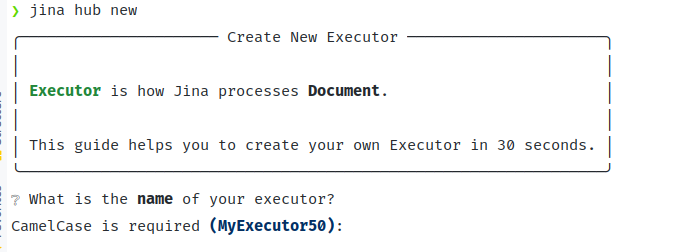

Use `jina hub new` to create a new Executor, following the easy interactive prompts.

Then follow the guide on Executors and how to push it to the Jina Hub marketplace.

For internal Jina engineers only:

- Add the new Executor to the right subfolder:
  - crafters transform data
  - encoders compute the vector representation of data
  - indexers store and retrieve data
  - segmenters split data into chunks
  - rankers
- Push your initial version to Jina Hub. Use the guide here
- Add the UUID and secret to the secrets store. Make sure `(folder name) == (manifest alias) == (name in secrets store)`.
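The naming rule above is easy to break by accident (for example with a casing difference), so a small check can help before pushing. The sketch below is only an illustration: `find_name_mismatches` is a hypothetical helper, not part of Jina, and it assumes you have already read the three names from wherever they live.

```python
def find_name_mismatches(folder_name, manifest_alias, secret_name):
    """Return human-readable mismatches; the list is empty when all names agree.

    Hypothetical helper: the folder name is treated as the reference value.
    """
    candidates = {
        "manifest alias": manifest_alias,
        "name in secrets store": secret_name,
    }
    problems = []
    for label, value in candidates.items():
        # Comparison is exact, so casing and whitespace differences are reported.
        if value != folder_name:
            problems.append(f"{label} is {value!r}, expected {folder_name!r}")
    return problems


if __name__ == "__main__":
    for problem in find_name_mismatches("ExecutorName", "executorname", "ExecutorName"):
        print(problem)
```

An empty result means the three names line up; anything else lists which name differs from the folder name.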

Some Executors might require a large model. During CI/tests, it is advisable to download it as part of a fixture and store it to disk, to be re-used by the Executor.
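One way to follow this advice is a small download-once helper that a test fixture can call. The sketch below uses only the standard library; `ensure_model`, its parameters, and the optional `fetch` hook are hypothetical names introduced for illustration, and the URL in the usage note is a placeholder.

```python
import shutil
import urllib.request
from pathlib import Path


def ensure_model(url, cache_dir, filename, fetch=None):
    """Return the local path of the model file, downloading it only if missing.

    `fetch` is an optional callable (url, destination_path) that replaces the
    default HTTP download, which is useful for offline tests.
    """
    cache_dir = Path(cache_dir)
    cache_dir.mkdir(parents=True, exist_ok=True)
    target = cache_dir / filename
    if target.exists():
        return target  # already on disk: reuse it instead of downloading again

    # Download to a temporary name first so an interrupted download
    # never leaves a half-written file under the final name.
    partial = target.with_name(target.name + ".part")
    if fetch is None:
        with urllib.request.urlopen(url) as response, open(partial, "wb") as out:
            shutil.copyfileobj(response, out)
    else:
        fetch(url, partial)
    partial.replace(target)
    return target
```

In a pytest suite, this could be called from a session-scoped fixture, for example `ensure_model("https://example.invalid/model.bin", "tests/.cache", "model.bin")`, with the returned path handed to the Executor under test.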

In production, it is recommended to set up your workspace, model, and class to load from disk. If the Executor is served with Docker, make sure to also map that directory into the container as a volume, because the container's filesystem is not persisted between runs.
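As a rough illustration of the directory mapping, the sketch below builds a Docker bind-mount specification in the `host_path:container_path` form accepted by `docker run -v`. The helper names and the `/models` container path are assumptions made for this example, and `executor-image` is the image name used earlier in this README; how the mapping is passed to a Flow depends on your Jina version.

```python
from pathlib import Path


def docker_volume_spec(host_dir, container_dir):
    """Build a 'host:container' bind-mount string for an existing host directory."""
    host = Path(host_dir).expanduser().resolve()
    if not host.is_dir():
        # Fail early: mounting a missing directory would hide the real problem.
        raise FileNotFoundError(f"model directory not found: {host}")
    return f"{host}:{container_dir}"


def docker_run_command(image, host_dir, container_dir="/models"):
    """Return the argument list for `docker run` with the directory mounted."""
    return [
        "docker", "run", "--rm",
        "-v", docker_volume_spec(host_dir, container_dir),
        image,
    ]
```

For example, `docker_run_command("executor-image:latest", "./models")` yields a command whose `-v` argument maps the local `models` directory to `/models` inside the container, so a model stored there survives container restarts.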