Serve Llama 2 (7B/13B/70B) Large Language Models efficiently at scale by leveraging heterogeneous Dell™ PowerEdge™ Rack servers in a distributed manner.

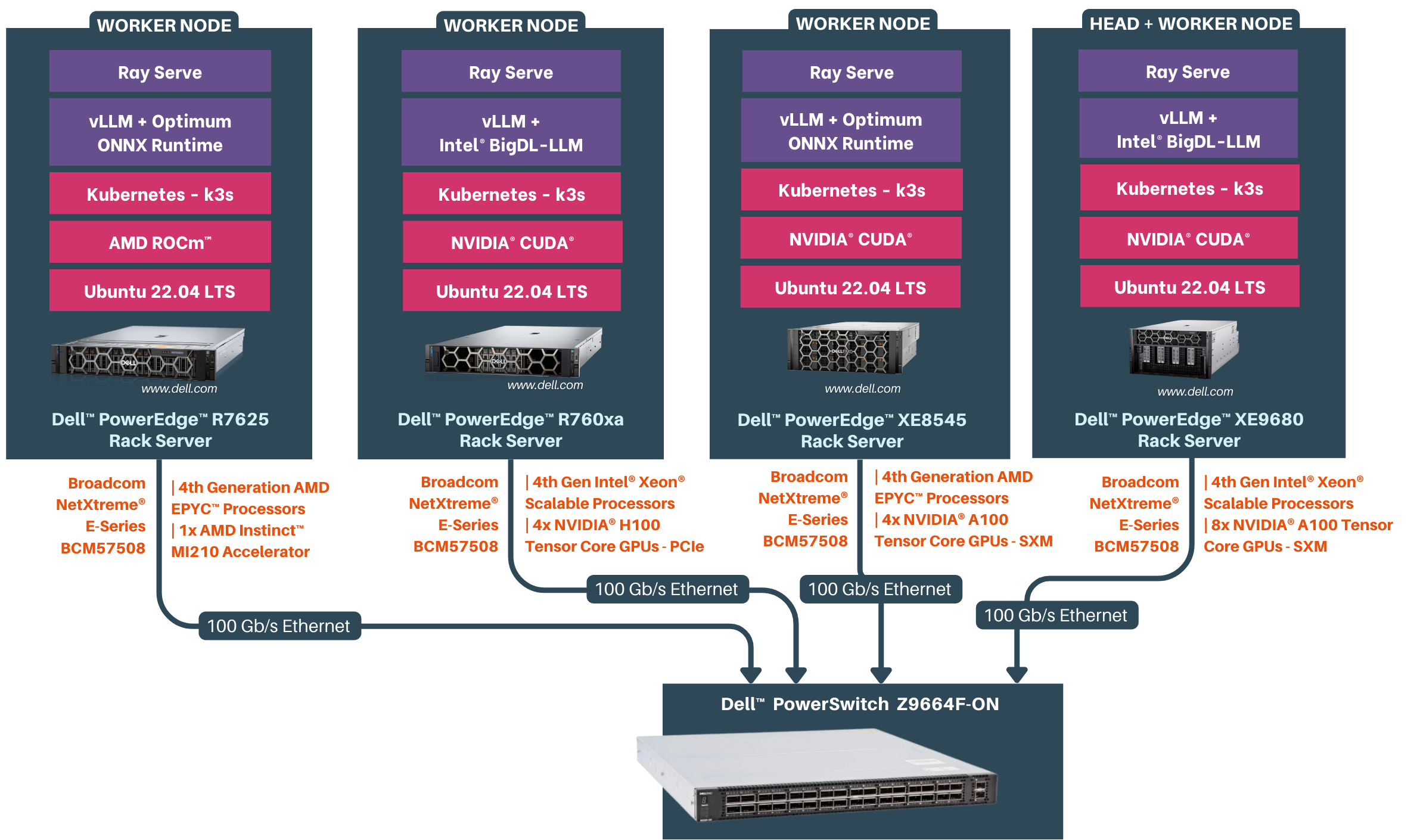

This developer documentation is a comprehensive guide to serving Llama 2 Large Language Models (LLMs) in a distributed environment on Dell™ PowerEdge™ Rack servers networked with Broadcom® Ethernet Network Adapters. It aims to give developers the knowledge and tools needed to build GPU and CPU clusters that maximize LLM inference performance. By combining the compute power of Dell™ PowerEdge™ Rack servers, the networking capability of Broadcom® Ethernet Network Adapters, and the scalability of Kubernetes, developers can serve LLMs efficiently to meet their application requirements.

Before we start, make sure you have the following software components set up on your Dell™ PowerEdge™ Rack servers:

- Ubuntu Server 22.04, with kernel v5.15 or higher

- Docker v24.0 or later

- NVIDIA® CUDA® Toolkit v12.2.1

- AMD ROCm™ v5.7.1

Additionally, ensure you have the following hardware components at your disposal:

- Dell™ PowerEdge™ Rack Servers equipped with NVIDIA® GPUs / AMD GPUs.

- Broadcom® Ethernet Network Adapters

- Dell™ PowerSwitch Z9664F-ON

The solution was tested with the following hardware stack:

| Server | CPU | RAM | Disk | GPU |
|---|---|---|---|---|
| Dell™ PowerEdge™ XE9680 | Intel® Xeon® Platinum 8480+ | 2 TB | 3 TB | 8x NVIDIA® A100 Tensor Core 80GB SXM GPUs |
| Dell™ PowerEdge™ XE8545 | AMD EPYC™ 7763 64-Core Processor | 1 TB | 2 TB | 4x NVIDIA® A100 Tensor Core 80GB SXM GPUs |
| Dell™ PowerEdge™ R760xa | Intel® Xeon® Platinum 8480+ | 1 TB | 1 TB | 4x NVIDIA® H100 Tensor Core 80GB PCIe GPUs |
| Dell™ PowerEdge™ R7625 | AMD EPYC™ 9354 32-Core Processor | 1.5 TB | 1 TB | 1x AMD Instinct™ MI210 Accelerator |

Estimated Time: 40 mins ⏱️

To set the stage for distributed inference, we'll start by configuring the cluster. Using Kubernetes as the orchestration system, we'll create a cluster made up of a designated server (head) node and multiple agent (worker) nodes. You can either include the head node in the distributed inference workload or run an additional worker node on the same machine as the head node, adding that machine's compute capacity to the cluster.
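As a minimal sketch of how the head and worker nodes are tied together, the Python helper below builds the install commands from the K3s quick-start: the server (head) node runs the installer as-is, and each agent (worker) node runs it with `K3S_URL` pointing at the head node's API port 6443 and `K3S_TOKEN` set to the join token stored on the head node at `/var/lib/rancher/k3s/server/node-token`. The function names, IP addresses, and token are hypothetical. By default a K3s server also runs workloads itself, which corresponds to including the head node in inference.

```python
import shlex

# K3s quick-start installer and the file where the server stores its join token.
K3S_INSTALLER = "curl -sfL https://get.k3s.io"
NODE_TOKEN_PATH = "/var/lib/rancher/k3s/server/node-token"
K3S_API_PORT = 6443  # Kubernetes API port that agents connect to


def server_install_cmd() -> str:
    """Command to run on the head (server) node."""
    return f"{K3S_INSTALLER} | sh -"


def agent_install_cmd(server_ip: str, token: str) -> str:
    """Command to run on a worker (agent) node so that it joins the head node."""
    url = f"https://{server_ip}:{K3S_API_PORT}"
    return (
        f"{K3S_INSTALLER} | "
        f"K3S_URL={shlex.quote(url)} K3S_TOKEN={shlex.quote(token)} sh -"
    )


def cluster_plan(head_ip: str, worker_ips: list[str], token: str) -> list[tuple[str, str]]:
    """List (host, command) pairs; a list allows a worker on the head's own machine."""
    plan = [(head_ip, server_install_cmd())]
    for ip in worker_ips:
        plan.append((ip, agent_install_cmd(head_ip, token)))
    return plan


if __name__ == "__main__":
    # Hypothetical addresses and token; read the real token from NODE_TOKEN_PATH on the head node.
    for host, cmd in cluster_plan("10.0.0.10", ["10.0.0.11", "10.0.0.12"], "<token>"):
        print(f"[{host}] {cmd}")
```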

To make the GPUs usable by workloads, we'll install the NVIDIA® device plugin for Kubernetes and the AMD device plugin for Kubernetes using Helm. The plugins advertise each node's GPUs to Kubernetes, so the distributed inference environment can schedule work onto the accelerators it needs.
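Once the device plugins are running, each node advertises its GPUs as extended resources, `nvidia.com/gpu` for NVIDIA and `amd.com/gpu` for AMD, and a pod asks for GPUs by setting those resources in its container limits. The sketch below builds such a container spec as a Python dict (the same structure you would write in YAML); the container name and image are hypothetical placeholders.

```python
import json

# Extended resource names advertised by the vendor device plugins.
GPU_RESOURCE = {
    "nvidia": "nvidia.com/gpu",
    "amd": "amd.com/gpu",
}


def container_spec(name: str, image: str, vendor: str, gpus: int) -> dict:
    """Container spec that asks the scheduler for `gpus` whole GPUs of one vendor."""
    if vendor not in GPU_RESOURCE:
        raise ValueError(f"unknown GPU vendor: {vendor!r}")
    if gpus < 1:
        raise ValueError("request at least one GPU")
    return {
        "name": name,
        "image": image,
        # Extended resources are set under limits; Kubernetes uses the same value as the request.
        "resources": {"limits": {GPU_RESOURCE[vendor]: str(gpus)}},
    }


if __name__ == "__main__":
    # Hypothetical container name and image.
    spec = container_spec("llm-worker", "registry.example.com/llm-inference:latest", "nvidia", 4)
    print(json.dumps(spec, indent=2))
```

GPUs are requested as whole units; the scheduler only places the pod on a node whose plugin advertises at least that many free GPUs of the named resource.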

Get started with setting up your distributed cluster by following these steps:

- K3s Setup on Server and Agent Nodes

- Installing Helm

- NVIDIA® Device Plugins for Kubernetes

- AMD Device Plugins for Kubernetes
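A quick way to confirm the cluster is ready is to check that every GPU node reports allocatable GPUs. This sketch parses the JSON printed by `kubectl get nodes -o json`, using the node list's `items[].metadata.name` and `items[].status.allocatable` fields. It assumes `kubectl` is on the PATH and configured for this cluster; the function names are illustrative.

```python
import json
import shutil
import subprocess

GPU_RESOURCES = ("nvidia.com/gpu", "amd.com/gpu")


def gpus_by_node(nodes: dict) -> dict[str, dict[str, int]]:
    """Map node name -> allocatable GPU counts, from `kubectl get nodes -o json` output."""
    result = {}
    for node in nodes.get("items", []):
        name = node["metadata"]["name"]
        allocatable = node.get("status", {}).get("allocatable", {})
        result[name] = {r: int(allocatable[r]) for r in GPU_RESOURCES if r in allocatable}
    return result


def fetch_nodes() -> dict:
    """Run kubectl and parse its JSON output (requires access to the cluster)."""
    out = subprocess.run(
        ["kubectl", "get", "nodes", "-o", "json"],
        check=True, capture_output=True, text=True,
    ).stdout
    return json.loads(out)


if __name__ == "__main__":
    if shutil.which("kubectl"):
        for name, gpus in gpus_by_node(fetch_nodes()).items():
            print(f"{name}: {gpus or 'no GPUs advertised'}")
    else:
        print("kubectl not found on PATH; nothing to check")
```

A GPU node that shows no GPU resources usually means its device plugin pod is not running on that node.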

Estimated Time: 10 mins ⏱️

Next, we'll install KubeRay in the Kubernetes cluster and configure a Kubernetes secret for pulling the inference Docker images. This step provides a robust, scalable foundation for distributed inference.
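A secret for pulling images is typically a registry credential of type `kubernetes.io/dockerconfigjson`, the same kind that `kubectl create secret docker-registry` produces. As a sketch, assuming a private registry with username/password authentication, the helper below builds that Secret manifest; the secret name, namespace, registry host, and credentials are hypothetical, and the secret this guide uses may differ. Pods (or the Ray cluster spec) then reference the secret by name under `imagePullSecrets`.

```python
import base64
import json


def registry_secret(name: str, namespace: str, registry: str,
                    username: str, password: str) -> dict:
    """Build a kubernetes.io/dockerconfigjson Secret for pulling private images."""
    auth = base64.b64encode(f"{username}:{password}".encode()).decode()
    docker_config = {
        "auths": {
            registry: {"username": username, "password": password, "auth": auth}
        }
    }
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "kubernetes.io/dockerconfigjson",
        # Secret `data` values must be base64-encoded.
        "data": {
            ".dockerconfigjson": base64.b64encode(
                json.dumps(docker_config).encode()
            ).decode()
        },
    }


if __name__ == "__main__":
    # Hypothetical values; substitute your registry and credentials.
    manifest = registry_secret("inference-registry", "default",
                               "registry.example.com", "user", "password")
    print(json.dumps(manifest, indent=2))
```

The printed JSON can be applied with `kubectl apply -f -`.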

Estimated Time: 1hr ⏱️

We will now run distributed inference of the Llama 2 70B model developed by Meta AI. To do this, we use Ray Serve together with inference backends optimized for the hardware available in the cluster, for an efficient and scalable workflow.
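To see why a 70B model has to be spread over several GPUs, here is a back-of-the-envelope sketch assuming FP16 weights split evenly by tensor parallelism across `tp` GPUs with 80 GB each. It uses the published Llama 2 70B shape (80 layers, 8 key/value heads, head dimension 128) to size the per-token KV cache; `mem_fraction` plays a role similar to vLLM's `gpu_memory_utilization` (default 0.9). The numbers ignore activations and runtime overhead, so they are rough upper bounds, not measurements from this setup, and the helper names are illustrative.

```python
from dataclasses import dataclass

BYTES_FP16 = 2  # bytes per value for FP16/BF16 weights and KV cache


@dataclass(frozen=True)
class ModelShape:
    params_billion: float  # nominal parameter count
    layers: int
    kv_heads: int
    head_dim: int


# Published Llama 2 70B architecture: 80 layers, 8 KV heads (grouped-query attention), head dim 128.
LLAMA2_70B = ModelShape(params_billion=70, layers=80, kv_heads=8, head_dim=128)


def kv_bytes_per_token(m: ModelShape) -> int:
    """Bytes of K and V for every layer and KV head, for one token."""
    return 2 * m.layers * m.kv_heads * m.head_dim * BYTES_FP16


def per_gpu_plan(m: ModelShape, tp: int, gpu_mem_gb: float, mem_fraction: float = 0.9):
    """Split weights and KV heads evenly over `tp` GPUs (tensor parallelism).

    Returns (weights GB per GPU, KV-cache GB per GPU, tokens that fit in the KV cache).
    """
    if m.kv_heads % tp:
        raise ValueError("this sketch assumes tp divides the number of KV heads")
    weights_gb = m.params_billion * BYTES_FP16 / tp
    kv_gb = gpu_mem_gb * mem_fraction - weights_gb
    if kv_gb <= 0:
        raise ValueError("weights alone exceed the usable GPU memory")
    # Each GPU holds kv_heads / tp heads, so it stores 1/tp of every token's KV entries.
    tokens = int(kv_gb * 1e9 // (kv_bytes_per_token(m) / tp))
    return weights_gb, kv_gb, tokens


if __name__ == "__main__":
    for tp in (2, 4, 8):
        w, kv, tok = per_gpu_plan(LLAMA2_70B, tp, gpu_mem_gb=80)
        print(f"tp={tp}: {w:.1f} GB weights/GPU, {kv:.1f} GB KV cache/GPU, ~{tok:,} tokens")
```

With `tp=2`, the weights alone take 70 GB per GPU, leaving very little room for the KV cache; larger `tp` values trade more GPUs per replica for more cache headroom.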

Let's deploy the Llama 2 models on your cluster for distributed inference.

The Ray Dashboard offers real-time insight into serving and is accessible at http://127.0.0.1:30265. Its Serve tab lets you monitor the deployed Serve applications and their logs, while the Cluster tab provides an overview of device statistics across the cluster.
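Besides the web UI, the Ray Dashboard serves the Ray Serve REST API, so application status can also be checked from a script. The sketch below queries `GET /api/serve/applications/` on the dashboard address from this guide and reduces the response to application and deployment statuses. Field names follow the Ray Serve REST API documentation for Ray 2.x and may differ between Ray versions; the helper names are illustrative.

```python
import json
import urllib.request

# Ray Dashboard address used in this guide.
DASHBOARD_URL = "http://127.0.0.1:30265"


def fetch_serve_details(base_url: str = DASHBOARD_URL, timeout: float = 10) -> dict:
    """GET the Serve application details from the Ray Dashboard's REST API."""
    url = f"{base_url}/api/serve/applications/"
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


def summarize(details: dict) -> dict[str, tuple]:
    """Map app name -> (app status, {deployment name: deployment status})."""
    summary = {}
    for app_name, app in details.get("applications", {}).items():
        deployments = {
            name: info.get("status")
            for name, info in app.get("deployments", {}).items()
        }
        summary[app_name] = (app.get("status"), deployments)
    return summary
```

Call `summarize(fetch_serve_details())` while the cluster is up; an application has finished deploying when its status is `RUNNING`.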

The Grafana Dashboard provides comprehensive system and cluster metrics. Its dashboards offer in-depth insight into Kubernetes cluster-level and node-level metrics, making it easier to monitor and troubleshoot your nodes and deployments.

Dive into monitoring your cluster.

Use the application endpoint to run a chatbot with Gradio.
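This section does not spell out the request format, so the following is a sketch under assumptions: the Serve application is reachable at `http://127.0.0.1:8000/` (8000 is Ray Serve's default HTTP port) and accepts a JSON body of the form `{"prompt": ...}`, returning plain text. Both the URL and the payload schema are hypothetical; adjust them to match your deployed application. The `chat(message, history)` signature matches the function that Gradio's `ChatInterface` calls.

```python
import json
import urllib.request

# Hypothetical endpoint; replace with your Serve application's route.
ENDPOINT = "http://127.0.0.1:8000/"


def build_request(prompt: str, endpoint: str = ENDPOINT) -> urllib.request.Request:
    """POST request carrying the prompt as JSON (hypothetical payload schema)."""
    body = json.dumps({"prompt": prompt}).encode("utf-8")
    return urllib.request.Request(
        endpoint,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(message: str, history=None, endpoint: str = ENDPOINT, timeout: float = 120) -> str:
    """Send one user message and return the model's text reply; history is ignored here."""
    with urllib.request.urlopen(build_request(message, endpoint), timeout=timeout) as resp:
        return resp.read().decode("utf-8")
```

With Gradio installed, `import gradio as gr` followed by `gr.ChatInterface(fn=chat).launch()` serves a simple chat UI in front of the endpoint.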

To gain a comprehensive understanding of every facet involved in serving a Large Language Model (LLM), explore the following resources:

| Resource | Link |
|---|---|
| Llama 2 LLM Model | Meta AI Llama 2 Page, Llama 2 Research Paper |
| Ray Serve | Ray Serve GitHub repo |
| Ray Service | Ray Service Documentation |
| vLLM | vLLM GitHub |
| Intel BigDL | BigDL GitHub |
| Optimum ONNXRuntime | HuggingFace Optimum ONNX Runtime, ONNX Runtime, Optimum ONNXRuntime GitHub |