Authors: Luca Iezzi and Giulia Ciabatti.

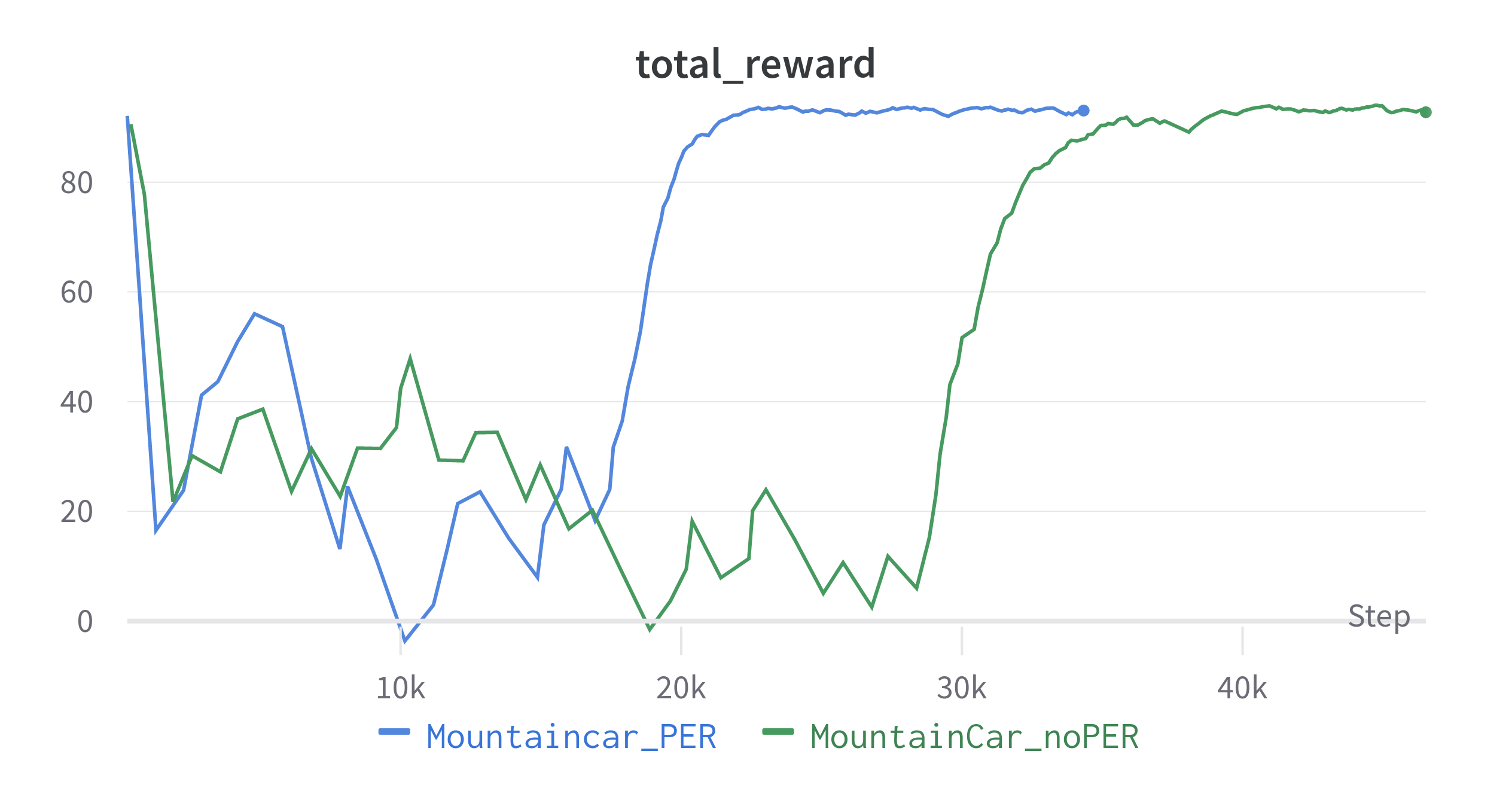

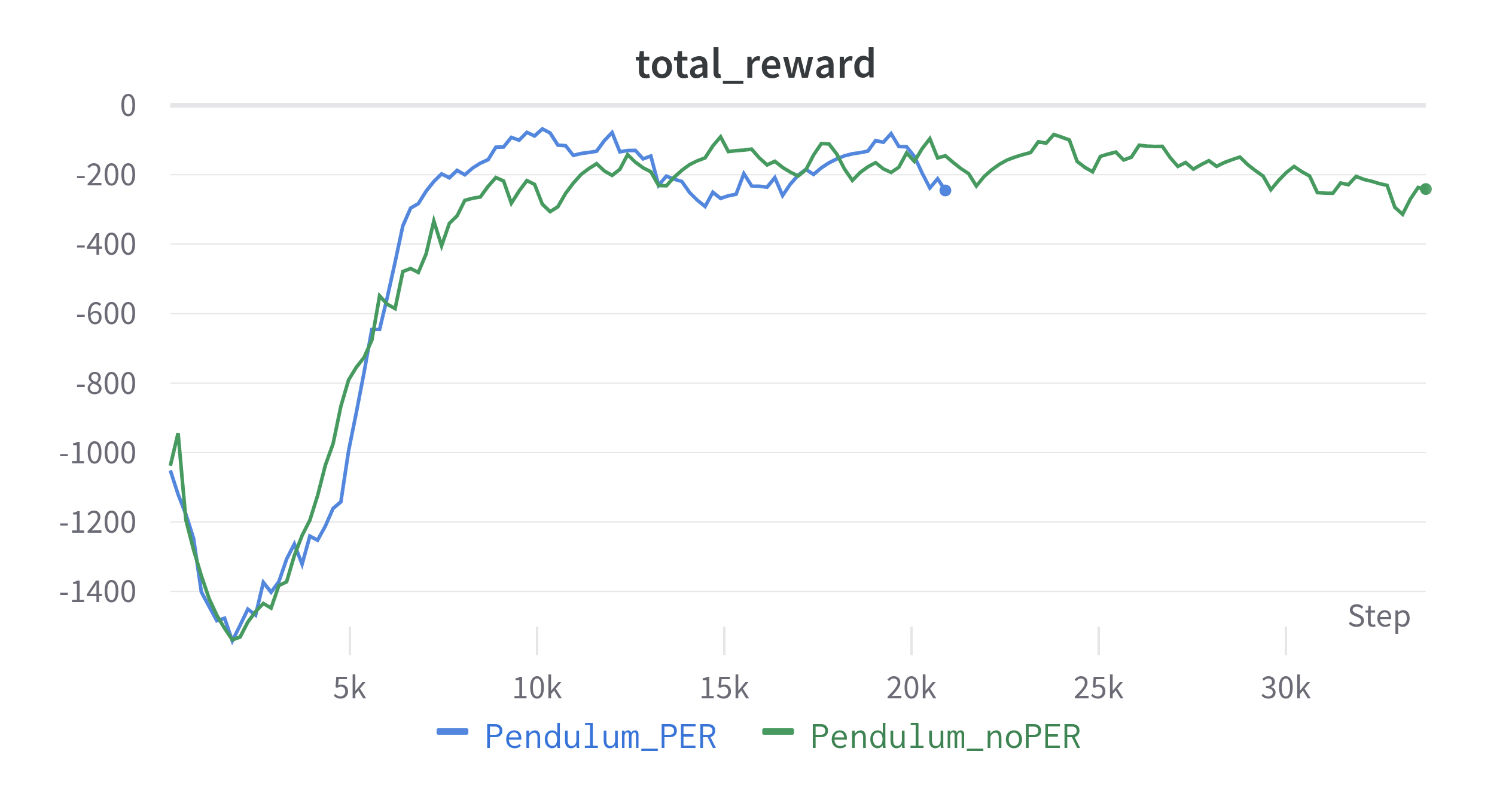

This repository is a complete reimplementation of DDPG with Prioritized Experience Replay, adapted to the Pendulum-v1 and MountainCarContinuous-v0 environments from OpenAI Gym.
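As a rough illustration of what proportional prioritized replay computes, the sketch below turns per-transition priorities into sampling probabilities and importance-sampling weights. It is a minimal, standalone example and not the code from this repository: the function names `per_probabilities` and `importance_weights`, and the default `alpha` and `beta` values, are assumptions chosen for illustration.

```python
import random


def per_probabilities(priorities, alpha=0.6):
    """Sampling probabilities P(i) = p_i^alpha / sum_k p_k^alpha.

    alpha=0 gives uniform sampling; alpha=1 samples proportionally to priority.
    (Hypothetical helper; the default alpha is an assumption.)
    """
    scaled = [p ** alpha for p in priorities]
    total = sum(scaled)
    return [s / total for s in scaled]


def importance_weights(probs, beta=0.4):
    """Importance-sampling weights w_i = (N * P(i))^(-beta), scaled by the max weight.

    The weights correct the bias introduced by non-uniform sampling.
    (Hypothetical helper; the default beta is an assumption.)
    """
    n = len(probs)
    raw = [(n * p) ** (-beta) for p in probs]
    max_w = max(raw)
    return [w / max_w for w in raw]


# Toy priorities for four stored transitions (e.g. absolute TD errors).
priorities = [1.0, 2.0, 4.0, 1.0]
probs = per_probabilities(priorities, alpha=1.0)
weights = importance_weights(probs, beta=1.0)

# Draw a mini-batch of indices according to the priorities.
rng = random.Random(0)
batch_idx = rng.choices(range(len(priorities)), weights=probs, k=2)
```

In a DDPG setting, weights of this kind would typically multiply the per-sample critic loss, and the priorities of the sampled transitions would be refreshed from the new TD errors after each update.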

| Agent playing |
|---|
| |

This implementation is based on Python 3.8 and PyTorch Lightning. To install all the requirements:

    $ pip install -r requirements.txt

| PER_BUFFER | ACTOR | CRITIC |
|---|---|---|
| | | |

The complete pipeline to train the 3 model components:

To train, set `ENV` in `simple_config.py` to the Gym environment you want to train on, set `TRAIN=True`, and run:

    $ python main.py

To run a trained agent, copy the path of one of the checkpoints in `ckpt/` into the variable `model` in `main.py`, set `TRAIN=False` and `RENDER=True` in `simple_config.py`, and run:

    $ python main.py

Some of the implementations (e.g. SumTree and MinTree) were taken from existing repos, but it has been impossible to track down the original author :( . If you recognize your code there, don't hesitate to drop me an email and I will add your repo to the credits!
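For readers unfamiliar with the sum tree mentioned above: it stores priorities in the leaves of a binary tree whose internal nodes hold the sum of their children, so both updating a priority and sampling proportionally to priority take O(log n). The sketch below is an illustrative minimal version and not the code used in this repository. The class name `SumTree` and its methods `update`, `total`, and `find` are hypothetical, and the capacity is assumed to be a power of two.

```python
import random


class SumTree:
    """Flat-array binary tree: leaves hold priorities, parents hold child sums.

    Illustrative sketch; assumes `capacity` is a power of two.
    """

    def __init__(self, capacity):
        self.capacity = capacity
        # Index 1 is the root; leaves live at [capacity, 2 * capacity).
        self.tree = [0.0] * (2 * capacity)

    def update(self, index, priority):
        """Set the priority of leaf `index` and refresh the sums above it."""
        pos = index + self.capacity
        self.tree[pos] = priority
        pos //= 2
        while pos >= 1:
            self.tree[pos] = self.tree[2 * pos] + self.tree[2 * pos + 1]
            pos //= 2

    def total(self):
        """Sum of all priorities (stored at the root)."""
        return self.tree[1]

    def find(self, value):
        """Return the leaf index whose cumulative priority range contains `value`."""
        pos = 1
        while pos < self.capacity:
            left = 2 * pos
            if value < self.tree[left]:
                pos = left
            else:
                value -= self.tree[left]
                pos = left + 1
        return pos - self.capacity


# Fill a small tree with toy priorities.
tree = SumTree(4)
for i, p in enumerate([1.0, 2.0, 4.0, 1.0]):
    tree.update(i, p)

# Sample one index with probability proportional to its priority.
rng = random.Random(0)
sampled = tree.find(rng.uniform(0.0, tree.total()))
```

A min tree can be built the same way by replacing the sum in `update` with `min`, which gives quick access to the smallest stored priority.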