Official implementation of the paper "Revisiting Counterfactual Problems in Referring Expression Comprehension" [CVPR 2024]

- (2024/3/6) Release our C-REC datasets C-RefCOCO/+/g.

- (2024/10/18) Release our C-REC model.

C-RefCOCO/+/g are three fine-grained counterfactual referring expression comprehension (C-REC) datasets built on three REC benchmark datasets RefCOCO/+/g through our proposed CSG method.

The ratio of normal to counterfactual samples in C-RefCOCO/+/g is 1:1. The sizes of C-RefCOCO/+/g are shown below.

| | train | val | testA(test) | testB |
|---|---|---|---|---|
| C-RefCOCO | 61870 | 15566 | 6994 | 8810 |
| C-RefCOCO+ | 59962 | 15328 | 7846 | 7108 |
| C-RefCOCOg | 30298 | 3676 | 7122 | |

The number of normal samples in each of the seven attribute categories is shown below. Note that some datasets do not contain certain categories of attribute words, such as A5 (relative location relation) and A6 (relative location object) in C-RefCOCO+.

| | A1 | A2 | A3 | A4 | A5 | A6 | A7 |
|---|---|---|---|---|---|---|---|
| C-RefCOCO | 23862 | 5136 | 464 | 16142 | 131 | 131 | 754 |
| C-RefCOCO+ | 28573 | 9864 | 1685 | 2646 | 0 | 0 | 2354 |
| C-RefCOCOg | 11312 | 4114 | 638 | 4024 | 108 | 108 | 244 |
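The two tables can be cross-checked against the stated 1:1 ratio. The sketch below assumes that the size table counts normal and counterfactual samples together, so half of each dataset total should equal the sum of its A1-A7 counts. The numbers are copied from the tables; the function name is only illustrative.

```python
# Split sizes (train, val, testA/test, testB) copied from the size table.
SPLIT_SIZES = {
    "C-RefCOCO": [61870, 15566, 6994, 8810],
    "C-RefCOCO+": [59962, 15328, 7846, 7108],
    "C-RefCOCOg": [30298, 3676, 7122],
}

# Normal-sample counts for A1..A7 copied from the attribute table.
ATTRIBUTE_COUNTS = {
    "C-RefCOCO": [23862, 5136, 464, 16142, 131, 131, 754],
    "C-RefCOCO+": [28573, 9864, 1685, 2646, 0, 0, 2354],
    "C-RefCOCOg": [11312, 4114, 638, 4024, 108, 108, 244],
}


def normal_sample_totals():
    """Return (half of dataset size, sum of attribute counts) per dataset."""
    totals = {}
    for name, sizes in SPLIT_SIZES.items():
        # Assumption: a 1:1 ratio means half of all samples are normal.
        expected_normal = sum(sizes) // 2
        totals[name] = (expected_normal, sum(ATTRIBUTE_COUNTS[name]))
    return totals


for name, (expected, counted) in normal_sample_totals().items():
    print(name, expected, counted, expected == counted)
```

Under this assumption the two figures agree for all three datasets, which suggests each normal sample is assigned exactly one attribute category.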

- Download the MS-COCO train2014 images; all images in our datasets come from this set.

- Our datasets are in:

    $ROOT/data
    |-- crec
        |-- c_refcoco.json
        |-- c_refcoco+.json
        |-- c_refcocog.json

- Definition of each field in the JSON files:

| item | type | description |
|---|---|---|
| atts | str | attribute words |
| bbox | list | bounding box ([0,0,0,0] for counterfactual samples) |
| iid | int | image id (from ms-coco train2014) |
| refs | str | the original positive expression for both normal and counterfactual samples |
| cf_id | int | counterfactual polarity (1: counterfactual; 0: normal) |
| att_pos | int | position of attribute words (start from 0) |
| query | str | text query |
| neg | str | negative query (it would be the normal text for counterfactual samples; this is for contrastive loss calculation) |
| att_id | int | category of attribute word, from 1 to 7 (A1-A7) |
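To illustrate how these fields fit together, here is a minimal sketch that summarizes a list of sample records. It assumes each sample is a dict with the keys listed in the table; the top-level layout of the JSON files is not described here, so the sketch works on an in-memory list. The two toy records, their values, and the helper names `summarize` and `is_consistent` are made up for illustration and are not taken from the datasets or the repository.

```python
from collections import Counter


def summarize(samples):
    """Count samples per polarity and normal samples per attribute category."""
    polarity = Counter(s["cf_id"] for s in samples)
    categories = Counter(
        "A%d" % s["att_id"] for s in samples if s["cf_id"] == 0
    )
    return polarity, categories


def is_consistent(sample):
    """Check the documented rule: counterfactual samples carry an all-zero box."""
    if sample["cf_id"] == 1:
        return list(sample["bbox"]) == [0, 0, 0, 0]
    return True


# Hypothetical records; every value below is invented for the example.
samples = [
    {"atts": "red", "bbox": [10, 20, 50, 40], "iid": 1, "refs": "the red car",
     "cf_id": 0, "att_pos": 1, "query": "the red car",
     "neg": "the blue car", "att_id": 1},
    {"atts": "blue", "bbox": [0, 0, 0, 0], "iid": 1, "refs": "the red car",
     "cf_id": 1, "att_pos": 1, "query": "the blue car",
     "neg": "the red car", "att_id": 1},
]

polarity, categories = summarize(samples)
print(polarity, categories, all(is_consistent(s) for s in samples))
```

Adapting this to the released files would only need a loading step that yields such dicts, once the actual file layout is known.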

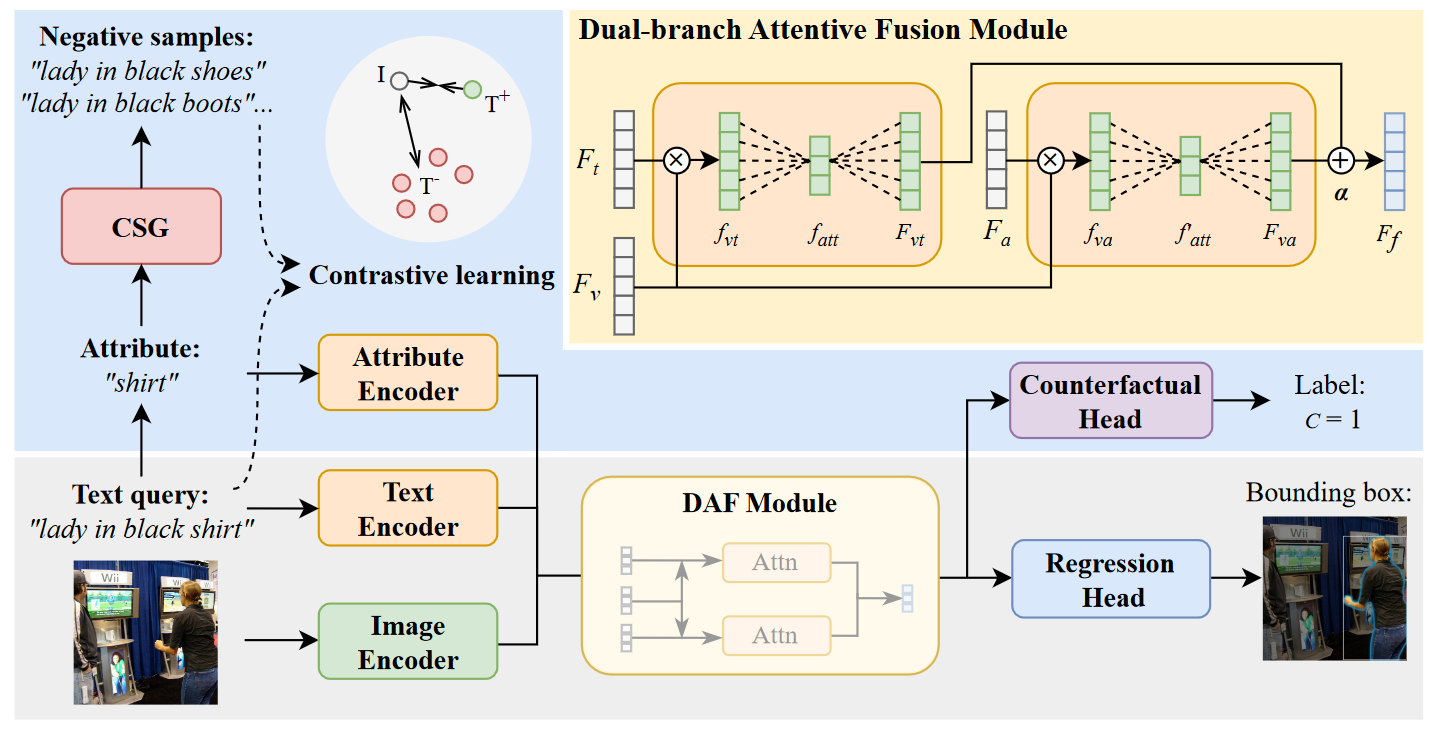

Our CREC model is based on a one-stage referring expression comprehension model, augmented by our newly built datasets.

- Python 3.7

- PyTorch 1.11.0 + CUDA 11.3

- Install mmcv following the installation guide

- Install spaCy, initialize the GloVe vectors, and install the other requirements as follows:

pip install -r requirements.txt

wget https://github.com/explosion/spacy-models/releases/download/en_vectors_web_lg-2.1.0/en_vectors_web_lg-2.1.0.tar.gz -O en_vectors_web_lg-2.1.0.tar.gz

pip install en_vectors_web_lg-2.1.0.tar.gz
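After installation, it can help to confirm that the expected packages are visible to the interpreter. The following is a small sketch using only the standard library. It assumes the import names `torch`, `mmcv`, `spacy`, and `en_vectors_web_lg` (the last being the module name the vectors package is expected to install under), and the helper `check_environment` is hypothetical rather than part of this repository.

```python
import importlib.util
import sys


def check_environment(modules=("torch", "mmcv", "spacy", "en_vectors_web_lg")):
    """Report the Python version and whether each module can be found."""
    report = {"python": "%d.%d" % sys.version_info[:2]}
    for name in modules:
        # find_spec returns None when a top-level module is not installed.
        report[name] = importlib.util.find_spec(name) is not None
    return report


if __name__ == "__main__":
    for key, value in check_environment().items():
        print(key, value)
```

A `False` entry only means the module was not found on the current path; it does not check versions such as PyTorch 1.11.0 or CUDA 11.3.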

- Config preparation. Modify the config file according to your needs.

- Train the model. Download pretrained weights of visual backbone following Pretrained Weights.

[Optional] Resume from checkpoint:

- To auto resume from `last_checkpoint.pth`, set `train.auto_resume.enabled=True` in config.py, which will automatically resume from `last_checkpoint.pth` saved in `cfg.train.output_dir`.

- To resume from a checkpoint, set `train.auto_resume.enabled=False` and `train.resume_path=path/to/checkpoint.pth` in config.py.
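The two resume modes can be pictured with the following sketch. It is not the repository's code: the function `resolve_resume_path` and its parameters are hypothetical stand-ins for `train.auto_resume.enabled`, `cfg.train.output_dir`, and `train.resume_path`, and returning `None` (train from scratch) when no `last_checkpoint.pth` exists is an assumption.

```python
import os
import tempfile


def resolve_resume_path(auto_resume, output_dir, resume_path=None):
    """Pick the checkpoint to resume from, mirroring the two options above."""
    if auto_resume:
        candidate = os.path.join(output_dir, "last_checkpoint.pth")
        # Assumption: with no saved checkpoint, training starts from scratch.
        return candidate if os.path.isfile(candidate) else None
    # Manual mode: use the explicitly configured path (may be None).
    return resume_path


with tempfile.TemporaryDirectory() as out_dir:
    # Auto resume before any checkpoint has been written.
    fresh = resolve_resume_path(True, out_dir)
    # Simulate a saved checkpoint with an empty placeholder file.
    open(os.path.join(out_dir, "last_checkpoint.pth"), "wb").close()
    resumed = resolve_resume_path(True, out_dir)

manual = resolve_resume_path(False, "unused", "path/to/checkpoint.pth")
print(fresh, os.path.basename(resumed), manual)
```

In this reading, auto resume ignores `resume_path` entirely, which is why the second option asks for `train.auto_resume.enabled=False`.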

To train our model on 4 GPUs, run:

bash tools/train.sh configs/crec_refcoco.py 4

- Test the model.

To test our model from `path/to/checkpoint.pth` on 1 GPU, run:

bash tools/eval.sh configs/crec_refcoco.py 1 path/to/checkpoint.pth

This project is released under the Apache 2.0 license.

If this repository is helpful for your research, or you want to refer to the results provided here in your paper, please consider citing:

@inproceedings{yu2024revisiting,
  title={Revisiting Counterfactual Problems in Referring Expression Comprehension},
  author={Yu, Zhihan and Li, Ruifan},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  pages={13438--13448},
  year={2024}
}

Thanks a lot for the nicely organized code from the following repos: