The Official PyTorch Implementation of "MHEntropy: Multiple Hypotheses Meet Entropy for Pose and Shape Recovery" (ICCV 2023 Paper)

Rongyu Chen · Linlin Yang* · Angela Yao

National University of Singapore, School of Computing, Computer Vision & Machine Learning (CVML) Group

ICCV 2023

Thanks for your interest.

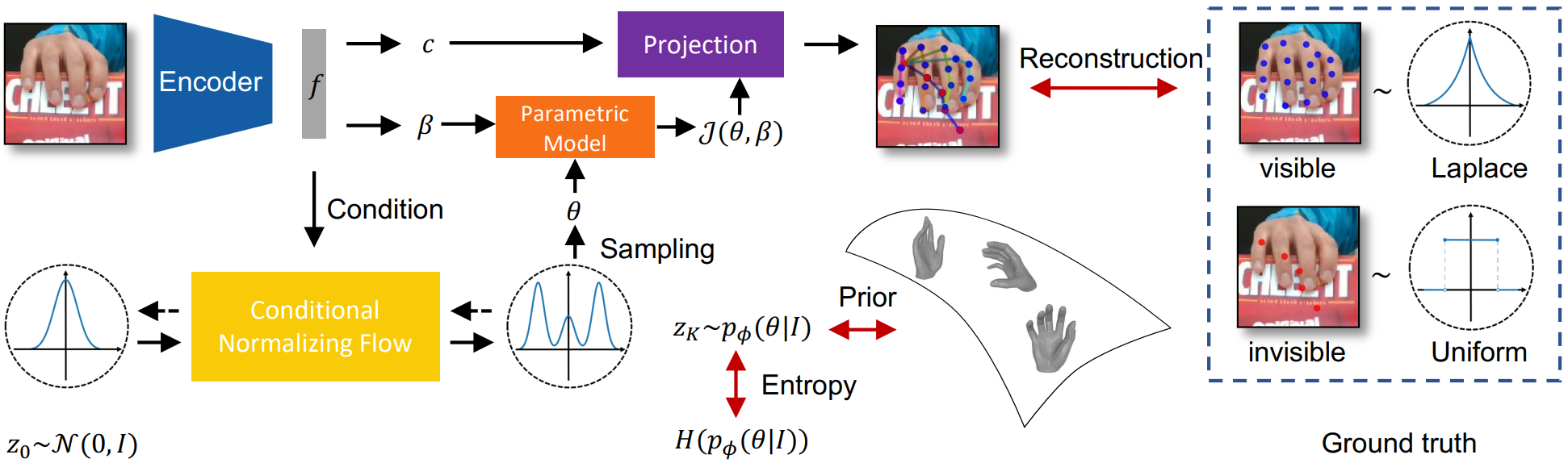

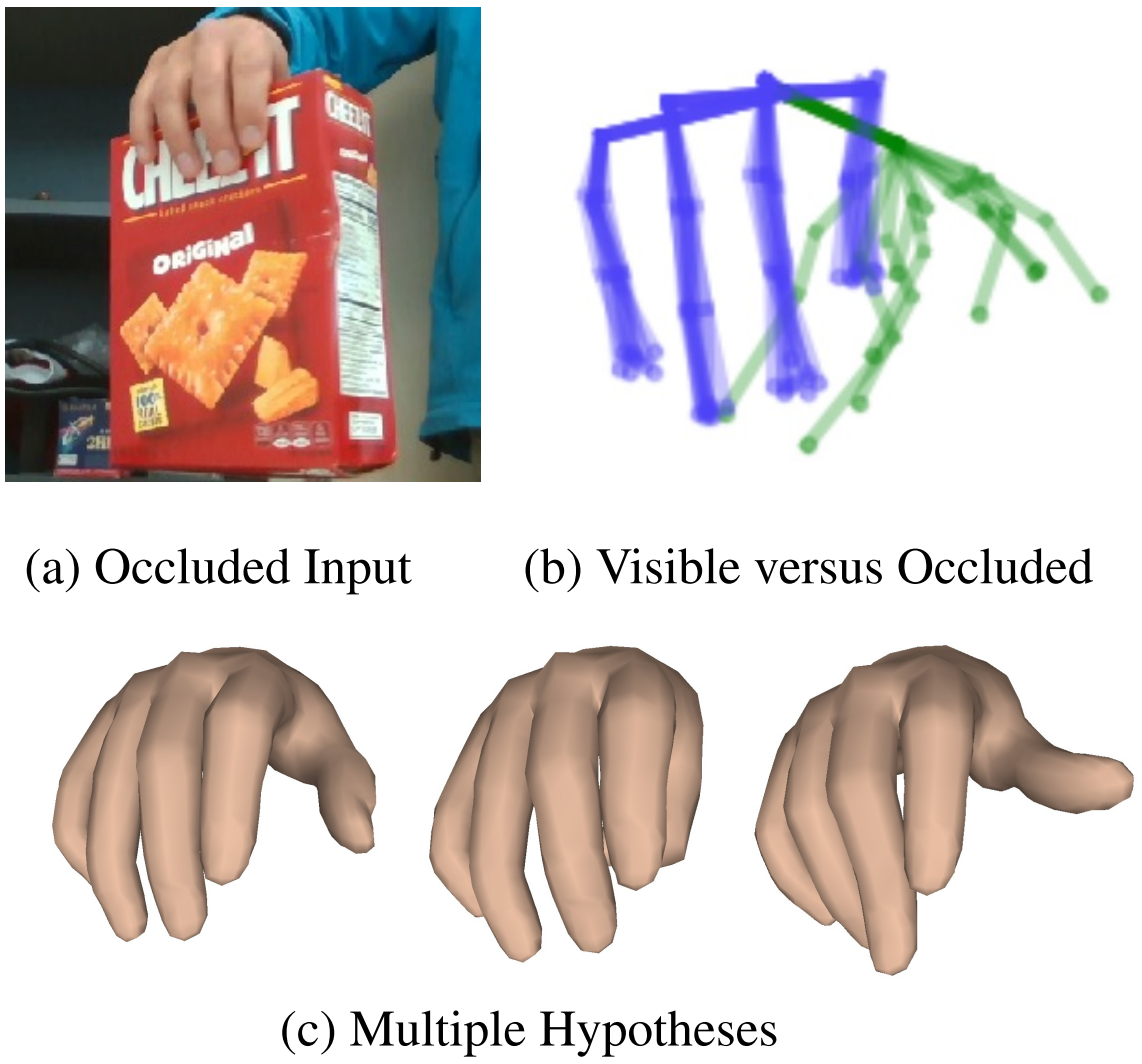

We mainly tackle the problem of training a human mesh recovery (HMR) model using only visible 2D keypoints, which are easy to annotate, so that it models ambiguity (occlusion, depth ambiguity, etc.) and generates multiple feasible, accurate, and diverse hypotheses. The work also addresses how generative models can help discriminative tasks. The key idea is that when the target data distribution is defined by knowledge rather than by data samples under a probabilistic framework, the KL divergence (KLD) naturally yields an entropy term that would otherwise be missing.
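As a rough sketch of where the entropy term comes from, the standard definition of the KL divergence can be expanded as follows. The symbols are assumptions for illustration and may not match the paper's notation: $q_\theta(x \mid c)$ is the learned distribution over hypotheses $x$ given image features $c$, and $p(x \mid c)$ is a target distribution specified by knowledge (for example, agreement with the visible 2D keypoints) rather than by samples.

$$
\mathrm{KL}\big(q_\theta \,\|\, p\big)
= \mathbb{E}_{x \sim q_\theta}\big[\log q_\theta(x \mid c)\big] - \mathbb{E}_{x \sim q_\theta}\big[\log p(x \mid c)\big]
= -\mathcal{H}(q_\theta) - \mathbb{E}_{x \sim q_\theta}\big[\log p(x \mid c)\big]
$$

Minimizing this quantity both fits the target and maximizes the entropy $\mathcal{H}(q_\theta)$. By contrast, when the target is available only through data samples, the usual objective is $\mathrm{KL}(p_{\text{data}} \,\|\, q_\theta) = -\mathbb{E}_{x \sim p_{\text{data}}}[\log q_\theta(x \mid c)] + \text{const}$, which is the negative log-likelihood and contains no explicit entropy term for $q_\theta$.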

Please find the hand experiments here.

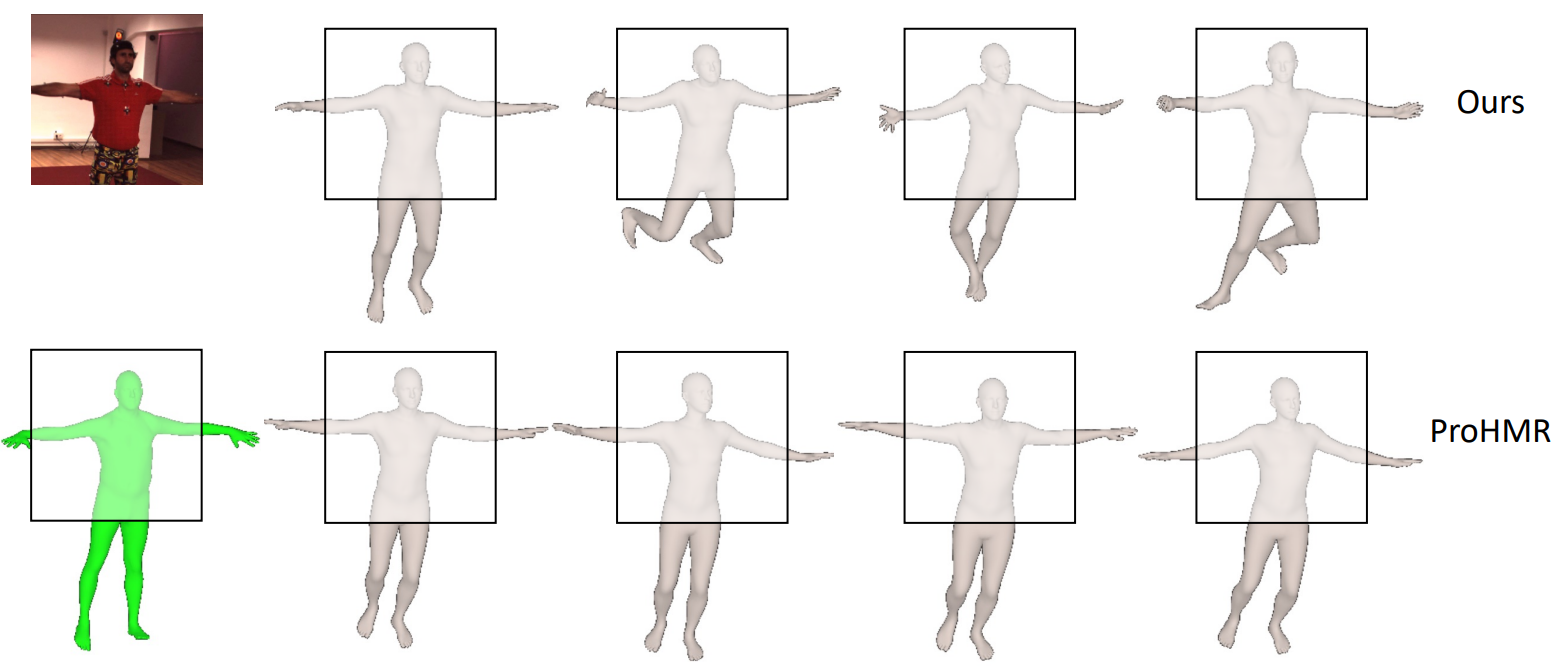

Our method can be adapted to a variety of backbone models. Simply use the ProHMR code repo to load our pre-trained model weights to perform inference and evaluation.
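One way to read this backbone independence is that the two objectives differ only in which samples the model's log-density is evaluated on. The toy sketch below illustrates that difference with a 1D Gaussian standing in for the conditional flow. It is not the repository's code: the function names `gaussian_log_prob`, `nll_loss`, and `entropy_loss` are hypothetical, and only the Python standard library is used.

```python
import math
import random


def gaussian_log_prob(x, mu, sigma):
    """Log-density of N(mu, sigma^2) at x (stand-in for a flow's log_prob)."""
    return -0.5 * math.log(2 * math.pi * sigma ** 2) - (x - mu) ** 2 / (2 * sigma ** 2)


def nll_loss(data, mu, sigma):
    """NLL: negated mean log-density evaluated on externally given data samples."""
    return -sum(gaussian_log_prob(x, mu, sigma) for x in data) / len(data)


def entropy_loss(mu, sigma, num_samples, rng):
    """Mean log-density of the model's OWN samples.

    This is a Monte Carlo estimate of the negative entropy, so minimizing it
    pushes the model toward more spread-out (more diverse) samples.
    """
    samples = [rng.gauss(mu, sigma) for _ in range(num_samples)]
    return sum(gaussian_log_prob(x, mu, sigma) for x in samples) / num_samples


rng = random.Random(0)  # fixed seed so the estimates are reproducible
narrow = entropy_loss(0.0, 0.5, 20000, rng)
wide = entropy_loss(0.0, 2.0, 20000, rng)

# Closed-form negative entropy of N(0, 2^2), for comparison with the estimate.
exact_wide = -0.5 * math.log(2 * math.pi * math.e * 2.0 ** 2)
```

In this toy setting the wider Gaussian receives the lower entropy loss, which is the behavior one would want from a term that encourages diverse hypotheses. In practice it would be combined with terms that keep the hypotheses consistent with the evidence.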

NLL (Negative Log-likelihood) Loss

```python
log_prob, _ = self.flow.log_prob(smpl_params, conditioning_feats[has_smpl_params])
loss_nll = -log_prob.mean()
```

VS. Our Entropy Loss

```python
pred_smpl_params, pred_cam, log_prob, _, pred_pose_6d = self.flow(conditioning_feats, num_samples=num_samples-1)
log_prob_ent = output['log_prob'][:, 1:]
loss_ent = log_prob_ent.mean()
```

Please cite our paper if you happen to use this codebase:

```bibtex
@inproceedings{chen2023MHEntropy,
  title={{MHEntropy}: Multiple Hypotheses Meet Entropy for Pose and Shape Recovery},
  author={Chen, Rongyu and Yang, Linlin and Yao, Angela},
  booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},
  year={2023}
}
```