Zongming Li1,*, Tianheng Cheng1,*, Shoufa Chen2, Peize Sun2, Haocheng Shen3, Longjin Ran3, Xiaoxin Chen3, Wenyu Liu1, Xinggang Wang1,📧

1 Huazhong University of Science and Technology, 2 The University of Hong Kong, 3 vivo AI Lab

(* equal contribution, 📧 corresponding author)

[2024-10-31]: The code and models have been released!

[2024-10-04]: We have released the technical report of ControlAR. Code, models, and demos are coming soon!

-

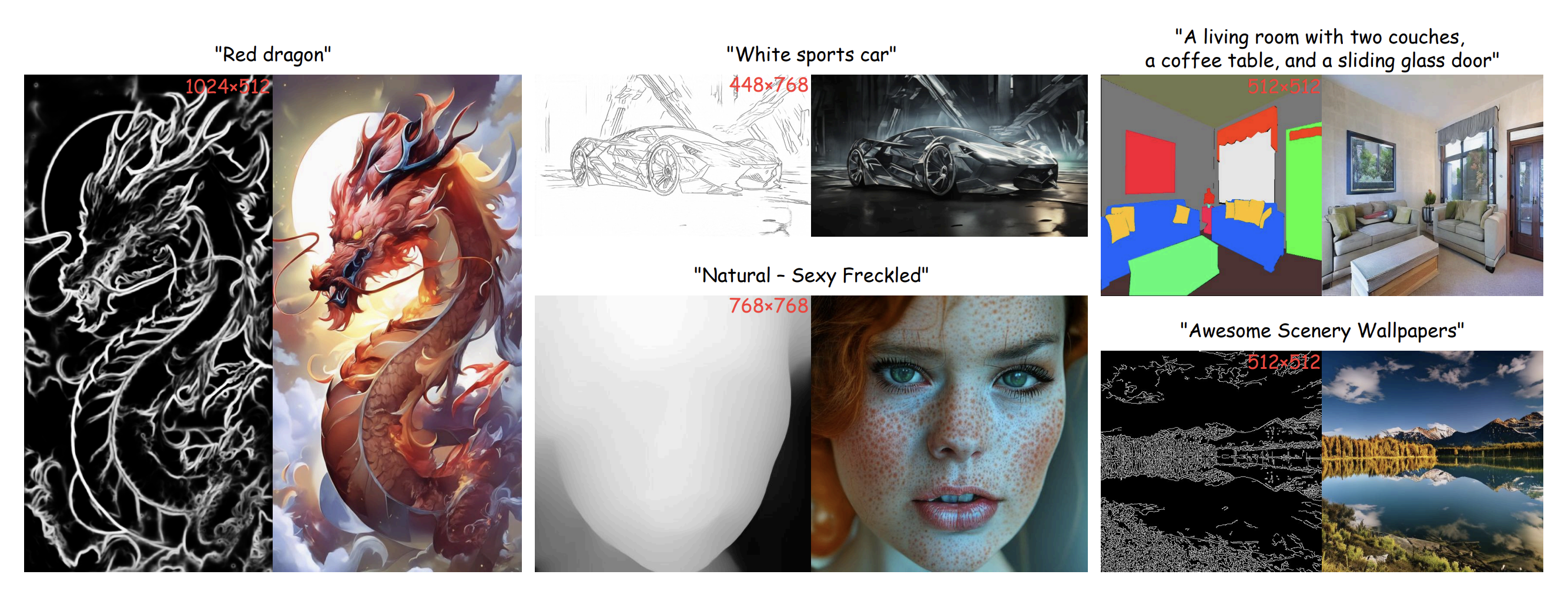

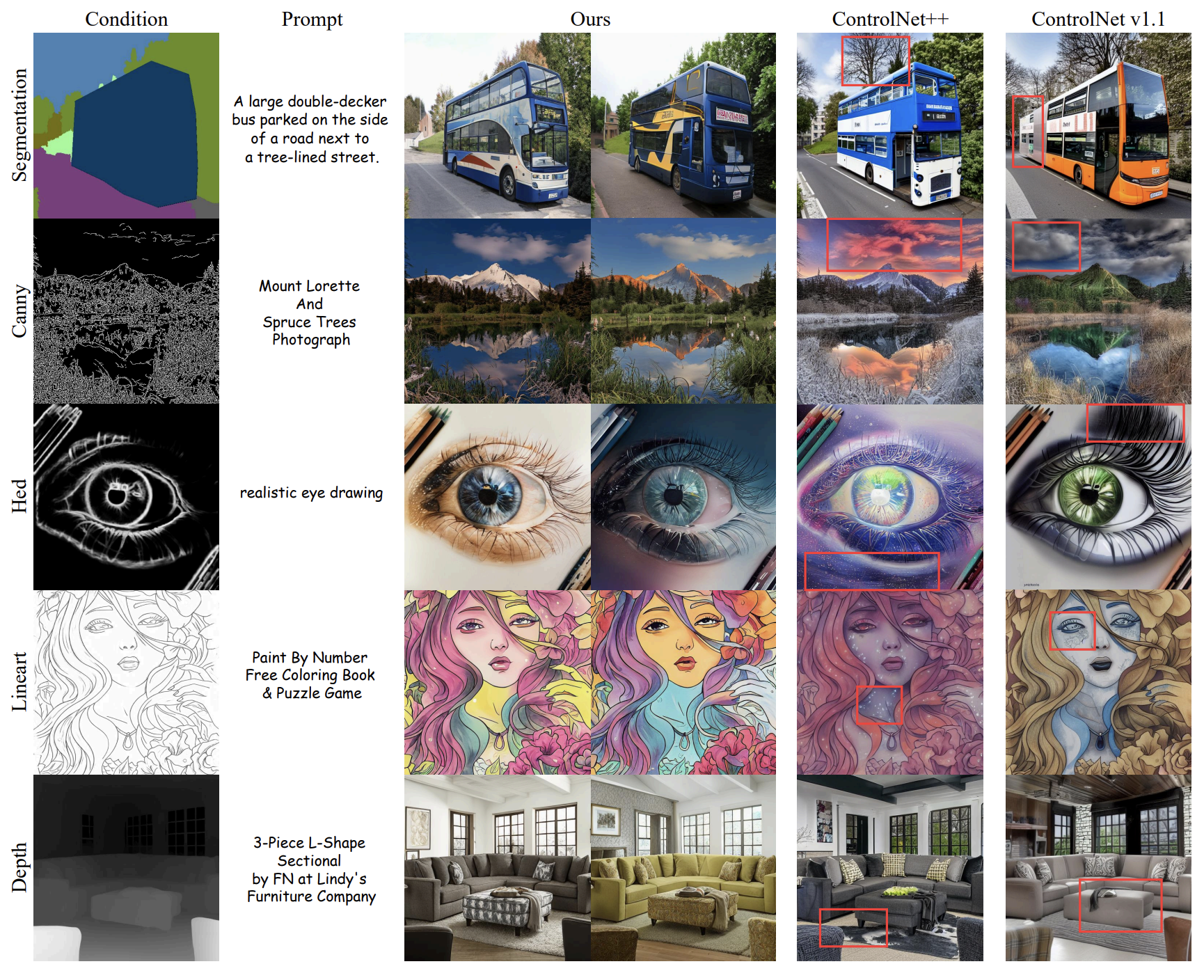

ControlAR explores an effective yet simple conditional decoding strategy for adding spatial controls to autoregressive models, e.g., LlamaGen, from a sequence perspective.

-

ControlAR supports arbitrary-resolution image generation with autoregressive models without hand-crafted special tokens or resolution-aware prompts.
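To make the arbitrary-resolution point concrete, the sketch below estimates how many image tokens an autoregressive model has to decode for a given output size. It assumes that the `ds16` in the tokenizer name `vq_ds16_t2i.pt` denotes a 16x spatial downsampling factor and that each latent position becomes one token; `image_token_count` is a hypothetical helper for illustration, not part of the ControlAR code base.

```python
def image_token_count(height: int, width: int, downsample: int = 16) -> int:
    """Estimate the number of image tokens for an image of the given size.

    Assumption: the VQ tokenizer maps each `downsample` x `downsample`
    pixel patch to exactly one token (suggested by the `ds16` in the
    tokenizer file name, not confirmed by this README).
    """
    if height % downsample or width % downsample:
        raise ValueError("height and width must be multiples of the downsample factor")
    return (height // downsample) * (width // downsample)


if __name__ == "__main__":
    # Square sizes that appear in the commands of this README.
    for size in (256, 512, 768):
        print(size, image_token_count(size, size))
```

Under that assumption a 512x512 image corresponds to 1024 tokens and a 768x768 image to 2304 tokens, so the sequence length grows with the requested resolution.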

- release code & models.

- release demo code and HuggingFace demo.

We provide both quantitative and qualitative comparisons with diffusion-based methods in the technical report!

We have released text-to-image ControlAR checkpoints for different controls and settings, including arbitrary-resolution generation.

| AR Model | Type | Control | Arbitrary-Resolution | Checkpoint |
|---|---|---|---|---|
| LlamaGen-XL | t2i | Canny Edge | ✅ | ckpt |
| LlamaGen-XL | t2i | Depth | ✅ | ckpt |
| LlamaGen-XL | t2i | HED Edge | ❌ | ckpt |
| LlamaGen-XL | t2i | Seg. Mask | ❌ | ckpt |

conda create -n ControlAR python=3.10

git clone https://github.com/hustvl/ControlAR.git

cd ControlAR

pip install torch==2.1.2+cu118 --extra-index-url https://download.pytorch.org/whl/cu118

pip install -r requirements.txt

pip3 install -U openmim

mim install mmengine

mim install "mmcv==2.1.0"

pip3 install "mmsegmentation>=1.0.0"

pip3 install mmdet

git clone https://github.com/open-mmlab/mmsegmentation.git

| tokenizer | text encoder | LlamaGen-B | LlamaGen-L | LlamaGen-XL |
|---|---|---|---|---|
| vq_ds16_t2i.pt | flan-t5-xl | c2i_B_256.pt | c2i_L_256.pt | t2i_XL_512.pt |

We recommend storing them in the following structure:

    |---checkpoints
          |---t2i
                |---canny/canny_MR.safetensors
                |---hed/hed.safetensors
                |---depth/depth_MR.safetensors
                |---seg/seg_cocostuff.safetensors
          |---t5-ckpt
                |---flan-t5-xl
                      |---config.json
                      |---pytorch_model-00001-of-00002.bin
                      |---pytorch_model-00002-of-00002.bin
                      |---pytorch_model.bin.index.json
                      |---tokenizer.json
          |---vq
                |---vq_ds16_c2i.pt
                |---vq_ds16_t2i.pt
          |---llamagen (Only necessary for training)
                |---c2i_B_256.pt
                |---c2i_L_256.pt
                |---t2i_XL_stage2_512.pt
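Before running the sampling scripts, it can help to confirm that the files are where the layout above expects them. The following is a minimal sketch, assuming that `t2i`, `t5-ckpt`, `vq`, and `llamagen` sit directly under `checkpoints` as the listing suggests; `missing_checkpoints`, `INFERENCE_FILES`, and `TRAINING_FILES` are hypothetical names introduced here and are not part of the repository.

```python
from pathlib import Path

# Relative paths copied from the recommended layout above.
INFERENCE_FILES = [
    "t2i/canny/canny_MR.safetensors",
    "t2i/hed/hed.safetensors",
    "t2i/depth/depth_MR.safetensors",
    "t2i/seg/seg_cocostuff.safetensors",
    "t5-ckpt/flan-t5-xl/config.json",
    "t5-ckpt/flan-t5-xl/pytorch_model-00001-of-00002.bin",
    "t5-ckpt/flan-t5-xl/pytorch_model-00002-of-00002.bin",
    "t5-ckpt/flan-t5-xl/pytorch_model.bin.index.json",
    "t5-ckpt/flan-t5-xl/tokenizer.json",
    "vq/vq_ds16_c2i.pt",
    "vq/vq_ds16_t2i.pt",
]
# The layout marks the llamagen weights as only necessary for training.
TRAINING_FILES = [
    "llamagen/c2i_B_256.pt",
    "llamagen/c2i_L_256.pt",
    "llamagen/t2i_XL_stage2_512.pt",
]


def missing_checkpoints(root="checkpoints", training=False):
    """Return the expected relative paths that are not files under `root`."""
    expected = INFERENCE_FILES + (TRAINING_FILES if training else [])
    return [rel for rel in expected if not (Path(root) / rel).is_file()]


if __name__ == "__main__":
    # Run from the repository root; prints nothing when everything is in place.
    for rel in missing_checkpoints(training=False):
        print("missing:", rel)
```

Passing `training=True` additionally checks the LlamaGen weights.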

Coming soon...

python autoregressive/sample/sample_c2i.py \
--vq-ckpt checkpoints/vq/vq_ds16_c2i.pt \
--gpt-ckpt checkpoints/c2i/canny/LlamaGen-L.pt \
--gpt-model GPT-L --seed 0 --condition-type canny

Generate an image using HED edges and text-to-image ControlAR:

python autoregressive/sample/sample_t2i.py \
--vq-ckpt checkpoints/vq/vq_ds16_t2i.pt \
--gpt-ckpt checkpoints/t2i/hed/hed.safetensors \
--gpt-model GPT-XL --image-size 512 \
--condition-type hed --seed 0 --condition-path condition/example/t2i/multigen/eye.png

Generate an image using a segmentation mask and text-to-image ControlAR:

python autoregressive/sample/sample_t2i.py \
--vq-ckpt checkpoints/vq/vq_ds16_t2i.pt \
--gpt-ckpt checkpoints/t2i/seg/seg_cocostuff.safetensors \
--gpt-model GPT-XL --image-size 512 \
--condition-type seg --seed 0 --condition-path condition/example/t2i/cocostuff/doll.png \
--prompt 'A stuffed animal wearing a mask and a leash, sitting on a pink blanket'

Generate an arbitrary-resolution image (here with --image-size 768) using a depth map:

python3 autoregressive/sample/sample_t2i_MR.py --vq-ckpt checkpoints/vq/vq_ds16_t2i.pt \
--gpt-ckpt checkpoints/t2i/depth/depth_MR.safetensors --gpt-model GPT-XL --image-size 768 \
--condition-type depth --condition-path condition/example/t2i/multi_resolution/bird.jpg \
--prompt 'colorful bird' --seed 0

Generate an arbitrary-resolution image using Canny edges:

python3 autoregressive/sample/sample_t2i_MR.py --vq-ckpt checkpoints/vq/vq_ds16_t2i.pt \
--gpt-ckpt checkpoints/t2i/canny/canny_MR.safetensors --gpt-model GPT-XL --image-size 768 \
--condition-type canny --condition-path condition/example/t2i/multi_resolution/bird.jpg \
--prompt 'colorful bird' --seed 0

We provide the dataset details for evaluation and training. If you don't want to train ControlAR, just download the validation splits.

- Download ImageNet and save it to data/imagenet/data.

- Download ADE20K with captions (~7GB) and save the .parquet files to data/Captioned_ADE20K/data.

- Download COCOStuff with captions (~62GB) and save the .parquet files to data/Captioned_COCOStuff/data.

- Download MultiGen-20M (~1.22TB) and save the .parquet files to data/MultiGen20M/data.
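Because these downloads are large, a quick check that the .parquet files ended up in the expected directories can save a failed pre-processing run. The sketch below only counts files; `count_parquet_files` and `PARQUET_DIRS` are hypothetical names, and ImageNet is left out because the list above does not say it is stored as .parquet files.

```python
from pathlib import Path

# Directories named in the download list above.
PARQUET_DIRS = (
    "data/Captioned_ADE20K/data",
    "data/Captioned_COCOStuff/data",
    "data/MultiGen20M/data",
)


def count_parquet_files(base=".", dirs=PARQUET_DIRS):
    """Map each dataset directory to its number of .parquet files.

    A missing directory is reported as 0 rather than raising an error.
    """
    counts = {}
    for rel in dirs:
        folder = Path(base) / rel
        counts[rel] = len(list(folder.glob("*.parquet"))) if folder.is_dir() else 0
    return counts


if __name__ == "__main__":
    # Run from the repository root.
    for rel, n in count_parquet_files().items():
        print(f"{rel}: {n} .parquet file(s)")
```

A count of 0 for a dataset you intend to use indicates that the download or the target path needs another look.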

To save training time, we use the tokenizer to pre-process the images together with their text prompts.

- ImageNet

bash scripts/autoregressive/extract_file_imagenet.sh \
--vq-ckpt checkpoints/vq/vq_ds16_c2i.pt \
--data-path data/imagenet/data/val \
--code-path data/imagenet/val/imagenet_code_c2i_flip_ten_crop \
--ten-crop --crop-range 1.1 --image-size 256

- ADE20K

bash scripts/autoregressive/extract_file_ade.sh \
--vq-ckpt checkpoints/vq/vq_ds16_t2i.pt \
--data-path data/Captioned_ADE20K/data --code-path data/Captioned_ADE20K/val \
--ten-crop --crop-range 1.1 --image-size 512 --split validation

- COCOStuff

bash scripts/autoregressive/extract_file_cocostuff.sh \
--vq-ckpt checkpoints/vq/vq_ds16_t2i.pt \
--data-path data/Captioned_COCOStuff/data --code-path data/Captioned_COCOStuff/val \
--ten-crop --crop-range 1.1 --image-size 512 --split validation

- MultiGen

bash scripts/autoregressive/extract_file_multigen.sh \
--vq-ckpt checkpoints/vq/vq_ds16_t2i.pt \
--data-path data/MultiGen20M/data --code-path data/MultiGen20M/val \
--ten-crop --crop-range 1.1 --image-size 512 --split validation

bash scripts/autoregressive/test_c2i.sh \
--vq-ckpt ./checkpoints/vq/vq_ds16_c2i.pt \
--gpt-ckpt ./checkpoints/c2i/canny/LlamaGen-L.pt \
--code-path /path/imagenet/val/imagenet_code_c2i_flip_ten_crop \
--gpt-model GPT-L --condition-type canny --get-condition-img True \
--sample-dir ./sample --save-image True

python create_npz.py --generated-images ./sample/imagenet/canny

Then download the ImageNet validation data, which contains 10,000 images, or use the whole validation set as reference data by running val.sh.

Calculate the FID score:

python evaluations/c2i/evaluator.py /path/imagenet/val/FID/VIRTUAL_imagenet256_labeled.npz \
sample/imagenet/canny.npz

Download Mask2Former (weight) and save it to evaluations/.

Use this command to get 2000 images based on the segmentation mask:

bash scripts/autoregressive/test_t2i.sh --vq-ckpt checkpoints/vq/vq_ds16_t2i.pt \
--gpt-ckpt checkpoints/t2i/seg/seg_ade20k.pt \
--code-path data/Captioned_ADE20K/val --gpt-model GPT-XL --image-size 512 \
--sample-dir sample/ade20k --condition-type seg --seed 0

Calculate mIoU of the segmentation masks from the generated images:

python evaluations/ade20k_mIoU.py

Download DeepLabV3 (weight) and save it to evaluations/.

Generate images using segmentation masks as condition controls:

bash scripts/autoregressive/test_t2i.sh --vq-ckpt checkpoints/vq/vq_ds16_t2i.pt \
--gpt-ckpt checkpoints/t2i/seg/seg_cocostuff.pt \
--code-path data/Captioned_COCOStuff/val --gpt-model GPT-XL --image-size 512 \
--sample-dir sample/cocostuff --condition-type seg --seed 0

Calculate mIoU of the segmentation masks from the generated images:

python evaluations/cocostuff_mIoU.py

We adopt generation with HED edges as the example:

Generate 5000 images based on the HED edges generated from validation images:

bash scripts/autoregressive/test_t2i.sh --vq-ckpt checkpoints/vq/vq_ds16_t2i.pt \
--gpt-ckpt checkpoints/t2i/hed/hed.safetensors --code-path data/MultiGen20M/val \
--gpt-model GPT-XL --image-size 512 --sample-dir sample/multigen/hed \
--condition-type hed --seed 0

Evaluate the conditional consistency (SSIM):

python evaluations/hed_ssim.py

Calculate the FID score:

python evaluations/clean_fid.py --val-images data/MultiGen20M/val/image --generated-images sample/multigen/hed/visualization

Train class-to-image ControlAR with Canny edges:

bash scripts/autoregressive/train_c2i_canny.sh --cloud-save-path output \
--code-path data/imagenet/train/imagenet_code_c2i_flip_ten_crop \
--image-size 256 --gpt-model GPT-B --gpt-ckpt checkpoints/llamagen/c2i_B_256.pt

Train text-to-image ControlAR with Canny edges:

bash scripts/autoregressive/train_t2i_canny.sh

The development of ControlAR is based on LlamaGen, ControlNet, ControlNet++, and AiM, and we sincerely thank the contributors for those great works!

If you find ControlAR useful in your research or applications, please consider giving us a star 🌟 and citing it with the following BibTeX entry.

@article{li2024controlar,
      title={ControlAR: Controllable Image Generation with Autoregressive Models},
      author={Zongming Li and Tianheng Cheng and Shoufa Chen and Peize Sun and Haocheng Shen and Longjin Ran and Xiaoxin Chen and Wenyu Liu and Xinggang Wang},
      year={2024},
      eprint={2410.02705},
      archivePrefix={arXiv},
      primaryClass={cs.CV},
      url={https://arxiv.org/abs/2410.02705},
}