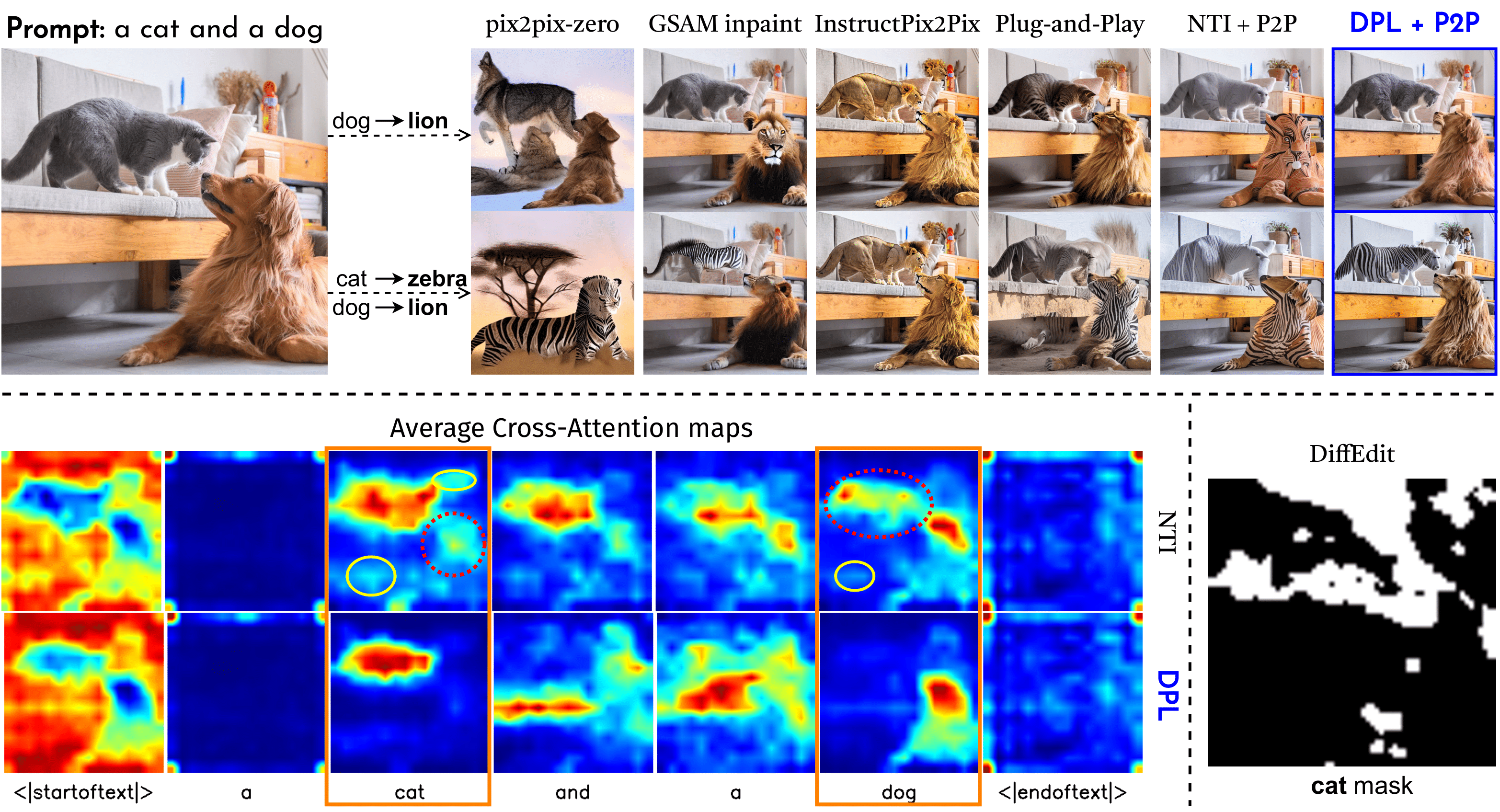

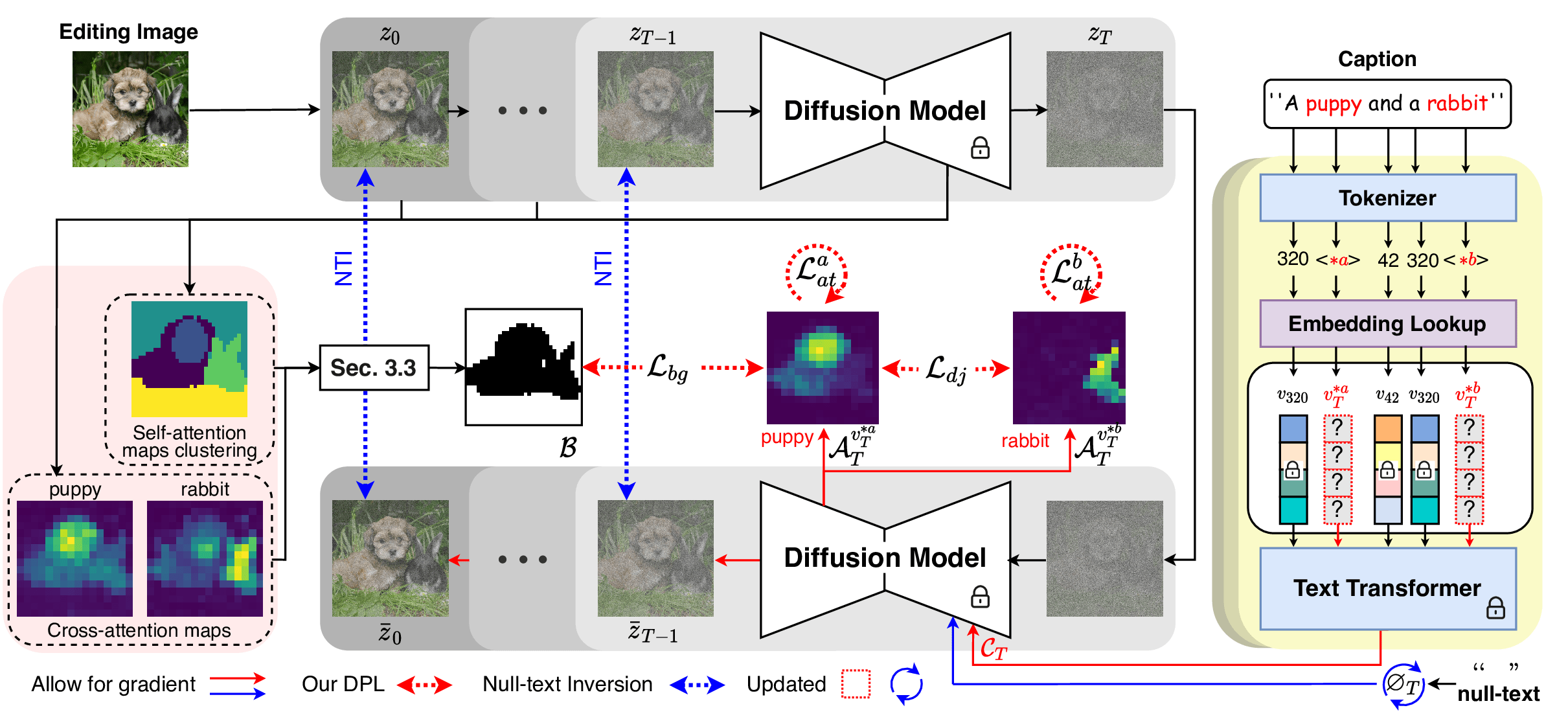

Dynamic Prompt Learning: Addressing Cross-Attention Leakage for Text-Based Image Editing (NeurIPS 2023)

The required packages are listed in "torch2.yml".

For images without captions, we use the BLIP model to generate them. You can switch to BLIP-2 for better performance.

python _1_BLIP_caption.py --input_image https://huggingface.co/datasets/GoGiants1/TMDBEval500/resolve/main/TMDBEval500/images/10.jpg --results_folder ./output
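To caption several images in one go, the command above can be wrapped in a small driver. The following is only a minimal sketch: `build_command` and `run_batch` are hypothetical helpers that are not part of this repo, the image paths are placeholders, and the only things taken from the command above are the script name and the `--input_image` and `--results_folder` flags.

```python
import subprocess
import sys
from typing import List


def build_command(script: str, image: str, results_folder: str = "./output") -> List[str]:
    """Build the argv list for one run of a pipeline script.

    The flag names come from the commands in this README; the helper
    itself is a hypothetical convenience wrapper.
    """
    return [sys.executable, script, "--input_image", image,
            "--results_folder", results_folder]


def run_batch(script: str, images: List[str], results_folder: str = "./output",
              dry_run: bool = True) -> List[List[str]]:
    """Build one command per image and, unless dry_run is set, execute them in order."""
    commands = [build_command(script, image, results_folder) for image in images]
    if not dry_run:
        for command in commands:
            # check=True stops the batch at the first failing image.
            subprocess.run(command, check=True)
    return commands


if __name__ == "__main__":
    # Placeholder image paths, used only for illustration.
    for command in run_batch("_1_BLIP_caption.py", ["./images/a.jpg", "./images/b.jpg"]):
        print(" ".join(command))
```

The same helper can be pointed at "_2_DDIM_inv.py", since it takes the same two flags. Whether results from different images overwrite each other in a shared folder is not stated here, so passing a separate `results_folder` per image may be the safer choice.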

We apply DDIM inversion to obtain the initial noise. The script also visualizes the attention maps and features using clustering or PCA, as shown in the paper.

python _2_DDIM_inv.py --input_image https://huggingface.co/datasets/GoGiants1/TMDBEval500/resolve/main/TMDBEval500/images/12.jpg --results_folder ./output
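For readers unfamiliar with DDIM inversion, the idea can be sketched on plain Python lists. This is a toy illustration and not the code in "_2_DDIM_inv.py": the linear beta schedule, the function names, and the `toy_eps` noise predictor are all assumptions, and a real run would use the diffusion model's U-Net on image latents.

```python
import math
from typing import Callable, List

Vector = List[float]
EpsModel = Callable[[Vector, int], Vector]


def alpha_bar_schedule(num_steps: int, beta_start: float = 1e-4,
                       beta_end: float = 2e-2) -> List[float]:
    """Cumulative products of (1 - beta_t) for an assumed linear beta schedule."""
    alpha_bars, product = [], 1.0
    for i in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * i / max(num_steps - 1, 1)
        product *= 1.0 - beta
        alpha_bars.append(product)
    return alpha_bars


def ddim_move(x: Vector, eps: Vector, a_from: float, a_to: float) -> Vector:
    """Deterministic DDIM update (eta = 0) between two noise levels."""
    moved = []
    for xi, ei in zip(x, eps):
        # Predict the clean sample, then re-noise it to the target level.
        x0_pred = (xi - math.sqrt(1.0 - a_from) * ei) / math.sqrt(a_from)
        moved.append(math.sqrt(a_to) * x0_pred + math.sqrt(1.0 - a_to) * ei)
    return moved


def ddim_invert(x0: Vector, eps_model: EpsModel, alpha_bars: List[float]) -> Vector:
    """Walk from the clean latent towards noise, one level at a time."""
    levels = [1.0] + alpha_bars  # level 0 means "no noise"
    x = list(x0)
    for t in range(len(alpha_bars)):
        x = ddim_move(x, eps_model(x, t), levels[t], levels[t + 1])
    return x


def ddim_sample(x_noisy: Vector, eps_model: EpsModel, alpha_bars: List[float]) -> Vector:
    """Walk back from noise to the clean latent with the same update rule."""
    levels = [1.0] + alpha_bars
    x = list(x_noisy)
    for t in reversed(range(len(alpha_bars))):
        x = ddim_move(x, eps_model(x, t), levels[t + 1], levels[t])
    return x


def toy_eps(x: Vector, t: int) -> Vector:
    """Stand-in noise predictor that depends only on the step index."""
    return [0.05 * (t + 1)] * len(x)


if __name__ == "__main__":
    schedule = alpha_bar_schedule(50)
    latent = [0.3, -1.2, 0.7]
    noisy = ddim_invert(latent, toy_eps, schedule)
    restored = ddim_sample(noisy, toy_eps, schedule)
    print("max reconstruction error:", max(abs(a - b) for a, b in zip(latent, restored)))
```

Because `toy_eps` ignores its input, the round trip here is exact up to floating-point error. With a real noise predictor the prediction depends on the current latent, so inversion is only approximate.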

We provide a bash script to run DPL inversion:

bash ./DPL.sh

If you already have segmentation maps or detection boxes, we also provide alternative DPL inversion scripts: "_3_dpl_det_inv.py" and "_3_dpl_seg_inv.py".

We release our customized Prompt-to-Prompt (P2P) editing code in "_4_image_edit.py".

For comparison, we also include several methods that are already available in diffusers, named "comp_XXX.py".

- The best hyperparameters may vary from image to image, so we recommend exploring them for your use case. Note that "_2_DDIM_inv.py" already saves the cross-attention maps; if they are already of good quality, DPL is not necessary.

- Editing quality cannot be guaranteed even with perfect cross-attention maps; we leave this to future work.
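Whether the cross-attention maps saved after DDIM inversion are "good enough" is mostly a visual judgement. If a rough object mask happens to be available, one possible numeric check is the share of a token's attention that lands inside the object. The sketch below is a hypothetical heuristic, not a metric from this repo or the paper; `attention_mass_inside` and the toy 2x2 inputs are illustrative assumptions.

```python
from typing import Sequence

Grid = Sequence[Sequence[float]]


def attention_mass_inside(attn: Grid, mask: Grid) -> float:
    """Fraction of a token's attention mass that falls inside a binary object mask.

    Values near 1 suggest little leakage; values near 0 suggest the token
    mostly attends outside its object.
    """
    if len(attn) != len(mask) or any(len(a) != len(m) for a, m in zip(attn, mask)):
        raise ValueError("attention map and mask must have the same shape")
    total = sum(sum(row) for row in attn)
    if total <= 0:
        return 0.0
    inside = sum(a * m for a_row, m_row in zip(attn, mask) for a, m in zip(a_row, m_row))
    return inside / total


if __name__ == "__main__":
    # Toy 2x2 attention map whose mass sits mostly in the left column.
    toy_attn = [[0.4, 0.1], [0.4, 0.1]]
    toy_mask = [[1, 0], [1, 0]]
    print(attention_mass_inside(toy_attn, toy_mask))
```

A high value for every noun token would be a hint that plain DDIM inversion already gives usable maps for that image. Any cut-off on this value is a judgement call and would need tuning per dataset.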

Supplementary Material is over here.

We plan to add more bash files and example images to this repo in the future.

More experimental images are shared via Google Drive.

LocInv is an enhanced version of DPL with localization priors, namely bounding boxes or segmentation masks obtained from pretrained detection/segmentation models. The corresponding code is in "_3_dpl_seg_inv.py" and "_3_dpl_det_inv.py".
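To make the notion of a localization prior concrete, the sketch below rasterizes a detection box onto an attention-map grid and compares it with a thresholded attention map using IoU. It illustrates one plausible way to compare a prior with an attention map and is not necessarily the objective used in "_3_dpl_det_inv.py" or "_3_dpl_seg_inv.py". The function names, the 512-pixel image size, the 4x4 map, and the 0.5 threshold ratio are all assumptions.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x0, y0, x1, y1) in image pixels


def box_to_mask(box: Box, image_size: int, map_size: int) -> List[List[int]]:
    """Rasterize a pixel-space box onto a map_size x map_size grid."""
    x0, y0, x1, y1 = box
    scale = map_size / image_size
    mask = [[0] * map_size for _ in range(map_size)]
    for row in range(map_size):
        for col in range(map_size):
            # A cell belongs to the box if its centre falls inside the scaled box.
            cx, cy = col + 0.5, row + 0.5
            if x0 * scale <= cx < x1 * scale and y0 * scale <= cy < y1 * scale:
                mask[row][col] = 1
    return mask


def binarize(attn: Sequence[Sequence[float]], threshold_ratio: float = 0.5) -> List[List[int]]:
    """Keep cells whose attention is at least threshold_ratio times the peak value."""
    peak = max(max(row) for row in attn)
    if peak <= 0:
        return [[0] * len(row) for row in attn]
    return [[1 if value >= threshold_ratio * peak else 0 for value in row] for row in attn]


def iou(a: Sequence[Sequence[int]], b: Sequence[Sequence[int]]) -> float:
    """Intersection over union of two binary grids of the same shape."""
    inter = sum(x & y for row_a, row_b in zip(a, b) for x, y in zip(row_a, row_b))
    union = sum(x | y for row_a, row_b in zip(a, b) for x, y in zip(row_a, row_b))
    return inter / union if union else 0.0


if __name__ == "__main__":
    # Toy example: the box covers the left half of a 512-pixel image.
    prior = box_to_mask((0, 0, 256, 512), image_size=512, map_size=4)
    toy_attn = [[0.9, 0.8, 0.7, 0.0] for _ in range(4)]
    print(iou(binarize(toy_attn), prior))
```

A segmentation mask can be used in place of the rasterized box once it is resized to the attention-map resolution.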

If you find this repo helpful, please consider citing our papers. Thanks!

@article{wang2023DPL,
  title={Dynamic prompt learning: Addressing cross-attention leakage for text-based image editing},
  author={Wang, Kai and Yang, Fei and Yang, Shiqi and Butt, Muhammad Atif and van de Weijer, Joost},
  journal={Advances in Neural Information Processing Systems},
  volume={36},
  year={2023}
}

@article{tang2024locinv,
  title={LocInv: Localization-aware Inversion for Text-Guided Image Editing},
  author={Tang, Chuanming and Wang, Kai and Yang, Fei and van de Weijer, Joost},
  journal={CVPR 2024 AI4CC workshop},
  year={2024}
}