This Google Maps Scraper is provided for educational and research purposes only. By using this Google Maps Scraper, you agree to comply with local and international laws regarding data scraping and privacy. The authors and contributors are not responsible for any misuse of this software. This tool should not be used to violate the rights of others, for unethical purposes, or to use data in an unauthorized or illegal manner.

We take the concerns of the Google Maps Scraper Project very seriously. For any concerns, please contact Chetan Jain at chetan@omkar.cloud. We will promptly reply to your emails.

- ✅ BOTASAURUS: The All-in-One Web Scraping Framework with Anti-Detection, Parallelization, Asynchronous, and Caching Superpowers.

- ✅ GOOGLE SCRAPER: Discover Search Results from Google.

- ✅ AMAZON SCRAPER: Discover Search Results and Product Data from Amazon.

- ✅ TRIP ADVISOR SCRAPER: Discover search results of hotels and restaurants from TripAdvisor.

Google Maps Scraper helps you find Business Profiles from Google Maps.

- Limitless Scraping, Say No to costly subscriptions or expensive pay-per-result fees.

- Sort, select, and filter results.

- Scrape cities across countries.

- Scrape reviews while ensuring the privacy of reviewers is maintained.

In the next 5 minutes, you'll extract 120 Search Results from Google Maps.

If you'd like to see a demo before using the tool, I encourage you to watch this short video.

To use the tool, you must have Node.js 18+ and Python installed on your PC.

Let's get started by following these super simple steps:

1️⃣ Clone the Magic 🧙‍♀️:

git clone https://github.com/omkarcloud/google-maps-scraper

cd google-maps-scraper

2️⃣ Install Dependencies 📦:

python -m pip install -r requirements.txt

3️⃣ Get the results by running 😎:

python main.py

Once the scraping process is complete, you will find the search results in the output directory.

Note:

- If you're encountering errors on your Mac, they're likely caused by an old version of Node.js. To check your current Node.js version, run the following in your terminal:

node -v

If your version is lower than 18, please upgrade to the latest version of Node.js. After upgrading, execute the following command:

python -m javascript --install proxy-chain

This command might display some Node.js errors, but it will also provide steps to resolve them. Resolve them, and you can use the tool.

- If you don't have Node.js 18+ and Python installed, or you are still facing errors, follow the Simple FAQ here, and you will have your search results in the next 5 minutes.
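The Node.js version check described in the notes above can also be scripted. The following is a minimal sketch, not part of the scraper: `parse_node_major` and `node_is_supported` are hypothetical helper names, and the code assumes that `node -v` prints a string of the form `v18.17.0`.

```python
import shutil
import subprocess
from typing import Optional

MIN_NODE_MAJOR = 18  # this README asks for Node.js 18+


def parse_node_major(version_text: str) -> int:
    """Extract the major version from text such as 'v18.17.0'."""
    return int(version_text.strip().lstrip("v").split(".")[0])


def node_is_supported() -> Optional[bool]:
    """Return True/False for the installed Node.js, or None if node is not on PATH."""
    node_path = shutil.which("node")
    if node_path is None:
        return None
    # Equivalent to running `node -v` in a terminal.
    result = subprocess.run([node_path, "-v"], capture_output=True, text=True)
    if result.returncode != 0:
        return None
    return parse_node_major(result.stdout) >= MIN_NODE_MAJOR
```

Calling `node_is_supported()` before `python main.py` would tell you whether an upgrade is needed; a return value of `None` means Node.js could not be found or run at all.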

Open the main.py file, and update the queries list with your desired query.

queries = ["web developers in delhi"]

Gmaps.places(queries, max=5)

Add multiple queries to the queries list as follows:

queries = [
    "web developers in bangalore",
    "web developers in delhi",
]

Gmaps.places(queries, max=5)

To scrape more results than the limit set above, remove the max parameter.

By doing so, you can scrape all the Google Maps listings for a query. For example, to scrape all web developers in Bangalore, modify the code as follows:

queries = ["web developers in bangalore"]

Gmaps.places(queries)

You can scrape a maximum of 120 results per search, as Google does not display any more search results beyond that. However, don't worry about running out of results, as there are thousands of cities in the world :).

You can apply filters such as:

- min_reviews / max_reviews (e.g., 10)

- category_in (e.g., "Dental Clinic", "Dental Laboratory")

- has_website (e.g., True/False)

- has_phone (e.g., True/False)

- min_rating / max_rating (e.g., 3.5)

For instance, to scrape listings with at least 5 reviews and no more than 100 reviews, with a phone number but no website:

Gmaps.places(queries, min_reviews=5, max_reviews=100, has_phone=True, has_website=False)

To scrape listings that belong to specific categories:

Gmaps.places(queries, category_in=[Gmaps.Category.DentalClinic, Gmaps.Category.DentalLaboratory])

See the list of all supported categories here.
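To make the filter semantics concrete, here is a small stand-alone sketch of how such filters could combine. It is an illustration only, not the scraper's implementation: the `matches` helper, the dictionary keys, and the sample listings are all made up, and it assumes that every supplied filter must pass (a logical AND), as the example above suggests.

```python
# Made-up sample listings for illustration; real output contains more fields.
places = [
    {"name": "A Dental", "reviews": 50, "phone": "011-5550100", "website": None},
    {"name": "B Dental", "reviews": 3, "phone": "011-5550101", "website": None},
    {"name": "C Dental", "reviews": 80, "phone": None, "website": None},
    {"name": "D Dental", "reviews": 20, "phone": "011-5550103", "website": "https://example.com"},
]


def matches(place, min_reviews=None, max_reviews=None, has_phone=None, has_website=None):
    """Return True only if the place passes every filter that was supplied."""
    if min_reviews is not None and place["reviews"] < min_reviews:
        return False
    if max_reviews is not None and place["reviews"] > max_reviews:
        return False
    if has_phone is not None and bool(place["phone"]) != has_phone:
        return False
    if has_website is not None and bool(place["website"]) != has_website:
        return False
    return True


selected = [
    p["name"]
    for p in places
    if matches(p, min_reviews=5, max_reviews=100, has_phone=True, has_website=False)
]
print(selected)  # ['A Dental']
```

Only the first listing survives: the second has too few reviews, the third has no phone number, and the fourth has a website.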

By default, we sort the listings by the following criteria, in order of priority:

- Reviews [Businesses with more reviews come first]

- Website [Businesses more open to technology come first]

- LinkedIn [Businesses that are easier to contact come first]

- Is Spending On Ads [Businesses already investing in ads are more likely to invest in your product, so they appear first.]

However, you also have the freedom to sort them according to your preferences as follows:

- To sort by reviews:

Gmaps.places(queries, sort=[Gmaps.SORT_BY_REVIEWS_DESCENDING])

- To sort by rating:

Gmaps.places(queries, sort=[Gmaps.SORT_BY_RATING_DESCENDING])

- To sort first by reviews and then by those without a website:

Gmaps.places(queries, sort=[Gmaps.SORT_BY_REVIEWS_DESCENDING, Gmaps.SORT_BY_NOT_HAS_WEBSITE])

- To sort by name (alphabetically):

Gmaps.places(queries, sort=[Gmaps.SORT_BY_NAME_ASCENDING])

- To sort by a different field, such as category, in ascending order:

Gmaps.places(queries, sort=[[Gmaps.Fields.CATEGORIES, Gmaps.SORT_ASCENDING]])

- Or, to sort in descending order:

Gmaps.places(queries, sort=[[Gmaps.Fields.CATEGORIES, Gmaps.SORT_DESCENDING]])
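For readers who want to see what a two-level sort such as "reviews first, then no website" means in practice, the sketch below reproduces the idea with plain Python. It assumes the first sort entry is the primary key and later entries only break ties; the `sort_key` helper, the dictionary keys, and the sample data are hypothetical and not taken from the scraper.

```python
# Made-up sample listings for illustration only.
places = [
    {"name": "A", "reviews": 120, "website": "https://a.example"},
    {"name": "B", "reviews": 120, "website": None},
    {"name": "C", "reviews": 300, "website": "https://c.example"},
    {"name": "D", "reviews": 15, "website": None},
]


def sort_key(place):
    """Primary key: reviews descending. Tie-breaker: listings without a website first."""
    has_website = place["website"] is not None
    # Negating reviews gives descending order; False sorts before True.
    return (-place["reviews"], has_website)


ordered = [p["name"] for p in sorted(places, key=sort_key)]
print(ordered)  # ['C', 'B', 'A', 'D']
```

A and B have the same number of reviews, so the tie-breaker places B (no website) ahead of A.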

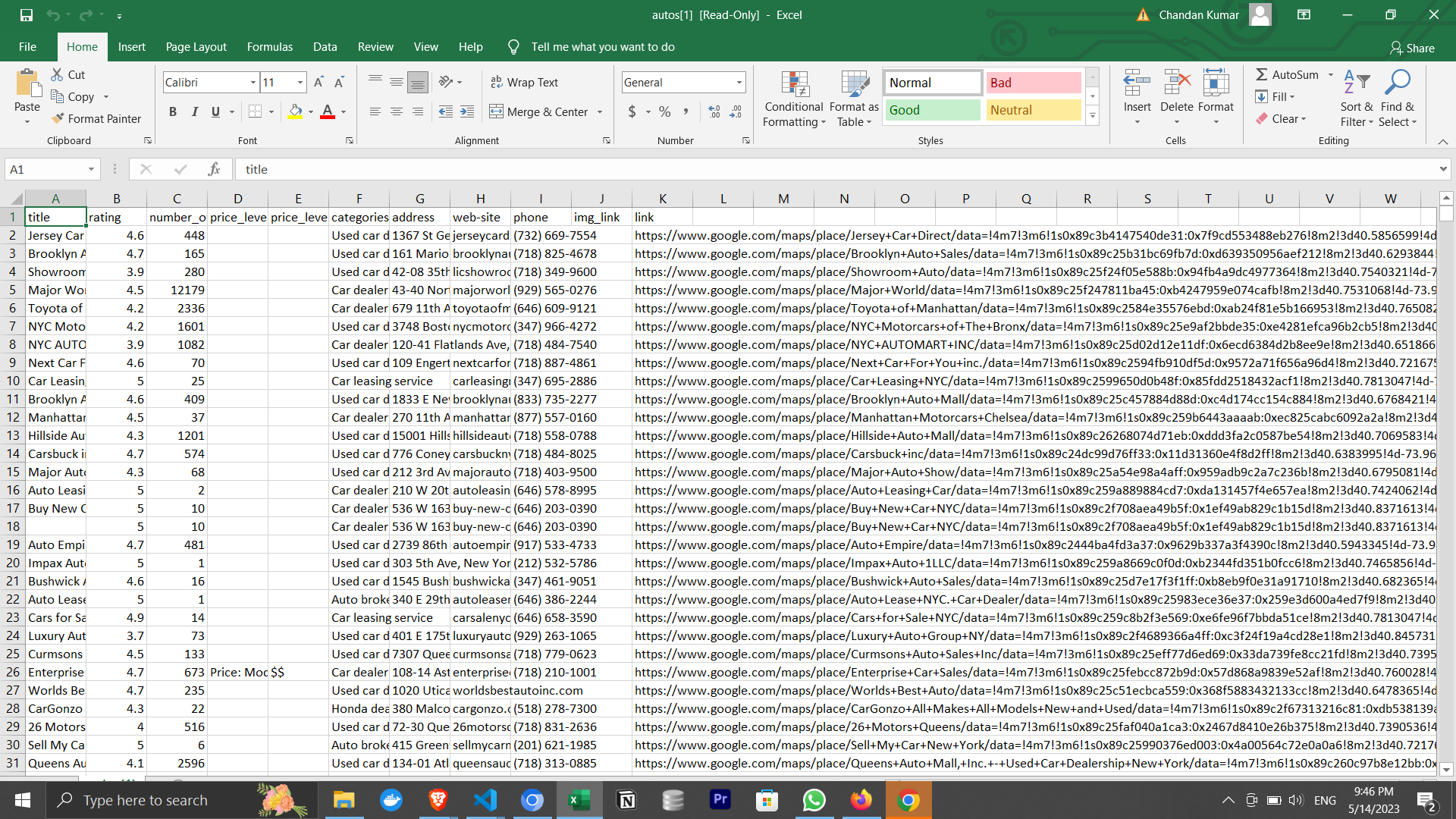

You may upgrade to the Pro Version of the Google Maps Scraper to scrape additional data points, like:

- 🌐 Website

- 📞 Phone Numbers

- 🌍 Geo Coordinates

- 💰 Price Range

- And many more data points, such as Owner details, Photos, and the About Section!

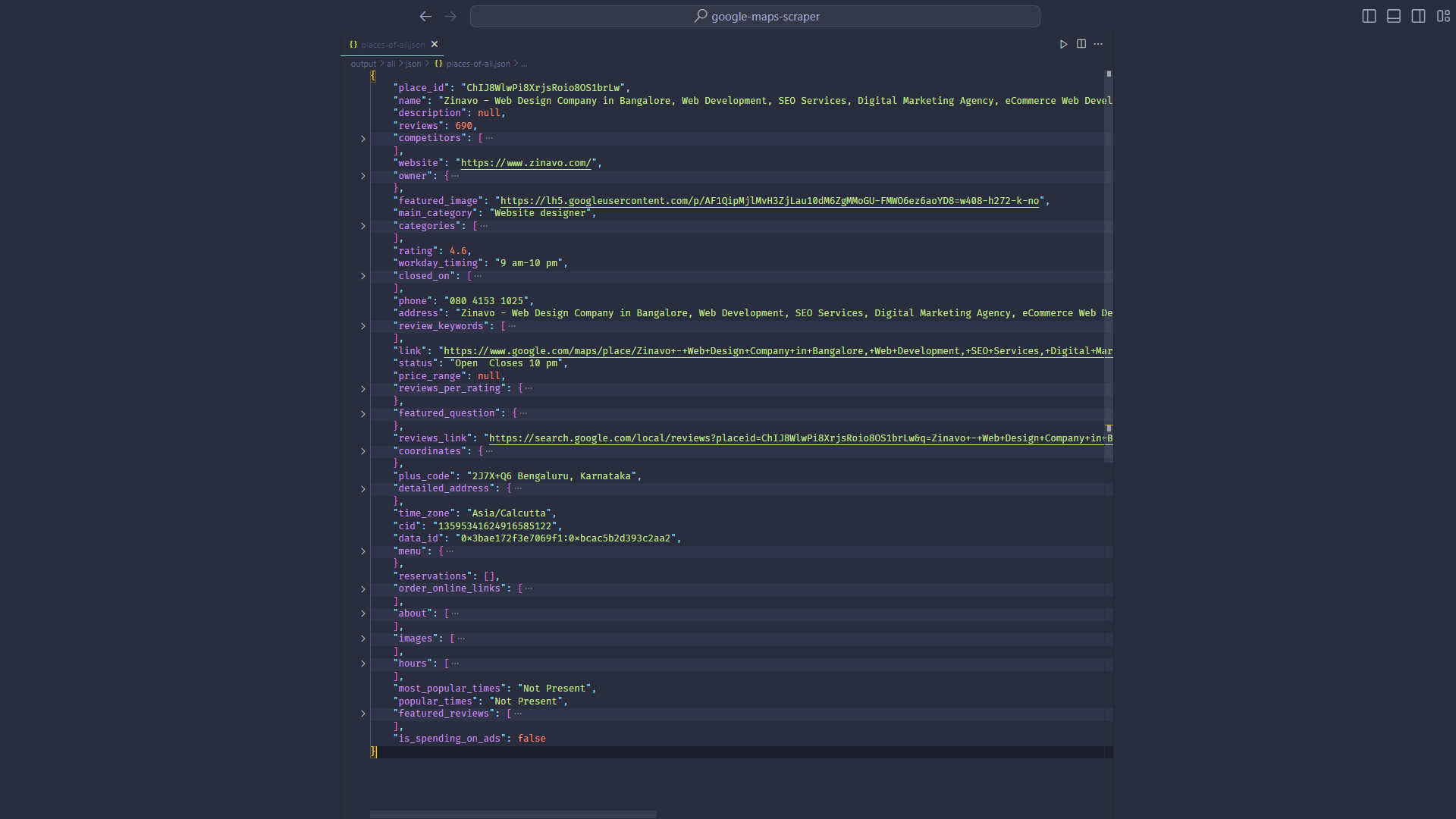

Below is a sample search result scraped by the Pro Version:

View sample search results scraped by the Pro Version here

Also, see the list of fields scraped by Pro Version here.

🔍 Comparison:

See how the Pro Version stacks up against the free version in this comparison image:

And here's the best part: the Pro Version comes with zero risk, because we offer a 30-Day Refund Policy!

Also, the Pro Version is not a monthly subscription but a one-time, risk-free investment.

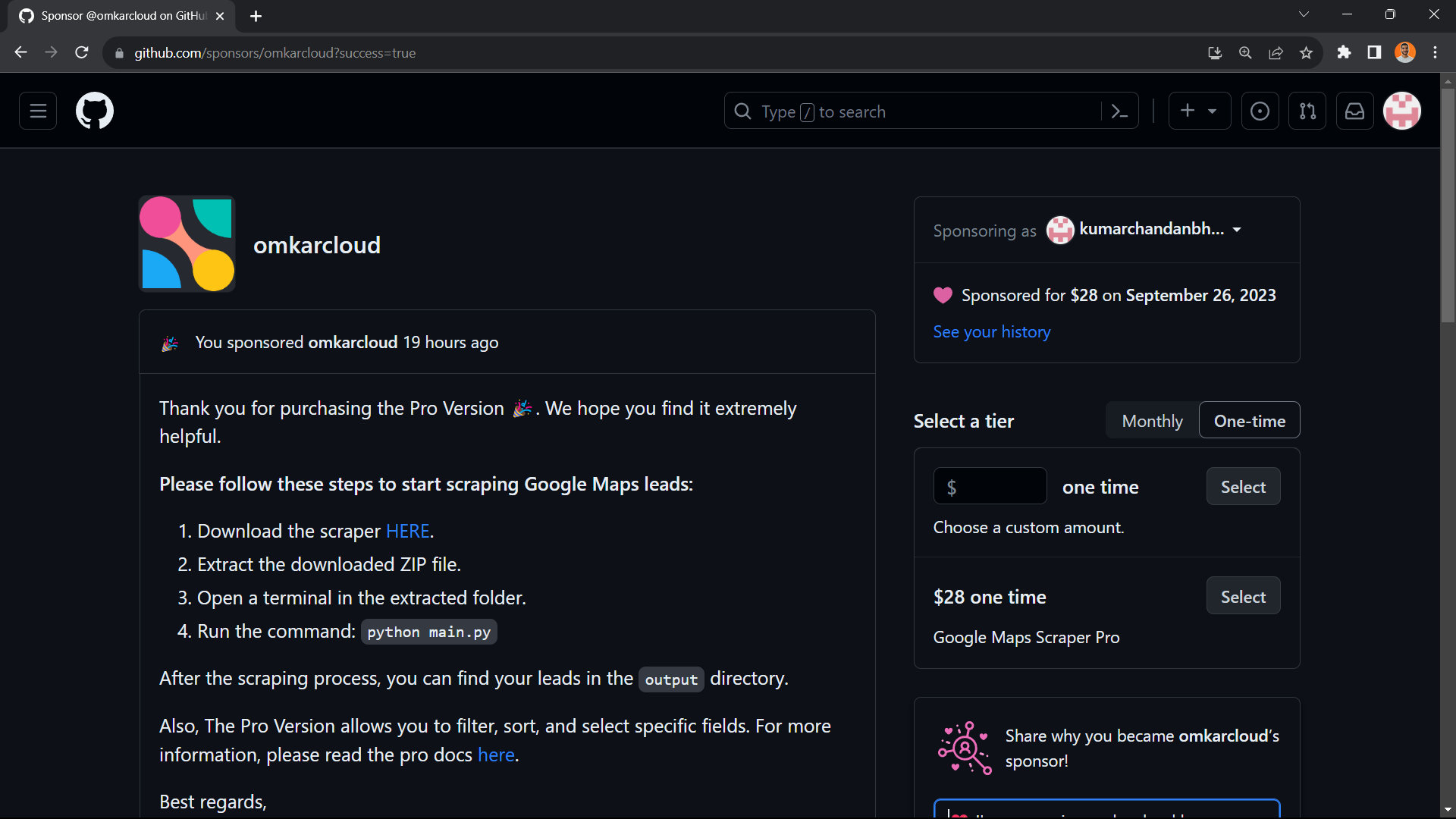

Visit the Sponsorship Page here and pay $28 by selecting the Google Maps Scraper Pro option.

After payment, you'll see a success screen with instructions on how to use the Pro Version:

We wholeheartedly believe in the value our product brings, especially since it has successfully worked for hundreds of people like you.

But, we also understand the reservations you might have.

That's why we've put the ball in your court: If, within the next 30 days, you feel that our product hasn’t met your expectations, don't hesitate. Reach out to us, and within 24 hours, we will gladly refund your money, no questions and no hassles.

The risk is entirely on us because we're confident in what we've created.

We are ethical and honest people, and we will never keep your money if you are not happy with our product. Requesting a refund is a simple process that should only take about 5 minutes. To request a refund, ensure you have one of the following:

- A PayPal Account (e.g., "myname@example.com" or "chetan@gmail.com")

- or a UPI ID (For India Only) (e.g., 'myname@bankname' or 'chetan@okhdfc')

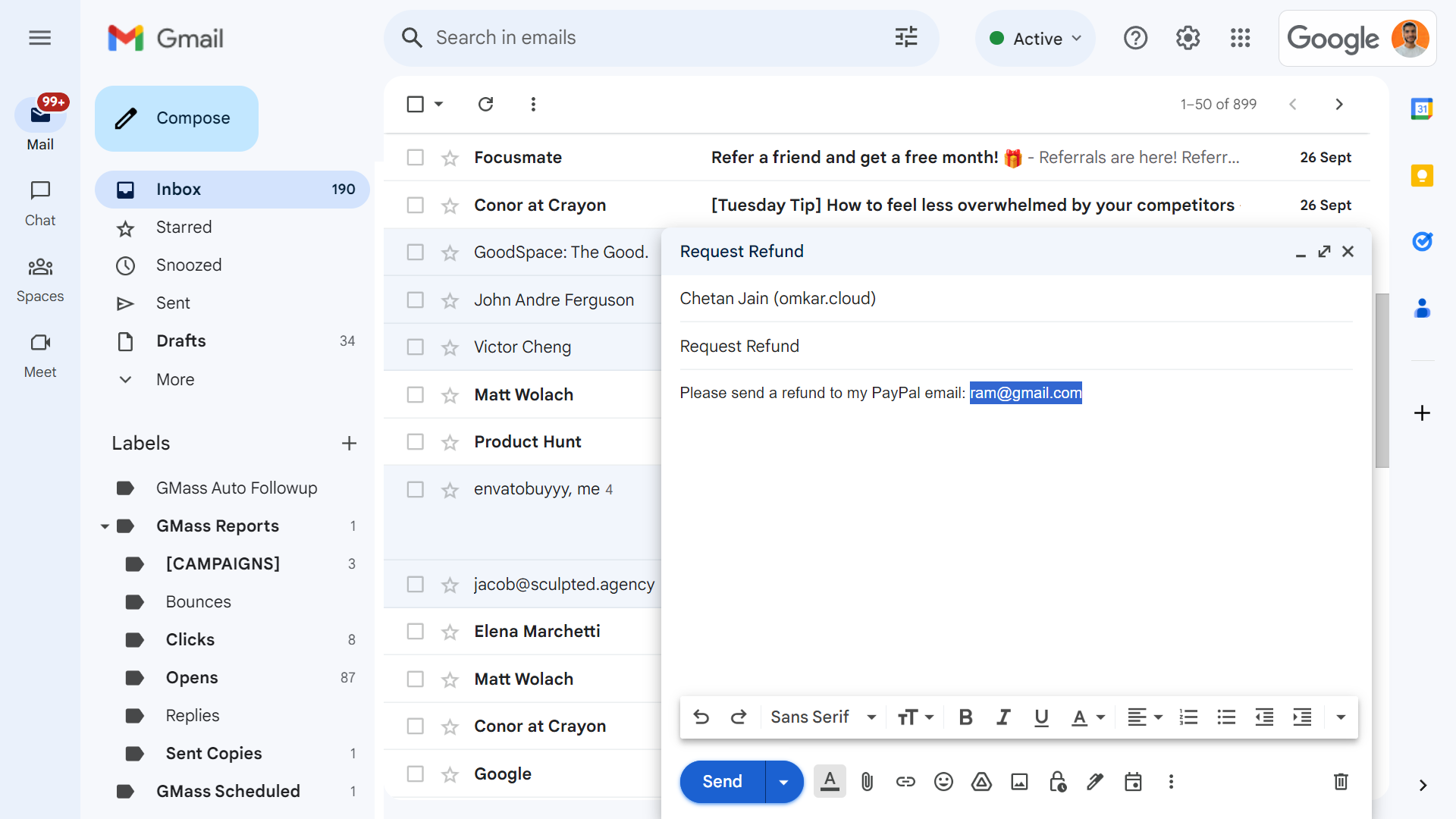

Next, follow these steps to initiate a refund:

- Send an email to chetan@omkar.cloud using the following template:

To request a refund via PayPal:

Subject: Request Refund
Content: Please send a refund to my PayPal email: myname@example.com

To request a refund via UPI (For India Only):

Subject: Request Refund
Content: Please send a refund to my UPI ID: myname@bankname

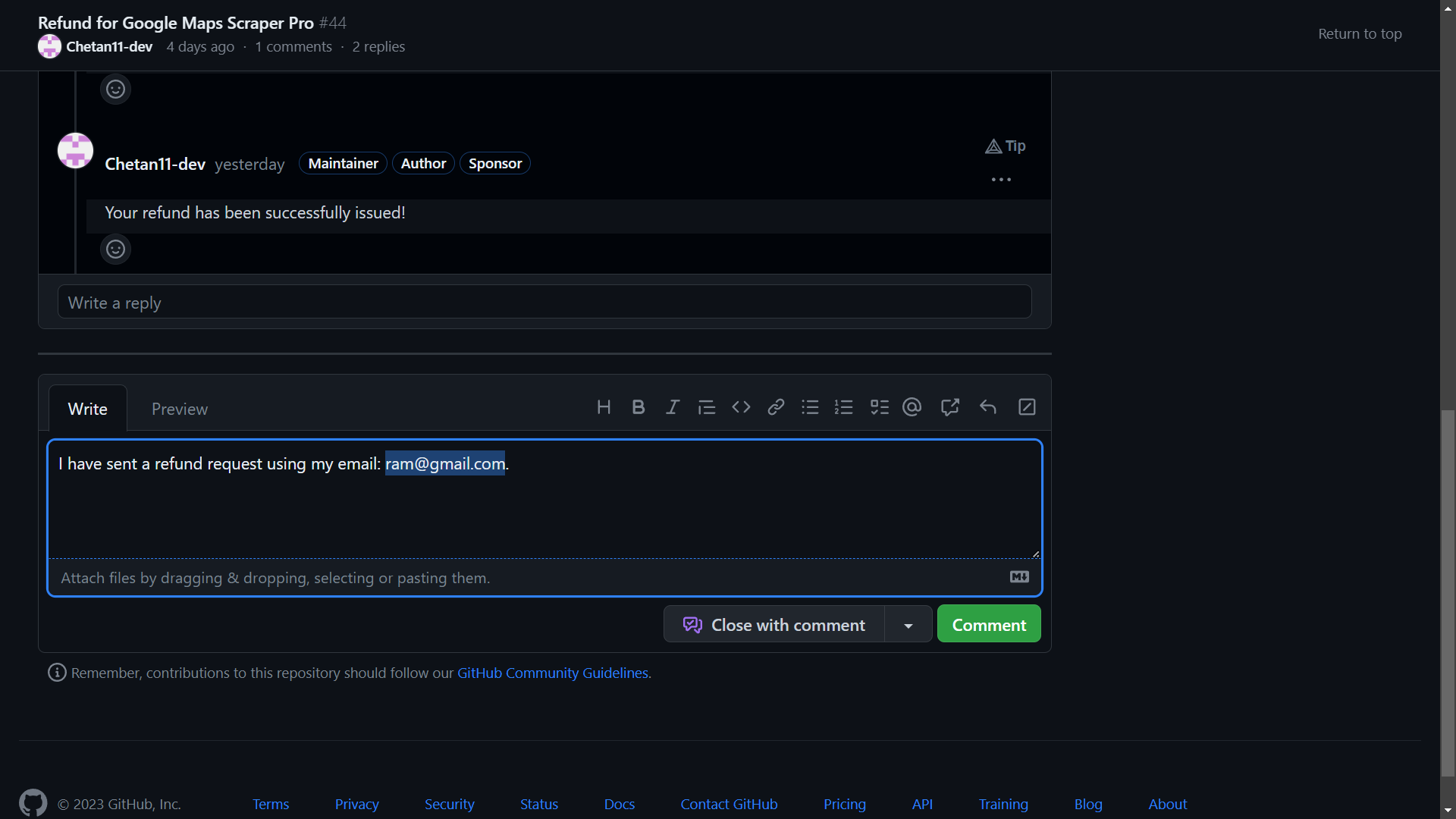

Next, go to the discussion here and comment to request a refund using this template:

I have sent a refund request from my email: myname@example.com.

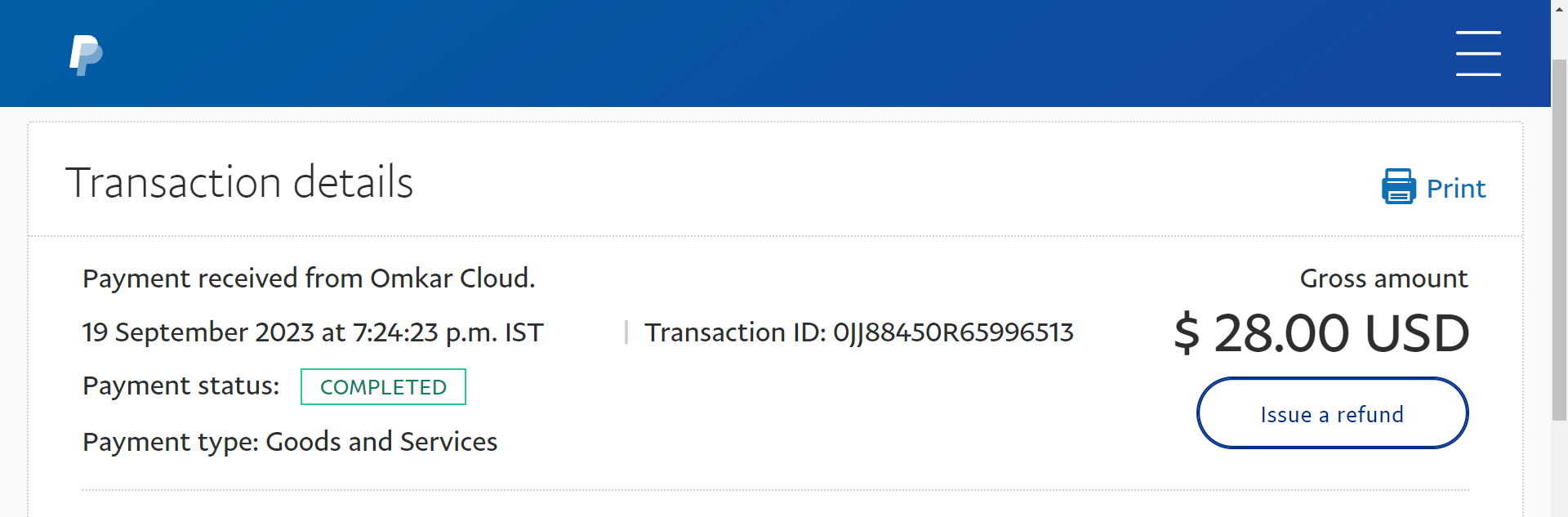

You can expect to receive your refund within 1 day. We will also update you in the GitHub Discussion here :)

All $28 will be refunded to you within 24 hours, without any questions and without any hidden charges.

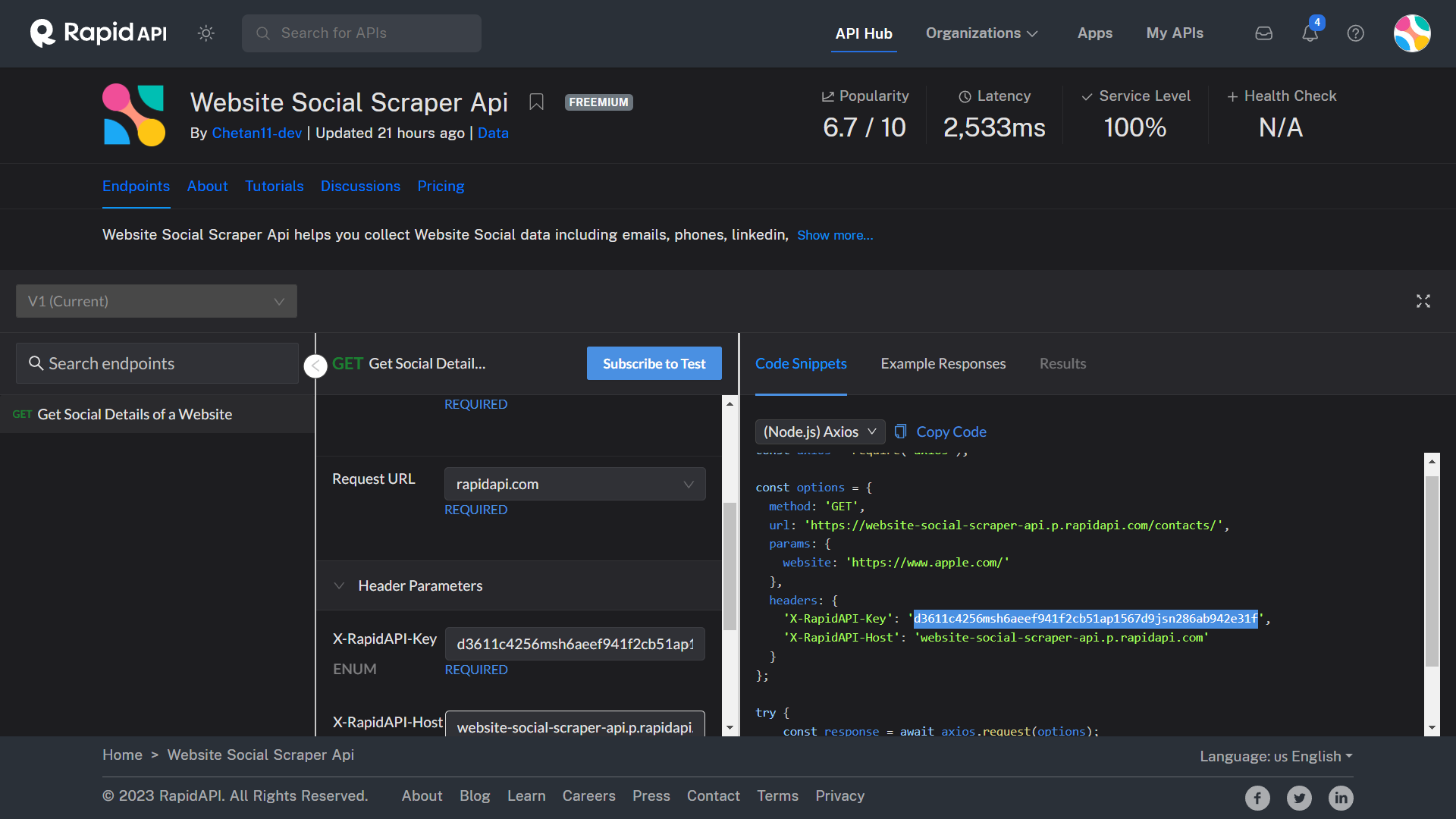

To scrape social details of profiles, follow these steps to use our Website Social Scraper API with the Free Plan, allowing you to scrape contact details of 50 profiles at no cost:

- Sign up on RapidAPI by visiting this link.

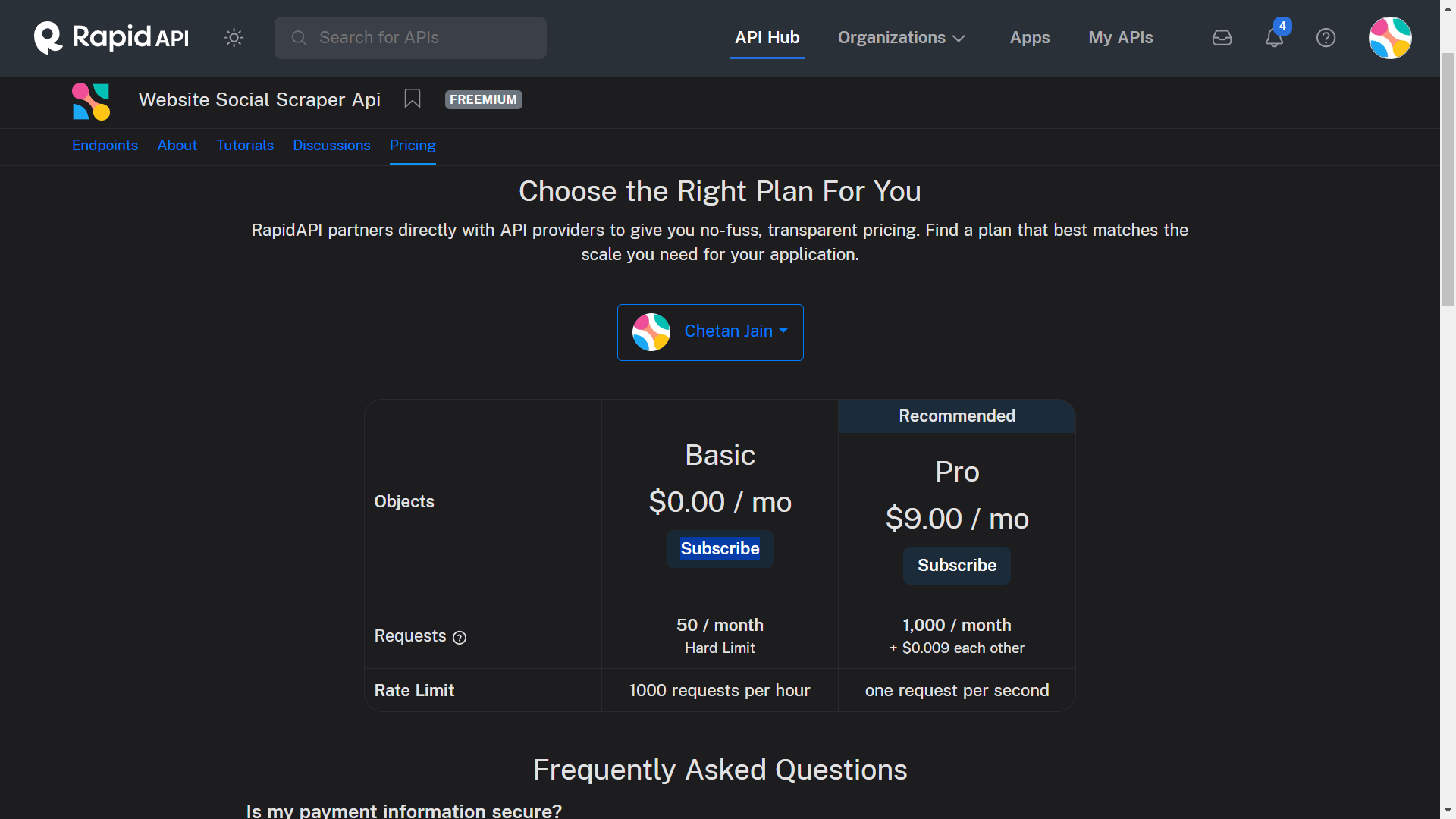

- Subscribe to the Free Plan by visiting this link.

- Pass your API key to the scraper through the key parameter:

queries = ["web developers in bangalore"]

Gmaps.places(queries, max=5, key="YOUR_API_KEY")

- Run the script, and you'll find the emails, Facebook, Twitter, and LinkedIn details of the businesses in your output file.

python main.py

Please note that this feature is available in both the Free and Pro Versions.

The first 50 contact details are free. After that, you can upgrade to the Pro Plan to scrape 1,000 contacts for $9. That is affordable: landing just one B2B client could earn you hundreds of dollars, easily covering the investment.

Disclaimer: This API should not be used for bulk automated mailing, for unethical purposes, or in an unauthorized or illegal manner.

For example, to scrape web developers from 100 cities in India, use the following code:

queries = Gmaps.Cities.India("web developers in")[0:100]

Gmaps.places(queries)

After running the code, an india-cities.json file will be generated in the output directory with a list of all the Indian cities.

You can prioritize certain cities by editing the cities JSON file in the output folder and moving them to the top of the list.

We recommend scraping only 100 cities at a time, as countries like India have thousands of cities, and scraping them all could take a considerable amount of time. Once you've exhausted the outreach in 100 cities, you can scrape more.

See the list of all supported countries here
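The batching advice above can be sketched in plain Python. This is an illustration under assumptions, not the scraper's code: `build_queries` and `batches` are hypothetical helpers, the city list is a short stand-in, and the sketch assumes a query is simply the search prefix followed by a city name. The figure of 120 results per search comes from the limit mentioned earlier in this README.

```python
MAX_RESULTS_PER_SEARCH = 120  # limit per search stated earlier in this README


def build_queries(prefix, cities):
    """Join a search prefix with each city name, e.g. 'web developers in Delhi'."""
    return [f"{prefix} {city}" for city in cities]


def batches(items, size):
    """Yield consecutive slices containing at most `size` items."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


# Stand-in list; a real run would use the cities from the generated JSON file.
cities = ["Mumbai", "Delhi", "Bengaluru", "Chennai", "Kolkata"]
queries = build_queries("web developers in", cities)

batch_sizes = [len(batch) for batch in batches(queries, 2)]
upper_bound = len(queries) * MAX_RESULTS_PER_SEARCH

print(batch_sizes)   # [2, 2, 1]
print(upper_bound)   # 600
```

With a batch size of 100, the same arithmetic gives an upper bound of 100 × 120 = 12,000 listings per batch, which is why scraping a whole country in one go can take a long time.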

Yes, you can. The scraper is smart like you and will resume from where it left off if you interrupt the process.
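The general idea behind resuming can be shown with a small sketch that saves progress after every query and skips finished queries on the next run. This is only an illustration of the technique: it is not how the scraper or Botasaurus implements caching, and `run_resumable`, `fake_scrape`, and the `progress.json` file name are hypothetical.

```python
import json
import tempfile
from pathlib import Path


def run_resumable(queries, scrape_one, progress_file):
    """Scrape each query once, persisting results so a rerun skips finished queries."""
    path = Path(progress_file)
    done = json.loads(path.read_text()) if path.exists() else {}
    for query in queries:
        if query in done:
            continue  # already scraped in an earlier run
        done[query] = scrape_one(query)
        path.write_text(json.dumps(done))  # save progress immediately
    return done


calls = []


def fake_scrape(query):
    """Stand-in for real scraping; records which queries were actually processed."""
    calls.append(query)
    return {"query": query, "results": []}


with tempfile.TemporaryDirectory() as tmp:
    progress = Path(tmp) / "progress.json"
    run_resumable(["q1", "q2"], fake_scrape, progress)        # first run stops after q2
    final = run_resumable(["q1", "q2", "q3"], fake_scrape, progress)  # rerun only scrapes q3

print(calls)  # ['q1', 'q2', 'q3']
```

Each query is processed exactly once even though the list was submitted twice, which is the behavior you see when you interrupt and restart a run.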

Seeing a lot of fields can be intimidating, so we have only kept the most important fields in the output.

However, you can choose from many more fields.

Also, to select all the fields, use the following code:

queries = [
    "web developers in bangalore"
]

Gmaps.places(queries, fields=Gmaps.ALL_FIELDS)

To select specific fields only, use the following code:

queries = [
    "web developers in bangalore"
]

fields = [
    Gmaps.Fields.PLACE_ID,
    Gmaps.Fields.NAME,
    Gmaps.Fields.MAIN_CATEGORY,
    Gmaps.Fields.RATING,
    Gmaps.Fields.REVIEWS,
    Gmaps.Fields.WEBSITE,
    Gmaps.Fields.PHONE,
    Gmaps.Fields.ADDRESS,
    Gmaps.Fields.LINK,
]

Gmaps.places(queries, fields=fields)

Please note that selecting more or fewer fields will not affect the scraping time; it will remain exactly the same. So, don't fall into the trap of selecting fewer fields thinking it will decrease the scraping time, because it won't.

For examples of CSV/JSON formats containing all fields, you can download this file.

Also, see the list of all supported fields here
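One way to picture why field selection does not change scraping time is to treat it as a projection applied to records that have already been collected. The sketch below illustrates that idea only; whether the scraper works this way internally is an assumption, and `select_fields`, the dictionary keys, and the sample record are hypothetical.

```python
def select_fields(place, fields):
    """Keep only the requested keys, in the requested order; missing keys become None."""
    return {field: place.get(field) for field in fields}


# Made-up record for illustration only.
place = {
    "place_id": "abc123",
    "name": "Example Studio",
    "rating": 4.6,
    "reviews": 87,
    "address": "1 Example Road",
}

row = select_fields(place, ["place_id", "name", "rating"])
print(row)  # {'place_id': 'abc123', 'name': 'Example Studio', 'rating': 4.6}
```

The full record exists either way; the selection only decides which columns are written to the output.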

❓ Could you share resources that would be helpful to me, as I am sending personalized emails and providing useful services?

- I recommend reading The Cold Email Manifesto to learn how to write effective cold emails.

Also, if hotel and restaurant owners are your primary target audience, consider using TripAdvisor instead of Google Maps due to the lower competition.

Our TripAdvisor Scraper allows you to easily gather contact information and descriptions from TripAdvisor.

See our TripAdvisor Scraper here.

Below are some of the smart features:

- Instead of visiting each search result through a browser, which can result in up to 10,000 requests for just 100 results, we use HTTP requests. This approach leads to only 100 requests for 100 results, saving time and bandwidth while also placing a minimal load on Google's servers.

- Rather than first scrolling and then visiting search results, our system scrolls and retrieves results in parallel. This feature would have been challenging to implement using only Python Threads. However, thanks to the Botasaurus Async Queue Feature, it was really simple.

For web scrapers interested in understanding how this works in more detail, you can read this tutorial. Here, we guide you through creating a simplified version of a Google Maps Scraper.
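The "scroll and retrieve in parallel" idea is a producer and consumer pattern. The toy sketch below shows the pattern with Python's standard `queue` and `threading` modules only; it is not the Botasaurus Async Queue API and not the scraper's code. The `scroll` and `fetch_details` functions and the `place-N` links are hypothetical stand-ins.

```python
import queue
import threading


def scroll(link_queue, total_links):
    """Producer: pretend to scroll the results pane, emitting links as they appear."""
    for i in range(total_links):
        link_queue.put(f"place-{i}")
    link_queue.put(None)  # sentinel: scrolling is finished


def fetch_details(link_queue, results):
    """Consumer: handle each link as soon as it is available, without waiting for scrolling to end."""
    while True:
        link = link_queue.get()
        if link is None:
            break
        # A real scraper would send one HTTP request per link here.
        results.append({"link": link})


link_queue = queue.Queue()
results = []
producer = threading.Thread(target=scroll, args=(link_queue, 5))
consumer = threading.Thread(target=fetch_details, args=(link_queue, results))
producer.start()
consumer.start()
producer.join()
consumer.join()

print(len(results))  # 5
```

Because the consumer starts working while the producer is still emitting links, the two phases overlap instead of running back to back. One request per link also matches the "100 requests for 100 results" figure described above.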

Thanks! We used Botasaurus, which is the secret behind our Google Maps Scraper.

It's a Web Scraping Framework that makes life easier for Web Scrapers.

Botasaurus handled the hard parts of our Scraper, such as:

- Caching

- Parallel and Asynchronous Scraping

- Creation and Reuse of Drivers

- Writing output to CSV and JSON files

If you are a Web Scraper, we highly recommend that you learn about Botasaurus here, because Botasaurus will really save you countless hours in your life as a Web Scraper.

Having read this page, you have all the knowledge needed to effectively utilize the tool.

You may choose to explore the following questions based on your interests:

- I Don't Have Python, or I'm Facing Errors When Setting Up the Scraper on My PC. How to Solve It?

- How to Scrape Reviews?

- What Are Popular Snippets for Data Scientists?

- How to Change the Language of Output?

- I Have Google Map Places Links, How to Scrape Links?

- How to Scrape at Particular Coordinates and Zoom Level?

- When Setting the Lang Attribute to Hindi/Japanese/Chinese, the Characters Are in English Instead of the Specified Language. How to Transform Characters to the Specified Language?

For further help, contact us on WhatsApp. We'll be happy to help you out.

Love It? Star It ⭐!

Become one of our amazing stargazers by giving us a star ⭐ on GitHub!

It's just one click, but it means the world to me.