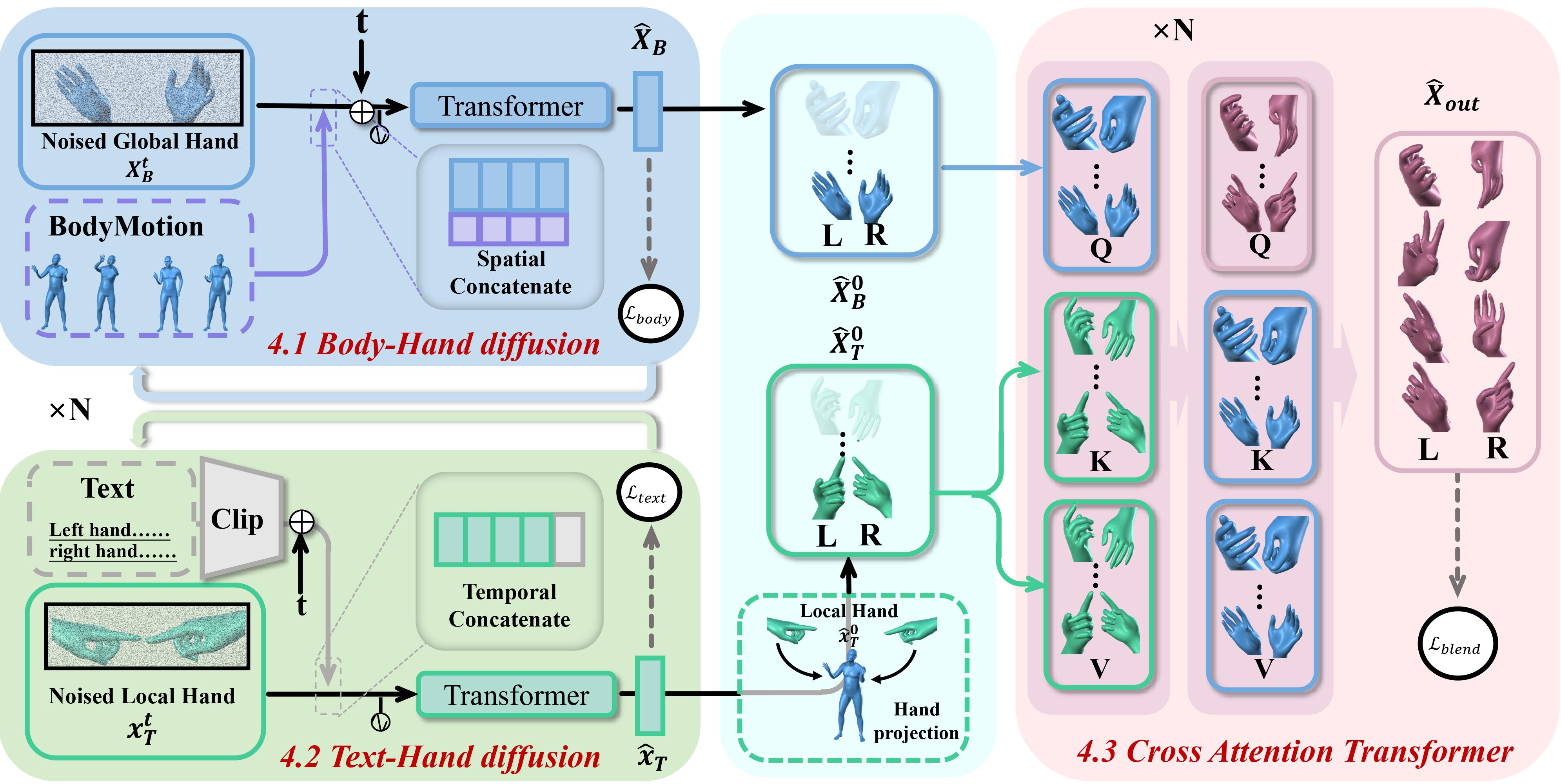

The official implementation of the paper "BOTH2Hands: Inferring 3D Hands from Both Text Prompts and Body Dynamics".

For more results and details, please visit our webpage.

This code was tested on Ubuntu 20.04.6 LTS.

conda env create -f both2hands.yaml

conda activate both2hands

Clone MPI-IS mesh, and use pip to install it locally:

cd path/to/mesh/folder

If you run into problems, check this issue.

Clone human body prior, and install it locally:

cd path/to/human_body_prior/folder

python setup.py develop

Download the SMPL+H model, and extract the folder here:

- body_model
  - smpl_models
    - smplh
      - neutral
      - ......
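Before training, it can help to confirm that the SMPL+H folders ended up where the code expects them. The following is a minimal sketch, not part of the repository: the helper `missing_paths` and the list `EXPECTED_DIRS` are hypothetical names, and the nesting is an assumption read off the tree above.

```python
from pathlib import Path

# Assumed nesting, read off the folder tree above; adjust if your copy differs.
EXPECTED_DIRS = [
    "body_model/smpl_models/smplh",
    "body_model/smpl_models/smplh/neutral",
]


def missing_paths(root, relative_paths):
    """Return the entries of relative_paths that do not exist under root."""
    root = Path(root)
    return [p for p in relative_paths if not (root / p).exists()]


if __name__ == "__main__":
    # Run from the repository root.
    missing = missing_paths(".", EXPECTED_DIRS)
    if missing:
        print("Missing:", ", ".join(missing))
    else:
        print("SMPL+H folders found.")
```

The same helper can be pointed at any other list of relative paths, for example the checkpoint files.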

Please read the dataset section below to obtain the full BOTH57M data. Then build a dataset file like "dataset/ExampleDataset/ExampleDataset.p" using joblib, and arrange your dataset like this:

- dataset
  - ExampleDataset
    - ExampleDataset.p
  - YourDataset
    - YourDataset.p
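As a rough illustration of the joblib step, the sketch below writes and reads a `.p` file at the location shown in the tree above. The function names `dataset_file`, `save_dataset`, and `load_dataset` are hypothetical, and the contents of `data` are not specified here; loading `ExampleDataset.p` and inspecting it is the safest way to see what structure the training code expects.

```python
from pathlib import Path


def dataset_file(root, name):
    """Path of the dataset file for `name`: <root>/<name>/<name>.p"""
    return Path(root) / name / f"{name}.p"


def save_dataset(data, root, name):
    """Dump `data` with joblib to the path returned by dataset_file."""
    import joblib  # third-party package; imported lazily so the path helper works without it

    target = dataset_file(root, name)
    target.parent.mkdir(parents=True, exist_ok=True)
    joblib.dump(data, str(target))
    return target


def load_dataset(root, name):
    """Load a dataset file previously written with joblib."""
    import joblib

    return joblib.load(str(dataset_file(root, name)))
```

For example, `load_dataset("dataset", "ExampleDataset")` returns the example object, and `save_dataset(your_data, "dataset", "YourDataset")` writes `dataset/YourDataset/YourDataset.p`, where `your_data` is a placeholder for data you have prepared in the same structure.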

python main.py --config train.yaml

Download the model checkpoints and put them here:

- checkpoints
  - example
    - both2hands_example.ckpt

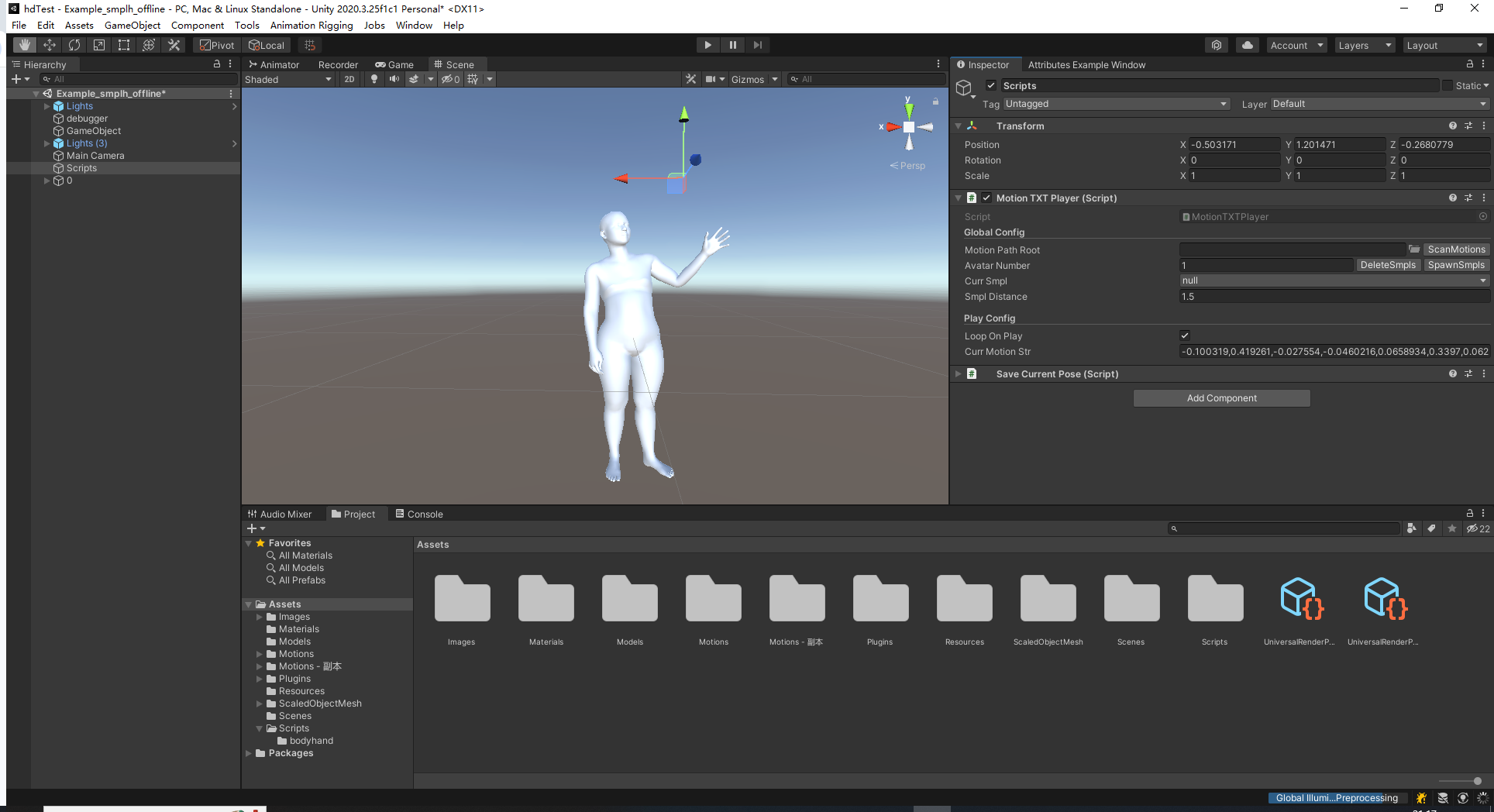

python main.py --config gen.yaml

We currently provide only a Unity visualization method, which is easy to use. The project was tested on Unity 2020.3.25.

Download the model checkpoints and put them here:

- checkpoints
  - example
    - both2hands_example.ckpt

Download the encoder checkpoint and put it here:

- eval_encoder
  - encoder.pth

python main.py --config eval.yaml

The dataset is available here and requires a password to unzip the files. Please read the license form carefully, fill it in, and send it to Wenqian Zhang (zhangwq2022@shanghaitech.edu.cn), with a cc to Lan Xu (xulan1@shanghaitech.edu.cn), to request access.

By requesting access to the content, you acknowledge that you have read this agreement, understand it, and agree to be bound by its terms and conditions. This agreement constitutes a legal and binding agreement between you and the provider of the protected system or content. The Visual & Data Intelligence (VDI) Center of ShanghaiTech University is the sole owner of all intellectual property rights, including copyright, of the BOTH57M dataset, and VDI reserves the right to terminate your access to the dataset at any time.

Notes:

(1) Students are NOT eligible to be a recipient. If you are a student, please ask your supervisor to make a request.

(2) Once the license agreement is signed, we will give access to the data.

(3) The dataset, including RGB data, is large (3.4T); please make sure you have enough storage space.
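Given the size in note (3), a quick free-space check before downloading may save time. This is a minimal sketch using only the Python standard library; `has_enough_space` and `REQUIRED_BYTES` are hypothetical names, and reading "3.4T" as 3.4 decimal terabytes is an assumption.

```python
import shutil

# Assumption: "3.4T" is read as 3.4 TB in decimal units (3.4 * 10**12 bytes).
REQUIRED_BYTES = 34 * 10**11


def has_enough_space(path, required_bytes=REQUIRED_BYTES):
    """True if the filesystem containing `path` has at least required_bytes free."""
    return shutil.disk_usage(path).free >= required_bytes


if __name__ == "__main__":
    # Check the drive that holds the current directory.
    print("Enough space:", has_enough_space("."))
```

Unzipping may need additional room on top of the archive size, so treat the threshold as a lower bound.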

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.

Please consider citing our work if you find this repo useful for your projects.

@inproceedings{zhang24both,
  title = {BOTH2Hands: Inferring 3D Hands from Both Text Prompts and Body Dynamics},
  author = {Zhang, Wenqian and Huang, Molin and Zhou, Yuxuan and Zhang, Juze and Yu, Jingyi and Wang, Jingya and Xu, Lan},
  booktitle = {Conference on Computer Vision and Pattern Recognition ({CVPR})},
  year = {2024},
}

The code is built on EgoEgo. Please check out their project if you are interested in body-conditioned motion diffusion.