Exploring Data-Efficient 3D Scene Understanding with Contrastive Scene Contexts

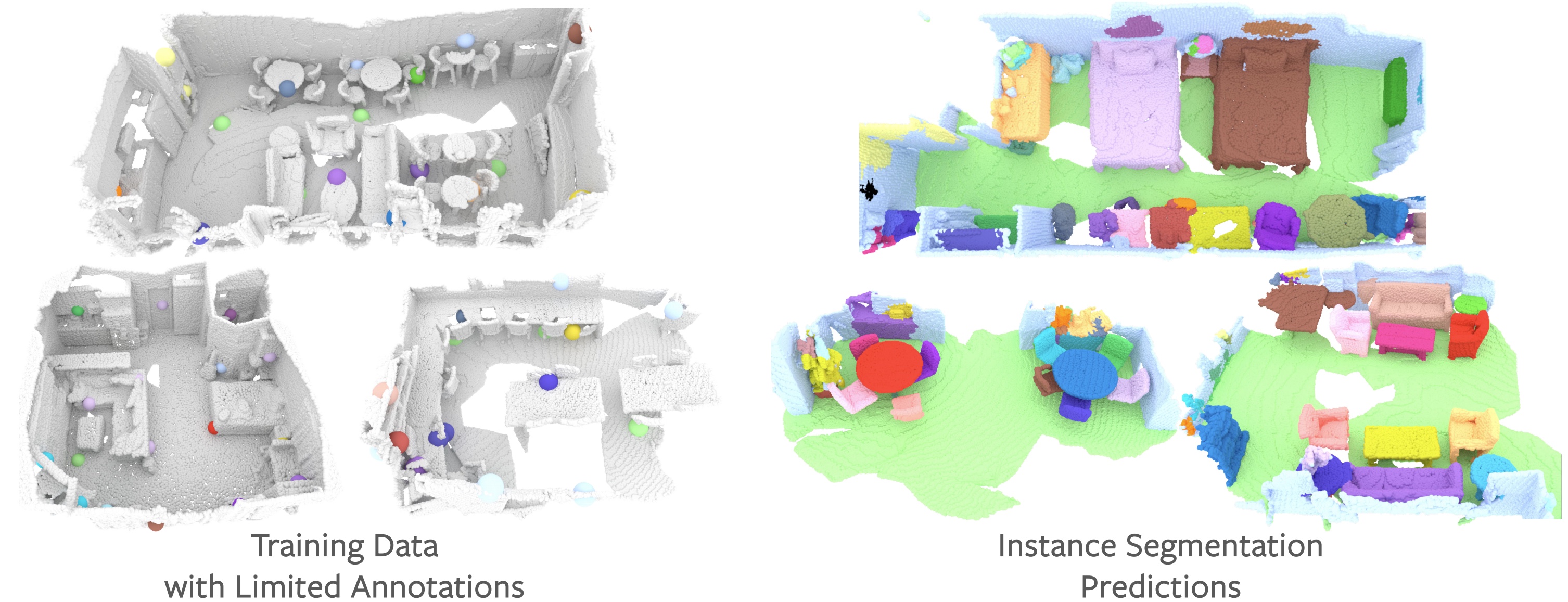

The rapid progress in 3D scene understanding has come with growing demand for data; however, collecting and annotating 3D scenes (e.g. point clouds) is notoriously hard. For example, the number of scenes (e.g. indoor rooms) that can be accessed and scanned might be limited; even given sufficient data, acquiring 3D labels (e.g. instance masks) requires intensive human labor. In this paper, we explore data-efficient learning for 3D point clouds. As a first step in this direction, we propose Contrastive Scene Contexts, a 3D pre-training method that makes use of both point-level correspondences and spatial contexts in a scene. Our method achieves state-of-the-art results on a suite of benchmarks where training data or labels are scarce. Our study reveals that exhaustive labelling of 3D point clouds might be unnecessary; remarkably, on ScanNet, even using 0.1% of point labels, we still achieve 89% (instance segmentation) and 96% (semantic segmentation) of the baseline performance that uses full annotations.

[CVPR 2021 Paper] [Video] [Project Page] [ScanNet Data-Efficient Benchmark]

Environment

This codebase was tested with the following environment configurations.

- Ubuntu 20.04

- CUDA 10.2

- GCC 7.3.0

- Python 3.7.7

- PyTorch 1.5.1

- MinkowskiEngine v0.4.3

Installation

We use conda for the installation process:

# Install virtual env and PyTorch

conda create -n sparseconv043 python=3.7

conda activate sparseconv043

conda install pytorch==1.5.1 torchvision==0.6.1 cudatoolkit=10.2 -c pytorch

# Compile and install MinkowskiEngine 0.4.3.

conda install mkl mkl-include -c intel

wget https://github.com/NVIDIA/MinkowskiEngine/archive/refs/tags/v0.4.3.zip

unzip v0.4.3.zip

cd MinkowskiEngine-0.4.3

python setup.py install

Next, clone the Contrastive Scene Contexts repository and install the requirements from its root directory.

git clone https://github.com/facebookresearch/ContrastiveSceneContexts.git

cd ContrastiveSceneContexts

pip install -r requirements.txt

Our code also depends on PointGroup and PointNet++.

# Install the PointGroup ops (run from the repository root):

conda install -c bioconda google-sparsehash

cd downstream/insseg/lib/bfs/ops

python setup.py build_ext --include-dirs=YOUR_ENV_PATH/include

python setup.py install

# Install PointNet++ (run from the repository root again)

cd downstream/votenet/models/backbone/pointnet2

python setup.py install

Pre-training on ScanNet

Data Pre-processing

For pre-training, generate the ScanNet pair data with the following script (set TARGET and SCANNET_DIR in the script accordingly):

cd pretrain/scannet_pair

./preprocess.sh

The script first extracts point clouds from partial frames and then computes, for each scene, a file list of overlapping partial frames. Next, combine the per-scene file lists into a single text file, overlap30.txt, by running:

cd pretrain/scannet_pair

python generate_list.py --target_dir TARGET

Place overlap30.txt in the folder TARGET/splits.
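To make the pairing step more concrete, here is a minimal sketch of how overlapping partial frames could be found. It assumes each partial frame is a list of 3D points already in a shared world coordinate frame, that overlap is measured on a voxel grid relative to the smaller frame, and that the "30" in overlap30.txt denotes a 30% overlap threshold. The function names, the voxel size and the overlap definition are hypothetical and are not taken from the repository's preprocessing scripts.

```python
from itertools import combinations
from math import floor

# Hypothetical voxel size in meters; the real preprocessing may use a different value.
VOXEL_SIZE = 0.05


def voxelize(points, voxel_size=VOXEL_SIZE):
    """Map (x, y, z) points to the set of integer voxel keys they occupy."""
    return {tuple(floor(c / voxel_size) for c in p) for p in points}


def overlap_ratio(points_a, points_b, voxel_size=VOXEL_SIZE):
    """Fraction of the smaller frame's voxels that are also occupied by the other frame."""
    va, vb = voxelize(points_a, voxel_size), voxelize(points_b, voxel_size)
    if not va or not vb:
        return 0.0
    return len(va & vb) / min(len(va), len(vb))


def overlapping_pairs(frames, threshold=0.3, voxel_size=VOXEL_SIZE):
    """Return (name_a, name_b, ratio) for every frame pair whose overlap reaches the threshold."""
    pairs = []
    for (name_a, pts_a), (name_b, pts_b) in combinations(sorted(frames.items()), 2):
        ratio = overlap_ratio(pts_a, pts_b, voxel_size)
        if ratio >= threshold:
            pairs.append((name_a, name_b, ratio))
    return pairs
```

For example, with a 1 m voxel grid, two frames covering x = 0..3 and x = 2..5 share half of their voxels and are kept as a pair, while a frame far away from both is dropped.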

Pre-training

Our codebase supports multi-GPU training with the DistributedDataParallel (DDP) module in PyTorch. To train Contrastive Scene Contexts with 8 GPUs on a single server (batch_size=32, i.e. 4 per GPU):

cd pretrain/contrastive_scene_contexts

# Pretrain with SparseConv backbone

OUT_DIR=./output DATASET=ROOT_PATH_OF_DATA scripts/pretrain_sparseconv.sh

# Pretrain with PointNet++ backbone

OUT_DIR=./output DATASET=ROOT_PATH_OF_DATA scripts/pretrain_pointnet2.sh
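The pre-training objective combines point-level correspondences with spatial scene contexts. The sketch below is a simplified, non-authoritative reading of that idea, not the repository's implementation: an InfoNCE loss over matched points of two overlapping views, where the negatives for each anchor are restricted to matched points that fall in the same spatial partition (an angle bin in the xy-plane combined with a near/far distance bin) relative to that anchor, and the per-partition losses are averaged. The number of angle bins, the distance threshold, the temperature `tau` and all function names are hypothetical choices for illustration.

```python
import math


def normalize(v):
    """L2-normalize a feature vector (a zero vector is left unchanged)."""
    n = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / n for x in v]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def partition_id(anchor, other, n_angle_bins=4, dist_threshold=2.0):
    """Spatial partition of `other` relative to `anchor`: xy-plane angle bin times near/far bin."""
    dx, dy = other[0] - anchor[0], other[1] - anchor[1]
    angle = math.atan2(dy, dx) % (2 * math.pi)
    angle_bin = min(int(angle / (2 * math.pi) * n_angle_bins), n_angle_bins - 1)
    dist_bin = 0 if math.hypot(dx, dy) < dist_threshold else 1
    return angle_bin * 2 + dist_bin


def scene_context_loss(xyz, feats_a, feats_b, tau=0.4, n_angle_bins=4, dist_threshold=2.0):
    """InfoNCE over matched points (index i in view A matches index i in view B).

    For each anchor, negatives are only the other matched points lying in the same
    spatial partition relative to that anchor; losses are averaged per partition,
    then across partitions.
    """
    fa = [normalize(f) for f in feats_a]
    fb = [normalize(f) for f in feats_b]
    n = len(xyz)
    per_partition = {}
    for i in range(n):
        # Group the candidate negatives of anchor i by their partition relative to i.
        groups = {}
        for k in range(n):
            if k != i:
                s = partition_id(xyz[i], xyz[k], n_angle_bins, dist_threshold)
                groups.setdefault(s, []).append(k)
        pos = math.exp(dot(fa[i], fb[i]) / tau)
        for s, negs in groups.items():
            denom = pos + sum(math.exp(dot(fa[i], fb[k]) / tau) for k in negs)
            per_partition.setdefault(s, []).append(-math.log(pos / denom))
    if not per_partition:
        return 0.0
    return sum(sum(v) / len(v) for v in per_partition.values()) / len(per_partition)
```

As expected for a contrastive objective, the loss is small when matched points have matching features and grows when the correspondences are scrambled.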

ScanNet Downstream Tasks

Data Pre-Processing

We provide code for pre-processing the data for the ScanNet downstream tasks. Run the following commands to generate the training data for semantic segmentation and instance segmentation. SCANNET_DATA refers to where the ScanNet data lives, and SCANNET_OUT_PATH denotes the output path of the processed ScanNet data.

# Edit path variables: SCANNET_DATA and SCANNET_OUT_PATH

cd downstream/semseg/lib/datasets/preprocessing/scannet

python collect_indoor3d_data.py --input SCANNET_DATA --output SCANNET_OUT_PATH

# copy the filelists

cp -r split SCANNET_OUT_PATH

For ScanNet detection data generation, please refer to VoteNet ScanNet Data. Then soft-link the generated detection data (located in PATH_DET_DATA) to the following location:

# soft link detection data

cd downstream/det/

ln -s PATH_DET_DATA datasets/scannet/scannet_train_detection_data

For data-efficient learning, download the scene_list, points_list and bbox_list files from the ScanNet Data-Efficient Benchmark. To perform active selection for points_list, run the following commands:

# Get features per point

cd downstream/semseg/

DATAPATH=SCANNET_DATA LOG_DIR=./output PRETRAIN=PATH_CHECKPOINT ./scripts/inference_features.sh

# run k-means on feature space

cd lib

python sampling_points.py --point_data SCANNET_OUT_PATH --feat_data PATH_CHECKPOINT
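The active selection step clusters per-point features with k-means and picks points to annotate from the clusters. The following minimal sketch illustrates that idea under two assumptions that are not confirmed by the source: the number of clusters equals the annotation budget, and the point closest to each centroid is the one selected. It uses plain Lloyd iterations with a deterministic farthest-point initialization; the function names are hypothetical and this is not the code in sampling_points.py.

```python
def sq_dist(a, b):
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(feats, k, iters=20):
    """Plain Lloyd's k-means with deterministic farthest-point initialization."""
    centroids = [list(feats[0])]
    while len(centroids) < k:
        far = max(range(len(feats)), key=lambda i: min(sq_dist(feats[i], c) for c in centroids))
        centroids.append(list(feats[far]))
    dim = len(feats[0])
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for i, f in enumerate(feats):
            clusters[min(range(k), key=lambda c: sq_dist(f, centroids[c]))].append(i)
        for c, members in enumerate(clusters):
            if members:  # keep the previous centroid if a cluster becomes empty
                centroids[c] = [sum(feats[i][d] for i in members) / len(members) for d in range(dim)]
    return centroids


def select_points(feats, budget, iters=20):
    """Return the sorted indices of the points closest to each k-means centroid."""
    centroids = kmeans(feats, budget, iters)
    return sorted({min(range(len(feats)), key=lambda i: sq_dist(feats[i], c)) for c in centroids})
```

Choosing the point nearest to each centroid spreads the labeling budget across distinct regions of feature space instead of concentrating it on the most common structures in a scene.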

Semantic Segmentation

We provide code for the semantic segmentation experiments conducted in our paper. Our code supports multi-GPU training. To train with 8 GPUs on a single server:

# Edit relevant path variables and then run:

cd downstream/semseg/

DATAPATH=SCANNET_OUT_PATH LOG_DIR=./output PRETRAIN=PATH_CHECKPOINT ./scripts/train_scannet.sh

For Limited Scene Reconstruction, run the following commands:

# Edit relevant path variables and then run:

cd downstream/semseg/

DATAPATH=SCANNET_OUT_PATH LOG_DIR=./output PRETRAIN=PATH_CHECKPOINT TRAIN_FILE=PATH_SCENE_LIST ./scripts/data_efficient/by_scenes.sh

For Limited Points Annotation, run the following commands:

# Edit relevant path variables and then run:

cd downstream/semseg/

DATAPATH=SCANNET_OUT_PATH LOG_DIR=./output PRETRAIN=PATH_CHECKPOINT SAMPLED_INDS=PATH_POINTS_LIST ./scripts/data_efficient/by_points.sh
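The two data-efficient settings restrict supervision in different ways: Limited Scene Reconstruction trains on a subset of scenes, while Limited Points Annotation keeps labels for only a few points per scene (20 to 200 in the model zoo below). The sketch below illustrates both ideas, assuming the scene list is a list of scene names, the points list supplies per-scene point indices, and unlabeled points receive an ignore label that the loss skips. The ignore value 255 and both function names are hypothetical; check the training configuration for the values actually used.

```python
IGNORE_LABEL = 255  # hypothetical ignore index; the real value comes from the training config


def mask_labels(labels, sampled_inds, ignore_label=IGNORE_LABEL):
    """Keep labels only at the sampled point indices; all other points are ignored by the loss."""
    keep = set(sampled_inds)
    return [lab if i in keep else ignore_label for i, lab in enumerate(labels)]


def filter_scenes(all_scenes, scene_list):
    """Restrict training to the scenes named in a limited scene list, preserving the original order."""
    allowed = set(scene_list)
    return [s for s in all_scenes if s in allowed]
```

For example, `mask_labels([3, 1, 4, 1, 5], [0, 2])` keeps the labels of points 0 and 2 and replaces the rest with the ignore value.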

Model Zoo

We also provide our pre-trained checkpoints (and log files) for reference. You can evaluate our pre-trained model by running:

# PATH_CHECKPOINT points to downloaded pre-trained model path:

cd downstream/semseg/

DATAPATH=SCANNET_OUT_PATH LOG_DIR=./output PRETRAIN=PATH_CHECKPOINT ./scripts/test_scannet.sh

| Training Data | mIoU (val) | Pre-trained Model Used (for initialization) | Logs | Curves | Model |
|---|---|---|---|---|---|
| 1% scenes | 29.3 | download | link | link | link |
| 5% scenes | 45.4 | download | link | link | link |
| 10% scenes | 59.5 | download | link | link | link |
| 20% scenes | 64.1 | download | link | link | link |
| 100% scenes | 73.8 | download | link | link | link |
| 20 points | 53.8 | download | link | link | link |
| 50 points | 62.9 | download | link | link | link |
| 100 points | 66.9 | download | link | link | link |
| 200 points | 69.0 | download | link | link | link |
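The mIoU column reports the mean over classes of the per-class intersection over union, IoU_c = TP_c / (TP_c + FP_c + FN_c), computed from a confusion matrix of point predictions. The minimal sketch below shows that computation; skipping classes absent from both labels and predictions, and the ignore value 255, are assumptions for illustration rather than the benchmark's exact evaluation rules.

```python
def miou(preds, labels, num_classes, ignore_label=255):
    """Mean IoU over classes: IoU_c = TP / (TP + FP + FN), from a confusion matrix."""
    # conf[true][pred] counts points of ground-truth class `true` predicted as `pred`.
    conf = [[0] * num_classes for _ in range(num_classes)]
    for p, t in zip(preds, labels):
        if t != ignore_label:
            conf[t][p] += 1
    ious = []
    for c in range(num_classes):
        tp = conf[c][c]
        fn = sum(conf[c]) - tp
        fp = sum(conf[r][c] for r in range(num_classes)) - tp
        if tp + fp + fn > 0:  # skip classes absent from both labels and predictions
            ious.append(tp / (tp + fp + fn))
    return sum(ious) / len(ious) if ious else 0.0
```

For predictions `[0, 0, 1, 1]` against labels `[0, 1, 1, 1]`, class 0 has IoU 1/2 and class 1 has IoU 2/3, giving an mIoU of 7/12.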

Instance Segmentation

We provide code for the instance segmentation experiments conducted in our paper. Our code supports multi-GPU training. To train with 8 GPUs on a single server:

# Edit relevant path variables and then run:

cd downstream/insseg/

DATAPATH=SCANNET_OUT_PATH LOG_DIR=./output PRETRAIN=PATH_CHECKPOINT ./scripts/train_scannet.sh

For Limited Scene Reconstruction, run the following commands:

# Edit relevant path variables and then run:

cd downstream/insseg/

DATAPATH=SCANNET_OUT_PATH LOG_DIR=./output PRETRAIN=PATH_CHECKPOINT TRAIN_FILE=PATH_SCENE_LIST ./scripts/data_efficient/by_scenes.sh

For Limited Points Annotation, run the following commands:

# Edit relevant path variables and then run:

cd downstream/insseg/

DATAPATH=SCANNET_OUT_PATH LOG_DIR=./output PRETRAIN=PATH_CHECKPOINT SAMPLED_INDS=PATH_POINTS_LIST ./scripts/data_efficient/by_points.sh

For the ScanNet Benchmark, run the following commands (train on train+val and evaluate on val):

# Edit relevant path variables and then run:

cd downstream/insseg/

DATAPATH=SCANNET_OUT_PATH LOG_DIR=./output PRETRAIN=PATH_CHECKPOINT ./scripts/train_scannet_benchmark.sh

Model Zoo

We provide our pre-trained checkpoints (and log files) for reference. You can evaluate our pre-trained model by running:

# PATH_CHECKPOINT points to pre-trained model path:

cd downstream/insseg/

DATAPATH=SCANNET_DATA LOG_DIR=./output PRETRAIN=PATH_CHECKPOINT ./scripts/test_scannet.sh

To submit to the ScanNet Benchmark with our pre-trained model, run the following command (the submission file is written to output/benchmark_instance):

# PATH_CHECKPOINT points to pre-trained model path:

cd downstream/insseg/

DATAPATH=SCANNET_DATA LOG_DIR=./output PRETRAIN=PATH_CHECKPOINT ./scripts/test_scannet_benchmark.sh

| Training Data | mAP@0.5 (val) | Pre-trained Model Used (for initialization) | Logs | Curves | Model |
|---|---|---|---|---|---|
| 1% scenes | 12.3 | download | link | link | link |
| 5% scenes | 33.9 | download | link | link | link |
| 10% scenes | 45.3 | download | link | link | link |
| 20% scenes | 49.8 | download | link | link | link |
| 100% scenes | 59.4 | download | link | link | link |
| 20 points | 27.2 | download | link | link | link |
| 50 points | 35.7 | download | link | link | link |
| 100 points | 43.6 | download | link | link | link |
| 200 points | 50.4 | download | link | link | link |
| train + val | 76.5 (64.8 on test) | download | link | link | link |
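In mAP@0.5, a predicted instance counts as a true positive only if its mask overlaps a not-yet-matched ground-truth instance of the same class with an IoU of at least 0.5. The sketch below shows a simplified single-class version, assuming masks are given as collections of point indices: predictions are matched greedily in score order and AP is the un-interpolated area under the precision-recall curve. The official ScanNet evaluation differs in its details, and the function names here are hypothetical.

```python
def mask_iou(pred, gt):
    """IoU between two instance masks given as collections of point indices."""
    pred, gt = set(pred), set(gt)
    union = len(pred | gt)
    return len(pred & gt) / union if union else 0.0


def average_precision(predictions, gt_masks, iou_threshold=0.5):
    """Simplified single-class AP.

    predictions: list of (score, mask) pairs; gt_masks: list of masks.
    Each prediction, in descending score order, is matched to the unmatched
    ground-truth mask with the highest IoU at or above the threshold.
    """
    if not gt_masks:
        return 0.0
    matched, tps = set(), []
    for _, mask in sorted(predictions, key=lambda p: p[0], reverse=True):
        best, best_iou = None, iou_threshold
        for g, gt in enumerate(gt_masks):
            iou = mask_iou(mask, gt)
            if g not in matched and iou >= best_iou:
                best, best_iou = g, iou
        if best is not None:
            matched.add(best)
        tps.append(best is not None)
    # Sum precision times recall increment at every true positive (no interpolation).
    ap, tp_count, prev_recall = 0.0, 0, 0.0
    for rank, is_tp in enumerate(tps, start=1):
        if is_tp:
            tp_count += 1
            recall = tp_count / len(gt_masks)
            ap += (recall - prev_recall) * (tp_count / rank)
            prev_recall = recall
    return ap
```

Raising `iou_threshold` makes matching stricter, which is why AP at 0.5 is typically lower than at 0.25 for the same predictions.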

3D Object Detection

We provide code for the 3D object detection downstream task, adapted directly from VoteNet. We provide two backbones, PointNet++ and SparseConv. To fine-tune on the downstream task, run the following commands:

cd downstream/votenet/

# train sparseconv backbone

LOG_DIR=./output PRETRAIN=PATH_CHECKPOINT ./scripts/train_scannet.sh

# train pointnet++ backbone

LOG_DIR=./output PRETRAIN=PATH_CHECKPOINT ./scripts/train_scannet_pointnet.sh

For Limited Scene Reconstruction, run the following commands:

# Edit relevant path variables and then run:

cd downstream/votenet/

LOG_DIR=./output PRETRAIN=PATH_CHECKPOINT TRAIN_FILE=PATH_SCENE_LIST ./scripts/data_efficient/by_Scentrain_scannet.sh

For Limited Bbox Annotation, run the following commands:

# Edit relevant path variables and then run:

cd downstream/votenet/

DATAPATH=SCANNET_DATA LOG_DIR=./output PRETRAIN=PATH_CHECKPOINT SAMPLED_BBOX=PATH_BBOX_LIST ./scripts/data_efficient/by_bboxes.sh

To submit to the ScanNet Data-Efficient Benchmark, set "test.write_to_bencmark=True" in "downstream/votenet/scripts/test_scannet.sh" or "downstream/votenet/scripts/test_scannet_pointnet.sh".

Model Zoo

We provide our pre-trained checkpoints (and log files) for reference. You can evaluate our pre-trained model by running:

# PATH_CHECKPOINT points to pre-trained model path:

cd downstream/votenet/

LOG_DIR=./output PRETRAIN=PATH_CHECKPOINT ./scripts/test_scannet.sh

| Training Data | mAP@0.5 (val) | mAP@0.25 (val) | Pre-trained Model Used (for initialization) | Logs | Curves | Model |
|---|---|---|---|---|---|---|
| 10% scenes | 9.9 | 24.7 | download | link | link | link |
| 20% scenes | 21.4 | 41.4 | download | link | link | link |
| 40% scenes | 29.5 | 52.0 | download | link | link | link |
| 80% scenes | 36.3 | 56.3 | download | link | link | link |
| 100% scenes | 39.3 | 59.1 | download | link | link | link |
| 100% scenes (PointNet++) | 39.2 | 62.5 | download | link | link | link |
| 1 bbox | 10.9 | 24.5 | download | link | link | link |
| 2 bboxes | 18.5 | 36.5 | download | link | link | link |
| 4 bboxes | 26.1 | 45.9 | download | link | link | link |
| 7 bboxes | 30.4 | 52.5 | download | link | link | link |
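The detection table reports two metrics that differ only in how much a predicted 3D box must overlap a ground-truth box to count as a hit: an IoU of at least 0.25 for mAP@0.25 and at least 0.5 for mAP@0.5. The minimal sketch below computes that overlap, assuming axis-aligned boxes stored as (xmin, ymin, zmin, xmax, ymax, zmax); it is not VoteNet's evaluation code, and the function names are hypothetical.

```python
def box_volume(box):
    """Volume of an axis-aligned box (xmin, ymin, zmin, xmax, ymax, zmax)."""
    return (box[3] - box[0]) * (box[4] - box[1]) * (box[5] - box[2])


def box_iou_3d(a, b):
    """IoU of two axis-aligned 3D boxes in (min corner, max corner) form."""
    inter = 1.0
    for d in range(3):
        lo, hi = max(a[d], b[d]), min(a[d + 3], b[d + 3])
        if hi <= lo:  # no overlap along this axis
            return 0.0
        inter *= hi - lo
    return inter / (box_volume(a) + box_volume(b) - inter)


def is_true_positive(pred_box, gt_box, threshold):
    """A detection is a hit at a given threshold if its 3D IoU reaches it."""
    return box_iou_3d(pred_box, gt_box) >= threshold
```

Two 2 m cubes shifted by 1 m along x have an IoU of 1/3: such a detection counts toward mAP@0.25 but not toward mAP@0.5, which is why the mAP@0.25 column is consistently higher.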

Stanford 3D (S3DIS) Fine-tuning

Data Pre-Processing

We provide code for pre-processing the data for the Stanford 3D (S3DIS) downstream tasks. Run the following commands to generate the training data for semantic segmentation and instance segmentation.

# Edit the path variable STANFORD_3D_OUT_PATH in the script, then run:

cd downstream/semseg/lib/datasets/preprocessing/stanford

python stanford.py

Semantic Segmentation

We provide code for the semantic segmentation experiments conducted in our paper. Our code supports multi-GPU training. To fine-tune with 8 GPUs on a single server:

# Edit relevant path variables and then run:

cd downstream/semseg/

DATAPATH=STANFORD_3D_OUT_PATH LOG_DIR=./output PRETRAIN=PATH_CHECKPOINT ./scripts/train_stanford3d.sh

Model Zoo

We provide our pre-trained model and log file for reference. You can evaluate our pre-trained model by running:

# PATH_CHECKPOINT points to pre-trained model path:

cd downstream/semseg/

DATAPATH=STANFORD_3D_OUT_PATH LOG_DIR=./output PRETRAIN=PATH_CHECKPOINT ./scripts/test_stanford3d.sh

| Training Data | mIoU (val) | Pre-trained Model Used (for initialization) | Logs | Curves | Model |
|---|---|---|---|---|---|
| 100% scenes | 72.2 | download | link | link | link |

Instance Segmentation

We provide code for the instance segmentation experiments conducted in our paper. Our code supports multi-GPU training. To fine-tune with 8 GPUs on a single server:

# Edit relevant path variables and then run:

cd downstream/insseg/

DATAPATH=STANFORD_3D_OUT_PATH LOG_DIR=./output PRETRAIN=PATH_CHECKPOINT ./scripts/train_stanford3d.sh

Model Zoo

We provide our pre-trained model and log file for reference. You can evaluate our pre-trained model by running:

# PATH_CHECKPOINT points to pre-trained model path:

cd downstream/insseg/

DATAPATH=STANFORD_3D_OUT_PATH LOG_DIR=./output PRETRAIN=PATH_CHECKPOINT ./scripts/test_stanford3d.sh

| Training Data | mAP@0.5 (val) | Pre-trained Model Used (for initialization) | Logs | Curves | Model |
|---|---|---|---|---|---|
| 100% scenes | 63.4 | download | link | link | link |

SUN-RGBD Fine-tuning

Data Pre-Processing

For SUN-RGBD detection data generation, please refer to VoteNet SUN-RGBD Data. To soft-link the generated SUN-RGBD detection data (located in SUN_RGBD_DATA_PATH) to the following location, run:

cd downstream/det/datasets/sunrgbd

# soft link

ln -s SUN_RGBD_DATA_PATH/sunrgbd_pc_bbox_votes_50k_v1_train sunrgbd_pc_bbox_votes_50k_v1_train

ln -s SUN_RGBD_DATA_PATH/sunrgbd_pc_bbox_votes_50k_v1_val sunrgbd_pc_bbox_votes_50k_v1_val

3D Object Detection

We provide code for the 3D object detection downstream task, adapted directly from VoteNet. To fine-tune on the downstream task, run the following commands:

# Edit relevant path variables and then run:

cd downstream/votenet/

LOG_DIR=./output PRETRAIN=PATH_CHECKPOINT ./scripts/train_sunrgbd.sh

Model Zoo

We provide our pre-trained checkpoints (and log files) for reference. To evaluate our pre-trained model, set PATH_CHECKPOINT to its path and run:

# PATH_CHECKPOINT points to pre-trained model path:

cd downstream/votenet/

LOG_DIR=./output PRETRAIN=PATH_CHECKPOINT ./scripts/test_sunrgbd.sh

| Training Data | mAP@0.5 (val) | mAP@0.25 (val) | Pre-trained Model Used (for initialization) | Logs | Curves | Model |
|---|---|---|---|---|---|---|
| 100% scenes | 36.4 | 58.9 | download | link | link | link |

Citing our paper

@inproceedings{hou2021exploring,
  title={Exploring data-efficient 3d scene understanding with contrastive scene contexts},
  author={Hou, Ji and Graham, Benjamin and Nie{\ss}ner, Matthias and Xie, Saining},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  pages={15587--15597},
  year={2021}
}

License

Contrastive Scene Contexts is released under the MIT License. See the LICENSE file for more details.