When to use Graphs in RAG: A Comprehensive Benchmark and Analysis for Graph Retrieval-Augmented Generation

If you find this benchmark helpful, please cite our paper:

@article{xiang2025use,

title={When to use Graphs in RAG: A Comprehensive Analysis for Graph Retrieval-Augmented Generation},

author={Xiang, Zhishang and Wu, Chuanjie and Zhang, Qinggang and Chen, Shengyuan and Hong, Zijin and Huang, Xiao and Su, Jinsong},

journal={arXiv preprint arXiv:2506.05690},

year={2025}

}

This repository is for the GraphRAG-Bench project, a comprehensive benchmark for evaluating Graph Retrieval-Augmented Generation models.

- [2025-10-27] We release LinearRAG, a relation-free method for efficient GraphRAG.

- [2025-08-24] We support DIGIMON for flexible benchmarking across GraphRAG models.

- [2025-05-25] We release GraphRAG-Bench, the benchmark for evaluating GraphRAG models.

- [2025-05-14] We release the GraphRAG-Bench dataset.

- [2025-01-21] We release the GraphRAG survey.

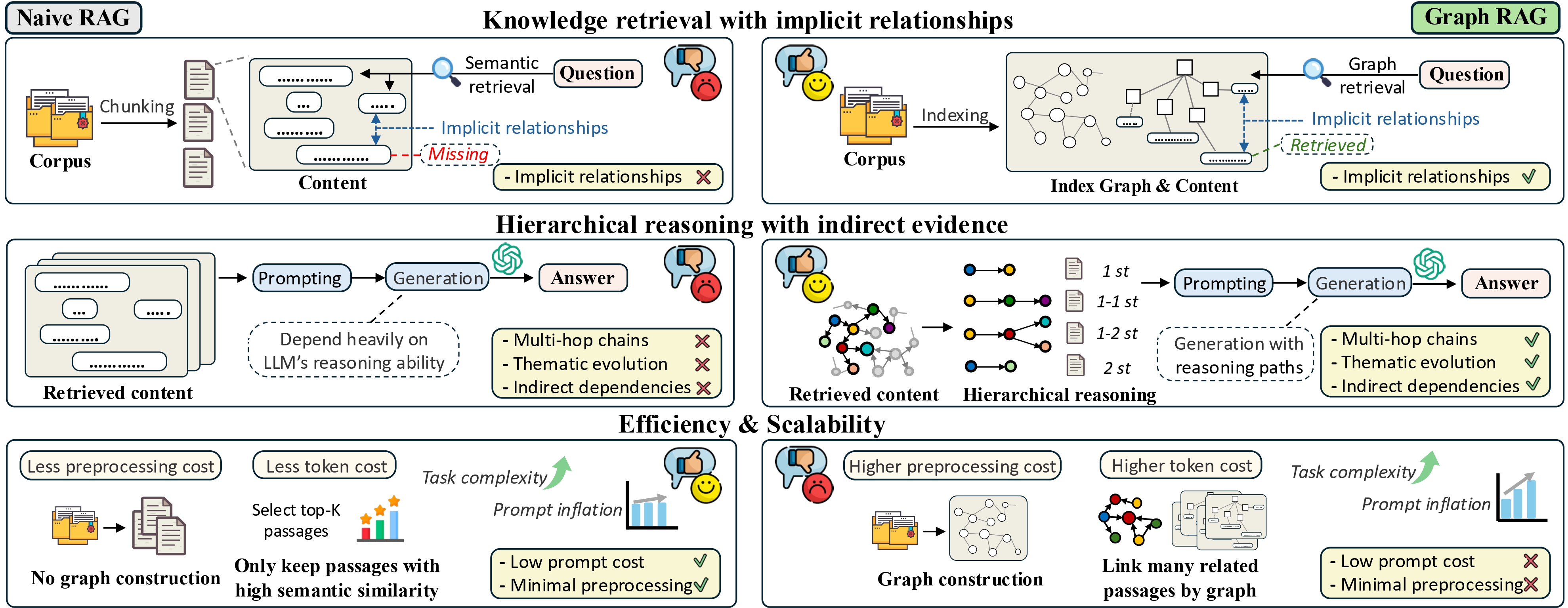

- Introduces Graph Retrieval-Augmented Generation (GraphRAG) concept

- Compares traditional RAG vs GraphRAG approach

- Explains research objective: Identify scenarios where GraphRAG outperforms traditional RAG

- Visual comparison diagram of RAG vs GraphRAG


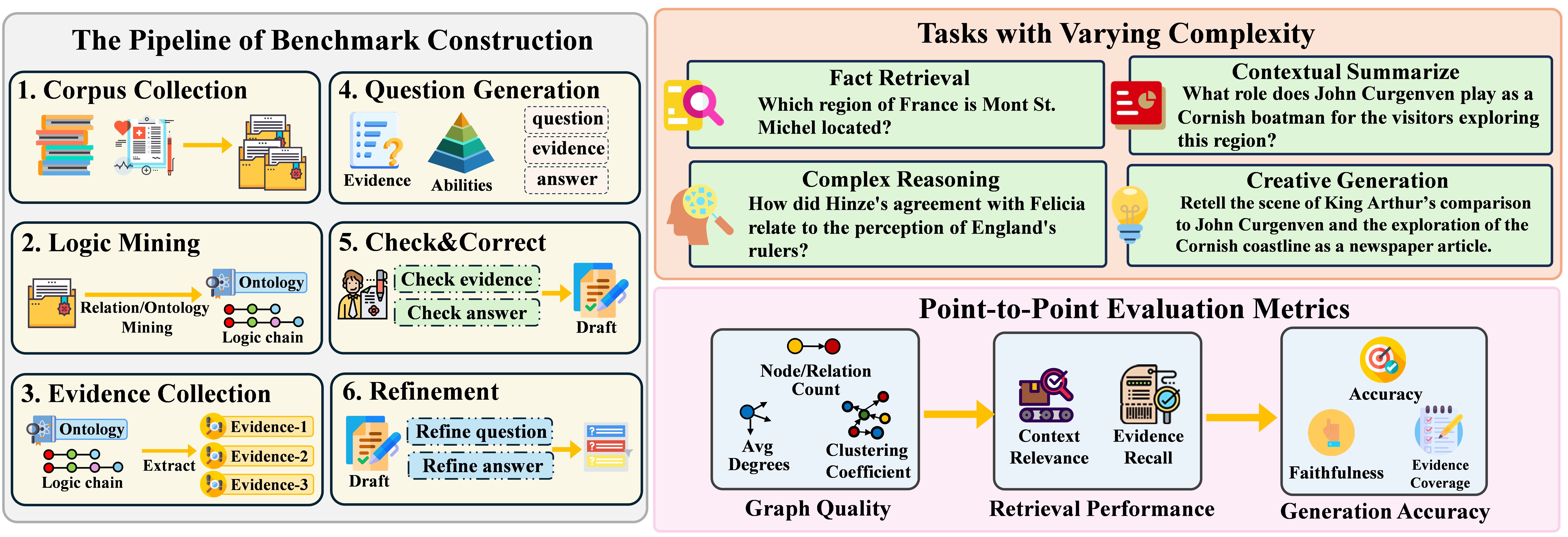

Graph retrieval-augmented generation (GraphRAG) has emerged as a powerful paradigm for enhancing large language models (LLMs) with external knowledge. It leverages graphs to model the hierarchical structure between specific concepts, enabling more coherent and effective knowledge retrieval for accurate reasoning. Despite its conceptual promise, recent studies report that GraphRAG frequently underperforms vanilla RAG on many real-world tasks. This raises a critical question: Is GraphRAG really effective, and in which scenarios do graph structures provide measurable benefits for RAG systems? To address this, we propose GraphRAG-Bench, a comprehensive benchmark designed to evaluate GraphRAG models on both hierarchical knowledge retrieval and deep contextual reasoning. GraphRAG-Bench features a comprehensive dataset with tasks of increasing difficulty, covering fact retrieval, complex reasoning, contextual summarization, and creative generation, and a systematic evaluation across the entire pipeline, from graph construction and knowledge retrieval to final generation. Leveraging this novel benchmark, we systematically investigate the conditions when GraphRAG surpasses traditional RAG and the underlying reasons for its success, offering guidelines for its practical application.

Two domain-specific leaderboards with comprehensive metrics:

1. GraphRAG-Bench (Novel)

- Evaluates models on literary/fictional content

2. GraphRAG-Bench (Medical)

- Evaluates models on medical/healthcare content

Evaluation Dimensions:

- Fact Retrieval (Accuracy, ROUGE-L)

- Complex Reasoning (Accuracy, ROUGE-L)

- Contextual Summarization (Accuracy, Coverage)

- Creative Generation (Accuracy, Factual Score, Coverage)
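ROUGE-L, listed above for Fact Retrieval and Complex Reasoning, scores the longest common subsequence (LCS) shared by a generated answer and a reference answer. The following is a minimal sketch of the standard LCS-based F1 formulation, assuming lowercase whitespace tokenization. The function names `lcs_length` and `rouge_l_f1` are illustrative and are not taken from this repository; the benchmark's own implementation in the Evaluation folder may tokenize or weight the score differently.

```python
def lcs_length(a, b):
    """Return the length of the longest common subsequence of two token lists."""
    prev = [0] * (len(b) + 1)  # DP row for the previous token of `a`
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            if x == y:
                curr.append(prev[j - 1] + 1)
            else:
                curr.append(max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l_f1(prediction, reference):
    """ROUGE-L F1 between two strings (assumption: whitespace tokens, lowercased)."""
    pred_tokens = prediction.lower().split()
    ref_tokens = reference.lower().split()
    if not pred_tokens or not ref_tokens:
        return 0.0
    lcs = lcs_length(pred_tokens, ref_tokens)
    if lcs == 0:
        return 0.0
    precision = lcs / len(pred_tokens)
    recall = lcs / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    # Identical strings share every token in order, so the score is 1.0.
    print(rouge_l_f1("the cat sat", "the cat sat"))
```

Because the score depends only on token order and overlap, a correct answer phrased very differently from the reference can still score low, which is one reason the benchmark reports it alongside Accuracy.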

Level 1: Fact Retrieval Example: "Which region of France is Mont St. Michel located?"

Level 2: Complex Reasoning Example: "How did Hinze's agreement with Felicia relate to the perception of England's rulers?"

Level 3: Contextual Summarization Example: "What role does John Curgenven play as a Cornish boatman for visitors exploring this region?"

Level 4: Creative Generation Example: "Retell King Arthur's comparison to John Curgenven as a newspaper article."
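Since results are reported separately for each of the four levels, per-question scores need to be grouped by level before averaging. The sketch below shows one way to do that. The `TaskLevel` enum, the `mean_score_by_level` helper, and the `(level, score)` record shape are hypothetical names chosen for illustration; they do not describe the dataset's actual schema or the repository's evaluation code.

```python
from collections import defaultdict
from enum import Enum
from statistics import mean


class TaskLevel(Enum):
    """The four task levels described above (enum itself is hypothetical)."""
    FACT_RETRIEVAL = 1
    COMPLEX_REASONING = 2
    CONTEXTUAL_SUMMARIZATION = 3
    CREATIVE_GENERATION = 4


def mean_score_by_level(records):
    """Average per-question scores within each level.

    `records` is an iterable of (TaskLevel, score) pairs; levels with no
    records are omitted from the result. Keys are ordered by level number.
    """
    grouped = defaultdict(list)
    for level, score in records:
        grouped[level].append(score)
    ordered = sorted(grouped.items(), key=lambda item: item[0].value)
    return {level: mean(scores) for level, scores in ordered}


if __name__ == "__main__":
    # Placeholder scores for illustration only, not benchmark results.
    demo = [
        (TaskLevel.FACT_RETRIEVAL, 1.0),
        (TaskLevel.FACT_RETRIEVAL, 0.0),
        (TaskLevel.CREATIVE_GENERATION, 0.5),
    ]
    for level, avg in mean_score_by_level(demo).items():
        print(level.name, avg)
```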

First, install the necessary dependencies for GraphRAG-Bench.

pip install -r requirements.txt

To prevent dependency conflicts, we strongly recommend using a separate Conda environment for each framework. We use the LightRAG installation as an example; for other frameworks, please refer to their respective installation instructions.

# Create and activate environment (example for LightRAG)

conda create -n lightrag python=3.10 -y

conda activate lightrag

# Install LightRAG

git clone https://github.com/HKUDS/LightRAG.git

cd LightRAG

pip install -e .

We provide detailed instructions on how to use GraphRAG-Bench to evaluate each framework.

Specifically, the Examples folder shows how to perform index construction and batch inference for each framework; see the Examples README for instructions.

Note that the evaluation code is standardized across all frameworks to ensure fair comparison. Please refer to the Evaluation folder and the Evaluation README for detailed instructions on the evaluation.

Contributions to improve the benchmark website are welcome. Please contact the project team via GraphRAG@hotmail.com.
