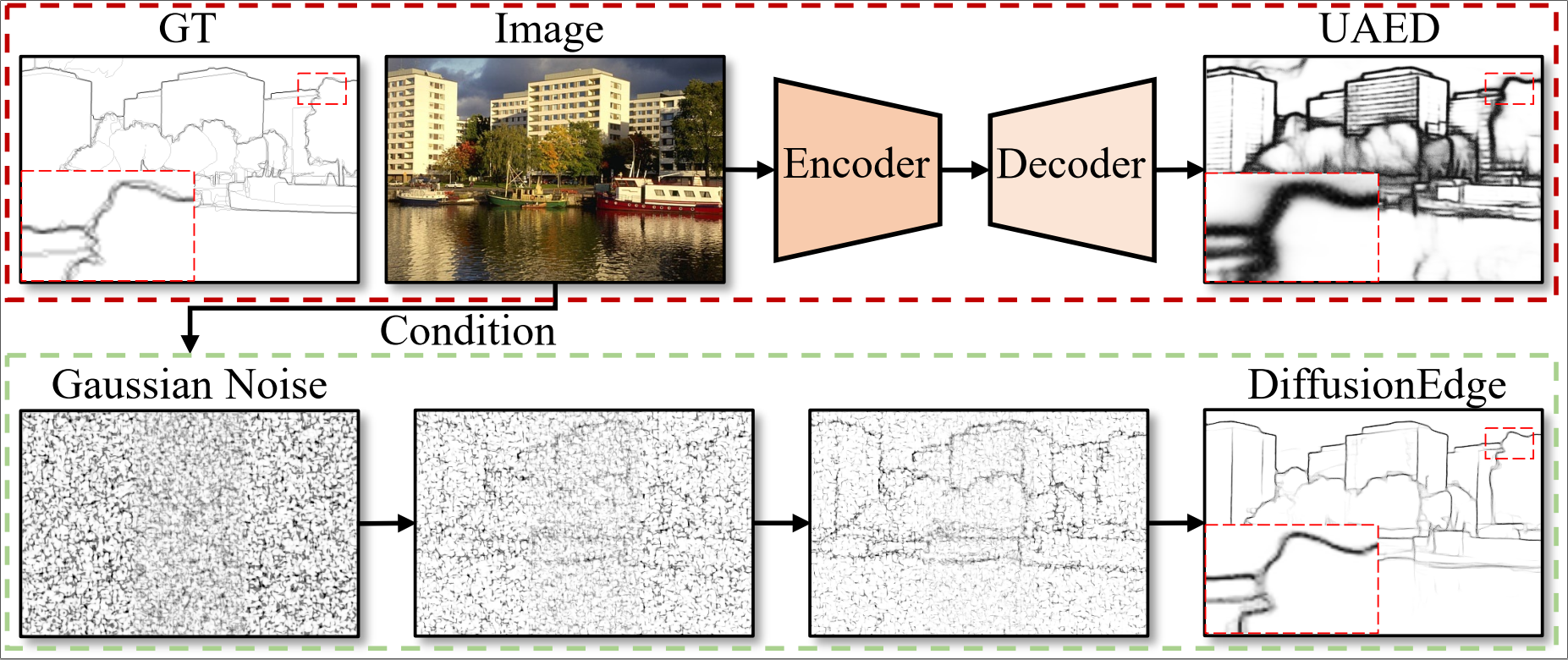

DiffusionEdge: Diffusion Probabilistic Model for Crisp Edge Detection (arXiv)

Yunfan Ye, Yuhang Huang, Renjiao Yi, Zhiping Cai, Kai Xu.

- We have released a real-time model trained on BSDS; see Real-time DiffusionEdge.

- We have created a WeChat group for discussion. Please scan the QR code with the WeChat app.

- 2023-12-09: The paper has been accepted to AAAI-2024.

- Uploaded the pretrained first-stage checkpoint (download).

- Uploaded pretrained weights and pre-computed results.

- Added a simple demo; see the demo instructions below.

- First commit.

- Create a conda environment and install PyTorch:

```bash
conda create -n diffedge python=3.9
conda activate diffedge
pip install torch==1.12.1+cu113 torchvision==0.13.1+cu113 torchaudio==0.12.1 --extra-index-url https://download.pytorch.org/whl/cu113
```

- Install the other packages:

```bash
pip install -r requirement.txt
```

- Prepare the accelerate config:

```bash
accelerate config
```
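The following short script can confirm that the pinned PyTorch build is the one actually being imported. It is a hypothetical helper (`check_torch_env` is not part of the repository). It assumes the `1.12.1+cu113` wheel from the command above, so it expects PyTorch 1.12.1 built against CUDA 11.3. It returns a report rather than raising, so it also runs in an environment where torch is missing.

```python
import importlib.util


def check_torch_env(expected_torch="1.12.1", expected_cuda="11.3"):
    """Compare the installed PyTorch build with the versions pinned above.

    Hypothetical helper: returns a dict instead of raising, so it also
    runs where torch is absent.
    """
    report = {
        "torch_installed": importlib.util.find_spec("torch") is not None,
        "accelerate_installed": importlib.util.find_spec("accelerate") is not None,
    }
    if not report["torch_installed"]:
        return report
    import torch  # imported lazily so the helper works without torch

    version = torch.__version__  # e.g. "1.12.1+cu113"
    report["torch_version"] = version
    report["torch_matches"] = version.split("+")[0] == expected_torch
    # CUDA version torch was compiled against (None for CPU-only builds).
    report["cuda_build"] = torch.version.cuda
    report["cuda_matches"] = torch.version.cuda == expected_cuda
    # Whether a GPU is actually usable from this process.
    report["cuda_available"] = torch.cuda.is_available()
    return report


if __name__ == "__main__":
    for key, value in check_torch_env().items():
        print(f"{key}: {value}")
```

If `cuda_available` is `False` while `cuda_build` is `"11.3"`, the wheel is probably fine and the problem is more likely the driver or GPU visibility.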

The training data should be organized as follows:

```
|-- $data_root
|   |-- image
|   |   |-- raw
|   |   |   |-- XXXXX.jpg
|   |   |   |-- XXXXX.jpg
|   |-- edge
|   |   |-- raw
|   |   |   |-- XXXXX.png
|   |   |   |-- XXXXX.png
```
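You can check the layout above before starting a long training run. The sketch below pairs every `image/raw/*.jpg` with the `edge/raw/*.png` that has the same file stem. It is a hypothetical helper, not the repository's data loader. It assumes that matching stems are how images and labels are paired, which the identical `XXXXX` names in the tree suggest but do not guarantee.

```python
from pathlib import Path


def check_train_layout(data_root):
    """Pair each image in image/raw with an edge map in edge/raw by file stem.

    Hypothetical helper: assumes images are .jpg and edge maps are .png,
    as in the tree above. Returns (pairs, missing_edges, orphan_edges).
    """
    root = Path(data_root)
    image_dir = root / "image" / "raw"
    edge_dir = root / "edge" / "raw"
    for d in (image_dir, edge_dir):
        if not d.is_dir():
            raise FileNotFoundError(f"expected directory: {d}")

    images = {p.stem: p for p in image_dir.glob("*.jpg")}
    edges = {p.stem: p for p in edge_dir.glob("*.png")}

    common = sorted(images.keys() & edges.keys())
    pairs = [(images[s], edges[s]) for s in common]
    missing_edges = sorted(images.keys() - edges.keys())  # images without labels
    orphan_edges = sorted(edges.keys() - images.keys())   # labels without images
    return pairs, missing_edges, orphan_edges
```

Non-empty `missing_edges` or `orphan_edges` lists usually mean a file was renamed or a label was exported with a different extension.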

The test data should be a flat directory of images:

```
|-- $data_root
|   |-- XXXXX.jpg
|   |-- XXXXX.jpg
```
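The test layout is flat, so a quick listing catches images that were accidentally left in sub-folders. The sketch below is a hypothetical helper (`list_test_images` is not in the repository). It assumes `.jpg` inputs as in the tree above and matches the extension case-insensitively.

```python
from pathlib import Path


def list_test_images(data_root, exts=(".jpg",)):
    """Return the test images directly under data_root, sorted by name.

    Hypothetical helper: the expected layout is flat, so sub-directories
    are reported as a warning instead of being searched recursively.
    """
    root = Path(data_root)
    images, nested = [], []
    for p in sorted(root.iterdir()):
        if p.is_dir():
            nested.append(p.name)  # not part of the expected flat layout
        elif p.suffix.lower() in exts:
            images.append(p)
    if nested:
        print(f"warning: ignoring sub-directories {nested}")
    return images
```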

- Download the pretrained weights:

| Dataset | ODS (SEval/CEval) | OIS (SEval/CEval) | AC | Weight | Pre-computed results |
|---|---|---|---|---|---|
| BSDS | 0.834 / 0.749 | 0.848 / 0.754 | 0.476 | download | download |
| NYUD | 0.761 / 0.732 | 0.766 / 0.738 | 0.846 | download | download |
| BIPED | 0.899 | 0.901 | 0.849 | download | download |
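ODS (optimal dataset scale) and OIS (optimal image scale) in the table are both F-measures over binarization thresholds. They differ only in where the best threshold is chosen. ODS uses one threshold for the whole dataset. OIS picks the best threshold per image.

The sketch below illustrates that aggregation step. It is not the authors' evaluation code. It assumes the per-image, per-threshold match counts `(cnt_r, sum_r, cnt_p, sum_p)` have already been produced by a boundary benchmark's tolerance-based matching, which is omitted here. It also does no interpolation between thresholds, and it does not model the SEval/CEval distinction.

```python
def f_measure(p, r):
    """Harmonic mean of precision and recall (0 when both are 0)."""
    return 0.0 if p + r == 0 else 2 * p * r / (p + r)


def prf(cnt_r, sum_r, cnt_p, sum_p):
    """Recall = matched GT pixels / GT pixels; precision = matched predictions / predictions."""
    r = cnt_r / sum_r if sum_r else 0.0
    p = cnt_p / sum_p if sum_p else 0.0
    return p, r, f_measure(p, r)


def ods_ois(counts):
    """counts[i][t] = (cnt_r, sum_r, cnt_p, sum_p) for image i at threshold index t.

    ODS: one threshold shared by the whole dataset (counts summed over images).
    OIS: the best threshold chosen per image, then counts summed at those thresholds.
    """
    n_thresh = len(counts[0])
    # ODS: aggregate counts per threshold over all images, keep the best F.
    ods = 0.0
    for t in range(n_thresh):
        totals = [sum(img[t][k] for img in counts) for k in range(4)]
        ods = max(ods, prf(*totals)[2])
    # OIS: per image, pick the threshold with the best F, then aggregate.
    best = [max(img, key=lambda c: prf(*c)[2]) for img in counts]
    totals = [sum(c[k] for c in best) for k in range(4)]
    ois = prf(*totals)[2]
    return ods, ois
```

Because OIS may choose a different threshold for each image, it is always at least as high as ODS. The table reflects this.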

- Put your images in a directory and run:

```bash
python demo.py --input_dir $your_input_dir$ --pre_weight $downloaded_weight_path$ --out_dir $output_dir$ --bs 8
```

A larger `--bs` (batch size) gives faster inference but uses more CUDA memory.
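To run the demo from a Python script, for example to loop over several input folders, you can build the command above as an argument list. This is a minimal sketch using only the four flags listed in the command (`build_demo_cmd` and `run_demo` are hypothetical names). It assumes you run it from the repository root, where `demo.py` lives.

```python
import subprocess
import sys


def build_demo_cmd(input_dir, pre_weight, out_dir, bs=8):
    """Assemble the demo.py call shown above as an argument list.

    Only the README's flags are used; passing a list (no shell) means
    paths containing spaces need no extra quoting.
    """
    return [
        sys.executable, "demo.py",
        "--input_dir", str(input_dir),
        "--pre_weight", str(pre_weight),
        "--out_dir", str(out_dir),
        "--bs", str(bs),
    ]


def run_demo(input_dir, pre_weight, out_dir, bs=8):
    # Must be called from the repository root; raises CalledProcessError on failure.
    subprocess.run(build_demo_cmd(input_dir, pre_weight, out_dir, bs), check=True)
```

If a run fails with a CUDA out-of-memory error, lowering `bs` is the first thing to try, as noted above.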

- Train the first-stage model (autoencoder):

```bash
accelerate launch train_vae.py --cfg ./configs/first_stage_d4.yaml
```

- Add the path of the final first-stage model weight to the config file `./configs/BSDS_train.yaml` (line 42), then train the latent diffusion edge model:

```bash
accelerate launch train_cond_ldm.py --cfg ./configs/BSDS_train.yaml
```
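Editing a config at a fixed line number is easy to get wrong by hand, so a small helper can patch the value and print the before/after line for a visual check. This is a hypothetical sketch (`set_config_line` is not in the repository). It assumes the referenced line has the form `key: value`. The actual key names are not documented in this README. The new value is written verbatim and any trailing comment on that line is dropped. Usage would look like `set_config_line("./configs/BSDS_train.yaml", 42, "/path/to/first_stage_weight")`, where the path is a placeholder. The same call with line 73 would apply to `./configs/BSDS_sample.yaml` in the sampling step.

```python
from pathlib import Path


def set_config_line(cfg_path, line_no, new_value):
    """Replace the value on a 1-based line of a YAML config, keeping key and indent.

    Hypothetical helper: assumes the line looks like `<indent><key>: <value>`.
    The value is written verbatim and a trailing comment on the line is lost.
    Returns the old line (without its newline).
    """
    path = Path(cfg_path)
    lines = path.read_text().splitlines(keepends=True)
    if not 1 <= line_no <= len(lines):
        raise IndexError(f"{cfg_path} has {len(lines)} lines, asked for {line_no}")
    old = lines[line_no - 1]
    key, sep, _ = old.partition(":")
    if not sep:
        raise ValueError(f"line {line_no} has no 'key: value' pair: {old!r}")
    ending = "\n" if old.endswith("\n") else ""
    lines[line_no - 1] = f"{key}: {new_value}{ending}"
    path.write_text("".join(lines))
    print(f"{cfg_path}:{line_no}: {old.rstrip()} -> {lines[line_no - 1].rstrip()}")
    return old.rstrip("\n")
```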

- Make sure your trained model weight path is set in the config file `./configs/BSDS_sample.yaml` (line 73), then run:

```bash
python sample_cond_ldm.py --cfg ./configs/BSDS_sample.yaml
```

Note that you can modify `sampling_timesteps` (line 11) to control inference speed: fewer sampling timesteps mean faster inference.
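To see why `sampling_timesteps` controls speed, consider an iterative sampler: it calls the denoising network once per step, so runtime grows roughly linearly with the number of steps. The sketch below is a generic illustration, not the repository's sampler. It assumes a continuous time range `[0, 1]` split into evenly spaced steps. The actual schedule used by `sample_cond_ldm.py` may differ.

```python
def sampling_schedule(sampling_timesteps, t_max=1.0, t_min=0.0):
    """Evenly spaced (t, t_next) pairs from t_max down to t_min.

    Illustrative only: each pair corresponds to one network evaluation,
    so the number of pairs (= sampling_timesteps) sets the sampling cost.
    """
    if sampling_timesteps < 1:
        raise ValueError("sampling_timesteps must be >= 1")
    step = (t_max - t_min) / sampling_timesteps
    times = [t_max - i * step for i in range(sampling_timesteps + 1)]
    # Pair consecutive time points: one denoising call per pair.
    return list(zip(times[:-1], times[1:]))
```

For example, `sampling_schedule(5)` yields five steps from 1.0 down to 0.0, while `sampling_schedule(50)` covers the same range with ten times as many network calls.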

- The real-time model has so far been tested only in the following environment; more details will be released soon.

| Environment | Version |
|---|---|
| TensorRT | 8.6.1 |
| CUDA | 11.6 |
| cuDNN | 8.7.0 |
| PyCUDA | 2024.1 |
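Because only this environment has been tested, it can help to compare your installed Python packages against the table before debugging anything else. This is a minimal sketch with hypothetical helper names. It assumes TensorRT and PyCUDA are installed as pip distributions named `tensorrt` and `pycuda`, which may not hold for every TensorRT installation method. CUDA and cuDNN are not pip packages, so they are left out and need to be checked separately, for example CUDA with `nvcc --version`.

```python
from importlib import metadata

# Versions from the table above (the only tested environment so far).
TESTED = {"tensorrt": "8.6.1", "pycuda": "2024.1"}


def installed_version(dist_name):
    """Installed version of a pip distribution, or None if it is absent."""
    try:
        return metadata.version(dist_name)
    except metadata.PackageNotFoundError:
        return None


def compare_to_tested(found, tested=TESTED):
    """Return {name: (found_version, tested_version, status)}.

    A found version counts as "ok" when it starts with every component of
    the tested one, so 8.6.1.6 matches 8.6.1 but 8.6.10 does not.
    """
    report = {}
    for name, want in tested.items():
        have = found.get(name)
        want_parts = want.split(".")
        if have is None:
            status = "missing"
        elif have.split(".")[: len(want_parts)] == want_parts:
            status = "ok"
        else:
            status = "differs"
        report[name] = (have, want, status)
    return report


if __name__ == "__main__":
    found = {name: installed_version(name) for name in TESTED}
    for name, (have, want, status) in compare_to_tested(found).items():
        print(f"{name}: found {have}, tested {want} -> {status}")
```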

Please follow this link to install TensorRT.

- Download the pretrained weight, then launch the real-time model:

```bash
python demo_trt.py --input_dir $your_input_dir$ --pre_weight $downloaded_weight_path$ --out_dir $output_dir$
```
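For a rough throughput figure on your own hardware, you can time the command above end to end. This is a hypothetical sketch (`time_trt_demo` is not in the repository) that uses only the flags shown above. It counts every regular file in the input directory as one image. Because the timing includes process start-up, engine loading and file I/O, it understates the per-image speed of the TensorRT engine itself.

```python
import subprocess
import sys
import time
from pathlib import Path


def time_trt_demo(input_dir, pre_weight, out_dir, script="demo_trt.py"):
    """Run the TensorRT demo once and return (elapsed seconds, images per second).

    Rough end-to-end timing: includes start-up, engine loading and I/O.
    Every regular file in input_dir is counted as one image.
    """
    n_images = sum(1 for p in Path(input_dir).iterdir() if p.is_file())
    cmd = [sys.executable, script,
           "--input_dir", str(input_dir),
           "--pre_weight", str(pre_weight),
           "--out_dir", str(out_dir)]
    start = time.perf_counter()
    subprocess.run(cmd, check=True)  # raises CalledProcessError on failure
    elapsed = time.perf_counter() - start
    fps = n_images / elapsed if elapsed > 0 else float("inf")
    return elapsed, fps
```

Running it on a larger input folder spreads the fixed start-up cost over more images, so the result gets closer to the steady-state speed.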

If you have any questions, please contact huangai@nudt.edu.cn.

Our code is built on DDM-Public; many thanks to its authors.

```bibtex
@inproceedings{ye2024diffusionedge,
  title={DiffusionEdge: Diffusion Probabilistic Model for Crisp Edge Detection},
  author={Yunfan Ye and Kai Xu and Yuhang Huang and Renjiao Yi and Zhiping Cai},
  year={2024},
  booktitle={AAAI}
}
```