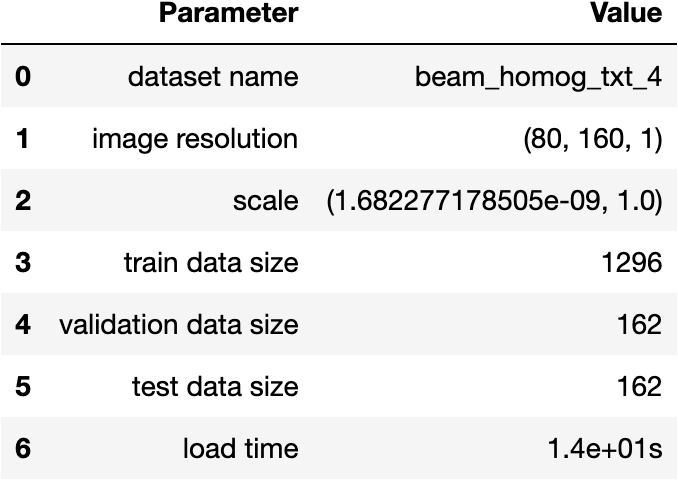

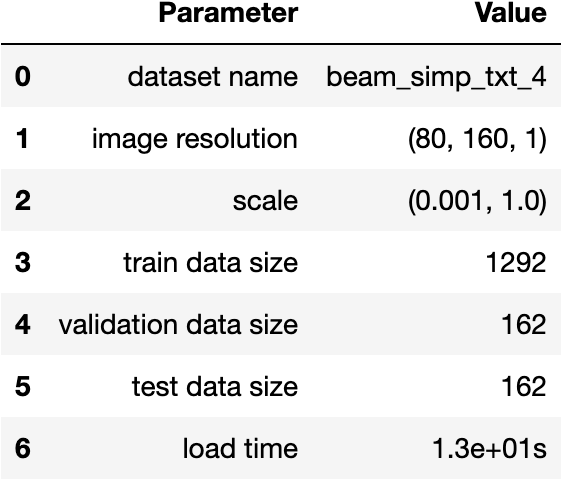

Each dataset is defined by its directory name and is located in the datasets folder.

Each dataset must contain all images for training, validation and testing.

Implemented input formats are:

- PNG images

- TXT files with the following information (in the same directory):

  - `Th.msh` file containing the mesh information

    | 1st column | 2nd column | 3rd column |
    |---|---|---|
    | nNodes | nElems | nBoundEdges |
    | x_1 | y_1 | edgeID_1 |
    | : | : | : |
    | x_nNodes | y_nNodes | edgeID_nNodes |
    | n1_1 | n2_1 | n3_1 |
    | : | : | : |
    | n1_nElems | n2_nElems | n3_nElems |
    | e_n1_1 | e_n2_1 | e_boundID_1 |
    | : | : | : |
    | e_n1_nBoundEdges | e_n2_nBoundEdges | e_boundID_nBoundEdges |

    First, a block of length `nNodes` with the nodal positions x and y for each node in the mesh (x, y, edgeID). Second, a block of length `nElems` with the connectivity matrix, that is, the nodes in each element (node1, node2, node3). Third, a block of length `nBoundEdges` with the information of each boundary edge (node1, node2, boundID).

  - `fileName.txt` files with element values (P0 solution, one constant value per element). These files must all refer to the same mesh in `Th.msh`.

    | 1st column | 2nd column | 3rd column | ... | nth column |
    |---|---|---|---|---|
    | nElems | | | | |
    | u_1 | u_2 | u_3 | u_4 | u_5 |
    | : | : | : | : | : |
    | ... | ... | ... | ... | u_nElems |
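The TXT layout described above can be parsed in a few lines. The following is a minimal sketch, assuming whitespace-separated values, exactly three columns per mesh row as listed, and a values file that starts with its own `nElems` count. The function names `read_mesh` and `read_element_values` and the sample data are hypothetical and not part of the repository.

```python
import io


def read_mesh(stream):
    """Parse the header plus the node, element and boundary-edge blocks."""
    tokens = stream.read().split()
    n_nodes, n_elems, n_bound = (int(t) for t in tokens[:3])
    body = tokens[3:]
    if len(body) != 3 * (n_nodes + n_elems + n_bound):
        raise ValueError("token count does not match the header")
    # Every row after the header has three columns.
    rows = [body[i:i + 3] for i in range(0, len(body), 3)]
    nodes = [(float(x), float(y), int(e)) for x, y, e in rows[:n_nodes]]
    elems = [tuple(int(n) for n in r) for r in rows[n_nodes:n_nodes + n_elems]]
    edges = [tuple(int(n) for n in r) for r in rows[n_nodes + n_elems:]]
    return nodes, elems, edges


def read_element_values(stream):
    """Parse a P0 solution: the element count followed by one value per element."""
    tokens = stream.read().split()
    n_elems = int(tokens[0])
    values = [float(t) for t in tokens[1:]]
    if len(values) != n_elems:
        raise ValueError("number of values does not match nElems")
    return values


# Hypothetical sample: a unit square split into two triangles.
SAMPLE_MESH = """4 2 4
0.0 0.0 1
1.0 0.0 1
1.0 1.0 1
0.0 1.0 1
1 2 3
1 3 4
1 2 1
2 3 2
3 4 3
4 1 4
"""
SAMPLE_VALUES = "2\n0.5 1.5\n"

nodes, elems, edges = read_mesh(io.StringIO(SAMPLE_MESH))
values = read_element_values(io.StringIO(SAMPLE_VALUES))
print(len(nodes), len(elems), len(edges), values)
```

For files on disk, pass an open file handle instead of `io.StringIO`. Checking that `len(values)` equals `len(elems)` is a quick way to confirm that a solution file belongs to the mesh.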

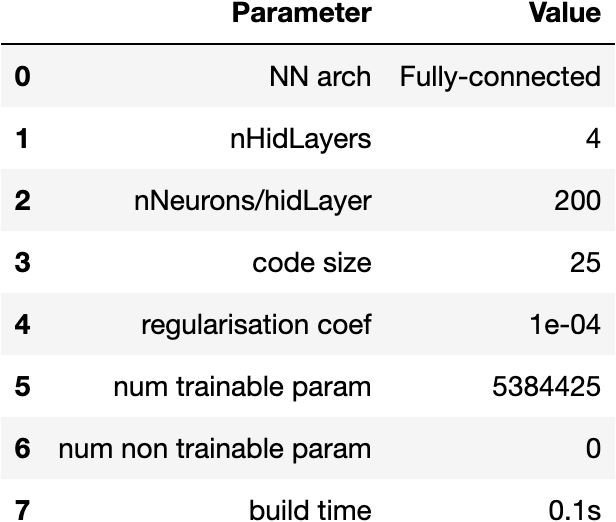

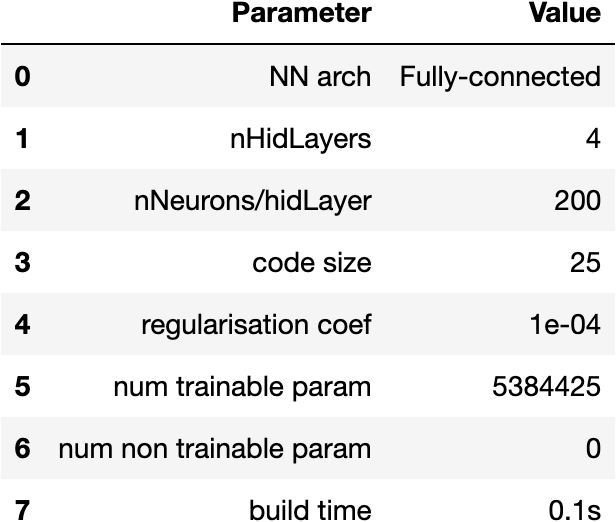

FCNN architecture for the beam_homog_x4 dataset

- Input layer - 80x160 = 12800 neurons - 0 train params

- Hidden layer - 200 neurons - 200*12800 + 200 = 2560200 train params

- Hidden layer - 200 neurons - 200*200 + 200 = 40200 train params

- Hidden layer - 200 neurons - 200*200 + 200 = 40200 train params

- Hidden layer - 200 neurons - 200*200 + 200 = 40200 train params

- Latent space (code) - max size 25 - 25*200 + 25 = 5025 train params

- Hidden layer - 200 neurons - 200*25 + 200 = 5200 train params

- Hidden layer - 200 neurons - 200*200 + 200 = 40200 train params

- Hidden layer - 200 neurons - 200*200 + 200 = 40200 train params

- Hidden layer - 200 neurons - 200*200 + 200 = 40200 train params

- Output layer - 80x160 = 12800 neurons - 12800*200 + 12800 = 2572800 train params
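The per-layer counts above follow from the usual rule for a fully connected layer: one weight per input-output pair plus one bias per output neuron. The sketch below recomputes them from the layer widths listed above; the helper name `dense_params` is illustrative, and the total is derived here rather than taken from the original text.

```python
def dense_params(n_in, n_out):
    """Trainable parameters of a dense layer: weights plus biases."""
    return n_in * n_out + n_out


# Layer widths of the FCNN autoencoder, input to output.
fcnn_sizes = [80 * 160, 200, 200, 200, 200, 25, 200, 200, 200, 200, 80 * 160]

# One entry per trainable layer (the input layer has no parameters).
fcnn_params = [dense_params(a, b) for a, b in zip(fcnn_sizes, fcnn_sizes[1:])]
fcnn_total = sum(fcnn_params)

for (a, b), p in zip(zip(fcnn_sizes, fcnn_sizes[1:]), fcnn_params):
    print(f"{a:>6} -> {b:<6} {p:>9} train params")
print("total:", fcnn_total)
```

Almost all parameters sit in the first and last layers, which connect the 12800 pixels to 200 neurons.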

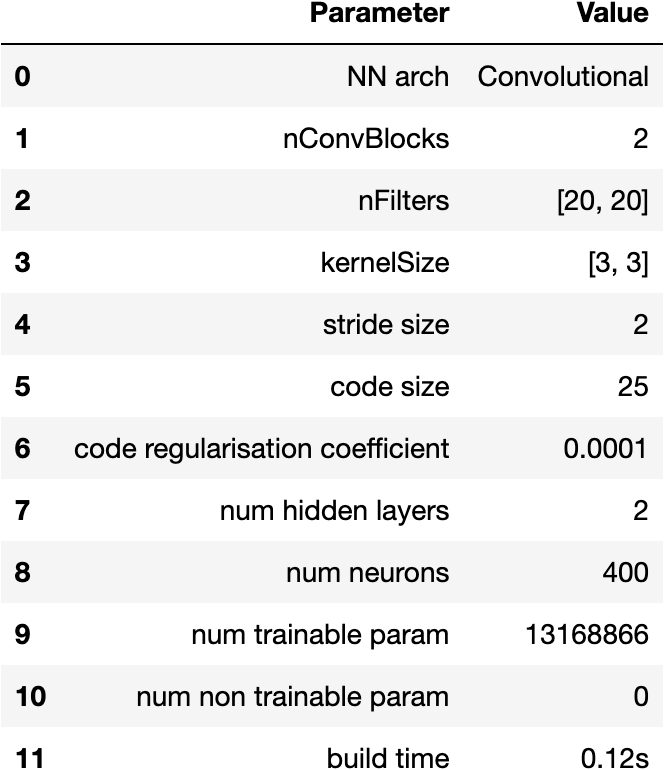

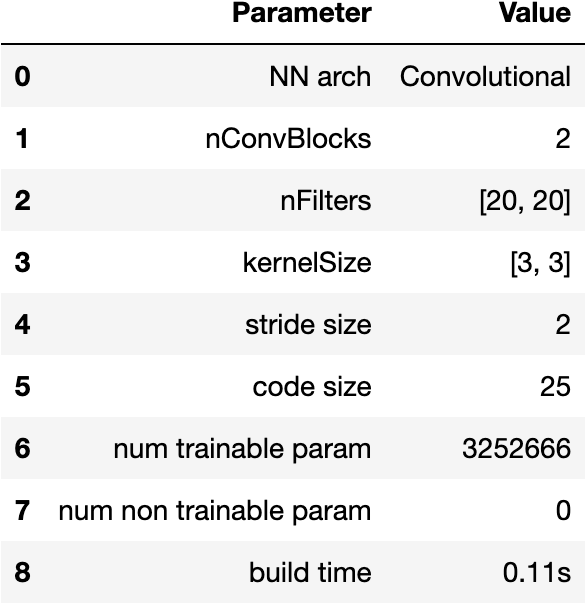

CNN architecture for the beam_homog_x4 dataset

- Input layer - 80x160x1 = 12800 neurons - 0 train params

- Conv layer with 20 filters of kernel 3 - 80x160x20 (each of the 20 filters is 3x3) - 20x9 + 20 = 200 train params

- MaxPool layer with stride 2 - 40x80x20 - 0 train params

- Conv layer with 20 filters of kernel 3 - 40x80x20 (each of the 20 filters is 3x3) - 180x20 + 20 = 3620 train params

- MaxPool layer with stride 2 - 20x40x20 - 0 train params

- Hidden layer - 20x40x20 = 16000 neurons - 0 train params

- Hidden layer - 400 neurons - 400*16000 + 400 = 6400400 train params

- Hidden layer - 400 neurons - 400*400 + 400 = 160400 train params

- Latent space (code) - max size 25 - 25*400 + 25 = 10025 train params

- Hidden layer - 400 neurons - 400*25 + 400 = 10400 train params

- Hidden layer - 400 neurons - 400*400 + 400 = 160400 train params

- Hidden layer - 20x40x20 = 16000 neurons - 16000*400 + 16000 = 6416000 train params

- Transposed conv layer with 20 filters, kernel 3 and stride 2 - 40x80x20 - 20x9x20 + 20 = 3620 train params

- Transposed conv layer with 20 filters, kernel 3 and stride 2 - 80x160x20 - 20x9x20 + 20 = 3620 train params

- Output layer - 80x160x1 = 12800 neurons - 20x9 + 1 = 181 train params
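The convolutional counts above use kernel height x kernel width x input channels x filters, plus one bias per filter; a transposed convolution has the same number of parameters. The sketch below recomputes the list, assuming the output layer is a 3x3 convolution from 20 channels to 1 (which is what its count of 181 implies). The helper names are illustrative, and the total is derived here rather than taken from the original text.

```python
def dense_params(n_in, n_out):
    """Trainable parameters of a dense layer: weights plus biases."""
    return n_in * n_out + n_out


def conv_params(kernel, c_in, c_out):
    """Trainable parameters of a (transposed) conv layer with a square kernel."""
    return kernel * kernel * c_in * c_out + c_out


flat = 20 * 40 * 20  # flattened size after the second pooling layer: 16000

cnn_layers = [
    ("conv 1 -> 20", conv_params(3, 1, 20)),
    ("conv 20 -> 20", conv_params(3, 20, 20)),
    ("dense 16000 -> 400", dense_params(flat, 400)),
    ("dense 400 -> 400", dense_params(400, 400)),
    ("latent 400 -> 25", dense_params(400, 25)),
    ("dense 25 -> 400", dense_params(25, 400)),
    ("dense 400 -> 400", dense_params(400, 400)),
    ("dense 400 -> 16000", dense_params(400, flat)),
    ("transposed conv 20 -> 20", conv_params(3, 20, 20)),
    ("transposed conv 20 -> 20", conv_params(3, 20, 20)),
    ("output 20 -> 1 (assumed 3x3 conv)", conv_params(3, 20, 1)),
]
cnn_total = sum(p for _, p in cnn_layers)

for name, p in cnn_layers:
    print(f"{name:<36} {p:>9} train params")
print("total:", cnn_total)
```

The pooling and flattening steps add no parameters, so they are left out of the list. The two dense layers next to the 16000-element flattened tensor account for nearly all of the total.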

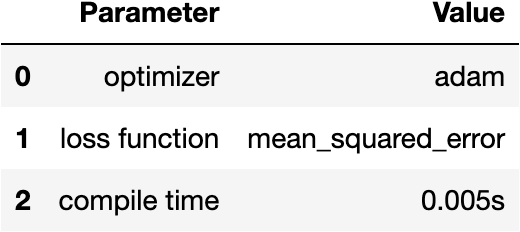

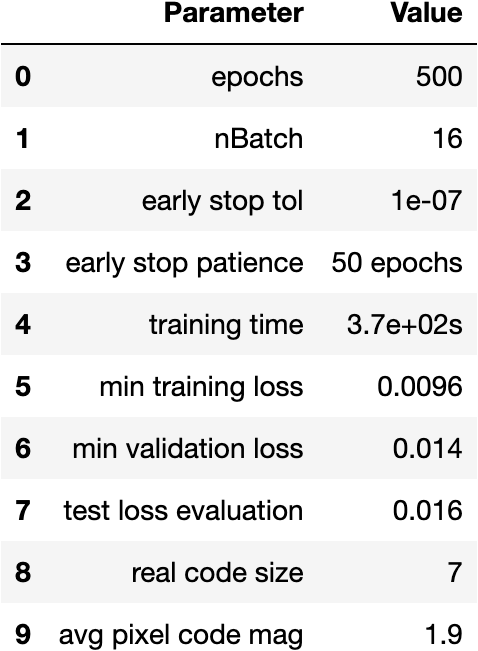

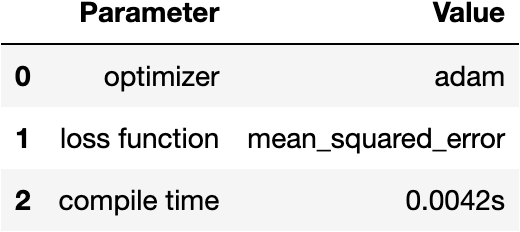

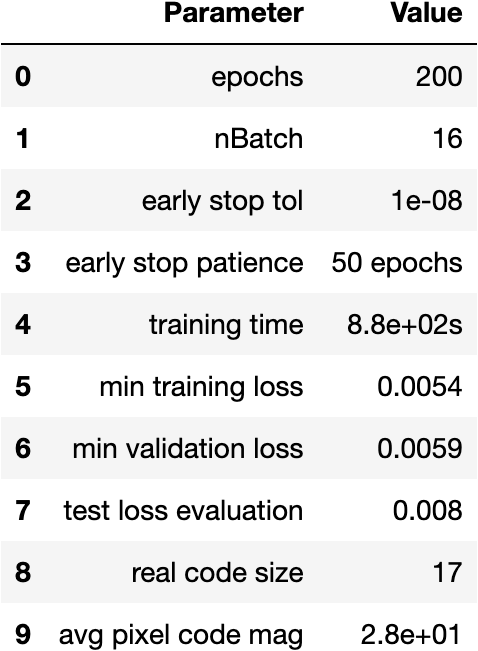

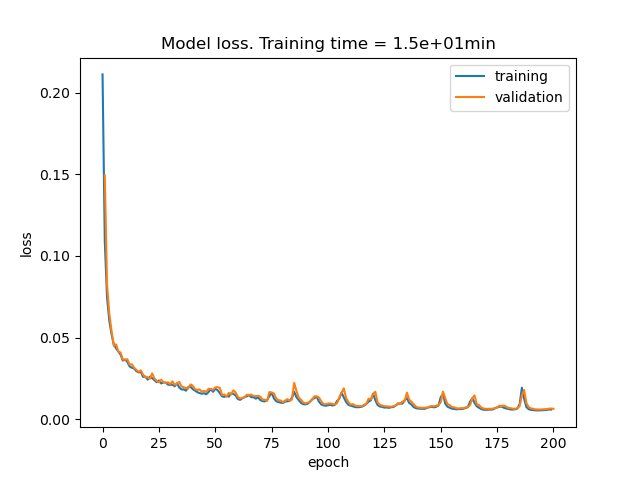

Training parameters and loss functions obtained during training.

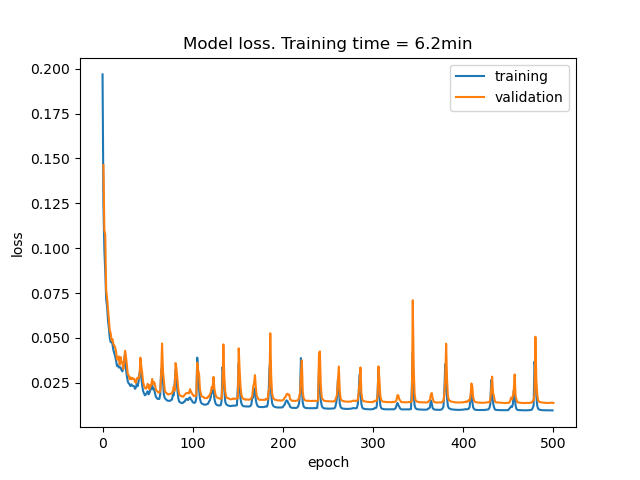

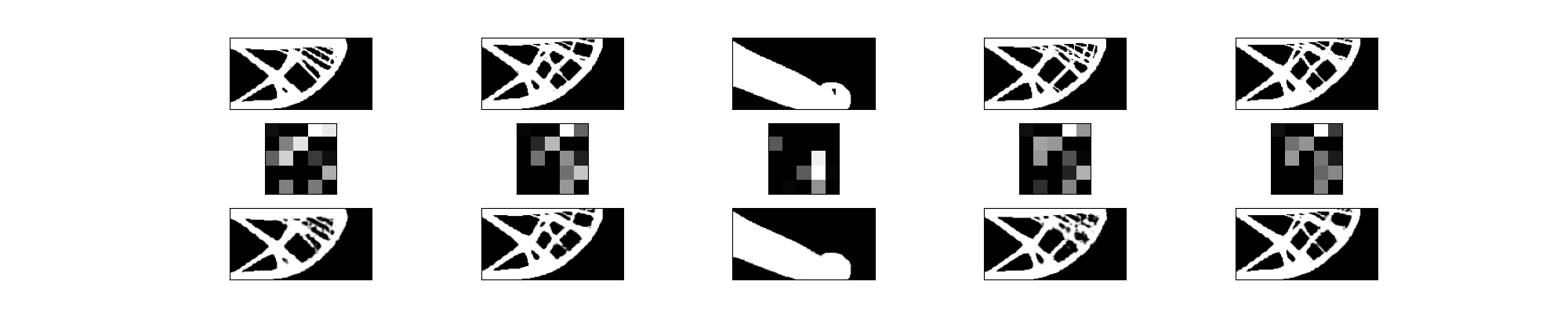

Results obtained using the autoencoder.

The first row shows the original images and the last row shows the recovered images after passing through the autoencoder. The middle row shows the latent space (code) representation, where the information is compressed while preserving accuracy.

| Layer | Resolution | Size | Compression rate |
|---|---|---|---|
| Original image | 80x160x1 | 12800 | 1 |
| Latent space | 5x5 | 25 (only 7 non-zero) | ≈ 1800 (12800 / 7) |
| Reconstructed image | 80x160x1 | 12800 | 1 |

Training parameters and loss functions obtained during training.

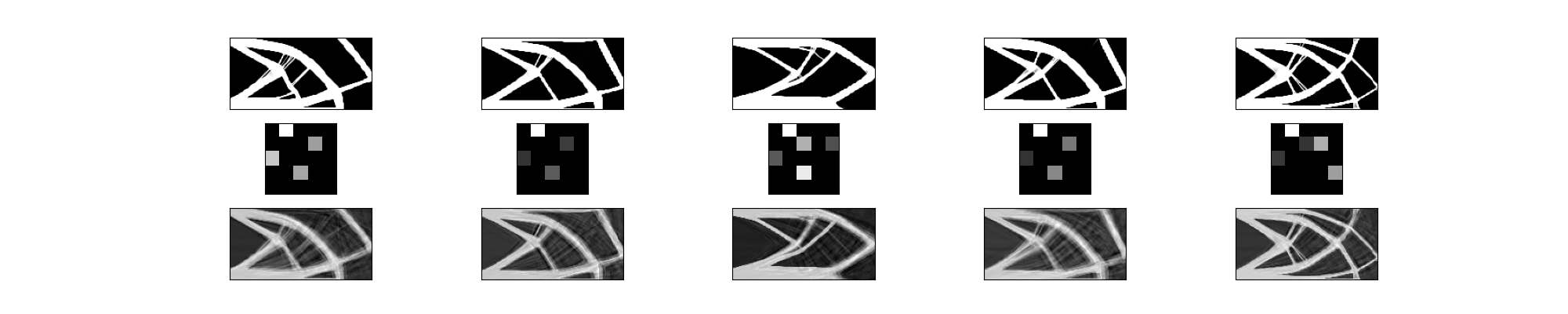

Results obtained using the autoencoder.

The first row shows the original images and the last row shows the recovered images after passing through the autoencoder. The middle row shows the latent space (code) representation, where the information is compressed while preserving accuracy.

| Layer | Resolution | Size | Compression rate |
|---|---|---|---|
| Original image | 80x160x1 | 12800 | 1 |
| Latent space | 5x5 | 25 (only 17 non-zero) | ≈ 750 (12800 / 17) |
| Reconstructed image | 80x160x1 | 12800 | 1 |
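The compression rates in the two tables match the ratio between the number of input pixels and the number of non-zero latent entries. The sketch below shows that calculation, assuming this is how the rate is defined; the function name `compression_rate` and the example codes are hypothetical, not values from the trained models.

```python
def compression_rate(original_size, code):
    """Ratio of input size to the number of non-zero entries in the code."""
    nonzero = sum(1 for v in code if v != 0)
    if nonzero == 0:
        raise ValueError("the code has no non-zero entries")
    return original_size / nonzero


original_size = 80 * 160  # 12800 pixels

# Hypothetical 25-entry codes with 7 and 17 active entries respectively.
code_a = [0.3] * 7 + [0.0] * 18
code_b = [0.3] * 17 + [0.0] * 8

rate_a = compression_rate(original_size, code_a)
rate_b = compression_rate(original_size, code_b)
print(round(rate_a), round(rate_b))
```

Counting all 25 latent entries instead of only the non-zero ones would give a rate of 12800 / 25 = 512 in both cases.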