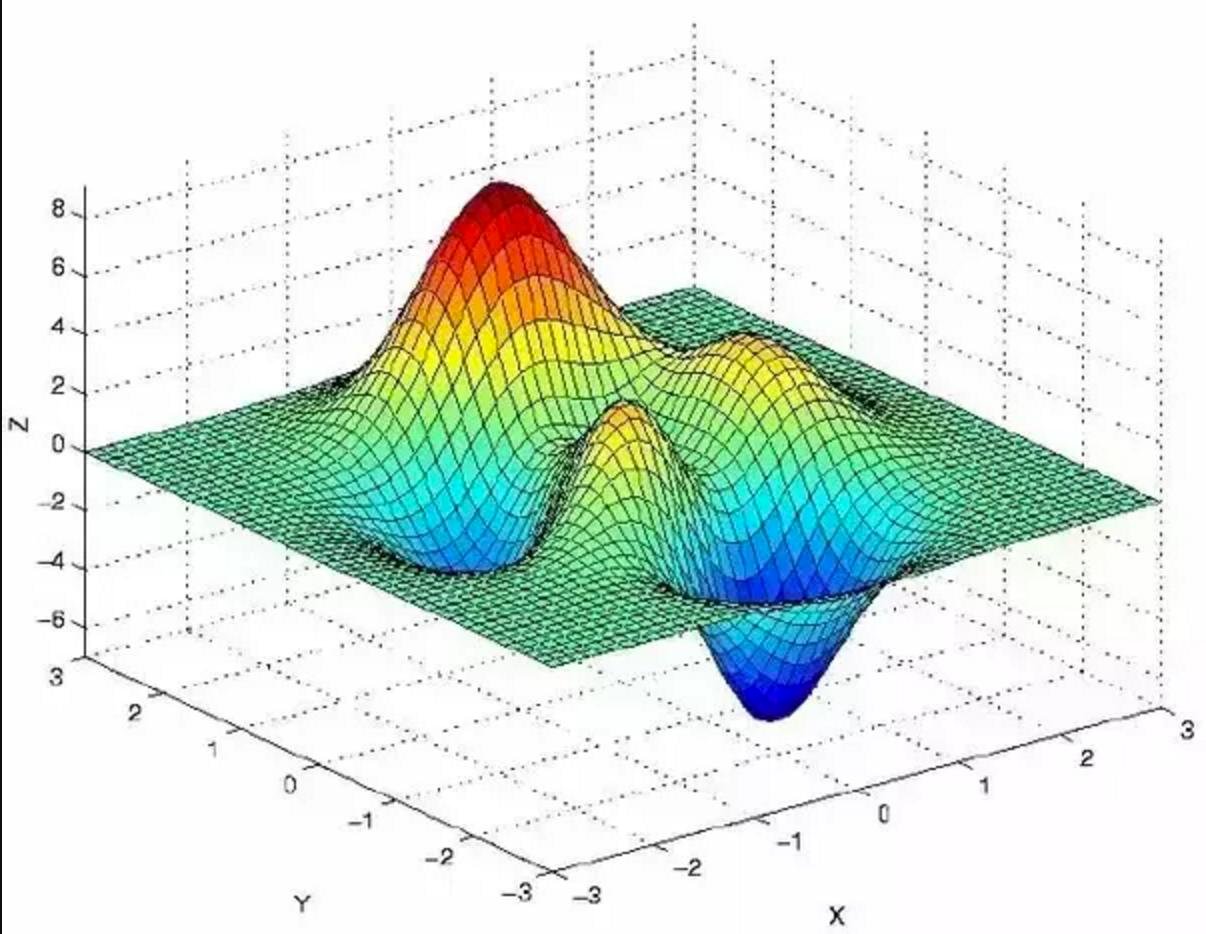

Gradient descent is a first-order iterative optimization algorithm for finding the minimum of a function. To find a local minimum of a function using gradient descent, one takes steps proportional to the negative of the gradient (or approximate gradient) of the function at the current point.
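The update rule described above is $x_{k+1} = x_k - \eta \nabla f(x_k)$, where $\eta$ is the learning rate. Below is a minimal NumPy sketch of that rule, assuming a fixed learning rate and an analytic gradient. It fits a line to a tiny made-up dataset by minimizing mean squared error. The function names (`gradient_descent`, `mse_grad`), the dataset and the hyperparameters are illustrative assumptions, not code taken from the linked repository.

```python
import numpy as np

def gradient_descent(grad, x0, learning_rate=0.1, n_steps=1000, tol=1e-8):
    """Minimize a function given its gradient `grad`, starting from `x0`.

    Each iteration moves against the gradient: x <- x - learning_rate * grad(x).
    Stops early once a step is shorter than `tol`.
    """
    x = np.asarray(x0, dtype=float)
    for _ in range(n_steps):
        step = learning_rate * grad(x)
        x = x - step
        if np.linalg.norm(step) < tol:
            break
    return x

# Hypothetical small dataset lying exactly on the line y = 2x + 1
X = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
y = 2.0 * X + 1.0

def mse_grad(params):
    """Gradient of the mean squared error of w*x + b with respect to (w, b)."""
    w, b = params
    residual = w * X + b - y
    return np.array([2.0 * np.mean(residual * X), 2.0 * np.mean(residual)])

w, b = gradient_descent(mse_grad, x0=[0.0, 0.0], learning_rate=0.05, n_steps=5000)
print(f"w = {w:.3f}, b = {b:.3f}")  # prints: w = 2.000, b = 1.000
```

A fixed learning rate keeps the sketch short, but it must be chosen with care: for this dataset, values above roughly 0.15 make the iterates diverge instead of converging.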

GustavoBarros11/implementing-gradient-descent: Implementing the Gradient Descent Algorithm on a small dataset (Jupyter Notebook).