Authors: Jingfeng Yao, Xinggang Wang📧, Lang Ye, Wenyu Liu

Institute: School of EIC, HUST

(📧) corresponding author

- 2023/07/01: We release a new version that enables text input and transparency correction!

- 2023/06/08: We release the arXiv tech report!

- 2023/06/08: We release the source code of Matte Anything!

This project is still under development, and you can try the early version now. Thanks for your attention. If you like Matte Anything, you may also like ViTMatte, the foundation work it builds on.

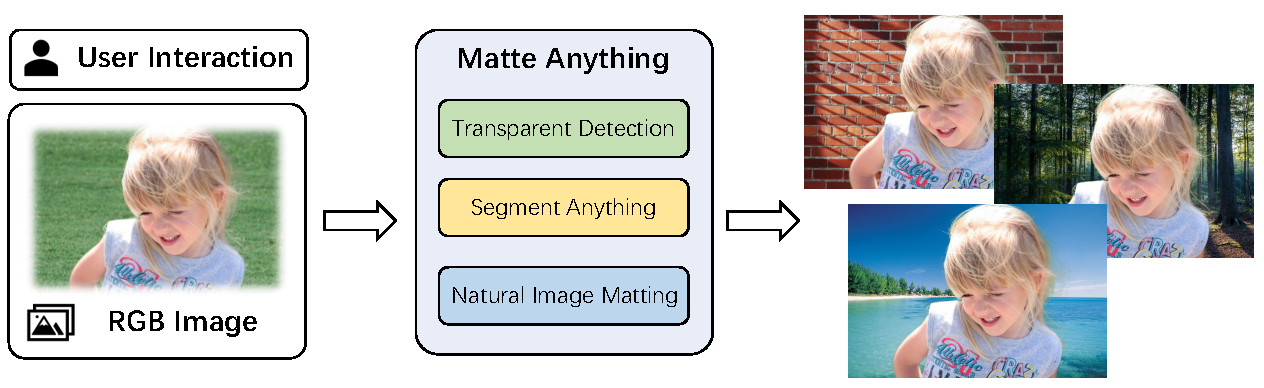

We propose Matte Anything (MatAny), an interactive natural image matting model. It produces high-quality alpha mattes from various simple hints. The key insight of MatAny is to generate a pseudo trimap automatically from contour and transparency predictions. We leverage task-specific vision models to enhance the performance of natural image matting.

- Matte Anything with Simple Interaction

- High Quality Matting Results

- Ability to Process Transparent Objects

Try Matte Anything with our web UI!

Install Segment Anything as follows:

pip install git+https://github.com/facebookresearch/segment-anything.git

Install ViTMatte as follows:

python -m pip install 'git+https://github.com/facebookresearch/detectron2.git'

pip install -r requirements.txt

Install GroundingDINO as follows:

cd Matte-Anything

git clone https://github.com/IDEA-Research/GroundingDINO.git

cd GroundingDINO

pip install -e .

Download the pretrained models SAM_vit_h, ViTMatte_vit_b, and GroundingDINO-T, and put them in ./pretrained.
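Before launching, it can help to confirm that the downloaded checkpoints are actually in `./pretrained`. The following is a minimal sketch and not part of the repository: the helper `missing_checkpoints` is hypothetical, and the file names in the example are placeholders, since the real checkpoint file names depend on what you downloaded.

```python
from pathlib import Path


def missing_checkpoints(directory, expected_names):
    """Return the names from expected_names that are not files in directory."""
    root = Path(directory)
    return [name for name in expected_names if not (root / name).is_file()]


if __name__ == "__main__":
    # Placeholder file names (assumptions): replace them with the files you downloaded.
    expected = [
        "sam_checkpoint.pth",
        "vitmatte_checkpoint.pth",
        "groundingdino_checkpoint.pth",
    ]
    missing = missing_checkpoints("./pretrained", expected)
    if missing:
        print("Missing from ./pretrained:", ", ".join(missing))
    else:
        print("All expected checkpoints found.")
```

Passing the expected names as an argument keeps the check independent of any particular naming scheme.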

python matte_anything.py

- Upload the image and click on it (default: `foreground point`).

- Click `Start!`.

- Modify `erode_kernel_size` and `dilate_kernel_size` for a better trimap (optional).
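To give a feel for what the two kernel sizes control, here is a dependency-free sketch of the common way to turn a binary mask into a trimap: erode the mask to get confident foreground, dilate it to bound the unknown band, and mark everything outside as background. This is an illustration under assumptions, not the repository's implementation: the function names, the 0/128/255 encoding, and the restriction to odd kernel sizes are choices made here, and MatAny's transparency correction is not modeled. In practice one would typically use an image library's erosion and dilation routines instead of pure Python loops.

```python
def _window_reduce(mask, kernel_size, reduce_fn, pad_value):
    """Apply reduce_fn over a square window centered on each pixel.

    Assumes an odd kernel_size; pixels outside the image count as pad_value.
    """
    h, w = len(mask), len(mask[0])
    r = kernel_size // 2
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = [
                mask[yy][xx] if 0 <= yy < h and 0 <= xx < w else pad_value
                for yy in range(y - r, y + r + 1)
                for xx in range(x - r, x + r + 1)
            ]
            out[y][x] = reduce_fn(window)
    return out


def make_trimap(mask, erode_kernel_size=3, dilate_kernel_size=3):
    """Build a trimap (0 = background, 128 = unknown, 255 = foreground)
    from a binary mask given as a list of rows of 0/1 values."""
    # Erosion keeps only pixels whose whole neighborhood is foreground.
    eroded = _window_reduce(mask, erode_kernel_size, min, 1)
    # Dilation marks pixels with any foreground in their neighborhood.
    dilated = _window_reduce(mask, dilate_kernel_size, max, 0)
    return [
        [255 if e else (128 if d else 0) for e, d in zip(e_row, d_row)]
        for e_row, d_row in zip(eroded, dilated)
    ]


if __name__ == "__main__":
    # A 7x7 mask with a 3x3 foreground square in the middle.
    mask = [[1 if 2 <= y <= 4 and 2 <= x <= 4 else 0 for x in range(7)]
            for y in range(7)]
    for row in make_trimap(mask, erode_kernel_size=3, dilate_kernel_size=3):
        print(row)
    # The middle row prints as [0, 128, 128, 255, 128, 128, 0].
```

In this sketch, a larger erode kernel shrinks the confident foreground and a larger dilate kernel pushes the background boundary outward, so both widen the unknown band that the matting model has to resolve.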


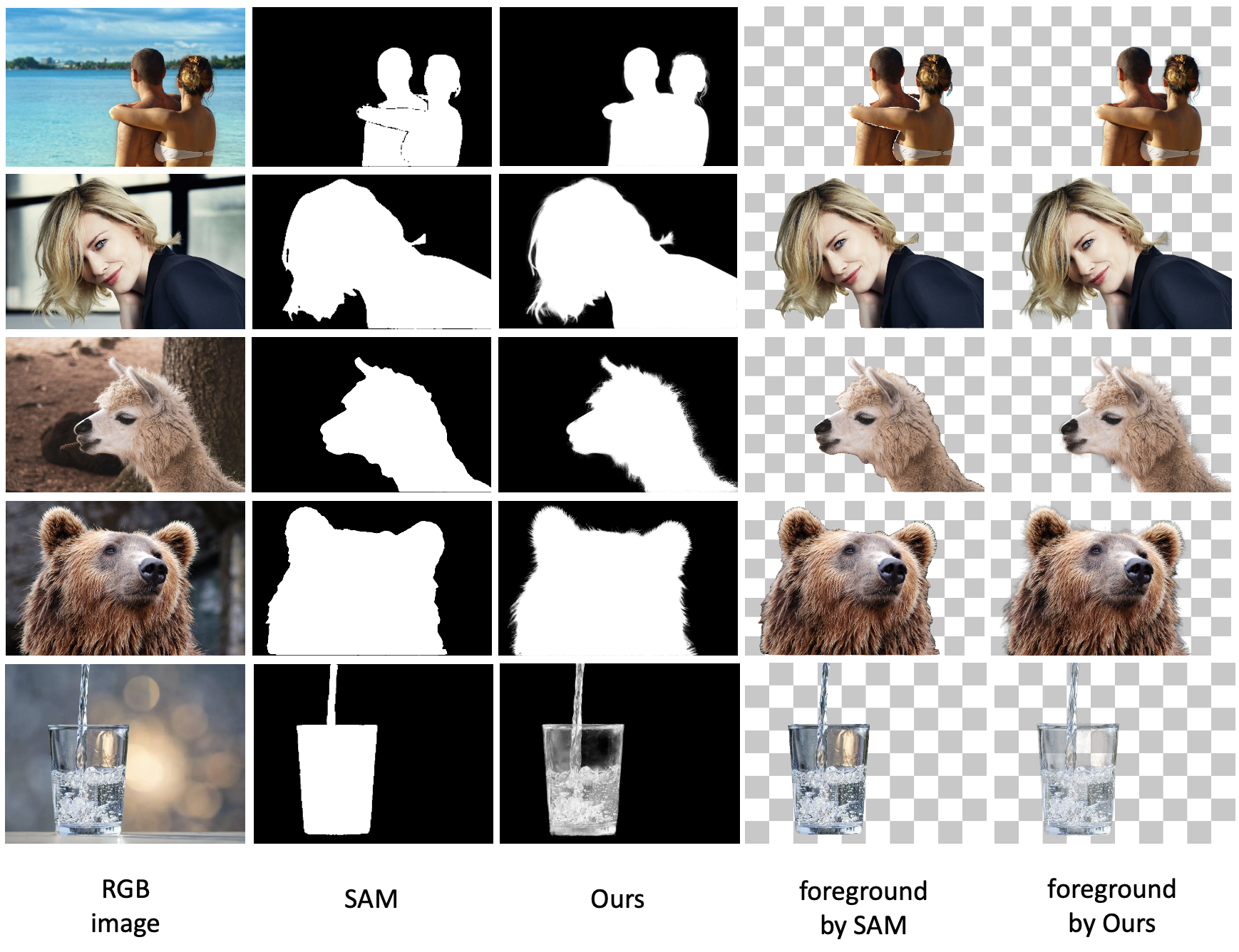

Visualization of SAM and MatAny on real-world data from AM-2K and P3M-500.

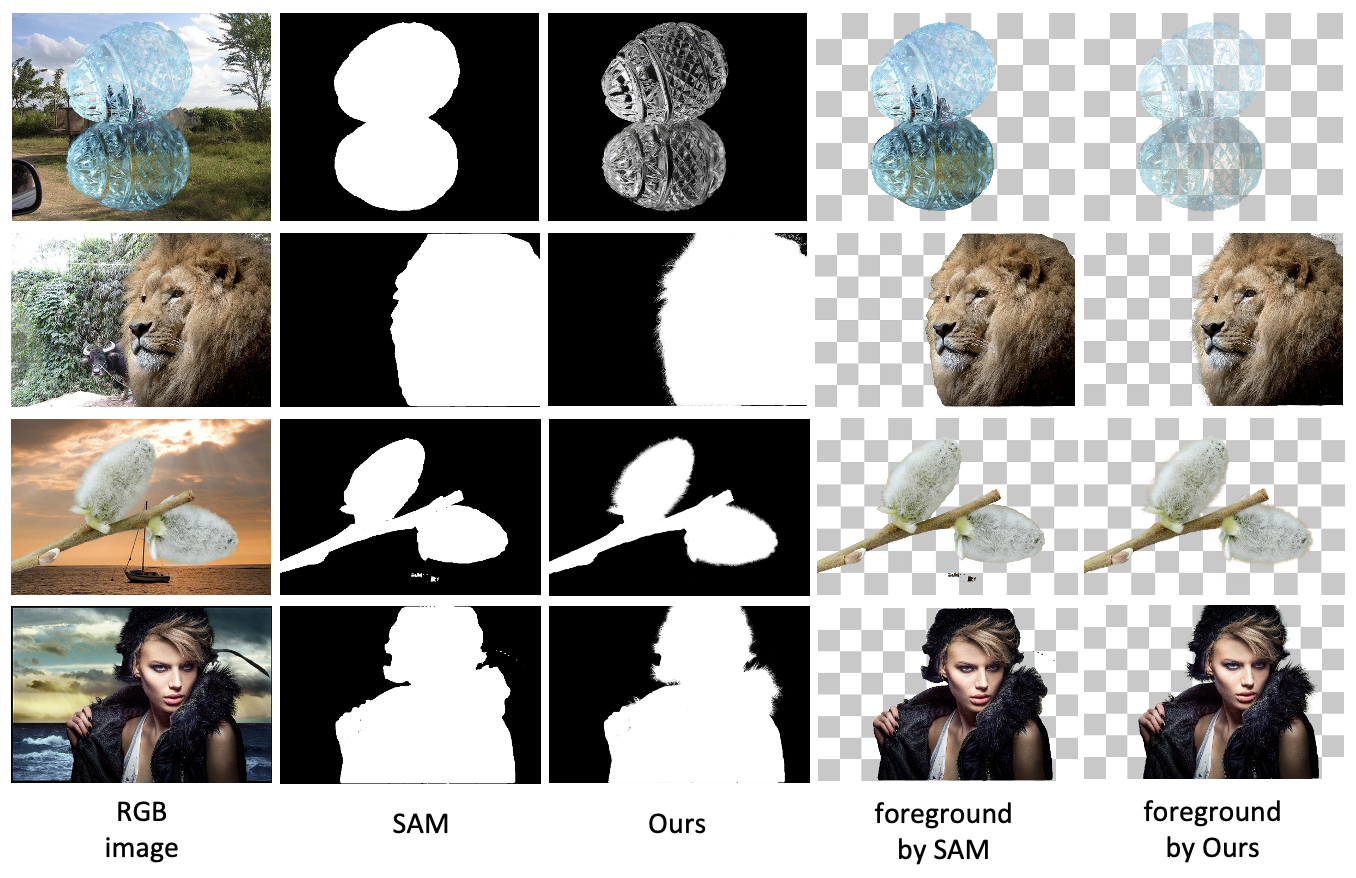

Visualization of SAM and MatAny on Composition-1k.


- adjustable trimap generation

- arXiv tech report

- support user transparency correction

- support text input

- add example data

- finetune ViTMatte for better performance

Our repo is built upon Segment Anything, GroundingDINO, and ViTMatte. Thanks to the authors for their work.

@article{matte_anything,
  title={Matte Anything: Interactive Natural Image Matting with Segment Anything Models},
  author={Yao, Jingfeng and Wang, Xinggang and Ye, Lang and Liu, Wenyu},
  journal={arXiv preprint arXiv:2306.04121},
  year={2023}
}