author: Andrew Gyakobo

This project was made to showcase an example of multi-threading in the C programming language. More specifically, in this project we'll be approximating the value of π.

Multi-threading is a programming concept where multiple threads are spawned by a process to execute tasks concurrently. Each thread runs independently but shares the process's resources like memory and file handles. Multi-threading can lead to more efficient use of resources, faster execution of tasks, and improved performance in multi-core systems.

- Thread: A lightweight process or the smallest unit of execution within a process.

- Concurrency vs. Parallelism: Concurrency means multiple threads make progress during overlapping periods of time (possibly by taking turns on a single core), while parallelism means multiple threads literally run at the same instant on different cores.

- Synchronization: Mechanisms that control how multiple threads access shared resources.

- Thread Safety: Ensuring that shared data stays correct when several threads use it, typically by letting only one thread modify it at a time.

We'll be utilizing the function $f(x) = \frac{4}{1+x^2}$, whose integral over the interval $[0, 1]$ is exactly π.
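For reference, the integral of $f(x) = \frac{4}{1+x^2}$ over $[0, 1]$ equals π because the antiderivative of $\frac{1}{1+x^2}$ is $\arctan x$:

$$\int_0^1 \frac{4}{1+x^2}\,dx = 4\Big[\arctan x\Big]_0^1 = 4\left(\frac{\pi}{4} - 0\right) = \pi$$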

Note

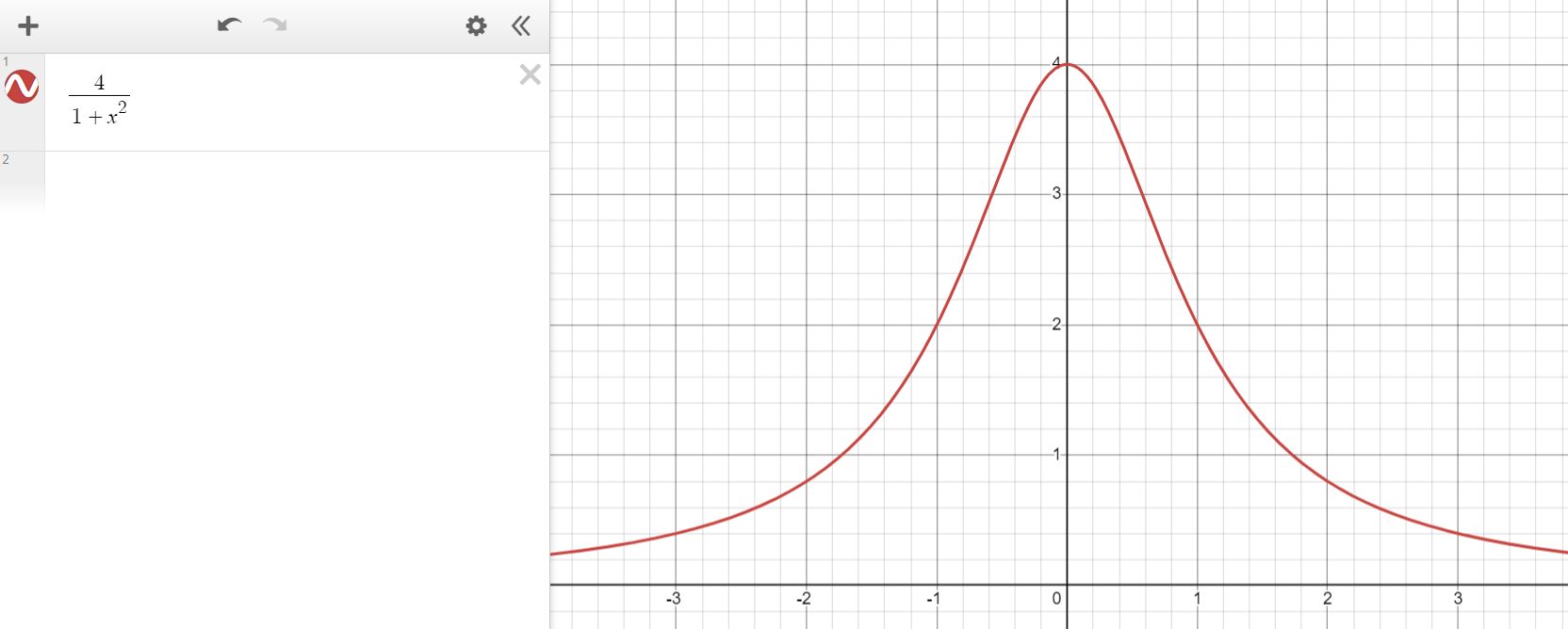

The graph below showcases the integrated function.

There is of course a minor issue with this calculation. In particular, as the

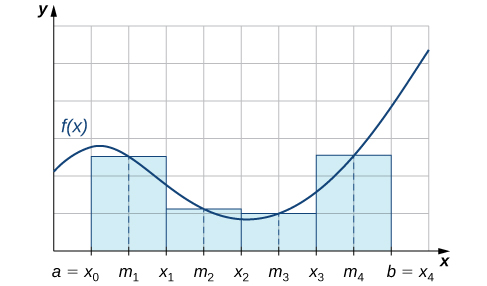

Here is a more specific example of the aforementioned computation. It isn't a plot of the function above, but it communicates the same idea:

As you can see, numerical integration is just a summation of the areas of all the rectangles fitted under the curve. This is roughly what is being calculated:
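Written out for this project's function, and assuming the rectangles cover $[0, 1]$ with `step` $= \Delta x = 1/N$ where $N$ is `num_steps` (the snippets in this README do not show how `step` is set), the sum is the midpoint rule, matching the loop's `x = (i + 0.5) * step`:

$$\pi = \int_0^1 \frac{4}{1+x^2}\,dx \;\approx\; \sum_{i=0}^{N-1} \frac{4}{1+x_i^2}\,\Delta x, \qquad x_i = \left(i + \tfrac{1}{2}\right)\Delta x, \qquad \Delta x = \frac{1}{N}$$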

From here we can clearly see that the smaller the width of each rectangle, the closer the sum of their areas gets to the true area under the curve.

Hence, a viable way to use as many rectangles as possible (and therefore get a more accurate result) is to exploit parallelism and multi-core processing with OpenMP, through the `<omp.h>` header.

- From the get-go, the code greets us with two include statements:

```c
#include <omp.h>
#include <stdio.h>
```

- Furthermore, we define `const int num_steps` (the number of rectangles whose areas will be summed) and then `double step` (the dx, i.e. the width of each rectangle).

- Now, entering the main scope of our program, we open a parallel region with `#pragma omp parallel`: a team of threads is created, and each thread runs the enclosed block concurrently with the others, calculating its own partial area, `local_area`.

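```c
#pragma omp parallel
{
    int id = omp_get_thread_num();   // index of this thread: 0 .. n-1
    int n = omp_get_num_threads();   // number of threads in the team
    int i;
    double local_area = 0.0;         // partial sum, private to this thread

    // Cyclic split: thread id handles rectangles id, id + n, id + 2n, ...
    for (i = id; i < num_steps; i += n) {
        double x = (i + 0.5) * step;     // midpoint of rectangle i
        double y = 4.0 / (1.0 + x*x);    // height of the rectangle at x
        local_area += step * y;          // area = width * height
    }
    // ...
```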

- After calculating `local_area`, each thread enters a critical section, a block that only one thread may execute at a time, and adds its partial area to the shared `double area`. Once every thread has done so, `area` holds our final result.

```c
    #pragma omp critical
    {
        area += local_area;   // only one thread at a time executes this
    }
```

Important

Before running this program, make sure you fully understand the limits of your computer, such as its number of cores, and size the calculation accordingly.

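As a side note, here is a quick reference of the OpenMP directives, runtime functions, compiler flags, and environment variables used in this project. Also, feel free to download, edit, commit to, and leave feedback on the project.

```c
#pragma omp parallel   // start a team of threads that runs the next block
#pragma omp critical   // block that only one thread may execute at a time
#pragma omp barrier    // wait until every thread in the team reaches this point
#pragma omp master     // block run only by the master thread (deprecated in OpenMP 5.1 in favor of masked)
```

```c
#include <omp.h>

int omp_get_thread_num(void);    // index of the calling thread within its team
int omp_get_num_threads(void);   // number of threads in the current team
```

```sh
gcc -fopenmp   # C compiler
g++ -fopenmp   # C++ compiler
```

```sh
export OMP_NUM_THREADS=8   # number of threads used in parallel regions
export OMP_NESTED=TRUE     # allow nested parallel regions (deprecated since OpenMP 5.0)
```

License: MIT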