Zhendong Yang, Ailing Zeng, Chun Yuan, Yu Li

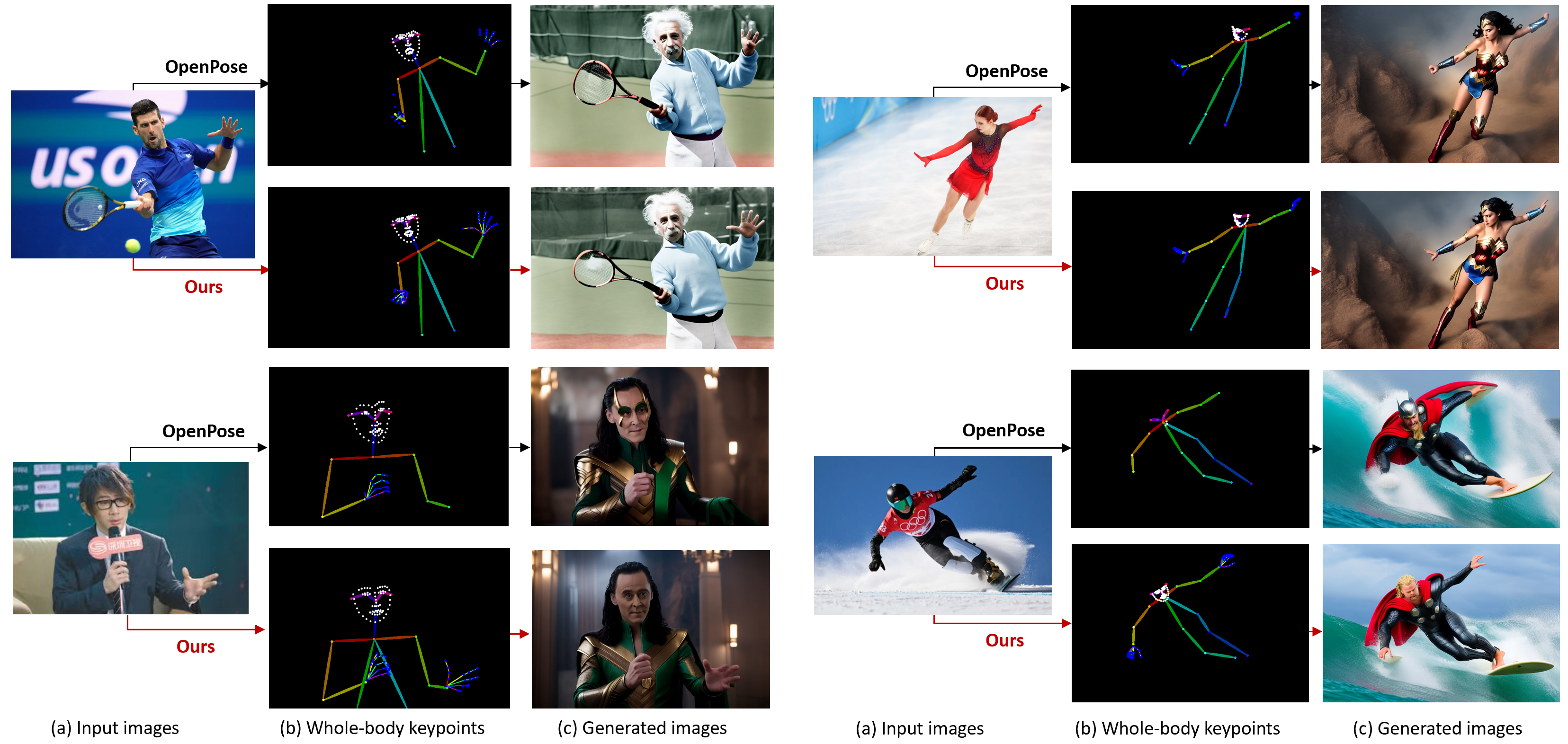

Panels: DWPose | DWPose + ControlNet (prompt: Ironman)

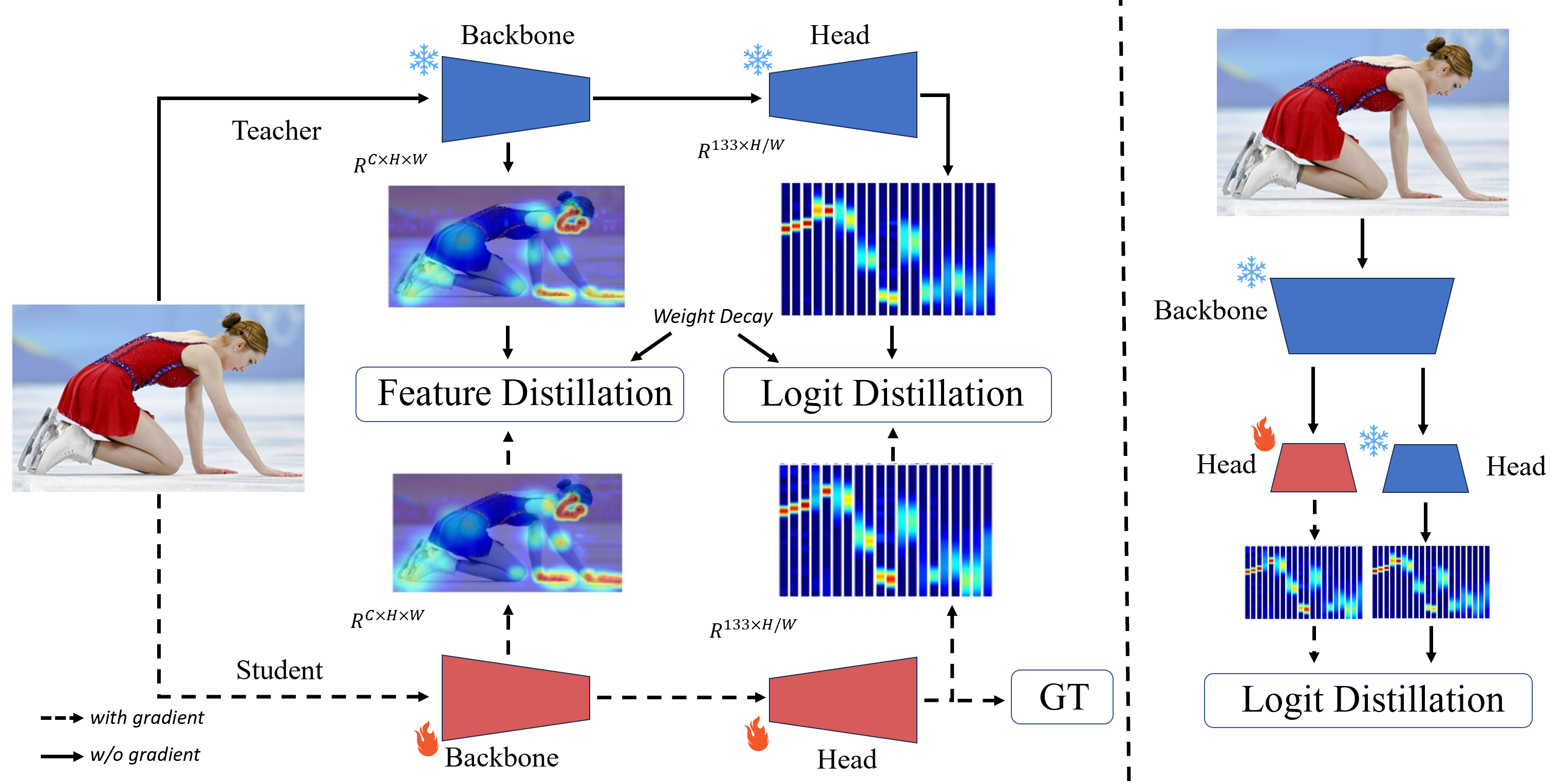

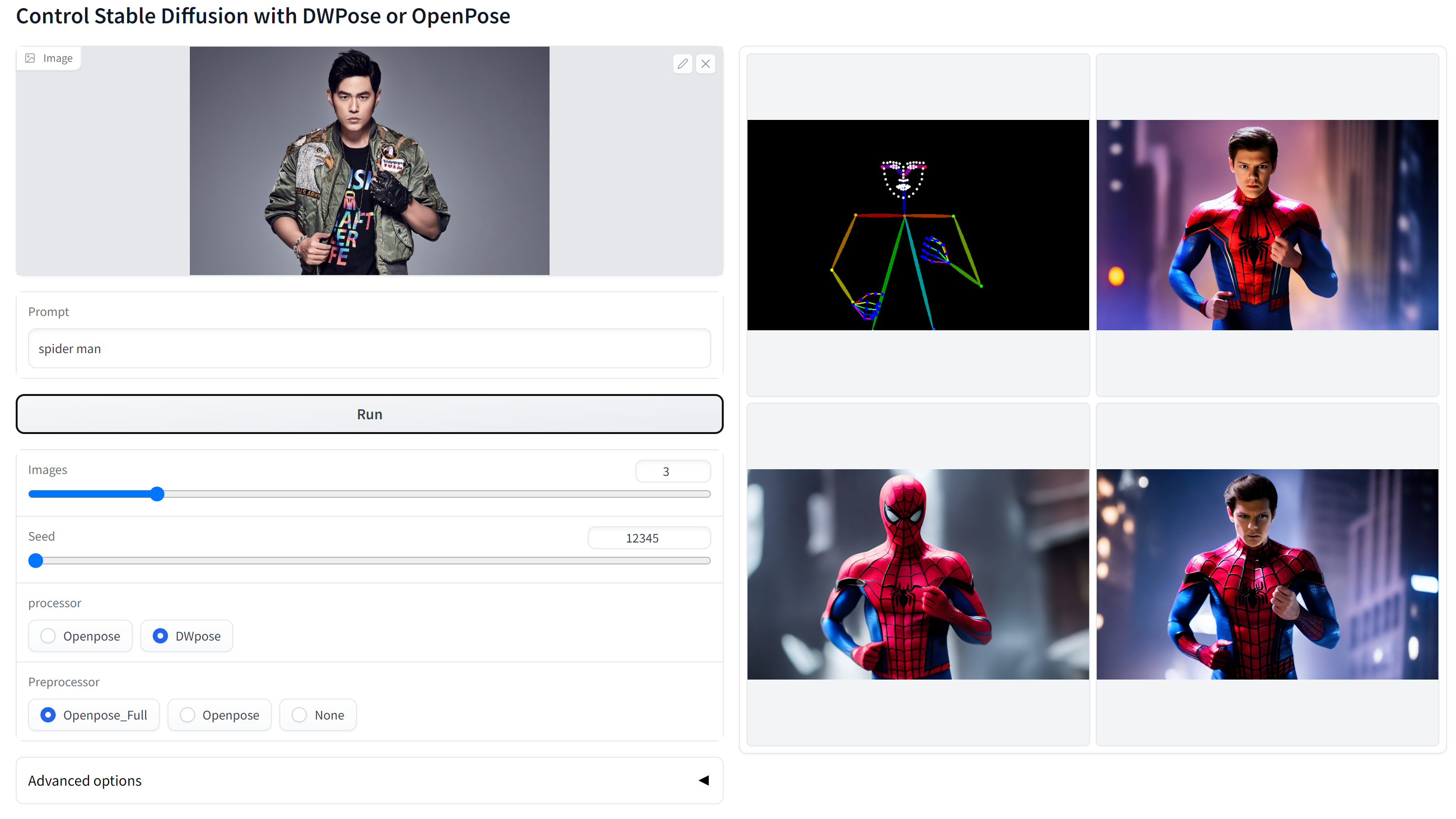

This repository is the official implementation of the paper Effective Whole-body Pose Estimation with Two-stages Distillation (ICCV 2023, CV4Metaverse Workshop). Our code is based on MMPose and ControlNet.

⚔️ We release a series of DWPose models of different sizes, from tiny to large, for human whole-body pose estimation. In addition, we replace OpenPose with DWPose for ControlNet, which yields better generated images.

- 2023/12/03: DWPose supports Consistent and Controllable Image-to-Video Synthesis for Character Animation.
- 2023/08/17: Our paper Effective Whole-body Pose Estimation with Two-stages Distillation has been accepted to ICCV 2023, CV4Metaverse Workshop. 🎉 🎉 🎉
- 2023/08/09: You can try DWPose with sd-webui-controlnet now! Just update sd-webui-controlnet to v1.1237 or later, then choose dw_openpose_full as the preprocessor.
- 2023/08/09: We support running the ONNX model with cv2, so you can avoid installing onnxruntime. See branch opencv_onnx.
- 2023/08/07: We have uploaded all DWPose models to Hugging Face. You can now download them from Baidu Drive, Google Drive, or Hugging Face.
- 2023/08/07: We release a new DWPose with ONNX, so you can avoid installing mmcv. See branch onnx.

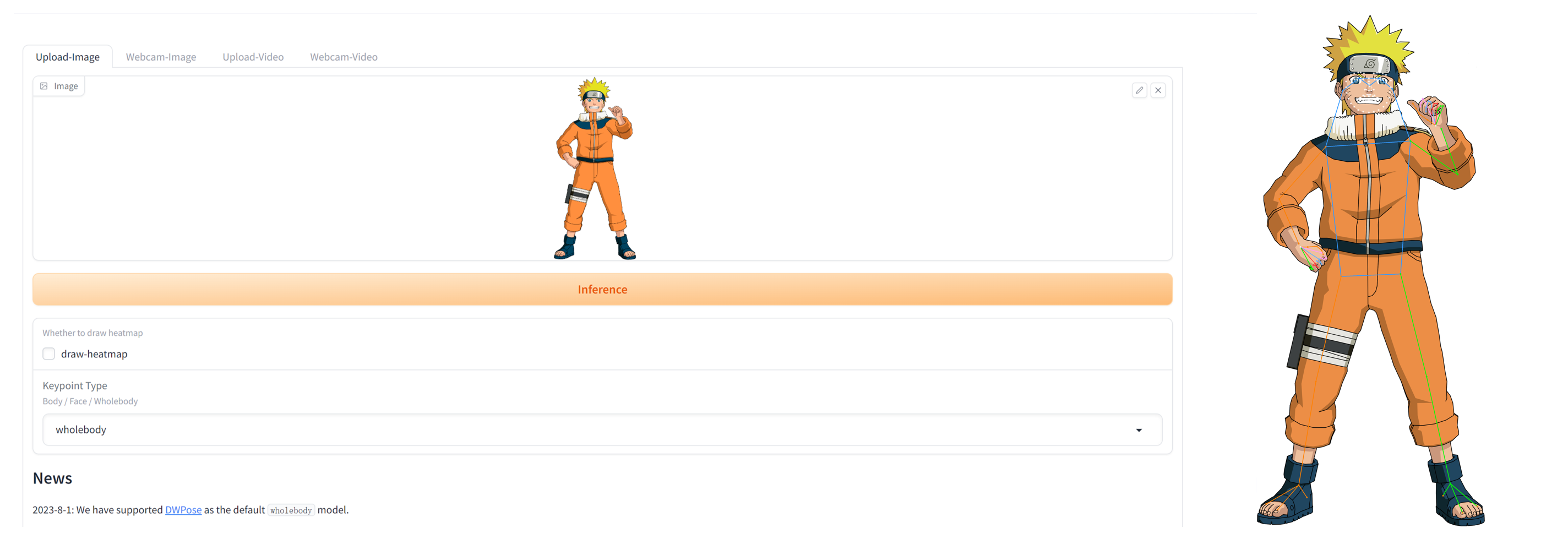

- 2023/08/01: Thanks to MMPose, you can try our DWPose with this demo by choosing wholebody!

See the installation instructions. This branch uses ONNX, so you can try DWPose for ControlNet without mmcv.

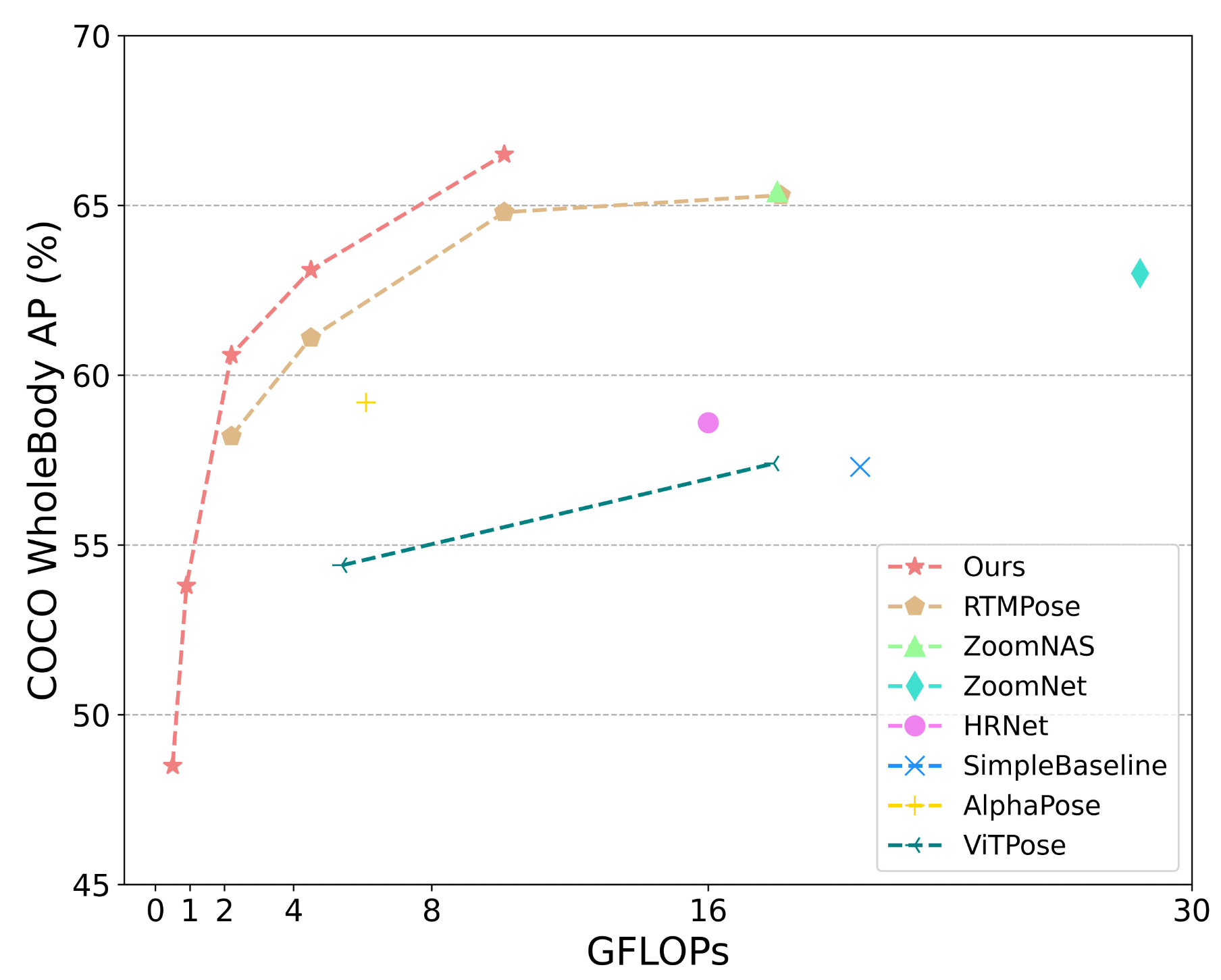

Results on COCO-WholeBody v1.0 val, using a human detector with an AP of 56.4 on the COCO val2017 dataset.

| Arch | Input Size | FLOPs (G) | Body AP | Foot AP | Face AP | Hand AP | Whole AP | ckpt (Baidu) | ckpt (Google) |
|---|---|---|---|---|---|---|---|---|---|
| DWPose-t | 256x192 | 0.5 | 0.585 | 0.465 | 0.735 | 0.357 | 0.485 | baidu drive | google drive |
| DWPose-s | 256x192 | 0.9 | 0.633 | 0.533 | 0.776 | 0.427 | 0.538 | baidu drive | google drive |
| DWPose-m | 256x192 | 2.2 | 0.685 | 0.636 | 0.828 | 0.527 | 0.606 | baidu drive | google drive |
| DWPose-l | 256x192 | 4.5 | 0.704 | 0.662 | 0.843 | 0.566 | 0.631 | baidu drive | google drive |
| DWPose-l | 384x288 | 10.1 | 0.722 | 0.704 | 0.887 | 0.621 | 0.665 | baidu drive | google drive |
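One way to read the results table is as a speed/accuracy trade-off. The sketch below encodes the table rows and picks the variant with the highest Whole AP whose FLOPs fit a given budget. The numbers are copied from the table; the `Variant` type, the `pick_model` helper, and the budget-based selection rule are illustrative assumptions, not part of this repository.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Variant:
    arch: str
    input_size: str
    gflops: float
    whole_ap: float


# Rows copied from the COCO-WholeBody v1.0 val results table above.
VARIANTS = [
    Variant("DWPose-t", "256x192", 0.5, 0.485),
    Variant("DWPose-s", "256x192", 0.9, 0.538),
    Variant("DWPose-m", "256x192", 2.2, 0.606),
    Variant("DWPose-l", "256x192", 4.5, 0.631),
    Variant("DWPose-l", "384x288", 10.1, 0.665),
]


def pick_model(max_gflops: float) -> Optional[Variant]:
    """Hypothetical helper: best Whole AP among variants within the FLOPs budget."""
    candidates = [v for v in VARIANTS if v.gflops <= max_gflops]
    if not candidates:
        return None  # no variant is cheap enough
    return max(candidates, key=lambda v: v.whole_ap)


if __name__ == "__main__":
    best = pick_model(5.0)
    print(best.arch, best.input_size, best.whole_ap)  # DWPose-l 256x192 0.631
```

With a budget of 5 GFLOPs the 384x288 model is excluded, so the 256x192 DWPose-l is the most accurate option that fits.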

First, download our pose model dw-ll_ucoco_384.onnx (baidu, google) and detection model yolox_l.onnx (baidu, google), and put both into ControlNet-v1-1-nightly/annotator/ckpts. Then you can use DWPose to generate the images you like.
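Before launching the demo, it can help to confirm that both ONNX files are where the annotator expects them. This is a minimal sketch that assumes it is run from the repository root; the file names and directory come from the instructions above, while the `missing_ckpts` helper itself is hypothetical.

```python
from pathlib import Path
from typing import List

# File names and target directory taken from the download instructions above.
REQUIRED_CKPTS = ("dw-ll_ucoco_384.onnx", "yolox_l.onnx")
DEFAULT_DIR = "ControlNet-v1-1-nightly/annotator/ckpts"


def missing_ckpts(ckpt_dir: str = DEFAULT_DIR) -> List[str]:
    """Return the required ONNX files that are not present in ckpt_dir."""
    root = Path(ckpt_dir)
    return [name for name in REQUIRED_CKPTS if not (root / name).is_file()]


if __name__ == "__main__":
    missing = missing_ckpts()
    if missing:
        print("Missing checkpoints:", ", ".join(missing))
    else:
        print("All DWPose checkpoints found.")
```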

cd ControlNet-v1-1-nightly

python gradio_dw_open_pose.py

cd ControlNet-v1-1-nightly

python dwpose_infer_example.py

Note: change the input image path and output path in the script to match your own files.

Prepare COCO in mmpose/data/coco and UBody in mmpose/data/UBody.

UBody needs to be converted from videos into images first. Don't skip this step.

cd mmpose

python video2image.py

If you want to evaluate the models on UBody:

# add category into UBody's annotation

cd mmpose

python add_cat.py
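To illustrate what adding a category to an annotation file involves, here is a minimal sketch assuming `add_cat.py` adds a COCO-style `categories` field (a single `person` category with id 1) to each UBody annotation JSON. The category fields follow the general COCO annotation format; the function names `add_person_category` and `process_file` are hypothetical and not taken from the script, so check the actual script before relying on this.

```python
import json
from pathlib import Path

# Assumed COCO-style category entry; verify against what the evaluation code expects.
PERSON_CATEGORY = {"id": 1, "name": "person", "supercategory": "person"}


def add_person_category(annotation: dict) -> dict:
    """Return a copy of a COCO-style annotation dict with a 'categories' field set."""
    updated = dict(annotation)  # shallow copy so the input is not mutated
    if not updated.get("categories"):
        updated["categories"] = [dict(PERSON_CATEGORY)]
    return updated


def process_file(path: str) -> None:
    """Hypothetical helper: rewrite one annotation JSON file in place."""
    p = Path(path)
    data = json.loads(p.read_text())
    p.write_text(json.dumps(add_person_category(data)))
```

Existing `categories` entries are left untouched, so running the helper twice on the same file is harmless.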

cd mmpose

bash tools/dist_train.sh configs/distiller/ubody/s1_dis/rtmpose_x_dis_l__coco-ubody-256x192.py 8

cd mmpose

bash tools/dist_train.sh configs/distiller/ubody/s2_dis/dwpose_l-ll__coco-ubody-256x192.py 8

cd mmpose

# if first stage distillation

python pth_transfer.py $dis_ckpt $new_pose_ckpt

# if second stage distillation

python pth_transfer.py $dis_ckpt $new_pose_ckpt --two_dis
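The transfer step turns a distillation checkpoint into a plain pose checkpoint that the test configs can load. As a hypothetical sketch, assume the distiller stores student weights under a `student.` key prefix alongside the teacher's weights; keeping only the student keys and stripping the prefix then yields a standalone model. The real `pth_transfer.py` operates on PyTorch checkpoints, and its key layout (including what `--two_dis` changes) may differ from this assumption.

```python
from typing import Any, Dict


def extract_student(state_dict: Dict[str, Any], prefix: str = "student.") -> Dict[str, Any]:
    """Keep keys starting with `prefix` and strip it (assumed key layout)."""
    return {
        key[len(prefix):]: value
        for key, value in state_dict.items()
        if key.startswith(prefix)  # teacher weights and other keys are dropped
    }


# Possible usage with PyTorch (assumes an mmengine-style "state_dict" entry):
# import torch
# ckpt = torch.load(dis_ckpt, map_location="cpu")
# torch.save({"state_dict": extract_student(ckpt["state_dict"])}, new_pose_ckpt)
```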

# test on UBody

bash tools/dist_test.sh configs/wholebody_2d_keypoint/rtmpose/ubody/rtmpose-l_8xb64-270e_ubody-wholebody-256x192.py $pose_ckpt 8

# test on COCO

bash tools/dist_test.sh configs/wholebody_2d_keypoint/rtmpose/ubody/rtmpose-l_8xb64-270e_coco-ubody-wholebody-256x192.py $pose_ckpt 8

@inproceedings{yang2023effective,

title={Effective whole-body pose estimation with two-stages distillation},

author={Yang, Zhendong and Zeng, Ailing and Yuan, Chun and Li, Yu},

booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision},

pages={4210--4220},

year={2023}

}

Our code is based on MMPose and ControlNet.