This project is the first assignment for the SC4003-CE4046-CZ4046-INTELLIGENT AGENTS course. It involves implementing value iteration and policy iteration algorithms to solve a maze environment. The goal is to find the optimal policy and utilities for all non-wall states in the maze.
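
As a rough illustration of the value iteration half of the assignment, the sketch below applies the Bellman update to a tiny 3x3 maze. It is not the repository's implementation: the grid layout, the reward values (+1, -1, -0.04), the discount factor of 0.9, the 0.8/0.1/0.1 motion model, and every function name are assumptions chosen for the example.

```python
# Hypothetical 3x3 maze: 'W' = wall, '+' = positive reward,
# '-' = negative reward, ' ' = empty cell. Not the assignment's maze.
GRID = ["+ W",
        " - ",
        "   "]
REWARD = {"+": 1.0, "-": -1.0, " ": -0.04}  # assumed reward values
GAMMA = 0.9                                  # assumed discount factor
ACTIONS = {"U": (-1, 0), "D": (1, 0), "L": (0, -1), "R": (0, 1)}
# Assumed motion model: 0.8 intended direction, 0.1 for each perpendicular one.
SIDE = {"U": "LR", "D": "LR", "L": "UD", "R": "UD"}


def states():
    """All non-wall cells as (row, col) pairs."""
    return [(r, c) for r, row in enumerate(GRID)
            for c, ch in enumerate(row) if ch != "W"]


def move(s, a):
    """Deterministic result of moving from s in direction a."""
    r, c = s[0] + ACTIONS[a][0], s[1] + ACTIONS[a][1]
    if 0 <= r < len(GRID) and 0 <= c < len(GRID[0]) and GRID[r][c] != "W":
        return (r, c)
    return s  # blocked by a wall or the border: stay in place


def transitions(s, a):
    """List of (probability, next_state) pairs for taking action a in s."""
    return [(0.8, move(s, a))] + [(0.1, move(s, b)) for b in SIDE[a]]


def q_value(U, s, a):
    """Expected utility of the successor state when taking a in s."""
    return sum(p * U[s2] for p, s2 in transitions(s, a))


def value_iteration(eps=1e-6):
    """Repeat the Bellman update until the largest change drops below eps."""
    U = {s: 0.0 for s in states()}
    while True:
        new_U = {s: REWARD[GRID[s[0]][s[1]]]
                 + GAMMA * max(q_value(U, s, a) for a in ACTIONS)
                 for s in U}
        delta = max(abs(new_U[s] - U[s]) for s in U)
        U = new_U
        if delta < eps:
            return U


def greedy_policy(U):
    """Pick, for every state, the action with the highest expected utility."""
    return {s: max(ACTIONS, key=lambda a: q_value(U, s, a)) for s in U}
```

Calling `greedy_policy(value_iteration())` returns a dictionary that maps each non-wall cell to one of `U`, `D`, `L`, or `R`.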

The following section presents the results of the maze solver algorithm, showcasing the original maze configuration and the optimized policy obtained after running the algorithm.

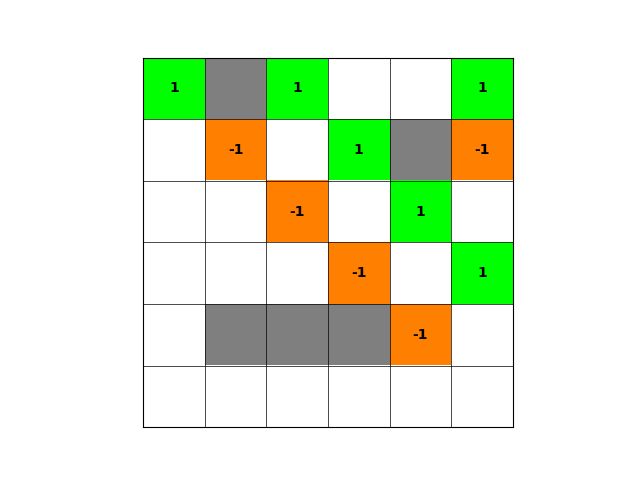

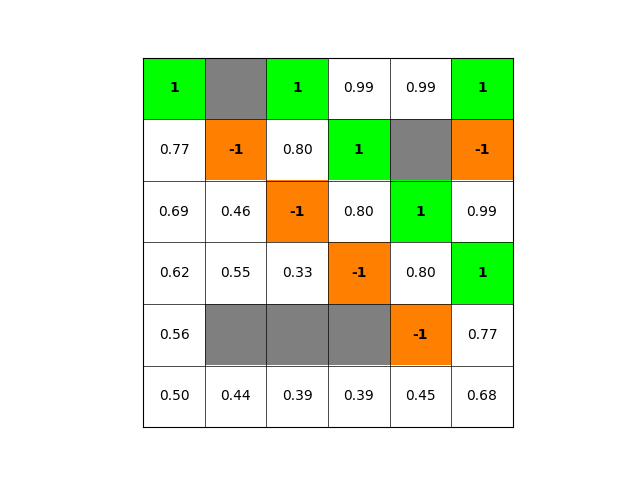

The original maze is set up with walls, positive rewards, and negative rewards as shown below:

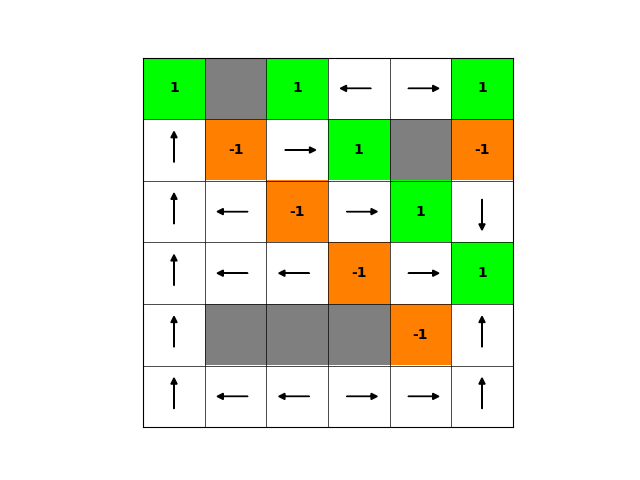

After running the maze solver algorithm, the optimized policy indicating the best actions at each state is visualized below:

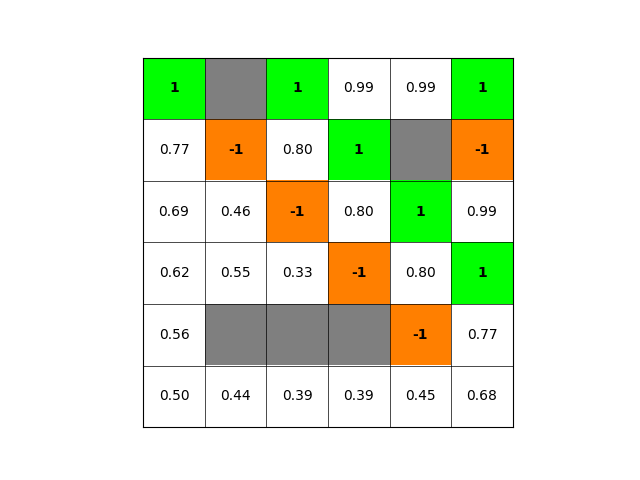

Value iteration policy and utility:

| Policy | Utility |
|---|---|
| | |

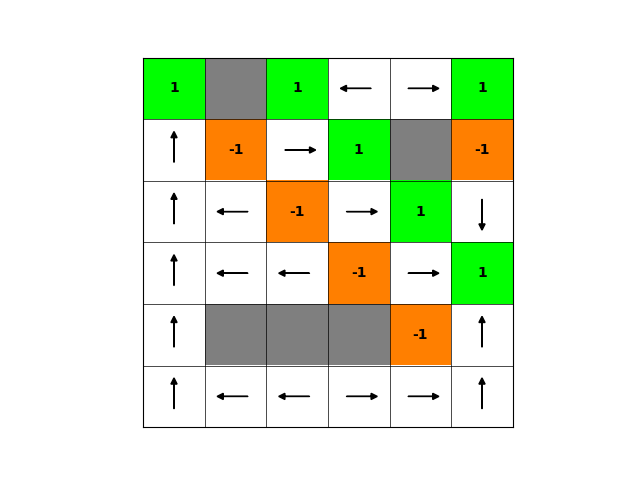

Policy iteration policy and utility:

| Policy | Utility |
|---|---|
| | |

The arrows represent the direction of the optimal action to take from each non-wall grid cell. Green cells indicate positive rewards, orange cells indicate negative rewards, and gray cells represent walls. The optimized policy provides a guide for an agent to maximize rewards and reach the goal state efficiently.
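
To make the arrow convention concrete, here is a minimal text-only sketch of how a policy could be drawn on a grid. The 3x3 layout, the hand-written `policy` dictionary, and the `render_policy` helper are hypothetical and are not taken from the repository, which produces the colored images shown above.

```python
# Map each action label to an ASCII arrow.
ARROWS = {"U": "^", "D": "v", "L": "<", "R": ">"}


def render_policy(grid, policy):
    """Return the maze as text: an arrow per open cell, '#' for walls."""
    lines = []
    for r, row in enumerate(grid):
        cells = []
        for c, ch in enumerate(row):
            cells.append("#" if ch == "W" else ARROWS[policy[(r, c)]])
        lines.append(" ".join(cells))
    return "\n".join(lines)


# Hypothetical maze ('W' marks a wall) and a hand-written example policy.
grid = ["+ W",
        " - ",
        "   "]
policy = {
    (0, 0): "U", (0, 1): "L",
    (1, 0): "U", (1, 1): "U", (1, 2): "L",
    (2, 0): "U", (2, 1): "L", (2, 2): "U",
}
print(render_policy(grid, policy))
```

Each printed row corresponds to one row of the maze, so the output can be read the same way as the policy images.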

The results show that value iteration and policy iteration converge to the same solution.
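
The agreement between the two algorithms can be checked on a much smaller problem. The sketch below runs both on a hypothetical four-state corridor and compares the outcomes. The corridor, its rewards, the 0.9/0.1 motion model, and the discount factor are assumptions for illustration, and policy evaluation is done with a fixed number of sweeps rather than by solving the linear system exactly.

```python
# Hypothetical corridor: state 3 is rewarding, the others carry a step cost.
STATES = [0, 1, 2, 3]
ACTIONS = ["L", "R"]
REWARD = {0: -0.04, 1: -0.04, 2: -0.04, 3: 1.0}  # assumed rewards
GAMMA = 0.9                                       # assumed discount factor


def step(s, a):
    """Deterministic move along the corridor, clipped at both ends."""
    return max(s - 1, 0) if a == "L" else min(s + 1, 3)


def transitions(s, a):
    """Assumed model: 0.9 intended direction, 0.1 the opposite one."""
    other = "R" if a == "L" else "L"
    return [(0.9, step(s, a)), (0.1, step(s, other))]


def q_value(U, s, a):
    return sum(p * U[s2] for p, s2 in transitions(s, a))


def greedy(U):
    return {s: max(ACTIONS, key=lambda a: q_value(U, s, a)) for s in STATES}


def value_iteration(eps=1e-9):
    U = {s: 0.0 for s in STATES}
    while True:
        new_U = {s: REWARD[s] + GAMMA * max(q_value(U, s, a) for a in ACTIONS)
                 for s in STATES}
        if max(abs(new_U[s] - U[s]) for s in STATES) < eps:
            return new_U
        U = new_U


def policy_iteration(eval_sweeps=200):
    policy = {s: "L" for s in STATES}  # deliberately poor starting policy
    U = {s: 0.0 for s in STATES}
    while True:
        # Policy evaluation: iterate the fixed-policy Bellman equation.
        for _ in range(eval_sweeps):
            U = {s: REWARD[s] + GAMMA * q_value(U, s, policy[s])
                 for s in STATES}
        # Policy improvement: switch only when an action is strictly better.
        changed = False
        for s in STATES:
            best = max(ACTIONS, key=lambda a: q_value(U, s, a))
            if q_value(U, s, best) > q_value(U, s, policy[s]) + 1e-12:
                policy[s] = best
                changed = True
        if not changed:
            return policy, U


vi_utilities = value_iteration()
pi_policy, pi_utilities = policy_iteration()
print(greedy(vi_utilities) == pi_policy)
```

On this toy problem both methods end up with the same policy and nearly identical utilities, which mirrors the behaviour reported for the maze.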

For more details, please see the report.

- Clone the repository:

      git clone https://github.com/H-tr/Agent-Decision-Making.git

- Navigate to the project directory:

      cd Agent-Decision-Making

- Create the Python environment:

      conda create -n maze_solver python=3.10 -y
      conda activate maze_solver
      pip install -r requirements.txt

- Run the main logic.

  To monitor the iteration progress:

      tensorboard --logdir=runs

  To run the solver:

      python main.py

  For part 2:

      python main.py --assignment part_2

- Test:

      python -m unittest tests.test_maze_solver

This project is licensed under the MIT License - see the LICENSE file for details.

- Course instructors and teaching assistants for providing guidance and support.