We introduce Layer Variation Analysis (LVA), a novel finetuning method for transfer learning. Three domain adaptation experiments are demonstrated:

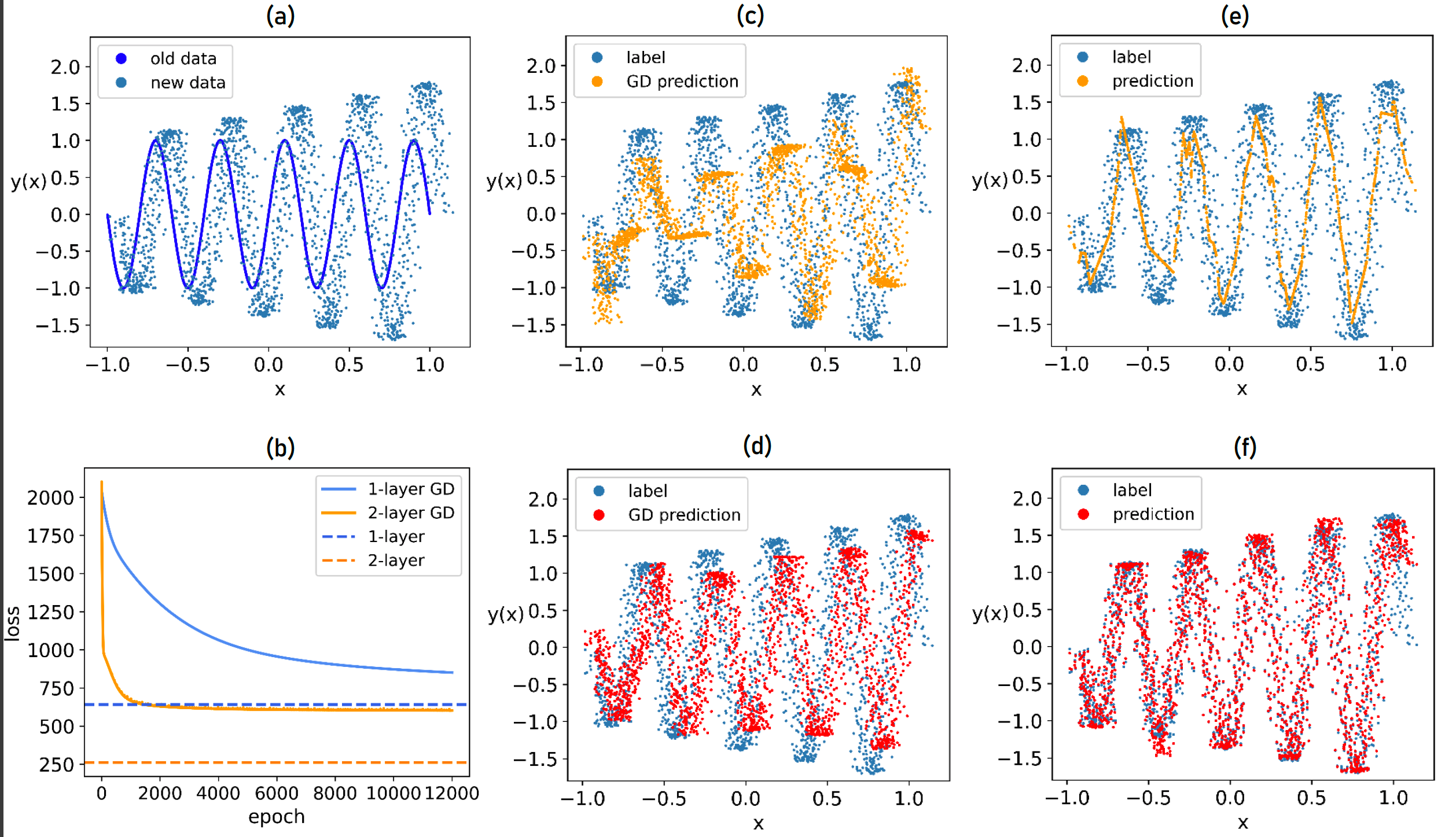

- [Exp. 1] Time Series Regression

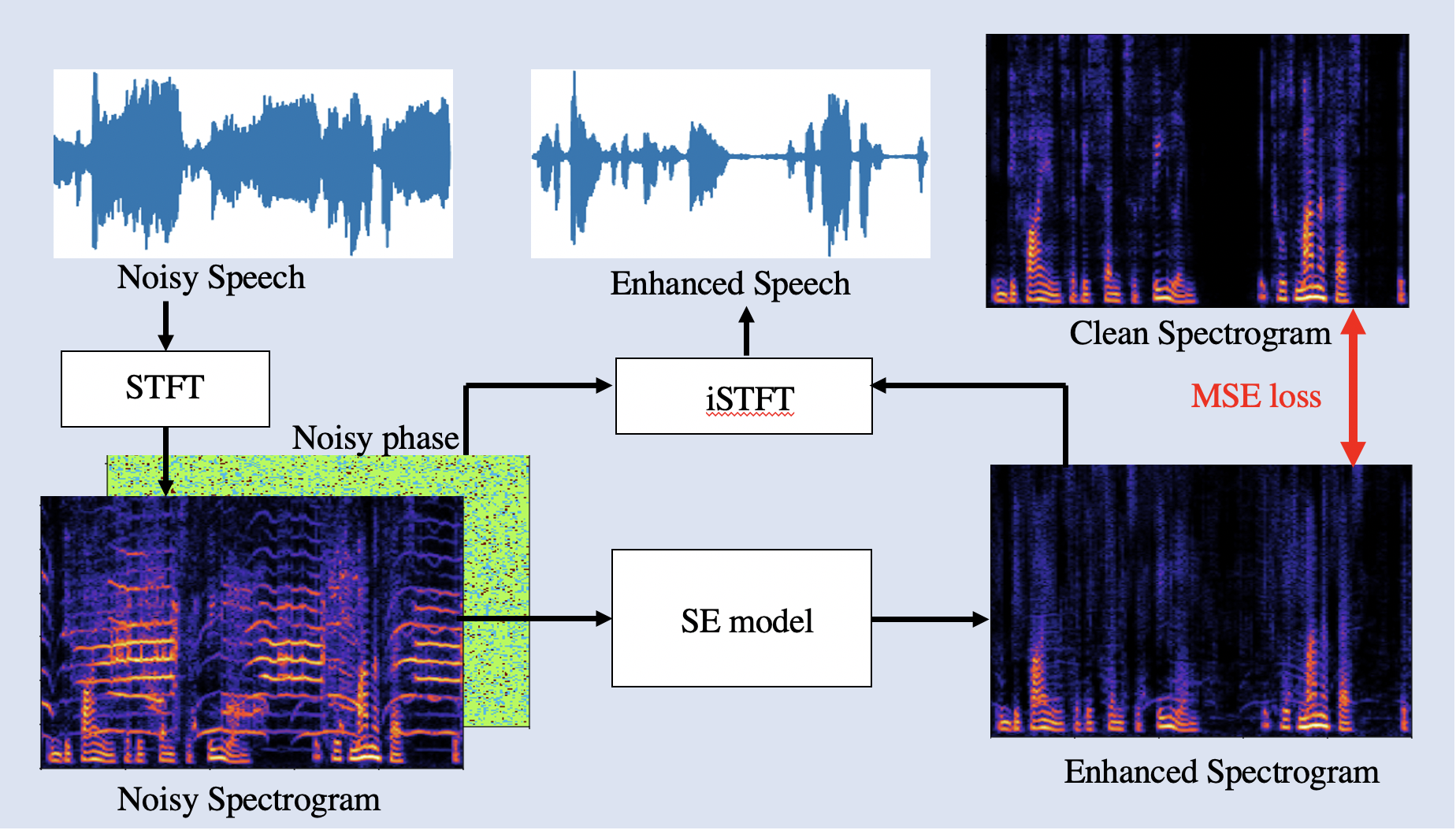

- [Exp. 2] Speech Enhancement (denoise)

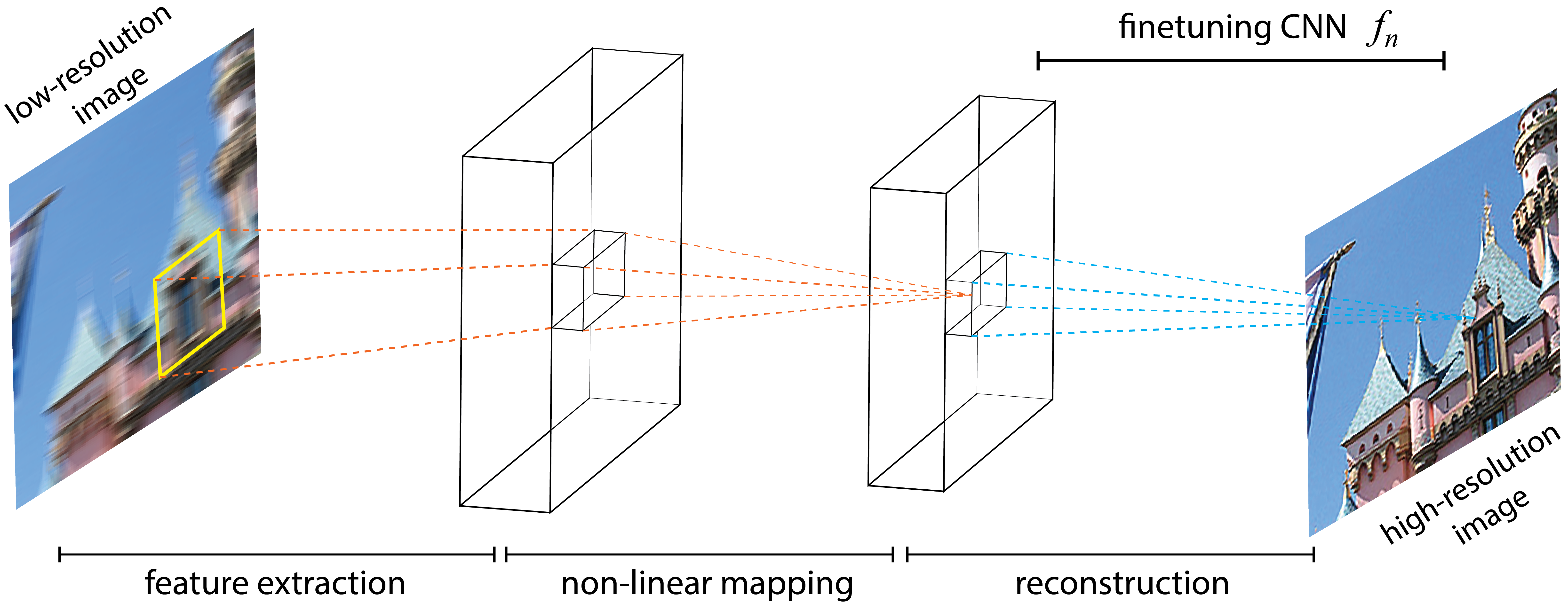

- [Exp. 3] Super Resolution (image deblur)

- [Exp. 1] Requires no dataset

- [Exp. 2] Download DNS-Challenge (or here) and use `/Exp2/data_preprocessing/` for preprocessing.

- [Exp. 3] Download CUFED (or here) and use `/Exp3/preprocessing_SR_images/` for preprocessing.

- [Exp. 1] `pretraining.py`

- [Exp. 2] `SE_pretraining.py`

- [Exp. 3] `SRCNN_pretraining.py`

- [Exp. 1] `GD_finetune_1layer.py` & `GD_vs_LVA_1layer.py`

- [Exp. 2] `SE_finetuning_and_comparison.py`

- [Exp. 3] `SRCNN_GD_finetuning.py` & `SRCNN_LVA_comparisons.py`

- Python 3.8

- PyTorch 2.0.1

- librosa 0.10.0

- pypesq 1.2.4

- pystoi 0.3.3

- Tensorboard 2.13.0

- scikit-learn 1.2.2

- tqdm 4.65.0

- scipy 1.10.1

- NVIDIA GPU with CUDA 11.0+
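
Before running the scripts, it may help to confirm that the local environment matches the versions listed above. The following is a minimal sketch and not part of this repository: the helper names `installed_version` and `check_requirements` are hypothetical, and the PyPI distribution names (for example `torch` for PyTorch) are assumptions. It uses only the Python standard library and does not check the GPU or CUDA version.

```python
from importlib import metadata

# Pinned versions copied from the list above; the PyPI distribution names
# (e.g. "torch" for PyTorch) are assumptions.
REQUIRED = {
    "torch": "2.0.1",
    "librosa": "0.10.0",
    "pypesq": "1.2.4",
    "pystoi": "0.3.3",
    "tensorboard": "2.13.0",
    "scikit-learn": "1.2.2",
    "tqdm": "4.65.0",
    "scipy": "1.10.1",
}


def installed_version(name):
    """Return the installed version string, or None if the package is absent."""
    try:
        return metadata.version(name)
    except metadata.PackageNotFoundError:
        return None


def check_requirements(required=REQUIRED, lookup=installed_version):
    """Map each package name to 'ok', 'missing', or 'mismatch (found X)'."""
    report = {}
    for name, wanted in required.items():
        found = lookup(name)
        if found is None:
            report[name] = "missing"
        elif found.split("+")[0] == wanted:
            # Ignore local build tags such as "2.0.1+cu118".
            report[name] = "ok"
        else:
            report[name] = f"mismatch (found {found})"
    return report


if __name__ == "__main__":
    for name, status in check_requirements().items():
        print(f"{name:<14}{status}")
```

A mismatch does not necessarily mean the experiments will fail; the listed versions are simply the ones stated here.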