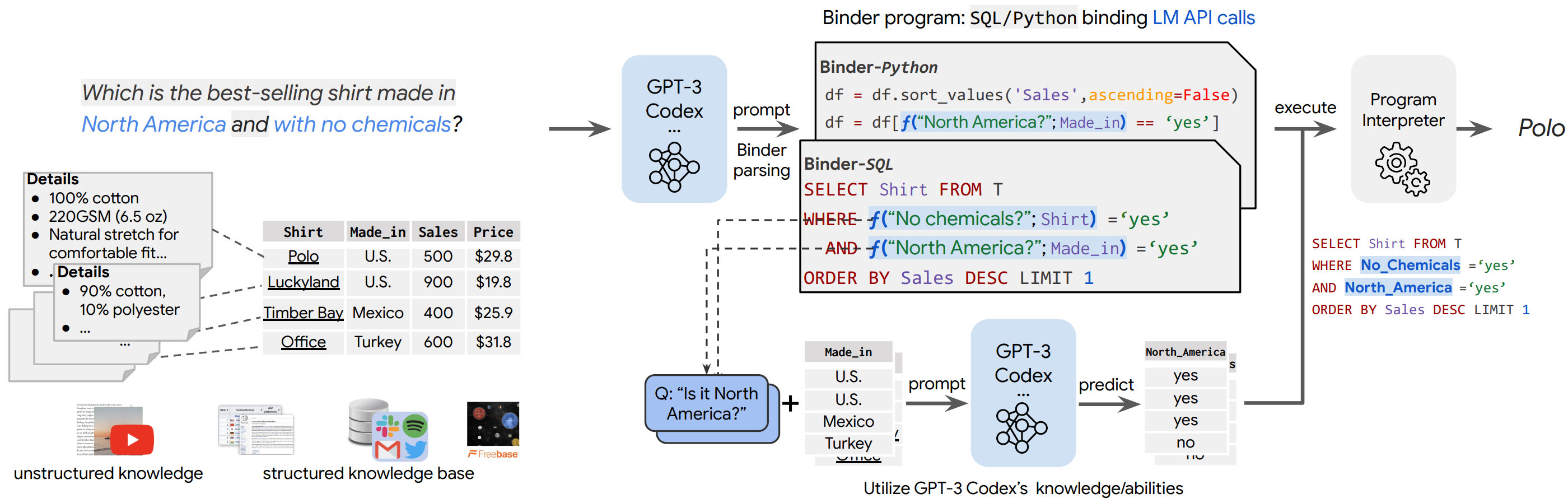

Binder🔗: Binding Language Models in Symbolic Languages

Code for the paper Binding Language Models in Symbolic Languages. Please refer to our project page for more demonstrations and up-to-date related resources. Check out our demo page for an instant hands-on experience of Binder, which achieves state-of-the-art or comparable performance with only a small number (~10) of program annotations.

Updates

- 2023-08-25: 🔥 Updated to support OpenAI chat models such as gpt-3.5-xxx and gpt-4-xxx; the code will be refactored further later to support more models!

- 2023-03-23: Since OpenAI no longer supports the Codex series models, we will soon test and switch the engine from "code-davinci-002" to "gpt-3.5-turbo".

- 2023-01-22: Accepted by ICLR 2023 (Spotlight)

- 2022-12-04: Due to OpenAI's new request-limit policy, n-sampling can no longer be done in a single request as before. We will soon add support for making multiple calls to achieve the same effect!

- 2022-10-06: We released our code, Hugging Face Spaces demo, and project page. Check them out!
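The 2022-12-04 note describes recovering n samples by calling the API several times instead of once. A minimal sketch of that idea follows; it assumes a hypothetical `generate(prompt, k)` callable that wraps one API request and returns up to `k` completions, and a hypothetical per-request cap `max_n_per_call`. It is not the repo's actual implementation.

```python
from typing import Callable, List


def sample_n(
    generate: Callable[[str, int], List[str]],  # hypothetical wrapper around one API request
    prompt: str,
    n: int,
    max_n_per_call: int = 1,  # assumed per-request sampling limit
) -> List[str]:
    """Collect n completions by issuing repeated requests of at most max_n_per_call samples."""
    if n < 0 or max_n_per_call < 1:
        raise ValueError("n must be >= 0 and max_n_per_call must be >= 1")
    completions: List[str] = []
    while len(completions) < n:
        # Ask only for as many samples as are still missing, capped per request.
        batch = min(max_n_per_call, n - len(completions))
        results = generate(prompt, batch)
        if not results:
            # Guard against looping forever if the backend returns nothing.
            raise RuntimeError("generate() returned no completions")
        completions.extend(results[:batch])
    return completions
```

Splitting the budget this way keeps the total number of samples equal to `n` regardless of the per-request cap, at the cost of more round trips.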

Dependencies

To set up the environment, run the following in the shell:

```shell
conda env create -f py3.7binder.yaml
pip install records==0.5.3
```

This will create the binder environment we used.

Usage

Environment setup

Activate the environment by running:

```shell
conda activate binder
```

Add key

Apply for an API key (of the form sk-xxxx) from the OpenAI API and save it in a key.txt file. Make sure you have access to the model you need (in this repo's implementation, code-davinci-002).
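A minimal sketch of reading keys from key.txt, assuming one key per line with surrounding whitespace and blank lines ignored. The file format beyond "save the key in key.txt" and the helper names `parse_keys` and `load_api_keys` are hypothetical, not taken from this repo.

```python
from pathlib import Path
from typing import List


def parse_keys(text: str) -> List[str]:
    """Return non-empty, whitespace-stripped lines (assumed: one API key per line)."""
    return [line.strip() for line in text.splitlines() if line.strip()]


def load_api_keys(path: str = "key.txt") -> List[str]:
    """Read API keys from a text file; raise if the file contains none."""
    keys = parse_keys(Path(path).read_text(encoding="utf-8"))
    if not keys:
        raise ValueError(f"No API keys found in {path}")
    return keys
```

Separating parsing from file access keeps the key-format assumption in one small function that is easy to adapt if the repo expects a different layout.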

Run

See run.py for the available commands.

Citation

If you find our work helpful, please cite it as:

```bibtex
@article{Binder,
  title={Binding Language Models in Symbolic Languages},
  author={Zhoujun Cheng and Tianbao Xie and Peng Shi and Chengzu Li and Rahul Nadkarni and Yushi Hu and Caiming Xiong and Dragomir Radev and Mari Ostendorf and Luke Zettlemoyer and Noah A. Smith and Tao Yu},
  journal={ICLR},
  year={2023},
  volume={abs/2210.02875}
}
```