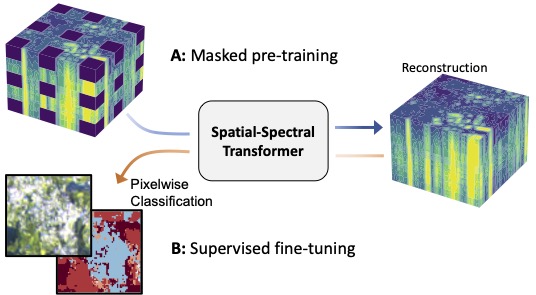

This project tailors vision transformers to the characteristics of hyperspectral aerial and satellite imagery using (i) blockwise patch embeddings, (ii) spatial-spectral self-attention, (iii) spectral positional embeddings, and (iv) masked self-supervised pre-training.

Results were presented at the CVPR EarthVision Workshop 2023, Paper Link.
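As a rough illustration of the masking step behind masked self-supervised pre-training, the sketch below picks a random subset of token positions to hide. It is not taken from this repository: the function name, the 50% mask ratio, and the token count are assumptions chosen for the example. The actual masking strategy is defined by the pre-training code and its configuration.

```python
import random


def random_token_mask(num_tokens, mask_ratio, seed=None):
    """Return a list of booleans, True where a token would be masked.

    Illustrative only: the names and the ratio are assumptions,
    not values read from this repository.
    """
    if not 0.0 <= mask_ratio <= 1.0:
        raise ValueError("mask_ratio must lie in [0, 1]")
    rng = random.Random(seed)
    num_masked = int(round(num_tokens * mask_ratio))
    # Sample distinct token positions to hide from the encoder.
    masked_positions = set(rng.sample(range(num_tokens), num_masked))
    return [i in masked_positions for i in range(num_tokens)]


# Hypothetical example: 16 tokens, half of them masked.
mask = random_token_mask(num_tokens=16, mask_ratio=0.5, seed=0)
```

During pre-training, a model would then be asked to reconstruct the content at the masked positions from the visible tokens.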

Masked pre-training is started by running the pretrain.py script.

Before starting the training, make sure to adjust the paths to your local copy of the dataset in configs/config.yaml. Hyperparameters can be adjusted in configs/pretrain_config.yaml.
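Since training depends on correct dataset paths, a small pre-flight check can catch a wrong path before a long run starts. The following is a minimal sketch, not part of the repository: the helper name and the dictionary keys are hypothetical, and the real key names are whatever configs/config.yaml defines.

```python
import tempfile
from pathlib import Path


def missing_paths(paths):
    """Return the names of all entries whose path does not exist on disk."""
    return [name for name, location in paths.items()
            if not Path(location).exists()]


# Demonstration with a temporary directory; the keys are hypothetical
# stand-ins for the entries in configs/config.yaml.
with tempfile.TemporaryDirectory() as tmp:
    example_paths = {
        "existing_dir": tmp,
        "absent_dir": str(Path(tmp) / "not_there"),
    }
    result = missing_paths(example_paths)

print(result)
```

In practice, the dictionary would be filled with the values loaded from the configuration file, and training would only start if the returned list is empty.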

The finetune.py script can be used to fine-tune a pre-trained model or to train a model from scratch for classification of EnMAP or Houston2018 data. The desired dataset must be provided as an argument, e.g., finetune.py enmap. Prior to training, the dataset paths must be specified in configs/config.yaml. Hyperparameters can be adjusted in configs/finetune_config_{dataset}.yaml. An alternative fine-tuning script for use with the wandb sweep functionality is available at src/finetune_sweep.py.
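The relationship between the dataset argument and the per-dataset hyperparameter file can be sketched as follows. This is an assumption-laden illustration, not the code in finetune.py: only the identifier enmap appears in the text above, so the identifier houston2018 and both helper functions are hypothetical.

```python
import argparse
from pathlib import Path

# Hypothetical dataset identifiers; only "enmap" is confirmed by the README.
KNOWN_DATASETS = ("enmap", "houston2018")


def build_parser():
    """Build a parser that takes the dataset name as a positional argument."""
    parser = argparse.ArgumentParser(description="Illustrative fine-tuning CLI")
    parser.add_argument("dataset", choices=KNOWN_DATASETS)
    return parser


def config_path_for(dataset, config_dir="configs"):
    """Map a dataset name to configs/finetune_config_{dataset}.yaml."""
    return Path(config_dir) / f"finetune_config_{dataset}.yaml"


# Equivalent to passing "enmap" on the command line.
args = build_parser().parse_args(["enmap"])
cfg_path = config_path_for(args.dataset)
```

Restricting the argument to a fixed set of choices means a misspelled dataset name fails immediately instead of leading to a missing configuration file later.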

- The Houston2018 dataset is publicly available from the Hyperspectral Image Analysis Lab at the University of Houston and IEEE GRSS IADF.

- Code to re-create the unlabeled EnMAP and labeled EnMAP-DFC datasets is made available in the enmap_data directory. Please follow the instructions there.

We provide pre-trained model checkpoints for the spatial-spectral transformer on the Houston2018 and EnMAP datasets.

This repository was developed using Python 3.8.13 with PyTorch 1.12. Please have a look at the requirements.txt file for more details.

It incorporates code from the following source for the 3D-CNN model of Li et al. (Remote Sensing, 2017):

The vision transformer and SimMIM implementations are adapted from:

If you would like to cite our work, please use the following reference:

- Scheibenreif, L., Mommert, M., & Borth, D. (2023). Masked Vision Transformers for Hyperspectral Image Classification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), 2023.

    @inproceedings{scheibenreif2023masked,
      title={Masked vision transformers for hyperspectral image classification},
      author={Scheibenreif, Linus and Mommert, Michael and Borth, Damian},
      booktitle={Proceedings of the IEEE/CVF conference on computer vision and pattern recognition},
      pages={2166--2176},
      year={2023}
    }