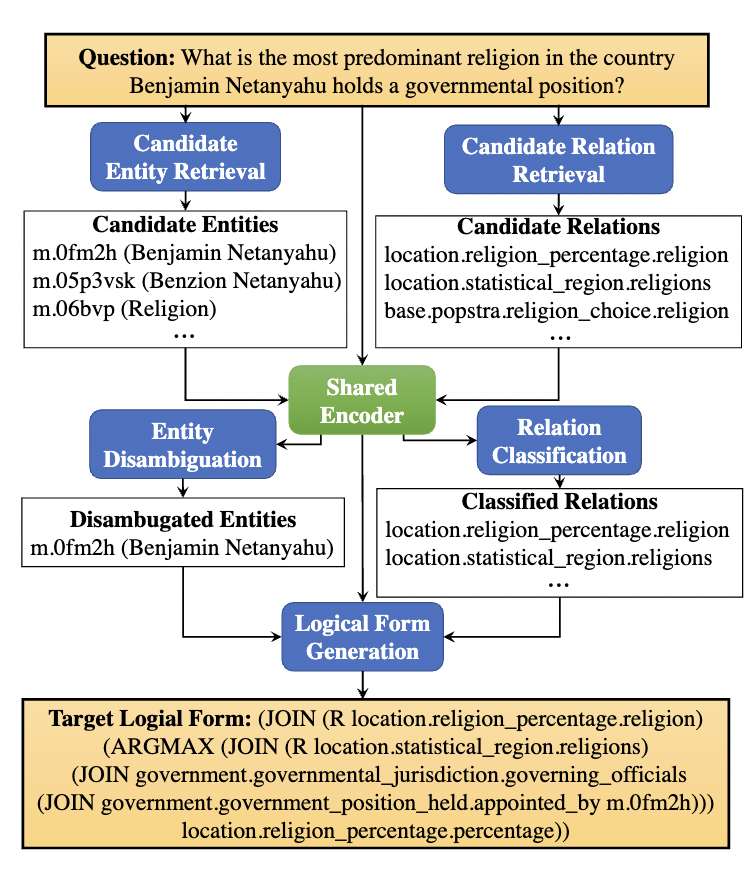

Question answering over knowledge bases (KBQA) for complex questions is a challenging task in natural language processing. Recently, generation-based methods that translate natural language questions into executable logical forms have achieved promising performance. However, most existing methods struggle to handle questions with unseen KB items and novel combinations of relations. Some methods leverage auxiliary information to augment logical form generation, but the noise it introduces can also lead to incorrect results. To address these issues, we propose GMT-KBQA, a Generation-based KBQA method via Multi-Task learning. GMT-KBQA first obtains candidate entities and relations through dense retrieval, and then introduces a multi-task model that jointly learns entity disambiguation, relation classification, and logical form generation. Experimental results show that GMT-KBQA achieves state-of-the-art results on both the ComplexWebQuestions and WebQuestionsSP datasets. Furthermore, a detailed evaluation demonstrates that GMT-KBQA benefits from the auxiliary tasks and has a strong generalization capability.

├── LICENSE
├── README.md                         <- The top-level README for developers using this project.
├── .gitignore
├── ablation_exps.py
├── config.py                         <- configuration file
├── data_process.py                   <- code for constructing generation task data
├── detect_and_link_entity.py
├── error_analysis.py
├── eval_topk_prediction_final.py     <- code for executing generated logical forms and evaluation
├── generation_command.txt            <- command for training, prediction and evaluation of our model
├── parse_sparql_cwq.py               <- parse sparql to s-expression
├── parse_sparql_webqsp.py            <- parse sparql to s-expression
├── run_entity_disamb.py
├── run_multitask_generator_final.py  <- code for training and prediction of our model
├── run_relation_data_process.py      <- data preprocess for relation linking
│
├── components                        <- utility functions
│
├── data
│   ├── common_data                   <- Freebase meta data
│   │   └── facc1                     <- facc1 data for entity linking
│   ├── CWQ
│   │   ├── entity_retrieval
│   │   ├── generation
│   │   ├── origin
│   │   ├── relation_retrieval
│   │   └── sexpr
│   └── WebQSP
│       ├── entity_retrieval
│       ├── generation
│       ├── origin
│       ├── relation_retrieval
│       └── sexpr
├── entity_retrieval                  <- code for entity detection, linking and disambiguation
├── executor                          <- utility functions for executing SPARQLs
├── exps                              <- saved model checkpoints and training/prediction/evaluation results
├── generation                        <- code for model and dataset evaluation scripts
├── inputDataset                      <- code for dataset generation
├── lib                               <- virtuoso library
├── ontology                          <- Freebase ontology information
├── relation_retrieval                <- code for relation retrieval
│   ├── bi-encoder
│   └── cross-encoder
└── scripts                           <- scripts for entity/relation retrieval and LF generation

Project based on the cookiecutter data science project template. #cookiecutterdatascience

This is the original implementation of the paper "Logical Form Generation via Multi-task Learning for Complex Question Answering over Knowledge Bases" [Paper PDF]

@inproceedings{hu-etal-2022-logical,
    title = "Logical Form Generation via Multi-task Learning for Complex Question Answering over Knowledge Bases",
    author = "Hu, Xixin and
      Wu, Xuan and
      Shu, Yiheng and
      Qu, Yuzhong",
    booktitle = "Proceedings of the 29th International Conference on Computational Linguistics",
    month = oct,
    year = "2022",
    address = "Gyeongju, Republic of Korea",
    publisher = "International Committee on Computational Linguistics",
    url = "https://aclanthology.org/2022.coling-1.145",
    pages = "1687--1696",
    abstract = "Question answering over knowledge bases (KBQA) for complex questions is a challenging task in natural language processing. Recently, generation-based methods that translate natural language questions to executable logical forms have achieved promising performance. These methods use auxiliary information to augment the logical form generation of questions with unseen KB items or novel combinations, but the noise introduced can also leads to more incorrect results. In this work, we propose GMT-KBQA, a Generation-based KBQA method via Multi-Task learning, to better retrieve and utilize auxiliary information. GMT-KBQA first obtains candidate entities and relations through dense retrieval, and then introduces a multi-task model which jointly learns entity disambiguation, relation classification, and logical form generation. Experimental results show that GMT-KBQA achieves state-of-the-art results on both ComplexWebQuestions and WebQuestionsSP datasets. Furthermore, the detailed evaluation demonstrates that GMT-KBQA benefits from the auxiliary tasks and has a strong generalization capability.",
}

Note that all data preparation steps can be skipped, as we've provided their results here. For a quick start, only steps (1), (6), and (7) are necessary.

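We also provide detailed instructions for reproducing these results; those steps are marked (Optional) below. Throughout, a name such as test[train,dev] stands for one file or command per split (test, train, and dev).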

(1) General setup

Download the CWQ dataset here and put the files under data/CWQ/origin. The dataset files should be named ComplexWebQuestions_test[train,dev].json.

Download the WebQSP dataset here and put the files under data/WebQSP/origin. The dataset files should be named WebQSP.test[train].json.

Set up Freebase: Both datasets use Freebase as the knowledge source. You may refer to Freebase Setup to set up a Virtuoso triplestore service (we use the official Freebase data dump from here). After starting your Virtuoso service, replace the variables FREEBASE_SPARQL_WRAPPER_URL and FREEBASE_ODBC_PORT in config.py with your own values.
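Before running the pipeline, it can help to confirm that the Virtuoso endpoint answers queries. The sketch below uses only the Python standard library and the SPARQL 1.1 Protocol (a GET request carrying a query parameter). The endpoint URL, the port values, and the helper names are hypothetical placeholders, not values or functions taken from config.py.

```python
import json
import urllib.parse
import urllib.request

# Hypothetical values: replace them with the endpoint and ODBC port of your own Virtuoso service.
FREEBASE_SPARQL_WRAPPER_URL = "http://localhost:3001/sparql"
FREEBASE_ODBC_PORT = "13001"  # shown for completeness; this sketch does not use ODBC

# Namespace of Freebase entities and relations in the official dump.
FREEBASE_NS = "http://rdf.freebase.com/ns/"


def build_query_url(endpoint: str, query: str) -> str:
    """Encode a SPARQL query as a GET URL using the protocol's `query` parameter."""
    return endpoint + "?" + urllib.parse.urlencode({"query": query})


def entity_name_query(mid: str) -> str:
    """SPARQL query returning up to five names of a Freebase entity, given its MID."""
    return (
        f"PREFIX ns: <{FREEBASE_NS}>\n"
        f"SELECT DISTINCT ?name WHERE {{ ns:{mid} ns:type.object.name ?name . }} LIMIT 5"
    )


def run_query(endpoint: str, query: str, timeout: float = 30.0) -> list:
    """Send the query and return the bindings of the SPARQL JSON results document."""
    request = urllib.request.Request(
        build_query_url(endpoint, query),
        headers={"Accept": "application/sparql-results+json"},
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)["results"]["bindings"]
```

With the service running and the dump loaded, run_query(FREEBASE_SPARQL_WRAPPER_URL, entity_name_query("m.02mjmr")) should return a non-empty list of bindings for that sample MID; an exception or an empty list points to a connection or loading problem.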

Other data, model checkpoints, and evaluation results can be downloaded here. Please refer to README_download.md and download what you need. FACC1 mention information can be downloaded by following data/common_data/facc1/README.md.

You may create a conda environment from the configuration file environment.yml:

conda env create -f environment.yml

Then activate the environment:

conda activate gmt

(2) (Optional) Parse SPARQL queries to S-expressions

This step can be skipped, as we've provided the parsed S-expressions in:

- CWQ: data/CWQ/sexpr/CWQ.test[train,dev].json
- WebQSP: data/WebQSP/sexpr/WebQSP.test[train].json

As stated in the paper, the original datasets do not provide S-expressions, so we generate them ourselves. The scripts below parse SPARQL queries into S-expressions.

- CWQ: Run python parse_sparql_cwq.py. It augments the original dataset files with S-expressions and saves the augmented files as data/CWQ/sexpr/CWQ.test[train,dev].json.
- WebQSP: Run python parse_sparql_webqsp.py. The augmented dataset files are saved as data/WebQSP/sexpr/WebQSP.test[train].json.
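To inspect the generated S-expressions, it can be convenient to parse them into nested lists. The following is a minimal sketch, not the parser used in parse_sparql_cwq.py or parse_sparql_webqsp.py. It assumes atoms contain no whitespace (quoted literals with spaces would need a richer tokenizer), and the example expression is illustrative only.

```python
from typing import List, Union

# An S-expression is either an atom (str) or a list of S-expressions.
SExpr = Union[str, List["SExpr"]]


def tokenize(text: str) -> List[str]:
    """Split an S-expression into parentheses and whitespace-free atoms."""
    return text.replace("(", " ( ").replace(")", " ) ").split()


def parse(text: str) -> SExpr:
    """Parse one S-expression string into nested Python lists of atoms."""
    tokens = tokenize(text)
    pos = 0

    def read() -> SExpr:
        nonlocal pos
        if pos >= len(tokens):
            raise ValueError("unexpected end of input")
        token = tokens[pos]
        pos += 1
        if token == "(":
            node: List[SExpr] = []
            while pos < len(tokens) and tokens[pos] != ")":
                node.append(read())
            if pos >= len(tokens):
                raise ValueError("missing ')'")
            pos += 1  # consume the closing ')'
            return node
        if token == ")":
            raise ValueError("unexpected ')'")
        return token

    tree = read()
    if pos != len(tokens):
        raise ValueError("trailing tokens after expression")
    return tree


def to_string(tree: SExpr) -> str:
    """Serialize nested lists back into S-expression text."""
    if isinstance(tree, str):
        return tree
    return "(" + " ".join(to_string(child) for child in tree) + ")"
```

For example, parse("(AND people.person (JOIN (R people.person.parents) m.02mjmr))") yields ['AND', 'people.person', ['JOIN', ['R', 'people.person.parents'], 'm.02mjmr']], and to_string turns that list back into the original string.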

(3) (Optional) Retrieve Candidate Entities

This step can be skipped, as we've provided the entity retrieval results in:

- CWQ: data/CWQ/entity_retrieval/candidate_entities/CWQ_test[train,dev]_merged_cand_entities_elq_facc1.json
- WebQSP: data/WebQSP/entity_retrieval/candidate_entities/WebQSP_test[train]_merged_cand_entities_elq_facc1.json

If you want to retrieve the candidate entities from scratch, follow the steps below:

- Obtain the linking results from ELQ. First, deploy our tailored ELQ. Then set the variable ELQ_SERVICE_URL in config.py to your own ELQ service URL. Next, run:
  - CWQ: python detect_and_link_entity.py --dataset CWQ --split test[train,dev] --linker elq to get the candidate entities linked by ELQ. The results will be saved as data/CWQ/entity_retrieval/candidate_entities/CWQ_test[train,dev]_cand_entities_elq.json.
  - WebQSP: python detect_and_link_entity.py --dataset WebQSP --split test[train] --linker elq. The results will be saved as data/WebQSP/entity_retrieval/candidate_entities/WebQSP_test[train]_cand_entities_elq.json.

- Retrieve candidate entities from FACC1.
  - CWQ: First run python detect_and_link_entity.py --dataset CWQ --split test[train,dev] --linker facc1 to retrieve candidate entities. Then run sh scripts/run_entity_disamb.sh CWQ predict test[train,dev] to rank the candidates with a BertRanker. The ranked results will be saved as data/CWQ/entity_retrieval/candidate_entities/CWQ_test[train,dev]_cand_entities_facc1.json.
  - WebQSP: First run python detect_and_link_entity.py --dataset WebQSP --split test[train] --linker facc1 to retrieve candidate entities. Then run sh scripts/run_entity_disamb.sh WebQSP predict test[train] to rank the candidates with a BertRanker. The ranked results will be saved as data/WebQSP/entity_retrieval/candidate_entities/WebQSP_test[train]_cand_entities_facc1.json.

- Finally, merge the linking results of ELQ and FACC1.
  - CWQ: Run python data_process.py merge_entity --dataset CWQ --split test[train,dev]. The final entity retrieval results are saved as data/CWQ/entity_retrieval/candidate_entities/CWQ_test[train,dev]_merged_cand_entities_elq_facc1.json. Note that for CWQ, entity labels are standardized in the final entity retrieval results.
  - WebQSP: Run python data_process.py merge_entity --dataset WebQSP --split test[train]. The final entity retrieval results are saved as data/WebQSP/entity_retrieval/candidate_entities/WebQSP_test[train]_merged_cand_entities_elq_facc1.json.
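The merge step combines the two linkers' outputs per question. The sketch below shows one plausible merging policy: ELQ candidates first, then FACC1 candidates not already present, truncated to a fixed size. The candidate dictionary shape, the max_per_question cut-off, and the function name are assumptions for illustration, not the schema or logic of data_process.py merge_entity.

```python
from typing import Dict, List

# Hypothetical candidate shape, e.g. {"id": "m.02mjmr", "label": "...", "score": 0.9}.
Candidate = Dict[str, object]


def merge_candidates(
    elq: Dict[str, List[Candidate]],
    facc1: Dict[str, List[Candidate]],
    max_per_question: int = 10,
) -> Dict[str, List[Candidate]]:
    """Union ELQ and FACC1 candidates per question, ELQ first, dropping duplicate entity IDs."""
    merged: Dict[str, List[Candidate]] = {}
    for qid in sorted(set(elq) | set(facc1)):
        seen = set()
        combined: List[Candidate] = []
        # ELQ candidates keep priority; FACC1 only contributes entities ELQ did not propose.
        for cand in elq.get(qid, []) + facc1.get(qid, []):
            if cand["id"] not in seen:
                seen.add(cand["id"])
                combined.append(cand)
        merged[qid] = combined[:max_per_question]
    return merged
```

Giving one linker priority keeps the merged order deterministic; a score-based interleaving would be an equally valid design if both linkers produced comparable scores.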

(4) (Optional) Retrieve Candidate Relations

This step can also be skipped, as we've provided the candidate relations in data/{DATASET}/relation_retrieval/.

If you want to retrieve the candidate relations from scratch, follow the steps below:

- Train the bi-encoder to encode questions and relations.
  - CWQ: Run python run_relation_data_process.py sample_data --dataset CWQ --split train[dev] to prepare the training data. Then run sh scripts/run_bi_encoder_CWQ.sh mask_mention to train the bi-encoder model. The trained model will be saved in data/CWQ/relation_retrieval/bi-encoder/saved_models/mask_mention.
  - WebQSP: Run python run_relation_data_process.py sample_data --dataset WebQSP --split train to prepare the training data. Then run sh scripts/run_bi_encoder_WebQSP.sh rich_relation_3epochs to train the bi-encoder model. The trained model will be saved in data/WebQSP/relation_retrieval/bi-encoder/saved_models/rich_relation_3epochs. For WebQSP, the two-hop relations of the linked entities will be queried and cached.

- Build the index of encoded relations.
  - CWQ: To encode Freebase relations with the trained bi-encoder, run python relation_retrieval/bi-encoder/build_and_search_index.py encode_relation --dataset CWQ. Then run python relation_retrieval/bi-encoder/build_and_search_index.py build_index --dataset CWQ to build the index of encoded relations. The index file will be saved as data/CWQ/relation_retrieval/bi-encoder/index/mask_mention/ep_1_flat.index.
  - WebQSP: To encode Freebase relations with the trained bi-encoder, run python relation_retrieval/bi-encoder/build_and_search_index.py encode_relation --dataset WebQSP. Then run python relation_retrieval/bi-encoder/build_and_search_index.py build_index --dataset WebQSP to build the index of encoded relations. The index file will be saved as data/WebQSP/relation_retrieval/bi-encoder/index/rich_relation_3epochs/ep_3_flat.index.

- Retrieve candidate relations using the index.
  - CWQ: First encode the questions into vectors by running python relation_retrieval/bi-encoder/build_and_search_index.py encode_question --dataset CWQ --split test[train,dev]. Then retrieve candidate relations from the index by running python relation_retrieval/bi-encoder/build_and_search_index.py retrieve_relations --dataset CWQ --split train[dev,test]. The retrieved relations will be saved as the cross-encoder's input data in data/CWQ/relation_retrieval/cross-encoder/mask_mention_1epoch_question_relation/CWQ_test[train,dev].tsv.
  - WebQSP: First encode the questions into vectors by running python relation_retrieval/bi-encoder/build_and_search_index.py encode_question --dataset WebQSP --split train[ptrain,pdev,test]. Then retrieve candidate relations from the index by running python relation_retrieval/bi-encoder/build_and_search_index.py retrieve_relations --dataset WebQSP --split test_2hop[train_2hop,train,test,ptrain,pdev]. The retrieved relations will be saved as the cross-encoder's input data in data/WebQSP/relation_retrieval/cross-encoder/rich_relation_3epochs_question_relation/WebQSP_train[ptrain,pdev,test,train_2hop,test_2hop].tsv.

- Train the cross-encoder to rank the retrieved relations.
  - CWQ: To train, run sh scripts/run_cross_encoder_CWQ_question_relation.sh train mask_mention_1epoch_question_relation. The trained model will be saved as data/CWQ/relation_retrieval/cross-encoder/saved_models/mask_mention_1epoch_question_relation/CWQ_ep_1.pt. To get inference results, run sh scripts/run_cross_encoder_CWQ_question_relation.sh predict mask_mention_1epoch_question_relation test[train,dev] CWQ_ep_1.pt. The inference results (logits) will be stored in data/CWQ/relation_retrieval/cross-encoder/saved_models/mask_mention_1epoch_question_relation/CWQ_ep_1.pt_test[train,dev].
  - WebQSP: To train, run sh scripts/run_cross_encoder_WebQSP_question_relation.sh train rich_relation_3epochs_question_relation. The trained model will be saved as data/WebQSP/relation_retrieval/cross-encoder/saved_models/rich_relation_3epochs_question_relation/WebQSP_ep_3.pt. To get inference results, run sh scripts/run_cross_encoder_WebQSP_question_relation.sh predict rich_relation_3epochs_question_relation test[train,train_2hop,test_2hop] WebQSP_ep_3.pt. The inference results (logits) will be stored in data/WebQSP/relation_retrieval/cross-encoder/saved_models/rich_relation_3epochs_question_relation/WebQSP_ep_3.pt_test[train,train_2hop,test_2hop].

- Get the sorted relations for each question.
  - CWQ: Run python data_process.py merge_relation --dataset CWQ --split test[train,dev]. The sorted relations will be saved as data/CWQ/relation_retrieval/candidate_relations/CWQ_test[train,dev]_cand_rels_sorted.json.
  - WebQSP: Run python data_process.py merge_relation --dataset WebQSP --split test[train,train_2hop,test_2hop]. The sorted relations will be saved as data/WebQSP/relation_retrieval/candidate_relations/WebQSP_test[train,train_2hop,test_2hop]_cand_rels_sorted.json.

- (Optional) To substitute only the candidate relations in a previously merged file, refer to substitude_relations_in_merged_file() in data_process.py.
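Conceptually, the relation retrieval above is a two-stage retrieve-then-rerank pipeline. The sketch below assumes that the bi-encoder scores a question against a relation by the inner product of their vectors, that the .index files are flat (brute-force) indexes as the _flat.index suffix suggests, and that merge_relation orders each question's candidates by cross-encoder logit. All function names are hypothetical, and plain Python lists stand in for real encoder outputs and a vector index library such as FAISS.

```python
import heapq
from collections import defaultdict
from typing import Dict, Iterable, List, Sequence, Tuple


def inner_product(u: Sequence[float], v: Sequence[float]) -> float:
    """Bi-encoder similarity, assumed here to be a plain dot product."""
    return sum(a * b for a, b in zip(u, v))


def search_flat_index(
    question_vec: Sequence[float],
    relation_vecs: Dict[str, Sequence[float]],
    top_k: int = 3,
) -> List[Tuple[str, float]]:
    """Stage 1: score every relation exhaustively and keep the top_k by inner product."""
    scored = ((rel, inner_product(question_vec, vec)) for rel, vec in relation_vecs.items())
    return heapq.nlargest(top_k, scored, key=lambda pair: pair[1])


def sort_relations_by_logit(
    rows: Iterable[Tuple[str, str, float]],
) -> Dict[str, List[str]]:
    """Stage 2: group (question_id, relation, logit) rows and order relations by descending logit."""
    grouped: Dict[str, List[Tuple[str, float]]] = defaultdict(list)
    for qid, relation, logit in rows:
        grouped[qid].append((relation, logit))
    return {
        qid: [rel for rel, _ in sorted(pairs, key=lambda p: p[1], reverse=True)]
        for qid, pairs in grouped.items()
    }
```

The cheap first stage narrows the full Freebase relation set to a short list, so the more expensive cross-encoder only has to score a few candidates per question.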

(5) (Optional) Prepare data for multi-task model

This step can be skipped, as we've provided the results in data/{DATASET}/generation.

Prepare all the input data for our multi-task LF generation model with the entities and relations retrieved above:

- CWQ: Run python data_process.py merge_all --dataset CWQ --split test[train,dev]. The merged data file will be saved as data/CWQ/generation/merged/CWQ_test[train,dev].json.
- WebQSP: Run python data_process.py merge_all --dataset WebQSP --split test[train]. The merged data file will be saved as data/WebQSP/generation/merged/WebQSP_test[train].json.

Note that if you retrieve candidate entities yourself, you also need to update unique_cand_entities and in_out_rels_map. To do so, delete the downloaded cache files {DATASET}_candidate_entity_ids_unique.json and {DATASET}_candidate_entities_in_out_relations.json, then rerun the commands above.
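The actual input format is produced by data_process.py merge_all. Purely to illustrate the idea of feeding retrieved candidates to the generator, the sketch below concatenates a question with its top candidate entity labels and relations into a single encoder string. The field prefixes, the separator, the truncation limits, and the function name are hypothetical and do not describe the files in data/{DATASET}/generation.

```python
from typing import List


def build_model_input(
    question: str,
    entity_labels: List[str],
    relations: List[str],
    max_entities: int = 5,
    max_relations: int = 10,
    sep: str = " | ",  # hypothetical separator between fields
) -> str:
    """Concatenate a question with its top candidate entities and relations into one input string."""
    parts = [question.strip()]
    # Candidates are assumed to be pre-sorted, so truncation keeps the highest-ranked ones.
    parts.append("entities: " + "; ".join(entity_labels[:max_entities]))
    parts.append("relations: " + "; ".join(relations[:max_relations]))
    return sep.join(parts)
```

For example, build_model_input("who are obama's parents?", ["Barack Obama"], ["people.person.parents", "people.person.children"], max_relations=1) returns "who are obama's parents? | entities: Barack Obama | relations: people.person.parents".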

(6) Generate Logical Forms through multi-task learning

- Train the logical form generation model.
  - CWQ: Our full model can be trained by running sh scripts/GMT_KBQA_CWQ.sh train {FOLDER_NAME}. The trained model will be saved in exps/CWQ_{FOLDER_NAME}.
  - WebQSP: Our full model can be trained by running sh scripts/GMT_KBQA_WebQSP.sh train {FOLDER_NAME}. The trained model will be saved in exps/WebQSP_{FOLDER_NAME}.
  - Commands for training the other model variants mentioned in our paper can be found in generation_command.txt.

- The training command above also runs inference on the test split. To run inference on another split, or to run inference alone:
  - CWQ: Run sh scripts/GMT_KBQA_CWQ.sh predict {FOLDER_NAME} False test 50 4 to run inference on the test split with beam_size=50 and test_batch_size=4.
  - WebQSP: Run sh scripts/GMT_KBQA_WebQSP.sh predict {FOLDER_NAME} False test 50 2 to run inference on the test split alone with beam_size=50 and test_batch_size=2.
  - Commands for running inference with the other model variants can be found in generation_command.txt.

- To evaluate the trained models:
  - CWQ: Run python3 eval_topk_prediction_final.py --split test --pred_file exps/CWQ_GMT_KBQA/beam_50_test_4_top_k_predictions.json --test_batch_size 4 --dataset CWQ
  - WebQSP: Run python3 eval_topk_prediction_final.py --split test --pred_file exps/WebQSP_GMT_KBQA/beam_50_test_2_top_k_predictions.json --test_batch_size 2 --dataset WebQSP
  - These commands assume {FOLDER_NAME} is GMT_KBQA; adjust the --pred_file path to match your own folder name.
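Because the model outputs a beam of candidate logical forms, evaluation has to decide which one to execute. A common policy in generation-based KBQA, assumed here rather than taken from eval_topk_prediction_final.py, is to execute the candidates in rank order, keep the answers of the first one that returns a non-empty result, and score them with set-level F1 against the gold answers. In the sketch, execute is a hypothetical callback that would wrap a SPARQL query against your Freebase service.

```python
from typing import Callable, List, Optional, Set


def answer_f1(pred: Set[str], gold: Set[str]) -> float:
    """Set-level F1 between predicted and gold answers (1.0 when both are empty)."""
    if not pred and not gold:
        return 1.0
    overlap = len(pred & gold)
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(gold)
    return 2 * precision * recall / (precision + recall)


def first_executable_answers(
    ranked_lfs: List[str],
    execute: Callable[[str], Optional[Set[str]]],
) -> Set[str]:
    """Execute logical forms in beam order and return the first non-empty answer set."""
    for lf in ranked_lfs:
        answers = execute(lf)  # None or an empty set is treated as "not executable"
        if answers:
            return answers
    return set()
```

Falling back to lower-ranked beams lets a syntactically invalid or empty top prediction be replaced by a usable one, which is one reason the inference step keeps a large beam.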

(7) Ablation experiments and Error analysis

- Evaluate the entity linking and relation linking results:
  - CWQ: Run python ablation_exps.py linking_evaluation --dataset CWQ
  - WebQSP: Run python ablation_exps.py linking_evaluation --dataset WebQSP

- Evaluate QA performance on questions with unseen entities/relations:
  - CWQ: Run python ablation_exps.py unseen_evaluation --dataset CWQ --model_type full to get the evaluation results of our full model GMT-KBQA. Run python ablation_exps.py unseen_evaluation --dataset CWQ --model_type base to get the evaluation results of the T5-base model.
  - WebQSP: Run python ablation_exps.py unseen_evaluation --dataset WebQSP --model_type full to get the evaluation results of our full model GMT-KBQA. Run python ablation_exps.py unseen_evaluation --dataset WebQSP --model_type base to get the evaluation results of the T5-base model.

- Error analysis on GMT-KBQA results:
  - Run python error_analysis.py.
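The unseen evaluation groups test questions by whether their KB items were observed during training. One possible criterion, which is an assumption and may differ from what ablation_exps.py implements, is to call a question unseen if any of its gold entities or relations never occurs in the gold logical forms of the training set. The function name and input shapes below are hypothetical.

```python
from typing import Dict, Iterable, List, Set, Tuple


def split_seen_unseen(
    train_items: Iterable[Set[str]],
    test_items: Dict[str, Set[str]],
) -> Tuple[List[str], List[str]]:
    """Partition test question IDs by whether all their gold KB items occur in training."""
    seen_in_train: Set[str] = set()
    for items in train_items:
        seen_in_train |= items
    seen: List[str] = []
    unseen: List[str] = []
    for qid, items in test_items.items():
        # A question counts as seen only if every gold entity/relation appeared in training.
        (seen if items <= seen_in_train else unseen).append(qid)
    return seen, unseen
```

QA metrics such as F1 can then be averaged separately over the two groups to compare how GMT-KBQA and T5-base generalize.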

We found that, because a file was overwritten, the disambiguated entities of the WebQSP training set contain only entities from FACC1 (they should contain the merged results of ELQ and FACC1). The disambiguated entities of the WebQSP training set are used only in our model variant w/Retrieval for WebQSP, shown in Table 4 of our paper; other models and model variants are not affected.

- Influence: In Table 4 of our paper, the F1 of w/Retrieval on the WebQSP dataset should be 73.7%, which is still 1.0 point lower than T5-base. Other experimental results remain unchanged.
- Corrected data: We have uploaded a patch folder to Google Drive; download the files in this folder and follow its README.md to replace the previous files. We have also updated the experimental results of the w/Retrieval model variant for the WebQSP dataset in Google Drive, under exps/WebQSP_concat_retrieval.tar.gz. If you do not use our w/Retrieval model variant for WebQSP, there is no need to update the data.