Yupan Huang, Zaiqiao Meng, Fangyu Liu, Yixuan Su, Nigel Collier, Yutong Lu.

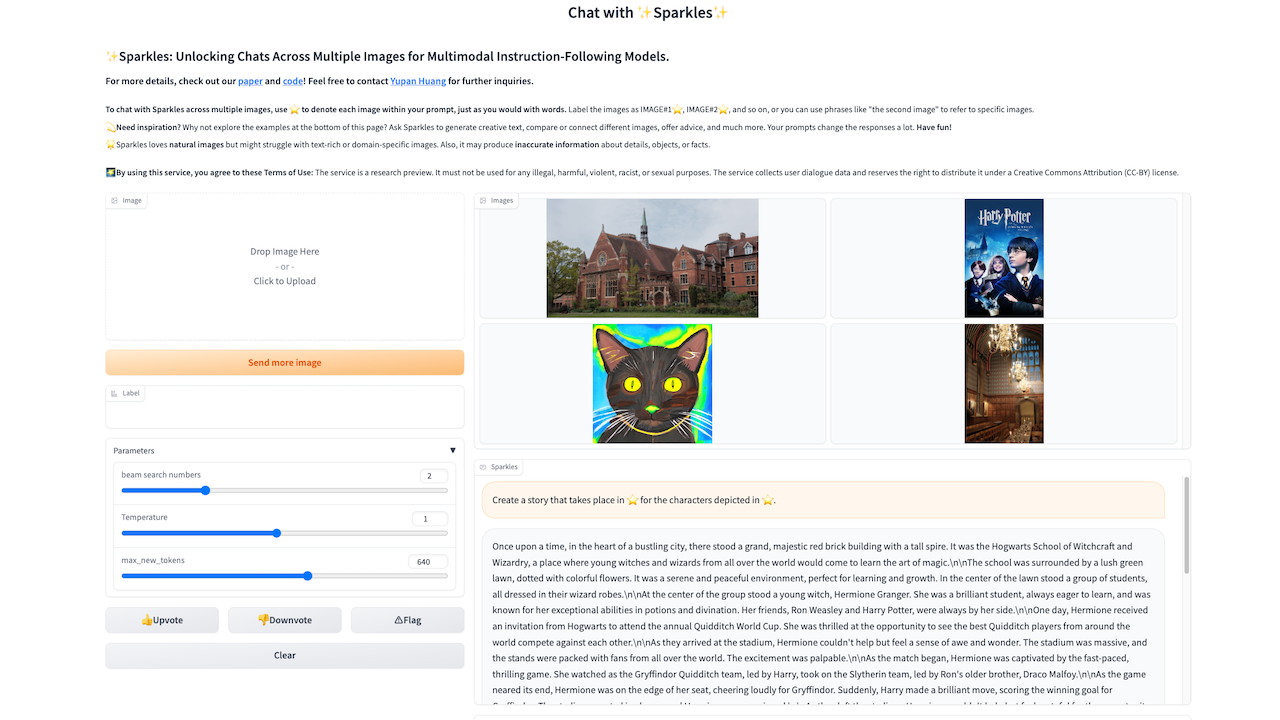

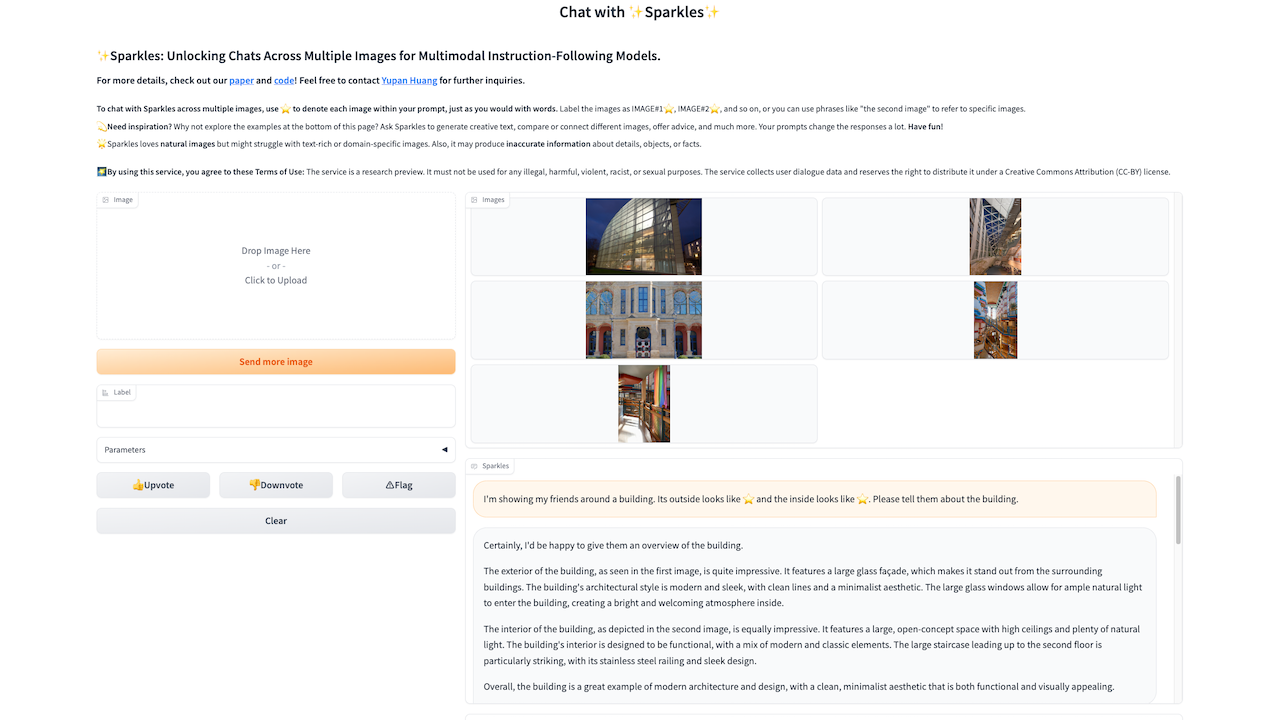

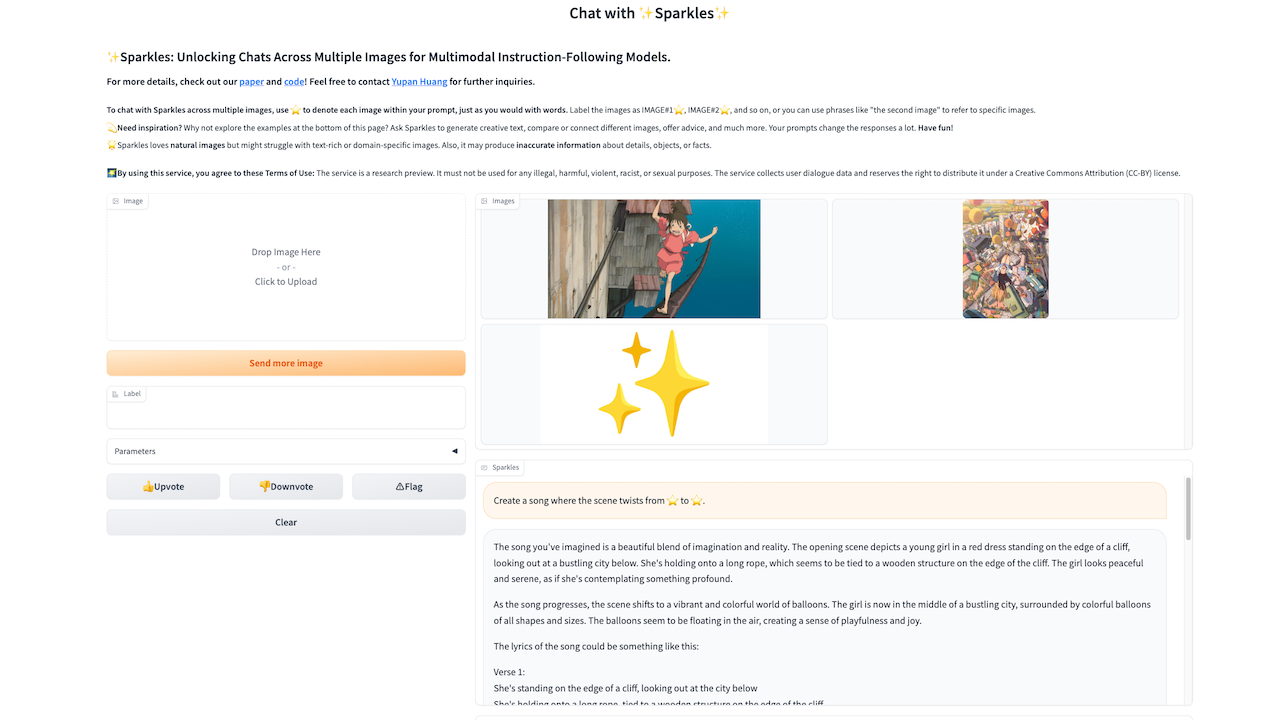

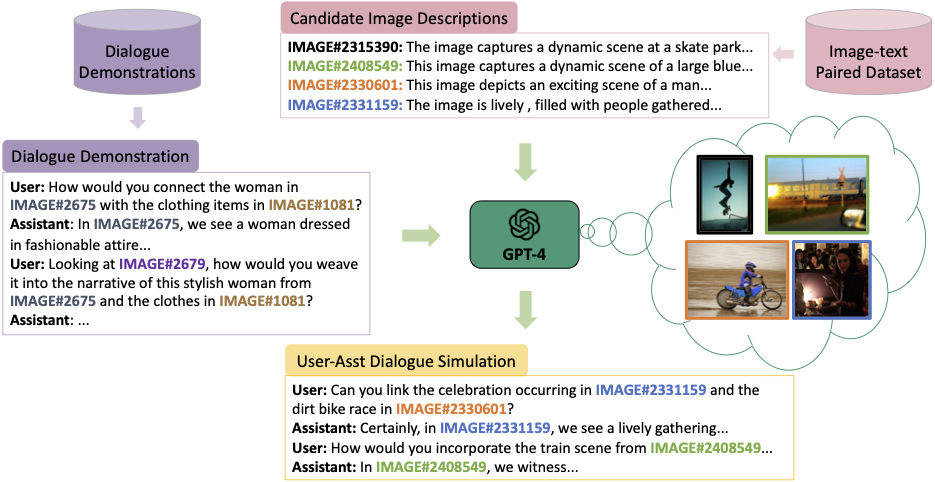

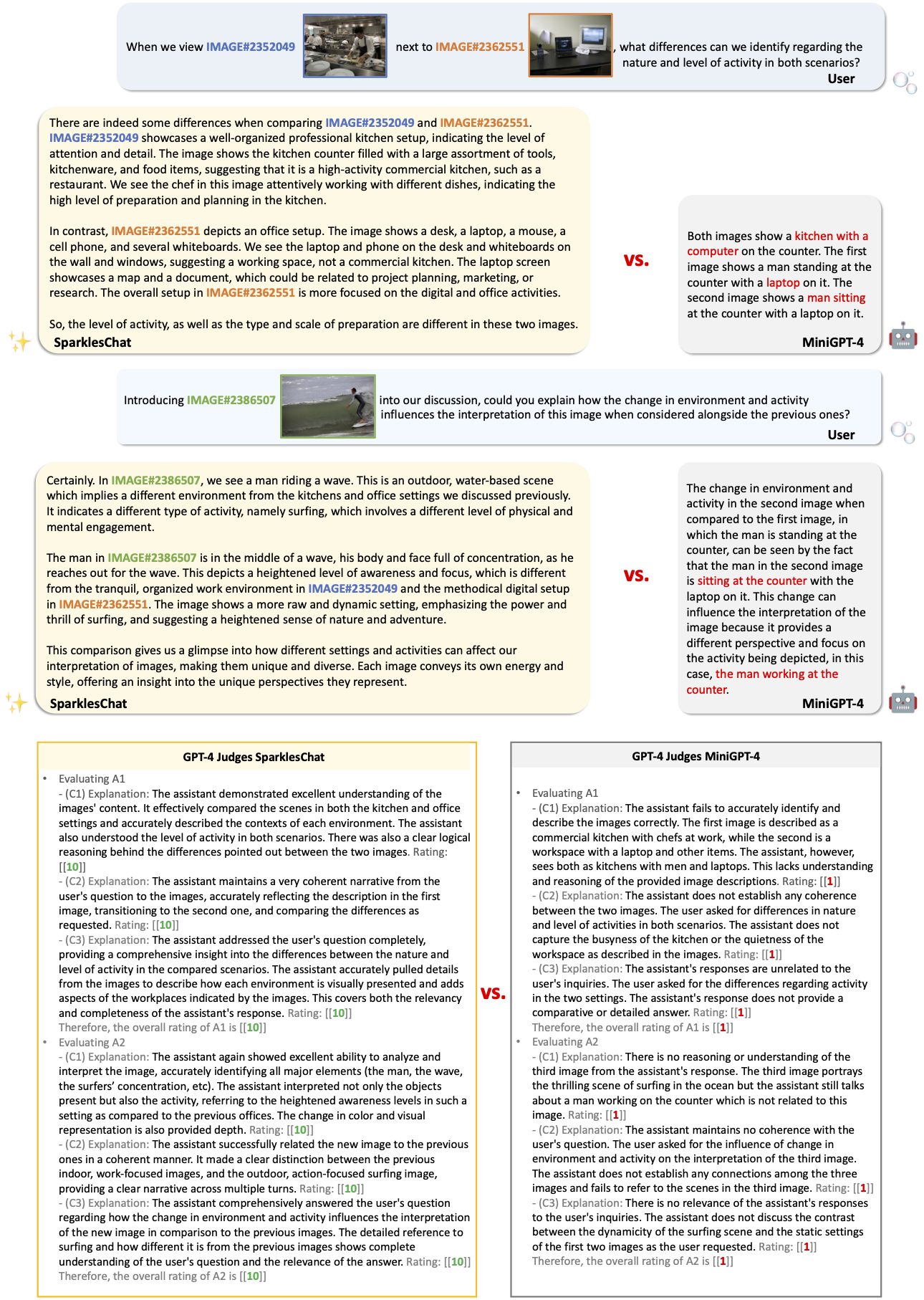

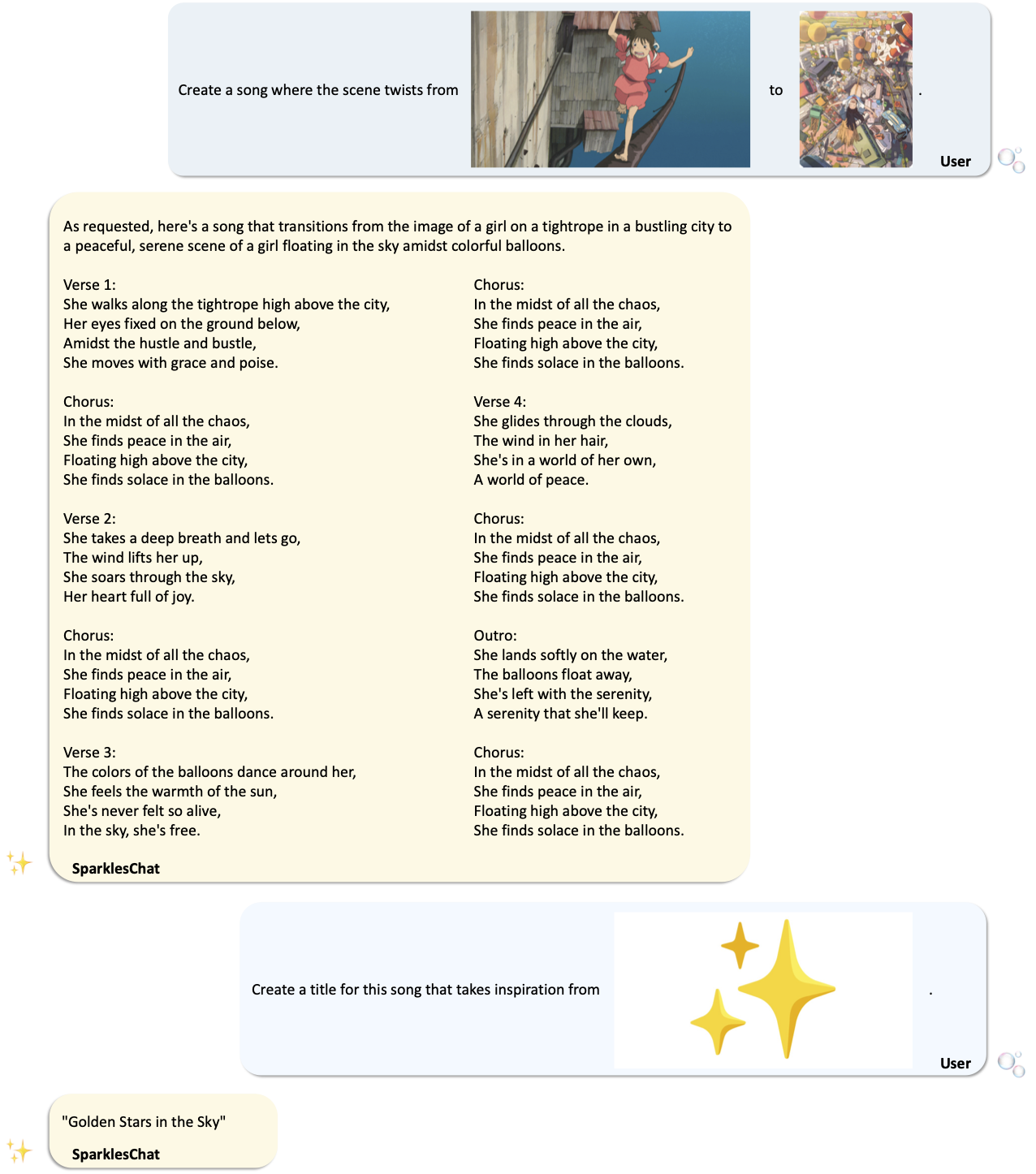

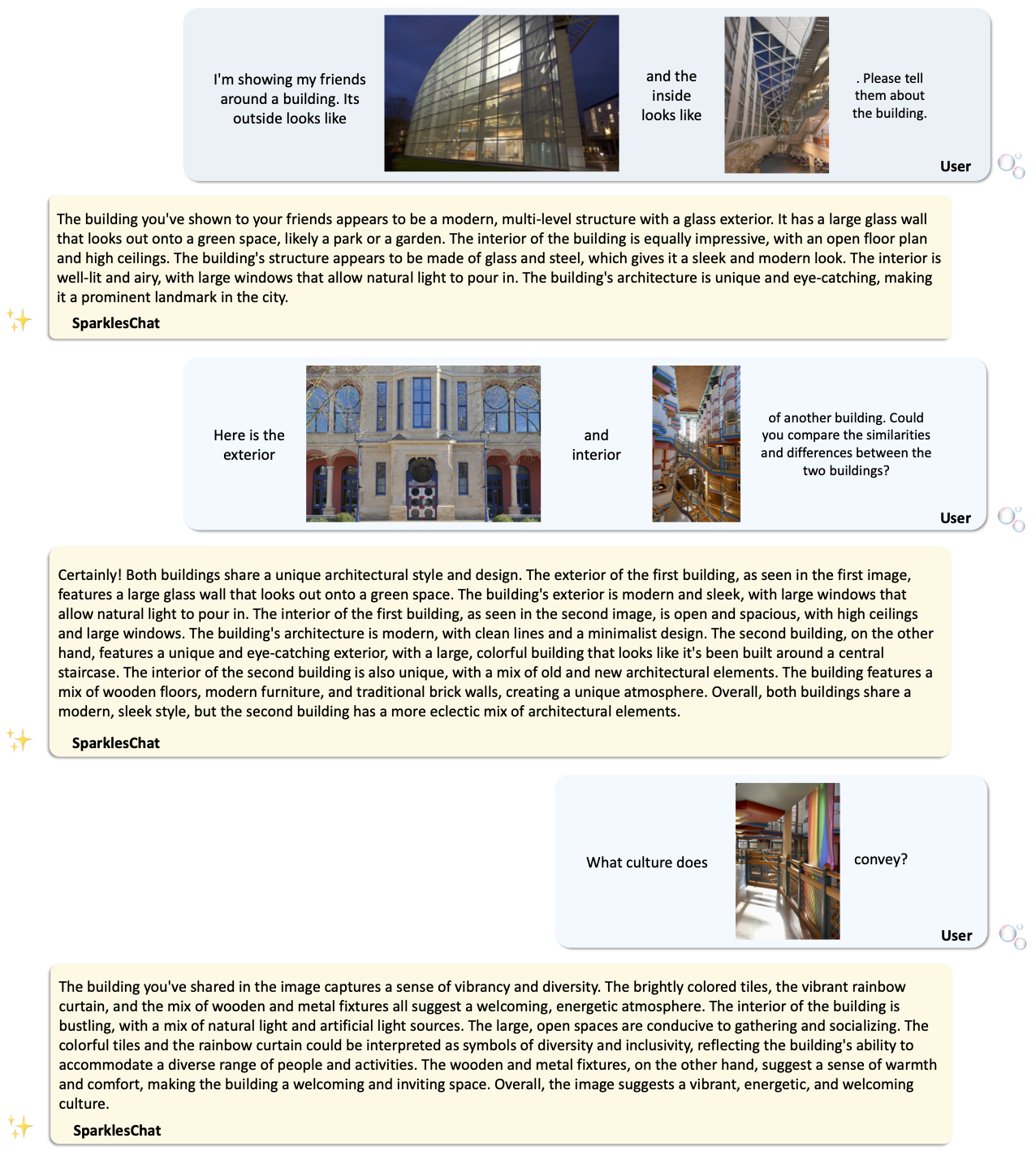

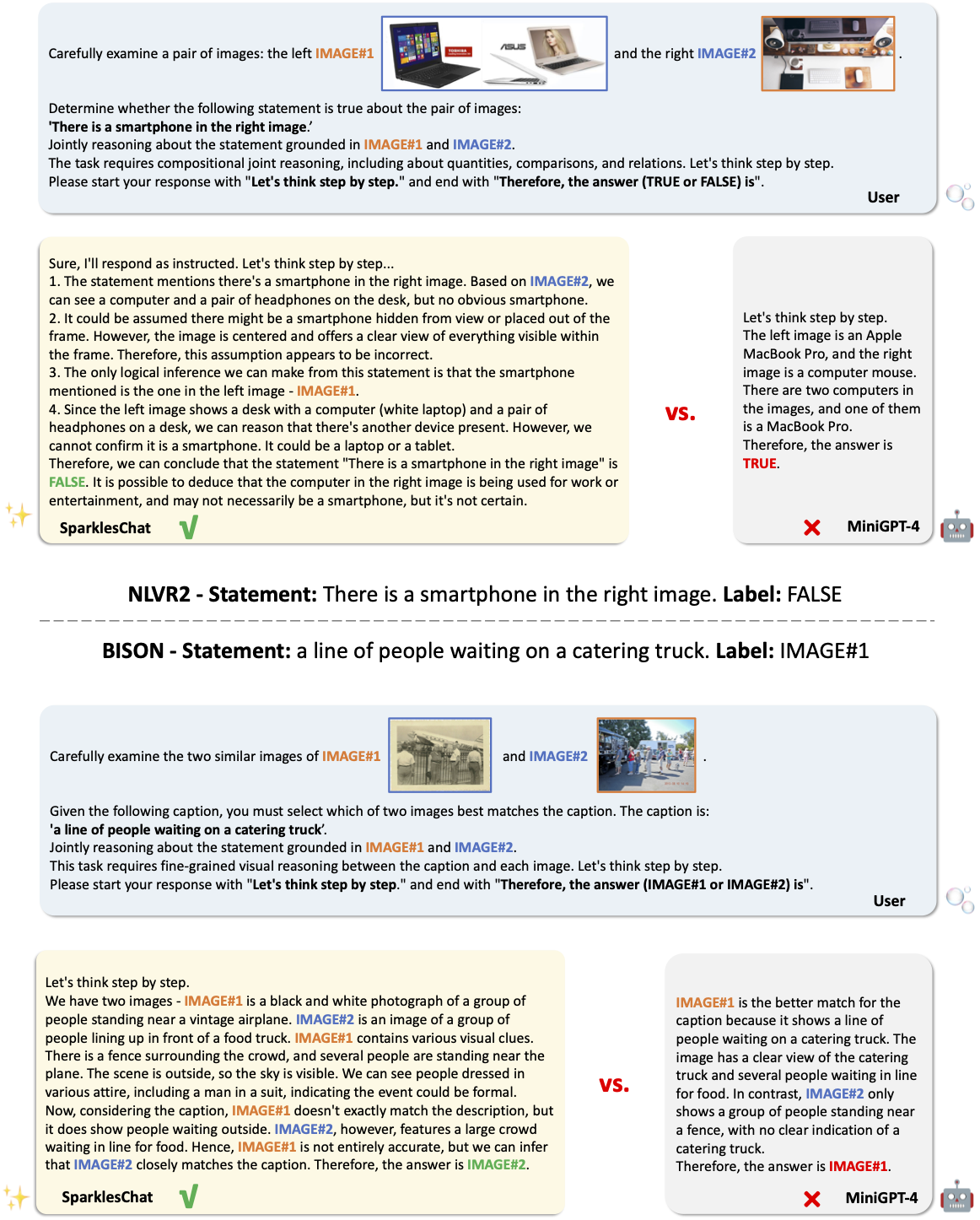

Large language models exhibit enhanced zero-shot performance on various tasks when fine-tuned with instruction-following data. Multimodal instruction-following models extend these capabilities by integrating both text and images. However, existing models such as MiniGPT-4 face challenges in maintaining dialogue coherence in scenarios involving multiple images. A primary reason is the lack of a specialized dataset for this critical application. To bridge these gaps, we present SparklesChat, a multimodal instruction-following model for open-ended dialogues across multiple images. To support the training, we introduce SparklesDialogue, the first machine-generated dialogue dataset tailored for word-level interleaved multi-image and text interactions. Furthermore, we construct SparklesEval, a GPT-assisted benchmark for quantitatively assessing a model's conversational competence across multiple images and dialogue turns. Our experiments validate the effectiveness of SparklesChat in understanding and reasoning across multiple images and dialogue turns. Specifically, SparklesChat outperformed MiniGPT-4 on established vision-and-language benchmarks, including the BISON binary image selection task and the NLVR2 visual reasoning task. Moreover, SparklesChat scored 8.56 out of 10 on SparklesEval, substantially exceeding MiniGPT-4's score of 3.91 and nearing GPT-4's score of 9.26. Qualitative evaluations further demonstrate SparklesChat's generality in handling real-world applications.

git clone https://github.com/HYPJUDY/Sparkles.git

cd Sparkles

conda env create -f environment.yml

conda activate sparkles

Required for generating SparklesDialogue and evaluation on SparklesEval. See OpenAI API and call_gpt_api.py for more details.

export OPENAI_API_KEY="your-api-key-here" # required, get key from https://platform.openai.com/account/api-keys

export OPENAI_ORGANIZATION="your-organization-here" # optional, only if you want to specify an organization

export OPENAI_API_BASE="https://your-endpoint.openai.com" # optional, only if calling the API from Azure

Most related resources for Sparkles are available on OneDrive.
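Before running the GPT-dependent scripts, the three OpenAI environment variables named above can be sanity-checked. The following is a minimal sketch, not part of the Sparkles code base: the function name `read_openai_settings` is hypothetical, and it only inspects the variables rather than calling the API.

```python
import os
from typing import Mapping, Optional


def read_openai_settings(env: Optional[Mapping[str, str]] = None) -> dict:
    """Collect the OpenAI settings named in this README (hypothetical helper)."""
    env = os.environ if env is None else env
    api_key = env.get("OPENAI_API_KEY", "").strip()
    # The key is the only required value; reject the README's placeholder too.
    if not api_key or api_key == "your-api-key-here":
        raise RuntimeError("OPENAI_API_KEY is unset or still a placeholder")
    return {
        "api_key": api_key,
        # Optional values: None means "not configured".
        "organization": env.get("OPENAI_ORGANIZATION") or None,
        "api_base": env.get("OPENAI_API_BASE") or None,
    }


if __name__ == "__main__":
    try:
        settings = read_openai_settings()
    except RuntimeError as err:
        print(f"not ready: {err}")
    else:
        configured = [name for name, value in settings.items() if value]
        print("configured:", ", ".join(configured))
```

Passing a plain dictionary instead of the real environment makes the check easy to try without exporting anything.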

Structure of Resources.

Sparkles

├── models

│ ├── pretrained

│ │ ├── sparkleschat_7b.pth

│ │ ├── minigpt4_7b_stage1.pth

├── data

│ ├── SparklesDialogueCC

│ │ ├── annotations

| | | ├── SparklesDialogueCC.json

| | | ├── SparklesDialogueCC.html

| | | ├── SparklesDialogueCC_50demo_img11.json

| | | ├── ...

| | | ├── SparklesDialogueCC_turn1_1img.json

| | | ├── ...

│ │ ├── images

│ ├── SparklesDialogueVG

│ │ ├── annotations

| | | ├── SparklesDialogueVG.json

| | | ├── SparklesDialogueVG.html

| | | ├── SparklesDialogueVG_turn1_2img.json

| | | ├── ...

│ │ ├── images

│ ├── cc_sbu_align

│ │ ├── image

│ │ ├── filter_cap.json

│ │ ├── ...

│ ├── SVIT

│ │ ├── detail_description.json

│ │ ├── SVIT_detail_description_filtered_for_Sparkles.json

│ ├── LLaVA

│ │ ├── complex_reasoning_77k.json

│ │ ├── detail_23k.json

│ │ ├── LLaVA_complex_reasoning_77k_filtered_for_Sparkles.json

│ │ ├── LLaVA_detail_23k_filtered_for_Sparkles.json

│ ├── VisualGenome

│ │ ├── image_data.json

├── evaluation

│ ├── SparklesEval

│ │ ├── images

│ │ ├── annotations

| | | ├── sparkles_evaluation_sparkleseval_annotations.json

| | | ├── sparkles_evaluation_sparkleseval_annotations.html

│ │ ├── results

| | | ├── SparklesEval_models_pretrained_sparkleschat_7b.json

| | | ├── SparklesEval_models_pretrained_sparkleschat_7b.html

| | | ├── SparklesEval_models_pretrained_minigpt4_7b.json

| | | ├── SparklesEval_models_pretrained_minigpt4_7b.html

│ ├── BISON

│ │ ├── images

│ │ ├── annotations

| | | ├── sparkles_evaluation_bison_annotations.json

│ │ ├── results

| | | ├── BISON_models_pretrained_sparkleschat_7b_acc0.567.json

| | | ├── BISON_models_pretrained_sparkleschat_7b_acc0.567.html

| | | ├── BISON_models_pretrained_minigpt4_7b_acc0.460.json

| | | ├── BISON_models_pretrained_minigpt4_7b_acc0.460.html

│ ├── NLVR2

│ │ ├── images

│ │ ├── annotations

| | | ├── sparkles_evaluation_nlvr2_annotations.json

│ │ ├── results

| | | ├── NLVR2_models_pretrained_sparkleschat_7b_acc0.580.json

| | | ├── NLVR2_models_pretrained_sparkleschat_7b_acc0.580.html

| | | ├── NLVR2_models_pretrained_minigpt4_7b_acc0.513.json

| | | ├── NLVR2_models_pretrained_minigpt4_7b_acc0.513.html

├── assets

│ ├── images

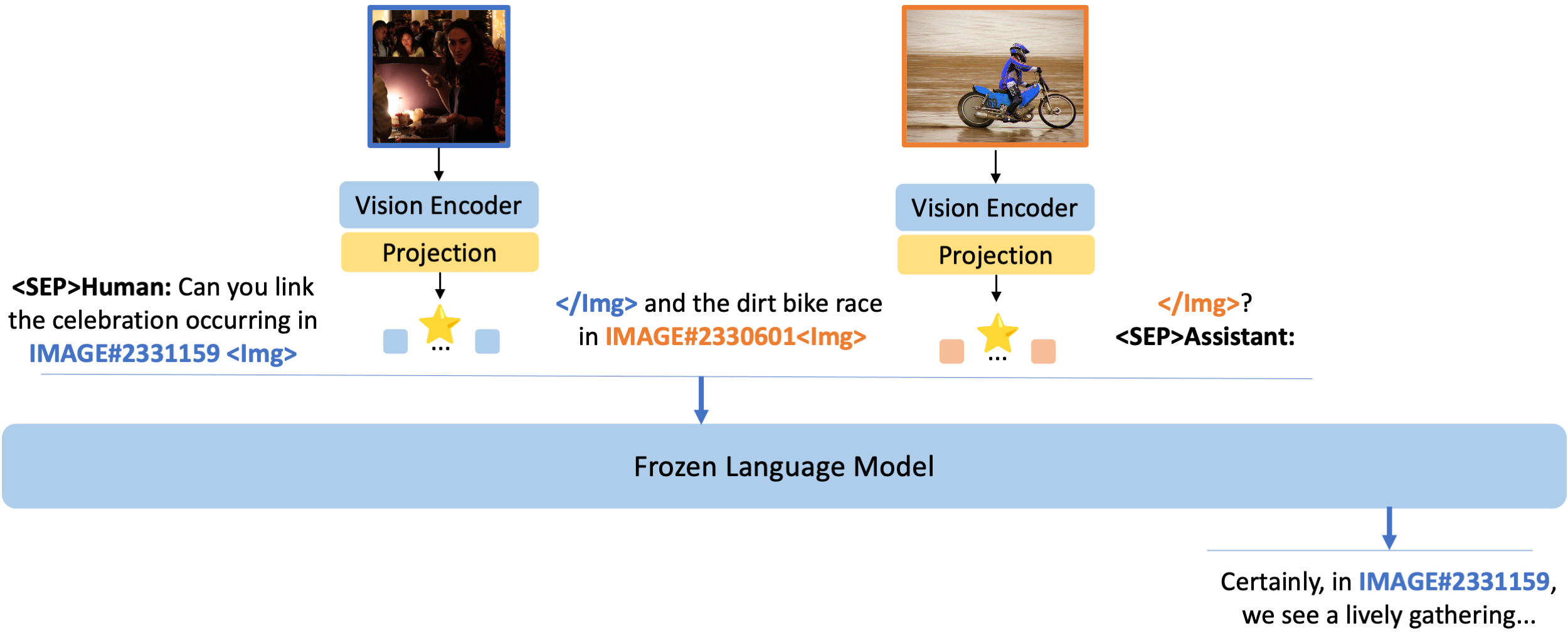

│ ├── statisticsSparklesChat is trained from the first stage pretrained model of MiniGPT-4, which connects a pretrained vision encoder and a pretrained LLM with a projection layer. For SparklesChat/MiniGPT-4, only the projection layer is trainable for efficiency and its parameters are saved separately. The language decoder, Vicuna, is based on the LLaMA framework, which can handle diverse language tasks. For image processing, we use the visual encoder from BLIP-2, combining a pretrained EVA-ViT in Vision Transformer (ViT) backbone with a pretrained Q-Former.

Detailed instructions to download these pretrained models

- Pretrained vision models: the weights of EVA-ViT and Q-Former will be downloaded automatically because their links have been embedded in the code.

- Pretrained language models:

  - Request access to the LLaMA-7B weight and convert it to the HuggingFace Transformers format in `/path/to/llama-7b-hf/`. See this instruction.

  - Download the delta weight of the v0 version of Vicuna-7B (lmsys/vicuna-7b-delta-v0) to `/path/to/vicuna-7b-delta-v0/`.

    git lfs install

    git clone https://huggingface.co/lmsys/vicuna-7b-delta-v0

  - Add the delta to the original LLaMA weights to obtain the Vicuna weights in `/path/to/vicuna-7b-v0/`. Check this instruction.

    pip install git+https://github.com/lm-sys/FastChat.git@v0.1.10

    python -m fastchat.model.apply_delta --base /path/to/llama-7b-hf/ --target /path/to/vicuna-7b-v0/ --delta /path/to/vicuna-7b-delta-v0/

    Then, set the path of llama_model in configs/models/sparkleschat.yaml to the path of the Vicuna weights.

    llama_model: "/path/to/vicuna-7b-v0/"

- (Optional, only for training) Download the first stage pretrained model of MiniGPT-4 (7B) and set the ckpt in train_configs/sparkleschat.yaml to the corresponding path.

  ckpt: '/path/to/Sparkles/models/pretrained/minigpt4_7b_stage1.pth'

- (Optional, only for inference) Download the pretrained weight of SparklesChat and set the ckpt in eval_configs/sparkles_eval.yaml to the corresponding path.

  ckpt: '/path/to/Sparkles/models/pretrained/sparkleschat_7b.pth'
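Because several config values above ship as `/path/to/...` placeholders, it can help to scan the config files for entries that were never edited. The sketch below is an illustration only: `find_placeholder_paths` is a hypothetical helper, and it uses a simple line-based match rather than a real YAML parser, so it assumes plain `key: value` lines like the ones shown above.

```python
import re
from pathlib import Path
from typing import List, Tuple

PLACEHOLDER = "/path/to/"
# Matches simple "key: value" lines; this is not a full YAML parser.
KEY_VALUE = re.compile(r"^\s*([A-Za-z_]\w*)\s*:\s*(.+?)\s*$")


def find_placeholder_paths(yaml_text: str) -> List[Tuple[int, str, str]]:
    """Return (line_number, key, value) for entries still containing '/path/to/'."""
    hits = []
    for number, line in enumerate(yaml_text.splitlines(), start=1):
        code = line.split("#", 1)[0]  # drop trailing comments and commented-out lines
        match = KEY_VALUE.match(code)
        if match and PLACEHOLDER in match.group(2):
            hits.append((number, match.group(1), match.group(2).strip("'\"")))
    return hits


if __name__ == "__main__":
    # Config files named in this README; files that are missing are skipped.
    for name in ("configs/models/sparkleschat.yaml",
                 "train_configs/sparkleschat.yaml",
                 "eval_configs/sparkles_eval.yaml"):
        path = Path(name)
        if path.is_file():
            for number, key, value in find_placeholder_paths(path.read_text()):
                print(f"{name}:{number}: {key} still points at {value}")
```

Run from the repository root, this prints one line per unedited entry and nothing when every path has been set.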

python demo.py --cfg-path eval_configs/sparkles_eval.yaml --gpu-id 0 --save-root /path/to/Sparkles/demo/

Demo Illustrations.

First, please download the SparklesDialogue dataset.

Then, set the output_dir in train_configs/sparkleschat.yaml to the path where you want to save the model.

output_dir: "/path/to/Sparkles/models/SparklesChat/"

Then run

torchrun --nproc-per-node 8 train.py --cfg-path train_configs/sparkleschat.yaml

The default setting is for training the final model of SparklesChat as reported in the paper.

Train other models.

You can train other models by changing the sample_ratio of different datasets or tuning other parameters such as the number of GPUs, batch size, and learning rate.
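To build intuition for what changing `sample_ratio` does, the sketch below assumes the ratios act as relative sampling weights across datasets, which is how MiniGPT-4-style multi-dataset loaders typically behave; the training code remains the authority on the exact semantics. The ratio values and the helper `sample_dataset_names` are hypothetical and chosen only for illustration.

```python
import random
from collections import Counter
from typing import Dict


def sample_dataset_names(sample_ratio: Dict[str, float], steps: int, seed: int = 0) -> Counter:
    """Draw one dataset name per step with probability proportional to its ratio."""
    rng = random.Random(seed)
    names = list(sample_ratio)
    weights = [sample_ratio[name] for name in names]
    return Counter(rng.choices(names, weights=weights, k=steps))


# Hypothetical ratios, not the settings used in the paper.
ratios = {"SparklesDialogueCC": 2.0, "SparklesDialogueVG": 1.0}
steps = 3000
counts = sample_dataset_names(ratios, steps=steps)
for name, count in counts.most_common():
    print(f"{name}: {count / steps:.2f} of sampled batches")
```

Under this assumption, doubling one dataset's ratio roughly doubles how often its batches are drawn relative to the others, without changing the datasets themselves.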

If you want to train with other datasets such as LLaVA_description, LLaVA_reasoning, cc_sbu_align, please uncomment their relevant configs in train_configs/sparkleschat.yaml and download them as well.

Note that for LLaVA, we have adapted the original text annotations (i.e., detail_23k.json and complex_reasoning_77k.json) to the format of Sparkles (i.e., LLaVA_complex_reasoning_77k_filtered_for_Sparkles.json and LLaVA_detail_23k_filtered_for_Sparkles.json).

You need to download the COCO images to /path/to/coco/train2017 and specify this path in train_configs/sparkleschat.yaml.

Download the SparklesDialogue dataset to /path/to/Sparkles/data/SparklesDialogueVG/ and /path/to/Sparkles/data/SparklesDialogueCC/.

Set the root_dir in train_configs/sparkleschat.yaml to the corresponding paths.

Set the sparkles_root="/path/to/Sparkles/" in dataset/config_path.py.
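Once the paths above are set, a quick check that the expected files sit under `sparkles_root` can catch download mistakes before a long training run. This is a minimal sketch: the relative paths are copied from the resource tree in this README, while the helper `missing_paths` and the choice of which entries to check are assumptions.

```python
from pathlib import Path
from typing import Iterable, List

# Relative paths taken from the resource tree in this README.
EXPECTED_TRAINING_PATHS = (
    "data/SparklesDialogueCC/annotations/SparklesDialogueCC.json",
    "data/SparklesDialogueCC/images",
    "data/SparklesDialogueVG/annotations/SparklesDialogueVG.json",
    "data/SparklesDialogueVG/images",
    "models/pretrained/minigpt4_7b_stage1.pth",
)


def missing_paths(sparkles_root: str,
                  expected: Iterable[str] = EXPECTED_TRAINING_PATHS) -> List[str]:
    """Return the expected relative paths that do not exist under sparkles_root."""
    root = Path(sparkles_root)
    return [rel for rel in expected if not (root / rel).exists()]


if __name__ == "__main__":
    missing = missing_paths("/path/to/Sparkles/")  # replace with your own root
    if missing:
        print("missing:")
        for rel in missing:
            print("  " + rel)
    else:
        print("all expected training resources found")
```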

SparklesDialogueCC.html and SparklesDialogueVG.html have been included in resources.

Images should be in /path/to/Sparkles/data/SparklesDialogueCC/images and /path/to/Sparkles/data/SparklesDialogueVG/images respectively.

Otherwise, please specify the relative_dir in dataset/visualize_data_in_html.py when generating the HTML files:

python dataset/visualize_data_in_html.py --visualize_SparklesDialogue # --sparkles_root /path/to/Sparkles/

# you can also visualize the evaluation results

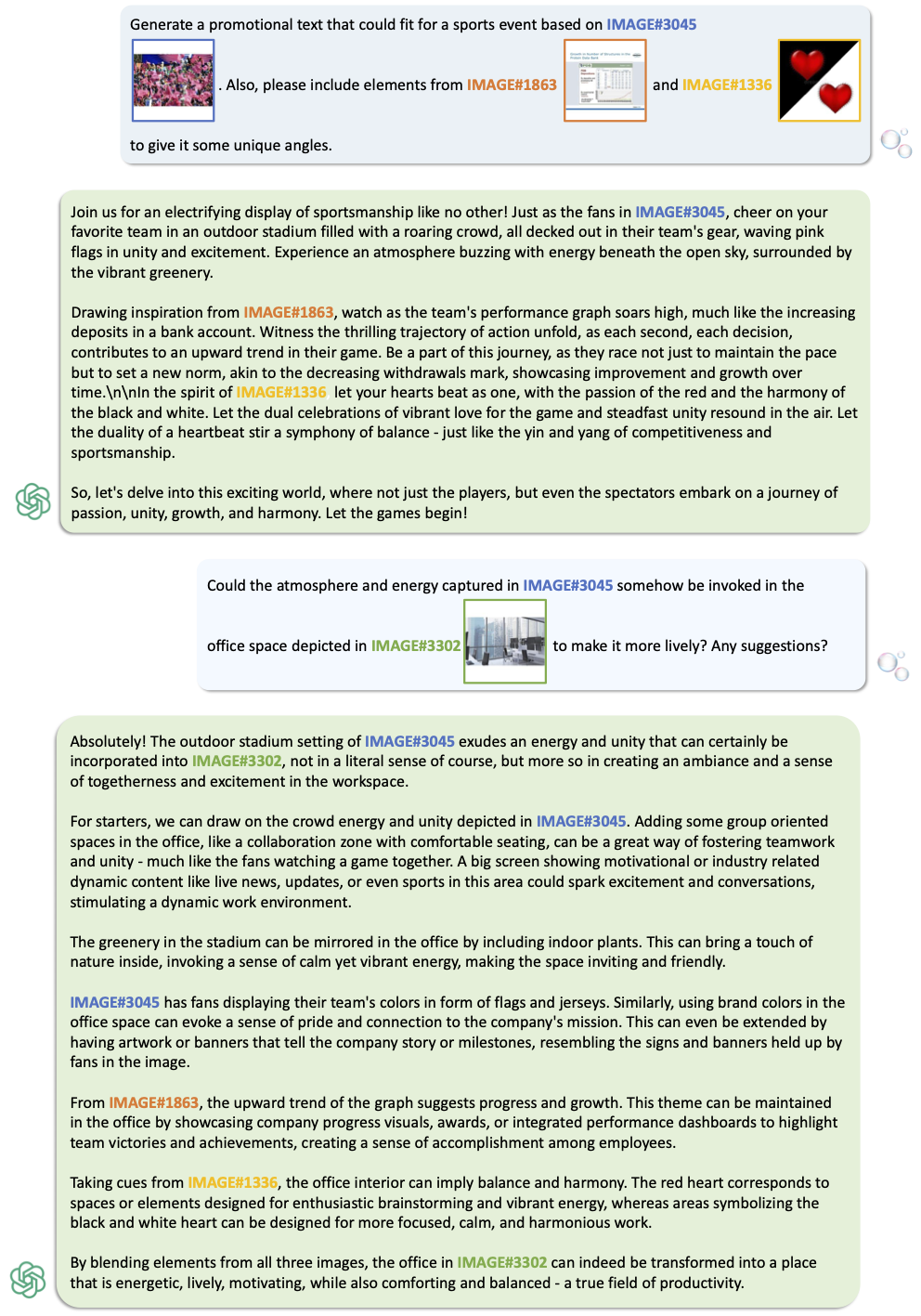

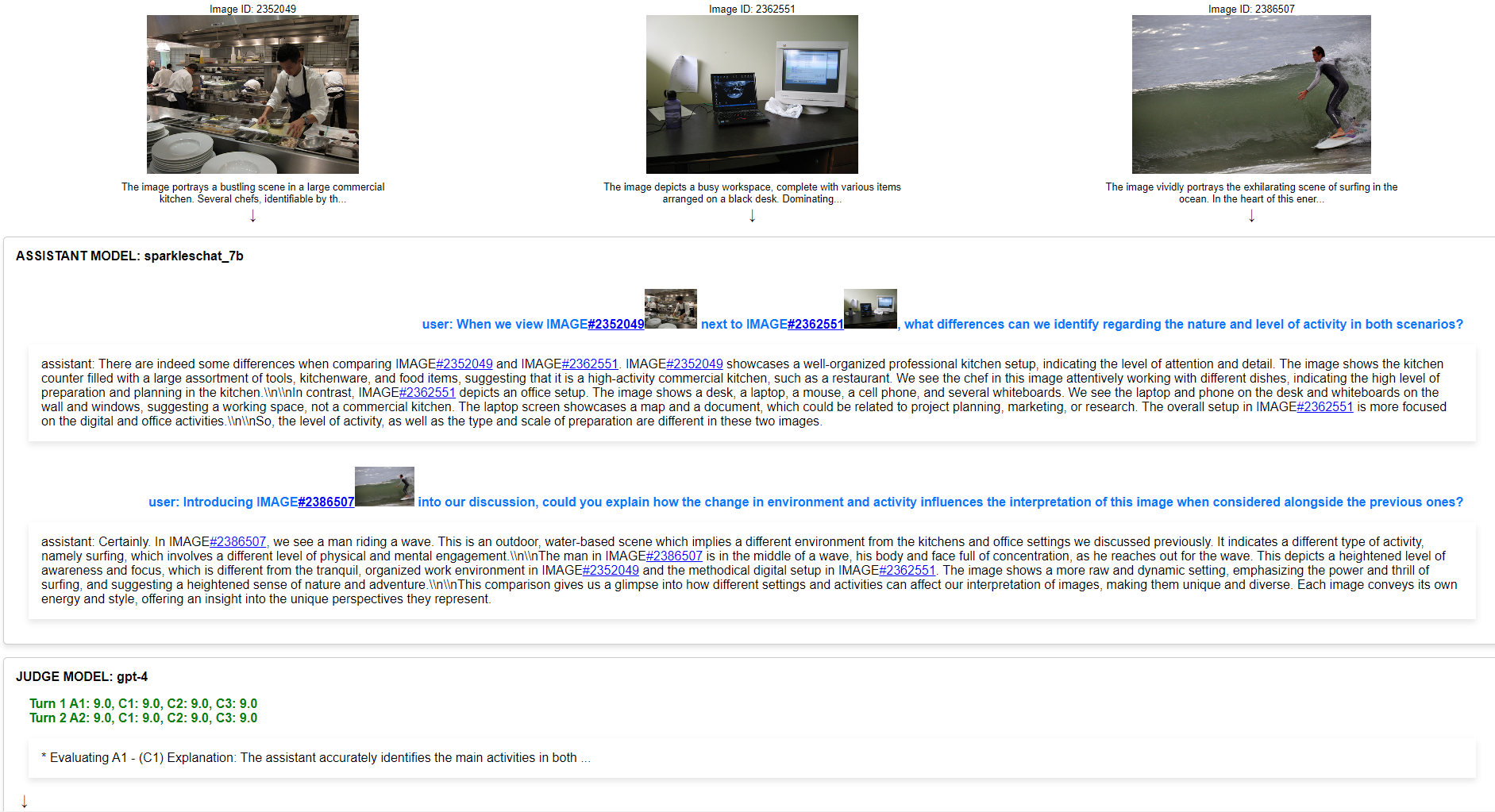

python dataset/visualize_data_in_html.py --visualize_eval_results # --sparkles_root /path/to/Sparkles/Full page illustration.

- Click arrows to view detailed image descriptions or the judge model's responses.

- Hover over image IDs to view their corresponding images.

- Click images to view the full-size image.

# Install benepar parser, refer to https://github.com/nikitakit/self-attentive-parser#installation

python -m spacy download en_core_web_md

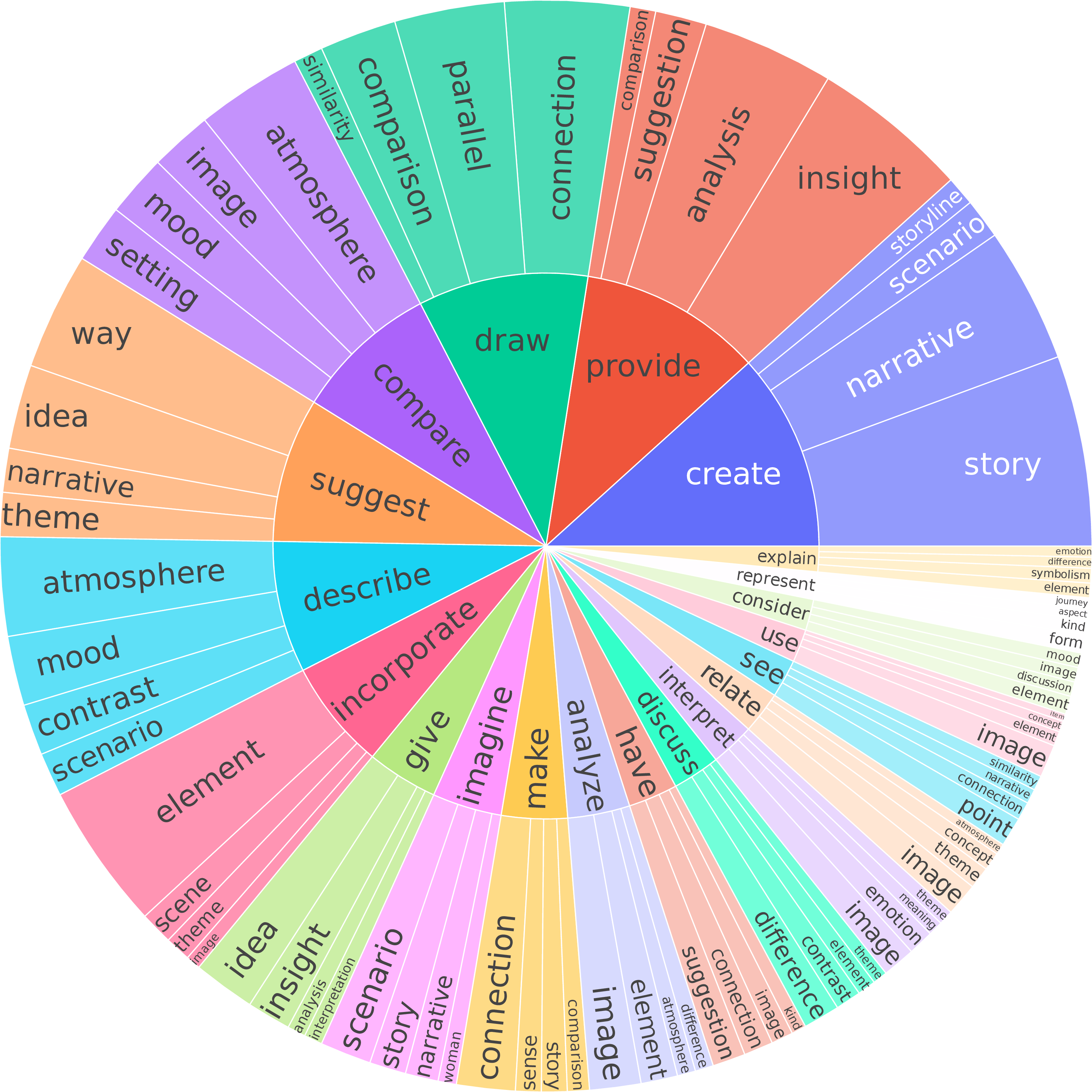

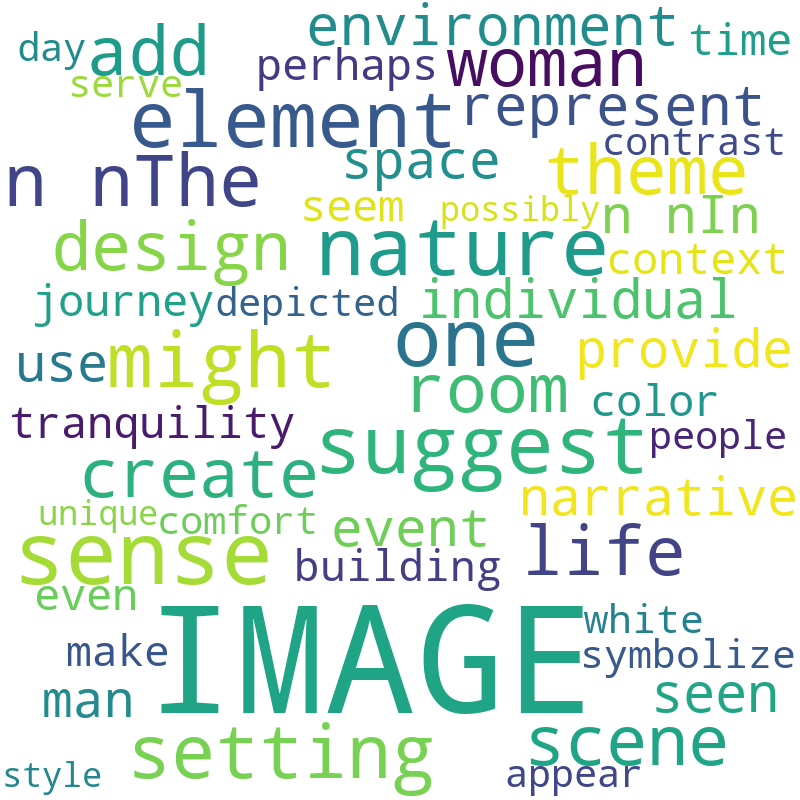

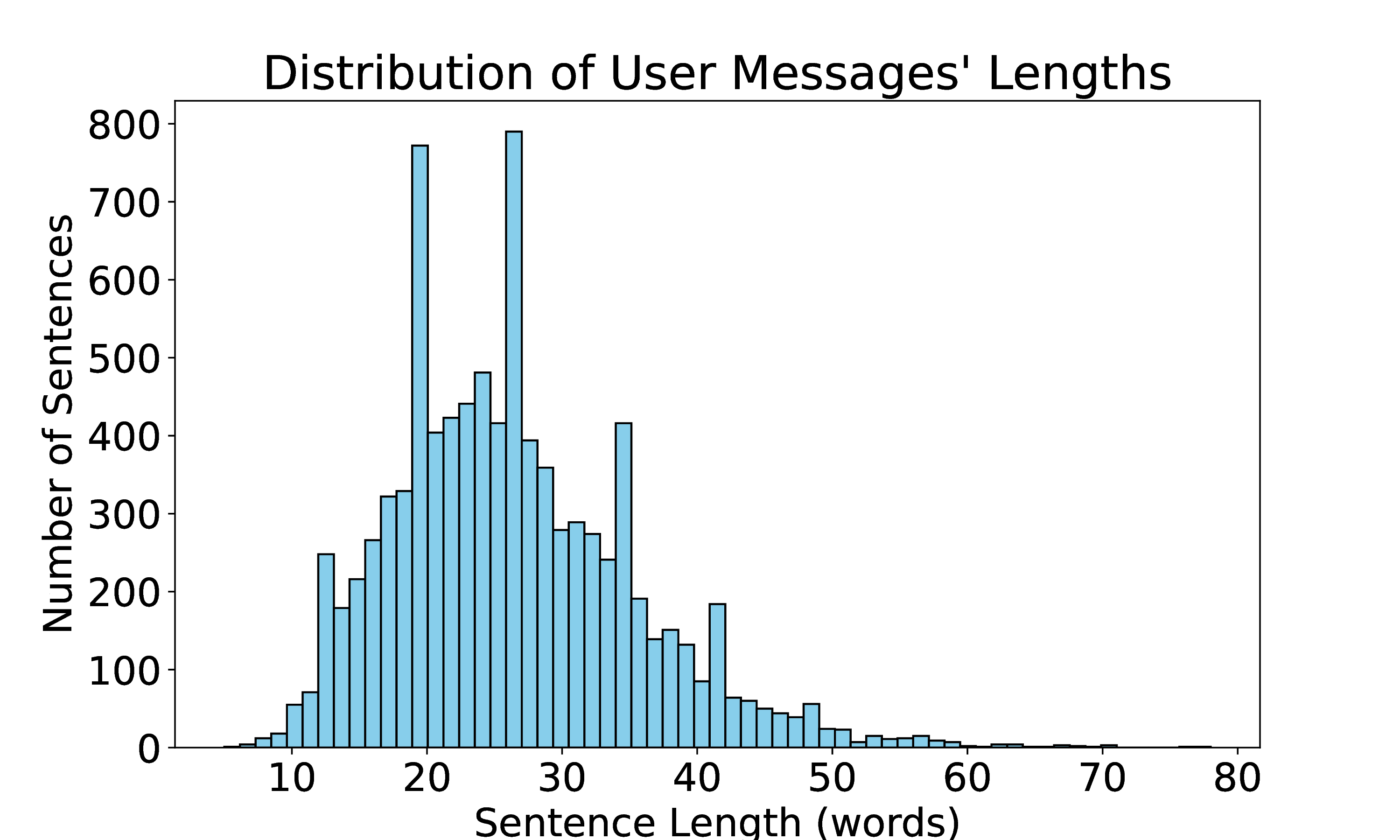

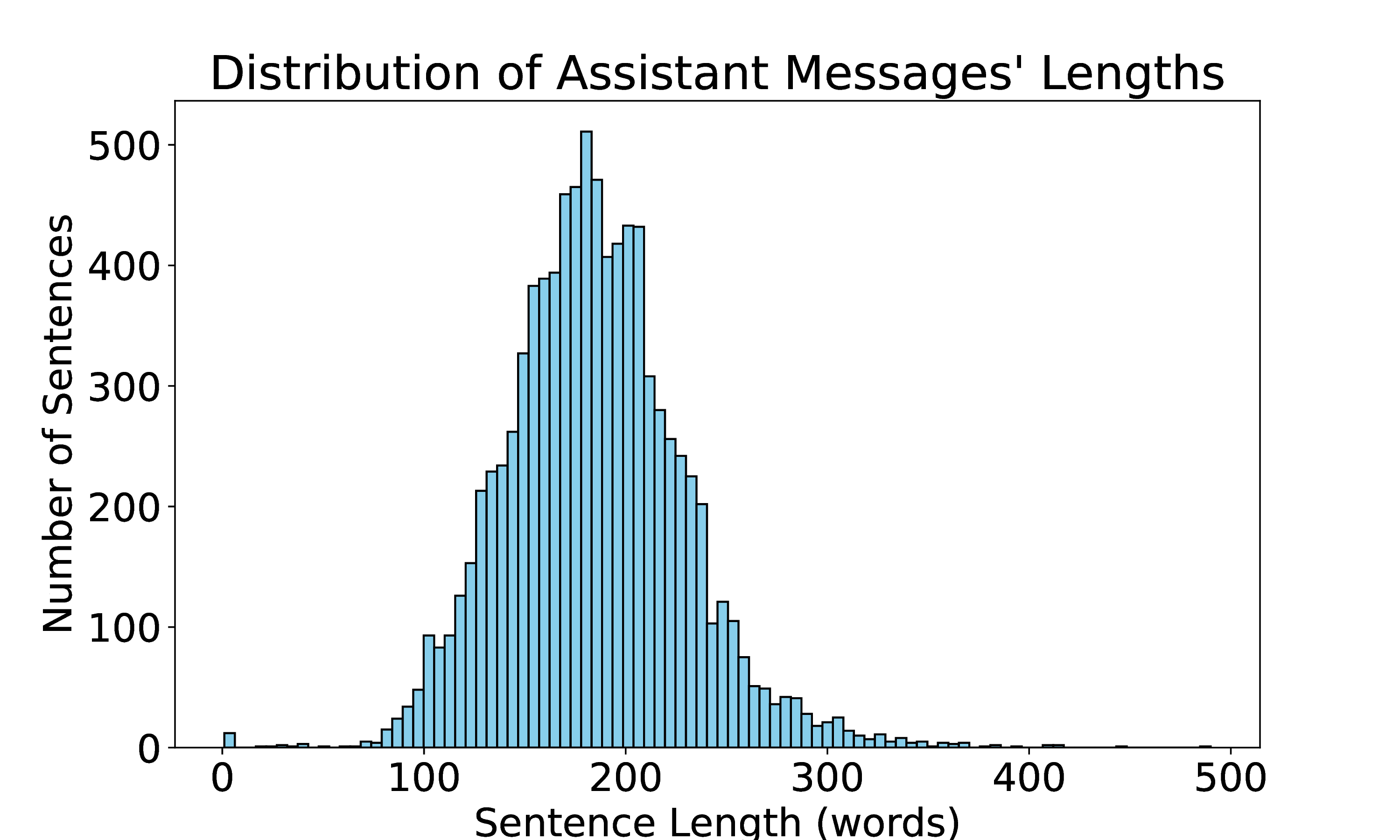

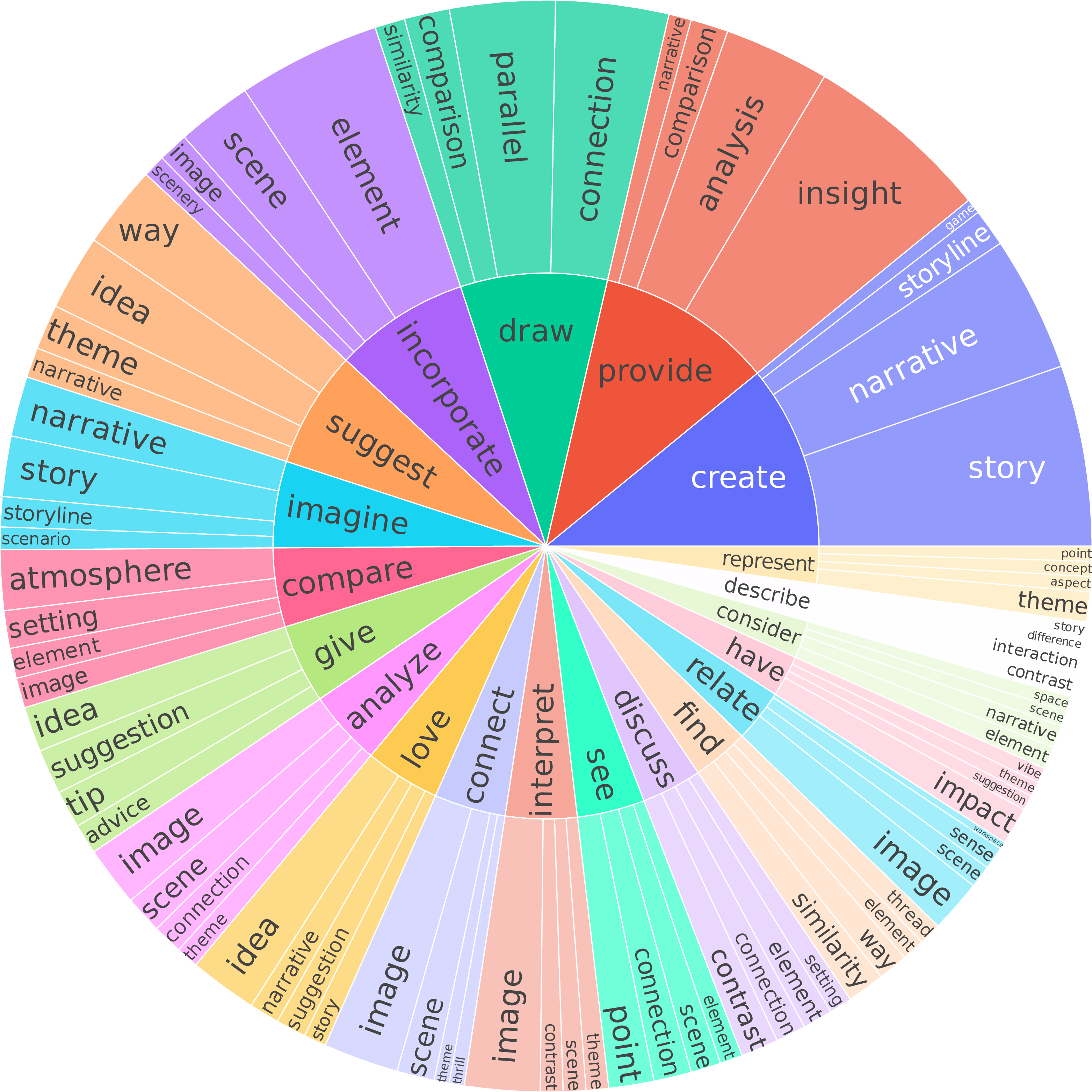

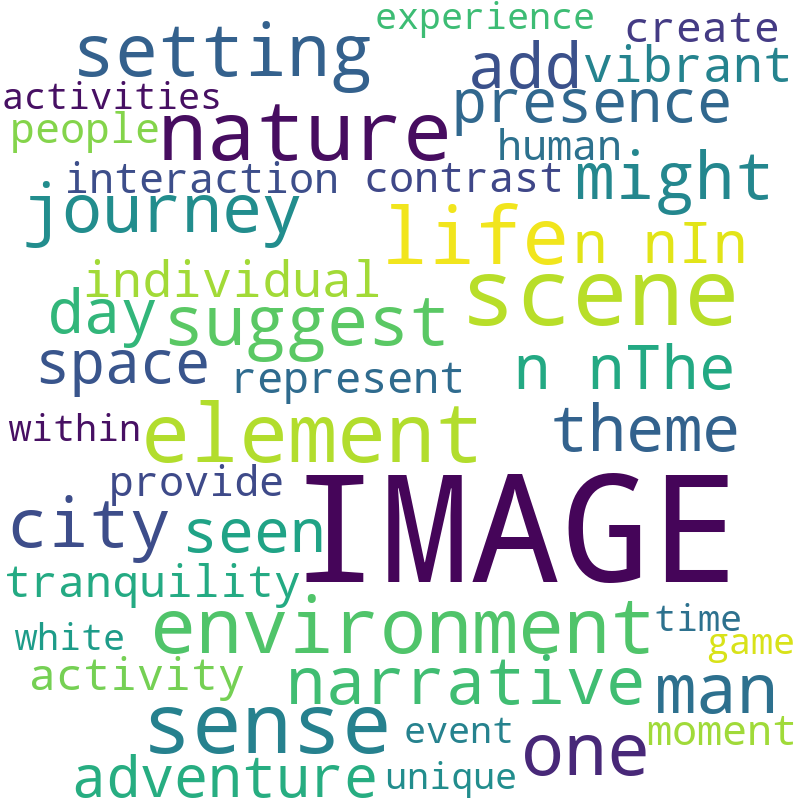

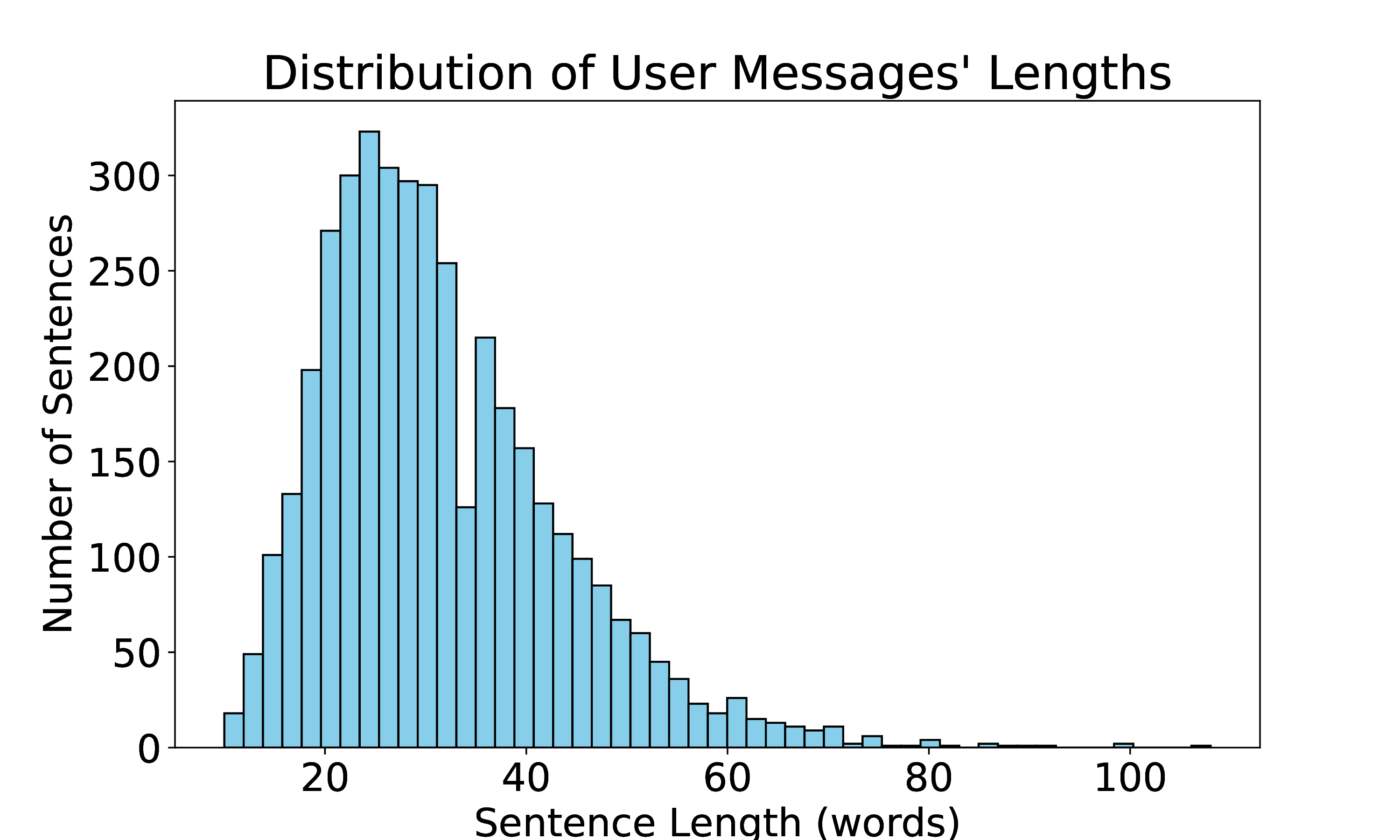

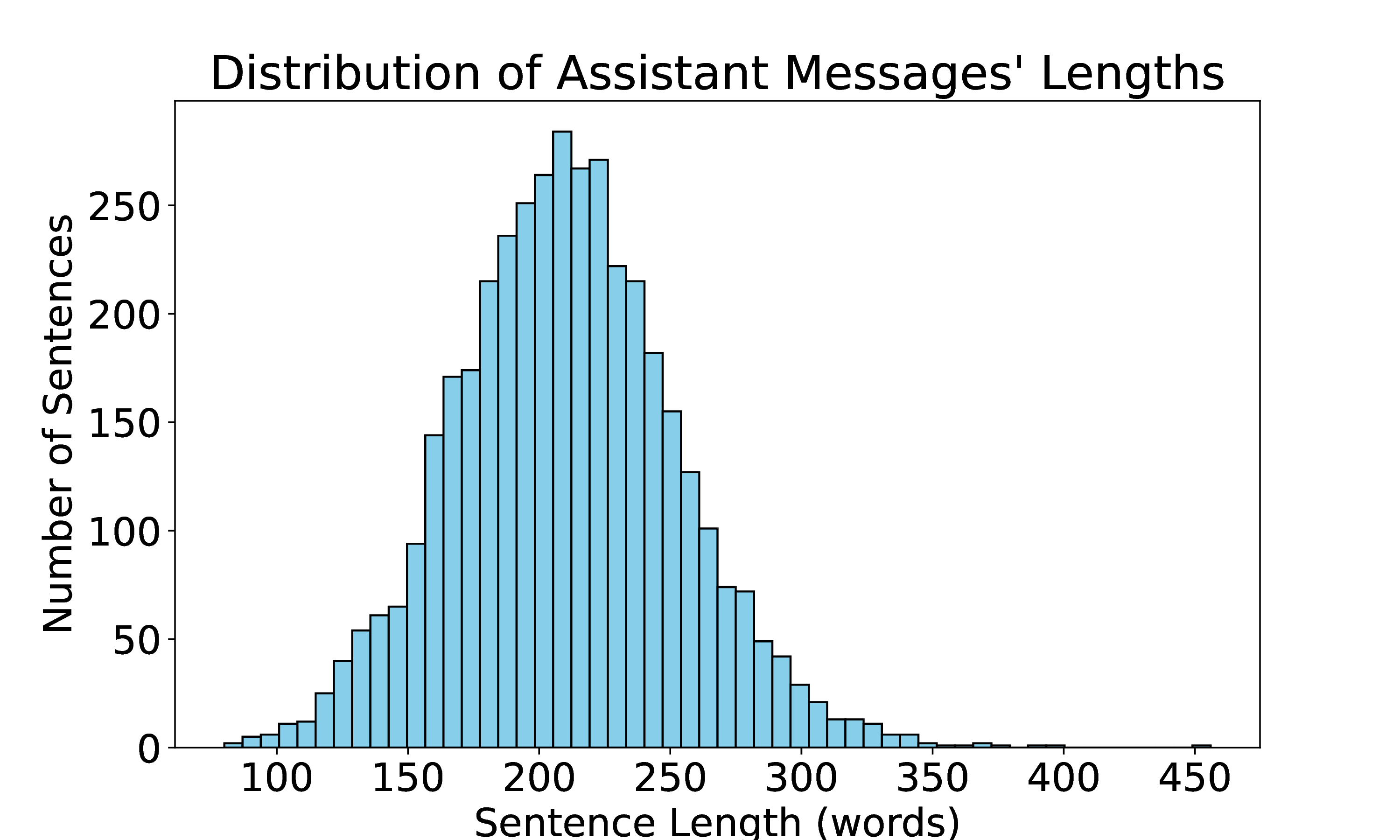

python dataset/data_statistics.py # --sparkles_root /path/to/Sparkles/

SparklesDialogueCC.

SparklesDialogueVG.

Prepare detailed image descriptions by downloading the caption sources specified in the table. Note that image sources are not required for data generation as the images are not sent to GPT-4.

| Dataset Name | Image Source | Caption Source | #Dialogue | #Unique/Total Image |
|---|---|---|---|---|
| SparklesDialogueCC | Conceptual Captions | MiniGPT-4 | 4,521 | 3,373/12,979 |
| SparklesDialogueVG | Visual Genome | SVIT | 2,000 | 7,000/7,000 |
| SparklesEval | Visual Genome | SVIT | 150 | 550/550 |
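The counts in the table imply how many images an average dialogue involves and how often images are reused. The short calculation below is a sketch that copies the numbers from the table and assumes "Unique/Total" means distinct images versus image references summed over all dialogues; the `summarize` helper is hypothetical.

```python
# (dialogues, unique images, total image references), copied from the table above.
DATASETS = {
    "SparklesDialogueCC": (4521, 3373, 12979),
    "SparklesDialogueVG": (2000, 7000, 7000),
    "SparklesEval": (150, 550, 550),
}


def summarize(dialogues: int, unique_images: int, total_images: int) -> dict:
    """Derive per-dialogue and per-image averages from the raw counts."""
    return {
        "images_per_dialogue": round(total_images / dialogues, 2),
        "uses_per_unique_image": round(total_images / unique_images, 2),
    }


for name, row in DATASETS.items():
    stats = summarize(*row)
    print(f"{name}: {stats['images_per_dialogue']} images per dialogue, "
          f"{stats['uses_per_unique_image']} uses per unique image")
```

Under that reading, only SparklesDialogueCC reuses images across dialogues, while the Visual Genome based sets use each image once.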

# The `case_num` should be larger than the final number of generated samples to allow for some failure cases.

python datasets/generate_SparklesDialogue.py --case_num 20 --vg_image_data_path /path/to/Sparkles/data/VisualGenome/image_data.json # --sparkles_root /path/to/Sparkles/

Download the evaluation datasets, including SparklesEval, BISON and NLVR2, to /path/to/Sparkles/evaluation and set the root directory in evaluate.sh and run.sh.

set_root="--sparkles_root /path/to/Sparkles/"

Set the path of the evaluated model's ckpt in eval_configs/sparkles_eval.yaml.

We have already done this for SparklesChat; change it if you want to evaluate other models.

ckpt: '/path/to/Sparkles/models/pretrained/sparkleschat_7b.pth'

Evaluate SparklesChat on SparklesEval with one GPU:

python evaluate.py --sparkles_root /path/to/Sparkles --cfg-path eval_configs/sparkles_eval.yaml --num-beams 1 --inference --gpu-id 0 --dataset SparklesEval --data-from 0 --data-to 150

python evaluate.py --sparkles_root /path/to/Sparkles --cfg-path eval_configs/sparkles_eval.yaml --num-beams 1 --merge-results --dataset SparklesEval

Evaluate SparklesChat on SparklesEval, BISON and NLVR2 with multiple GPUs:

bash ./evaluate.sh

# or

./evaluate.sh 2>&1 | tee "/path/to/Sparkles/evaluation/evaluate.log"

Or train and evaluate SparklesChat in one script:

bash ./run.sh

# or

./run.sh 2>&1 | tee "/path/to/Sparkles/evaluation/run.log"

The results in JSON and HTML formats will be automatically saved under /path/to/Sparkles/evaluation/{SparklesEval|BISON|NLVR2}/results/.

We provide the results of SparklesChat and MiniGPT-4 (7B) in each results folder.
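The provided BISON and NLVR2 result files carry the accuracy in their names (for example `..._acc0.567.json`), so a quick comparison is possible without opening them. The sketch below infers the naming pattern from the file names listed in this README; the regular expression and the helper `parse_result_name` are assumptions, not part of the evaluation scripts.

```python
import re
from typing import Optional, Tuple

# Pattern inferred from names such as
# "BISON_models_pretrained_sparkleschat_7b_acc0.567.json".
RESULT_NAME = re.compile(
    r"^(?P<benchmark>[A-Za-z0-9]+)_(?P<model>.+)_acc(?P<acc>\d+\.\d+)\.(?:json|html)$"
)


def parse_result_name(filename: str) -> Optional[Tuple[str, str, float]]:
    """Return (benchmark, model, accuracy), or None if no accuracy is encoded."""
    match = RESULT_NAME.match(filename)
    if match is None:
        return None
    return match["benchmark"], match["model"], float(match["acc"])


names = [
    "BISON_models_pretrained_sparkleschat_7b_acc0.567.json",
    "BISON_models_pretrained_minigpt4_7b_acc0.460.json",
    "NLVR2_models_pretrained_sparkleschat_7b_acc0.580.json",
    "NLVR2_models_pretrained_minigpt4_7b_acc0.513.json",
    "SparklesEval_models_pretrained_sparkleschat_7b.json",  # no accuracy suffix
]
for name in names:
    parsed = parse_result_name(name)
    if parsed is None:
        print(f"{name}: no accuracy encoded in the name")
    else:
        benchmark, model, acc = parsed
        print(f"{benchmark:6s} {model:36s} acc={acc:.3f}")
```

SparklesEval results are scored by a judge model rather than by accuracy, so their file names carry no such suffix and the helper returns `None` for them.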

All images used in the paper's examples are shared in Sparkles/assets/images.

If you like our paper or code, please cite us:

@article{huang2023sparkles,
  title={Sparkles: Unlocking Chats Across Multiple Images for Multimodal Instruction-Following Models},
  author={Huang, Yupan and Meng, Zaiqiao and Liu, Fangyu and Su, Yixuan and Collier, Nigel and Lu, Yutong},
  journal={arXiv preprint arXiv:2308.16463},
  year={2023}
}

Sparkles is primarily based on MiniGPT-4, which is built upon BLIP2 from the LAVIS library, and Vicuna, which derives from LLaMA. Additionally, our data is generated by OpenAI's GPT-4. We extend our sincerest gratitude to these projects for their invaluable contributions.

Our code is distributed under the BSD 3-Clause License. Our model is subject to LLaMA's model license. Our data is subject to OpenAI's Terms of Use.

Feel free to open issues or email Yupan for queries related to Sparkles. For issues potentially stemming from our dependencies, you may want to refer to the original repositories.