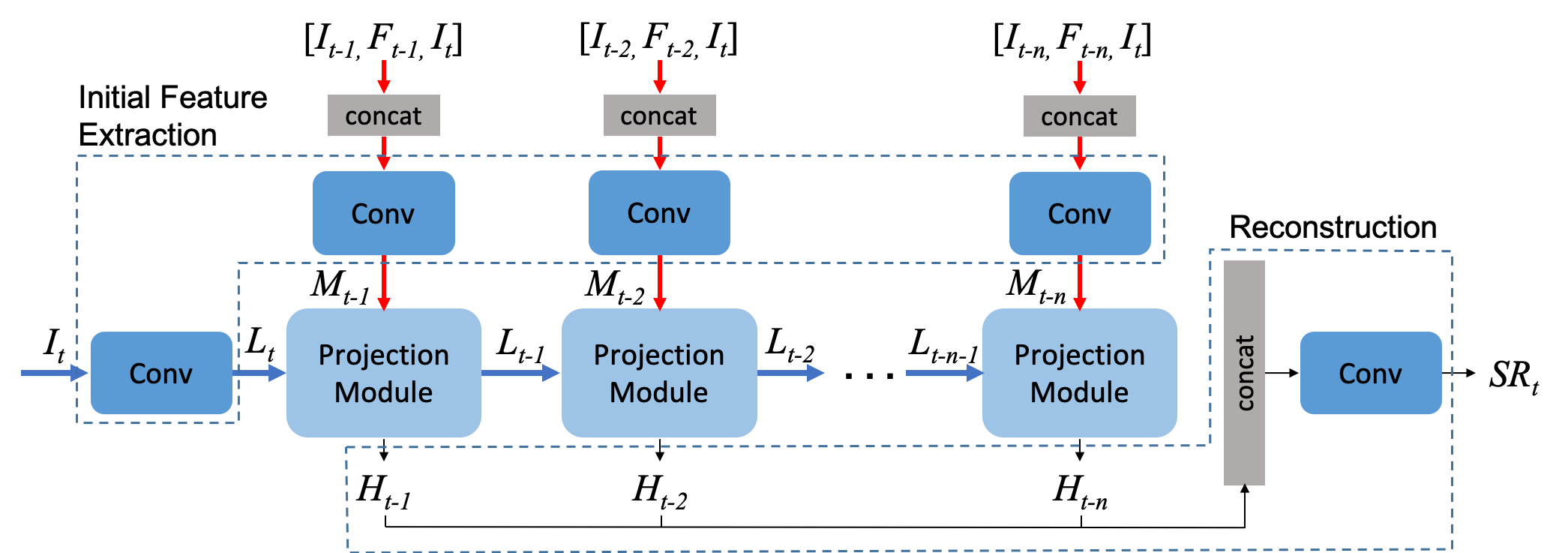

Recurrent Back-Projection Network for Video Super-Resolution (CVPR2019)

This repository is a fork of the original RBPN implementation, alterzero/RBPN-PyTorch. The code in this repository uses NVIDIA/nvvl, a library that loads video frames directly onto GPUs. Whereas the original RBPN implementation applies super-resolution (SR) to already-extracted video frames, this repository applies SR to raw video files, so low-resolution (LR) videos do not have to go through a preprocessing step (extracting frames from videos and saving them to disk).

To keep all computation on the GPU, we do not use pyflow, which runs entirely on the CPU, to extract optical flow between an input RGB frame and its neighboring frames. Instead, we use NVIDIA/FlowNet2.0, a PyTorch implementation of FlowNet 2.0: Evolution of Optical Flow Estimation with Deep Networks.

This implementation of RBPN spawns three processes in addition to the main process. The first process extracts optical flow using FlowNet2 and sends the optical flow, the neighboring RGB frames, and the input RGB frame to a queue. The second process runs RBPN on each item in that queue and sends the resulting SR frames to another queue. The last process receives the SR frames, encodes them into a video using OpenCV, and saves the video to disk.
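The three-stage layout described above can be sketched with Python's standard `multiprocessing` module. This is a minimal illustration, not the repository's code: the names `flow_stage`, `sr_stage`, `encode_stage`, `run_pipeline` and the `STOP` sentinel are hypothetical, frames are plain integers, and simple arithmetic stands in for the FlowNet2 forward pass, the RBPN forward pass, and the OpenCV video writer.

```python
import multiprocessing as mp

STOP = None  # sentinel telling the downstream stage that no more items will arrive


def flow_stage(frames, flow_q):
    """Stage 1: pair each frame with its previous neighbor and a stand-in 'flow'."""
    for i in range(1, len(frames)):
        target, neighbor = frames[i], frames[i - 1]
        flow = target - neighbor  # placeholder for a FlowNet2 forward pass (assumption)
        flow_q.put((target, neighbor, flow))
    flow_q.put(STOP)


def sr_stage(flow_q, sr_q, scale=4):
    """Stage 2: consume stage-1 items and emit stand-in 'SR frames'."""
    while True:
        item = flow_q.get()
        if item is STOP:
            break
        target, _neighbor, _flow = item
        sr_q.put(target * scale)  # placeholder for an RBPN forward pass (assumption)
    sr_q.put(STOP)


def encode_stage(sr_q, out_q):
    """Stage 3: collect SR frames; a real version would write them to a video file."""
    written = []
    while True:
        frame = sr_q.get()
        if frame is STOP:
            break
        written.append(frame)
    out_q.put(written)


def run_pipeline(frames):
    """Launch the three stages as separate processes and return stage 3's result."""
    flow_q, sr_q, out_q = mp.Queue(), mp.Queue(), mp.Queue()
    workers = [
        mp.Process(target=flow_stage, args=(frames, flow_q)),
        mp.Process(target=sr_stage, args=(flow_q, sr_q)),
        mp.Process(target=encode_stage, args=(sr_q, out_q)),
    ]
    for w in workers:
        w.start()
    result = out_q.get()  # read the result before join() so stage 3 can exit cleanly
    for w in workers:
        w.join()
    return result
```

Call `run_pipeline` from under an `if __name__ == "__main__":` guard so that child processes can be started safely on every platform. With the stand-in arithmetic, `run_pipeline([10, 11, 13])` returns `[44, 52]`. The sentinel passed from stage to stage is one simple way to shut the pipeline down in order; the actual implementation may signal completion differently.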

Dependencies

- Python >= 3.5

- PyTorch >= 1.0.0

pip install torch==1.0.1 -f https://download.pytorch.org/whl/cu100/stable

- NVVL1.1 -> https://github.com/Haabibi/nvvl

# get forked version of NVVL1.1 source

cd nvvl/pytorch1.0/

python setup.py install

- FlowNet2.0 -> code adapted from https://github.com/NVIDIA/flownet2-pytorch

cd flownet2-pytorch

bash install.sh

To create an optimal environment for running RnB with RBPN, follow the step-by-step process below, which reproduces the environment I tested on. (Partially adapted from the RnB repository)

git clone https://github.com/snuspl/rnb

cd rnb

# delete lines regarding pytorch-0.4.1/ torchvision-0.2.1 in 'spec-file.txt'

conda create -n <env_name> --file spec-file.txt

source activate <env_name>

cd ~

export PKG_CONFIG_PATH=/home/<username>/miniconda2/envs/<env_name>/lib/pkgconfig:$PKG_CONFIG_PATH # change accordingly, should include the file libavformat.pc

conda install pytorch torchvision cudatoolkit=10.0 -c pytorch # this installs cudatoolkit-10.0.130 / pytorch-1.1.0 / torchvision-0.3.0

pip install imageio

pip install scipy

git clone https://github.com/Haabibi/nvvl

cd nvvl/pytorch1.0

python setup.py install

cd ~/ #any directory that does not have a subdirectory called 'nvvl'

python -c 'from nvvl import RnBLoader'

cd ~/RBPN-PyTorch/flownet2

bash install.sh

Pretrained Model for RBPN

https://drive.google.com/drive/folders/1sI41DH5TUNBKkxRJ-_w5rUf90rN97UFn?usp=sharing

Pretrained Model for FlowNet2.0

https://drive.google.com/file/d/1hF8vS6YeHkx3j2pfCeQqqZGwA_PJq_Da/view?usp=sharing (more models can be found under flownet2 directory) (place the model under ./flownet2/ckpt)

HOW TO

Training

python main.py

Testing

python nvvl_eval.py

Dataset

- Vid4 Video: The Vid4 dataset that the original RBPN uses contains extracted frames in sequence. Since this repository takes a video as input, the video was encoded from the original frames using ffmpeg. To use a dataset other than Vid4, follow the instructions below to encode frames into videos.

Encoding extracted frames to h264 codec format video

NVVL1.1 only reads h264-encoded video, so you need an h264 video ready before you can turn LR video into SR video. Adapted from hamelot.io.

ffmpeg -r 25 -s 768x512 -i %03d.png -vcodec libx264 -crf 25 test.mp4

- -r is the framerate (fps)

- -crf is the quality, lower means better quality, 15-25 is usually good

- -s is the resolution

the file will be output to:

test.mp4
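If you need to encode many frame directories, the same ffmpeg invocation can be driven from Python. The sketch below is only a thin wrapper around the command shown above: the helper names `build_encode_cmd` and `encode_frames` are hypothetical, the defaults mirror the example values (25 fps, 768x512, crf 25), and it assumes `ffmpeg` is available on your `PATH`.

```python
import subprocess


def build_encode_cmd(frame_pattern, output_path, fps=25, size="768x512", crf=25):
    """Return the ffmpeg argument list for encoding numbered frames to h264."""
    return [
        "ffmpeg",
        "-r", str(fps),        # frame rate (fps)
        "-s", size,            # resolution, WIDTHxHEIGHT
        "-i", frame_pattern,   # e.g. "%03d.png" for 001.png, 002.png, ...
        "-vcodec", "libx264",  # h264 encoder
        "-crf", str(crf),      # quality; lower means better quality
        output_path,
    ]


def encode_frames(frame_pattern, output_path, **options):
    """Run ffmpeg; raises CalledProcessError if ffmpeg exits with an error."""
    cmd = build_encode_cmd(frame_pattern, output_path, **options)
    subprocess.run(cmd, check=True)
    return output_path
```

For example, `encode_frames("%03d.png", "test.mp4")` runs the same command as above, and `encode_frames("%03d.png", "test.mp4", crf=18)` trades a larger file for higher quality. Passing the arguments as a list avoids shell quoting problems with the `%03d` pattern.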

Converting any video file codec format to h264

ffmpeg -i INPUT.avi -vcodec libx264 -crf 25 OUTPUT.mp4

The paper on Image Super-Resolution

Deep Back-Projection Networks for Super-Resolution (CVPR2018)

Winner (1st) of NTIRE2018 Competition (Track: x8 Bicubic Downsampling)

Winner of PIRM2018 (1st on Region 2, 3rd on Region 1, and 5th on Region 3)

Project page: https://alterzero.github.io/projects/DBPN.html

Citations

If you find the original work of Super Resolution useful, please consider citing it.

@inproceedings{RBPN2019,

title={Recurrent Back-Projection Network for Video Super-Resolution},

author={Haris, Muhammad and Shakhnarovich, Greg and Ukita, Norimichi},

booktitle={IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},

year={2019}

}