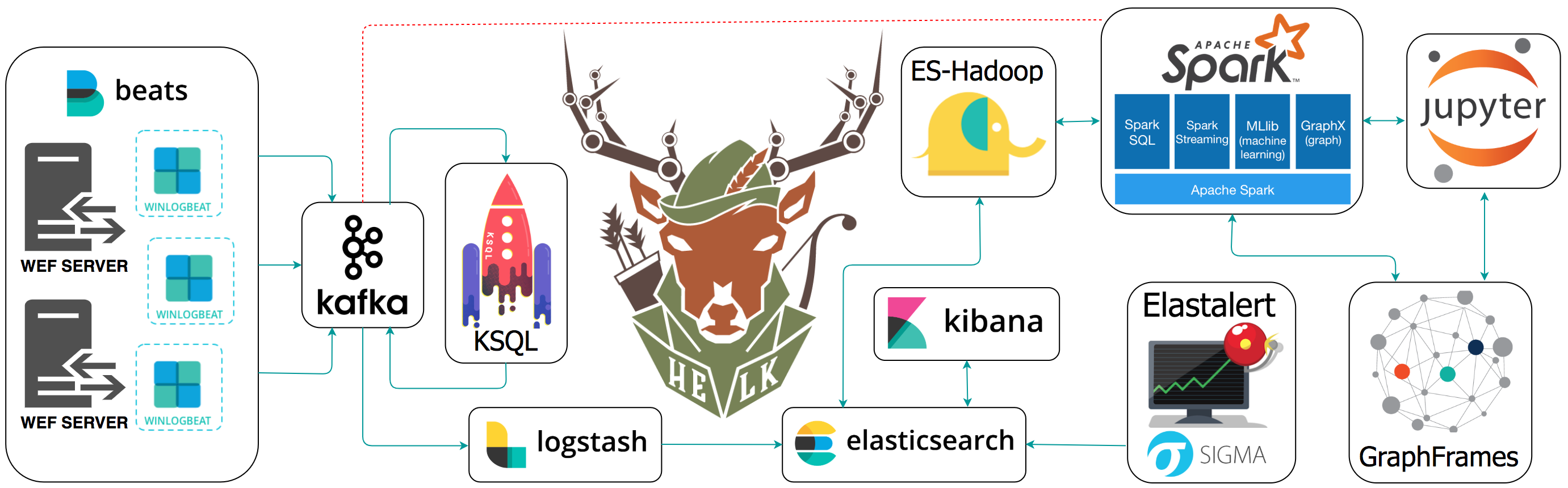

The Hunting ELK, or simply the HELK, is one of the first open source hunt platforms with advanced analytics capabilities such as a declarative SQL language, graphing, structured streaming, and even machine learning via Jupyter notebooks and Apache Spark over an ELK stack. This project was developed primarily for research, but thanks to its flexible design and core components, it can be deployed in larger environments with the right configurations and scalable infrastructure.

- Provide an open source hunting platform to the community and share the basics of Threat Hunting.

- Expedite the time it takes to deploy a hunt platform.

- Make the testing and development of hunting use cases easier and more affordable.

- Enable Data Science capabilities while analyzing data via Apache Spark, GraphFrames & Jupyter Notebooks.

The project is currently in an alpha stage, which means that the code and the functionality are still changing. We haven't yet tested the system with large data sources or across many scenarios. We invite you to try it and welcome any feedback.

- Welcome to HELK! : Enabling Advanced Analytics Capabilities

- Spark

- Spark Standalone Mode

- Setting up a Pentesting.. I mean, a Threat Hunting Lab - Part 5

- An Integrated API for Mixing Graph and Relational Queries

- Graph queries in Spark SQL

- Graphframes Overview

- Elastic Products

- Elastic Subscriptions

- Elasticsearch Guide

- spujadas elk-docker

- deviantony docker-elk

- Roberto Rodriguez @Cyb3rWard0g @THE_HELK

- Nate Guagenti @neu5ron

There are a few things that I would like to accomplish with the HELK, as shown in the To-Do list below. I would love to make the HELK a stable build for everyone in the community. If you are interested in making this build more robust and adding some cool features to it, PLEASE feel free to submit a pull request. #SharingIsCaring

HELK's GNU General Public License

- Kubernetes Cluster Migration

- OSQuery Data Ingestion

- MITRE ATT&CK mapping to logs or dashboards

- Cypher for Apache Spark Integration (Adding option for Zeppelin Notebook)

- Test and integrate Neo4j Spark connectors with build

- Add more network data sources (e.g., Bro)

- Research & integrate Spark structured direct streaming

- Packer Images

- Terraform integration (AWS, Azure, GC)

- Add more Jupyter Notebooks to teach the basics

- Auditd beat integration

More coming soon...