YOLOV is a high-performance video object detector. Please refer to our paper on arXiv for more details.

This repo is a PyTorch implementation of YOLOV, built on YOLOX.

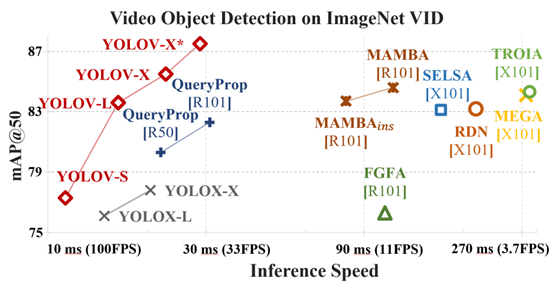

| Model | Size | mAP@50 (val) | Speed on 2080Ti, batch size 1 (ms) | Weights |
|---|---|---|---|---|
| YOLOX-s | 576 | 69.5 | 9.4 | |
| YOLOX-l | 576 | 76.1 | 14.8 | |
| YOLOX-x | 576 | 77.8 | 20.4 | |
| YOLOV-s | 576 | 77.3 | 11.3 | |
| YOLOV-l | 576 | 83.6 | 16.4 | |
| YOLOV-x | 576 | 85.5 | 22.7 | |
| YOLOV-x + post | 576 | 87.5 | - | - |
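To read the table as a trade-off, the accuracy gain and added latency of each YOLOV model over its YOLOX base can be computed directly from the numbers above. The snippet below is only an illustrative sketch: the `results` dictionary and the `compare` helper are names made up for this example and are not part of the repo.

```python
# (mAP@50 on val, latency in ms at batch size 1) copied from the table above.
results = {
    "YOLOX-s": (69.5, 9.4),
    "YOLOX-l": (76.1, 14.8),
    "YOLOX-x": (77.8, 20.4),
    "YOLOV-s": (77.3, 11.3),
    "YOLOV-l": (83.6, 16.4),
    "YOLOV-x": (85.5, 22.7),
}


def compare(size):
    """Return (mAP gain, extra latency in ms) of YOLOV-<size> over YOLOX-<size>."""
    base_map, base_ms = results[f"YOLOX-{size}"]
    new_map, new_ms = results[f"YOLOV-{size}"]
    # Round to one decimal to hide floating-point noise in the differences.
    return round(new_map - base_map, 1), round(new_ms - base_ms, 1)


for size in ("s", "l", "x"):
    gain, extra_ms = compare(size)
    print(f"YOLOV-{size}: +{gain} mAP@50 for +{extra_ms} ms")
```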

Add YOLOv7 bases

Installation

Install YOLOV from source.

git clone git@github.com:YuHengsss/YOLOV.git

cd YOLOV

Create conda env.

conda create -n yolov python=3.7

conda activate yolov

pip install -r requirements.txt

pip install yolox==0.3

pip3 install -v -e .

Demo

Step1. Download pretrained weights.

Step2. Run demos. For example:

python tools/vid_demo.py -f [path to your exp files] -c [path to your weights] --path /path/to/your/video --conf 0.25 --nms 0.5 --tsize 576 --save_result

For online mode, you can run:

python tools/yolov_demo_online.py -f ./exp/yolov/yolov_l_online.py -c [path to your weights] --path /path/to/your/video --conf 0.25 --nms 0.5 --tsize 576 --save_result

Reproduce our results on VID

Step1. Download datasets and weights:

Download the ILSVRC2015 DET and ILSVRC2015 VID datasets from ImageNet and organise them as follows:

path to your datasets/ILSVRC2015/

path to your datasets/ILSVRC/

Download our COCO-style annotations for training and video sequences. Then put them at these two locations:

YOLOV/annotations/vid_train_coco.json

YOLOV/yolox/data/dataset/train_seq.npy

Change the data_dir in exp files to [path to your datasets] and download our weights.
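Before moving on, the layout described in this step can be sanity-checked with a few lines of Python. This is a minimal sketch, not a script shipped with the repo: `missing_paths` is a hypothetical helper, and it assumes the dataset folders and annotation files are named exactly as listed above.

```python
from pathlib import Path


def missing_paths(dataset_root, repo_root="."):
    """Return the expected dataset/annotation paths that do not exist yet.

    dataset_root: the folder referred to above as "path to your datasets".
    repo_root:    the cloned YOLOV directory (assumed to be the current dir).
    """
    dataset_root = Path(dataset_root)
    repo_root = Path(repo_root)
    expected = [
        dataset_root / "ILSVRC2015",
        dataset_root / "ILSVRC",
        repo_root / "annotations" / "vid_train_coco.json",
        repo_root / "yolox" / "data" / "dataset" / "train_seq.npy",
    ]
    return [p for p in expected if not p.exists()]
```

For example, running `print(missing_paths("/path/to/your/datasets"))` from inside the YOLOV directory lists whatever still needs to be downloaded or moved; an empty list means the four locations are in place.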

Step2. Generate predictions and convert them to IMDB style for evaluation.

python tools/val_to_imdb.py -f exps/yolov/yolov_x.py -c path to your weights/yolov_x.pth --fp16 --output_dir ./yolov_x.pickle

Evaluation process:

python tools/REPPM.py --repp_cfg ./tools/yolo_repp_cfg.json --predictions_file ./yolov_x.pckl --evaluate --annotations_filename ./annotations/annotations_val_ILSVRC.txt --path_dataset [path to your dataset] --store_imdb --store_coco (--post)

(--post) is optional and enables the post-processing method. Then you will get:

{'mAP_total': 0.8758871720817065, 'mAP_slow': 0.9059275666099181, 'mAP_medium': 0.8691557352372217, 'mAP_fast': 0.7459511040452989}

Training example

python tools/vid_train.py -f exps/yolov/yolov_s.py -c weights/yoloxs_vid.pth --fp16

Rough testing

python tools/vid_eval.py -f exps/yolov/yolov_s.py -c weights/yolov_s.pth --tnum 500 --fp16

tnum indicates the number of testing sequences.

If YOLOV is helpful for your research, please cite the following paper: