This is an implementation of the paper:

Evaluating Adversarial Attacks on Driving Safety in Vision-Based Autonomous Vehicles (IEEE Xplore/arXiv)

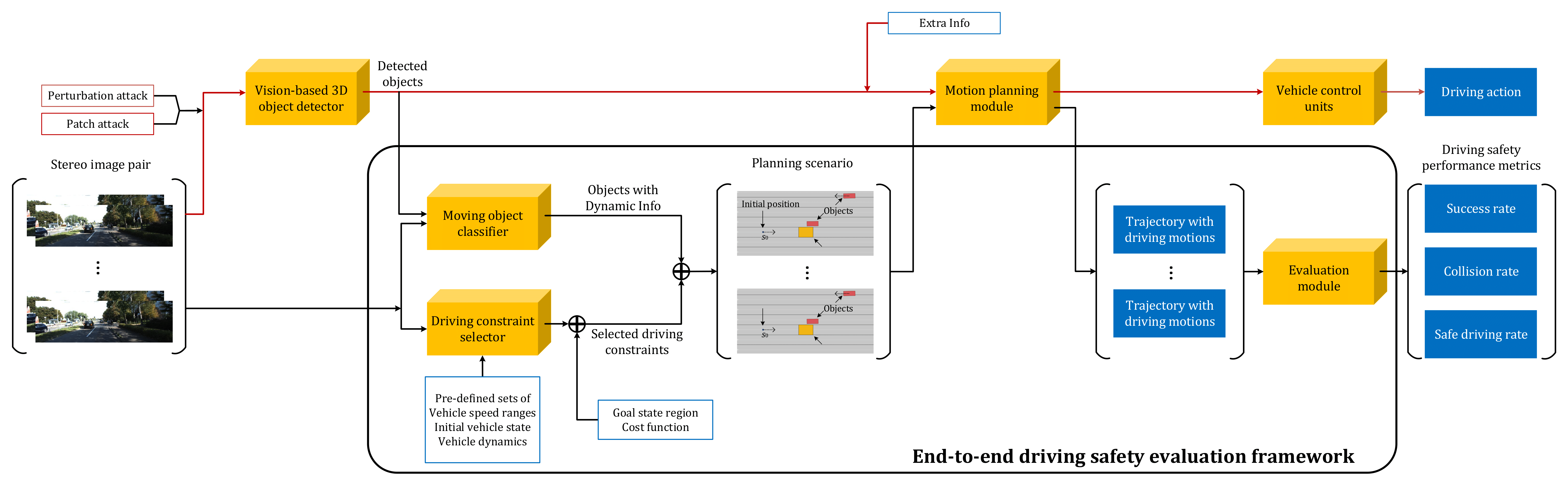

Although driving safety is the ultimate concern for autonomous driving, there is no comprehensive study of the link between the performance of deep learning models and the driving safety of autonomous vehicles under adversarial attacks. In this project, we investigate the impact of two primary types of adversarial attacks, namely perturbation attacks and patch attacks, on the driving safety of vision-based autonomous vehicles, rather than only on the detection precision of deep learning models. In particular, we focus on the most important task for vision-based autonomous driving, vision-based 3D object detection, and target two leading models in this domain, Stereo R-CNN and DSGN. To evaluate driving safety, we propose an end-to-end evaluation framework with a set of driving safety performance metrics.
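For readers new to the two attack types named above, the following is a minimal NumPy sketch of how they differ in the way they modify an input image. It is not the attack code in this repo: the function names, the `epsilon` budget, the random `direction` array (a stand-in for a loss gradient), and the patch placement are all assumptions chosen for illustration.

```python
import numpy as np


def apply_perturbation(image, direction, epsilon=8 / 255):
    """Shift every pixel by at most `epsilon` (an L-infinity-bounded change).

    `direction` is a hypothetical stand-in for a loss gradient; only its
    sign is used.
    """
    adversarial = image + epsilon * np.sign(direction)
    # Keep pixel values in the valid [0, 1] range.
    return np.clip(adversarial, 0.0, 1.0)


def apply_patch(image, patch, top, left):
    """Overwrite one rectangular region with `patch`; other pixels stay unchanged."""
    adversarial = image.copy()
    height, width = patch.shape[:2]
    adversarial[top:top + height, left:left + width] = patch
    return adversarial


rng = np.random.default_rng(0)
image = rng.random((64, 64, 3))                # placeholder image in [0, 1)
direction = rng.standard_normal(image.shape)   # placeholder for a gradient
patch = rng.random((16, 16, 3))                # placeholder patch content

perturbed = apply_perturbation(image, direction)
patched = apply_patch(image, patch, top=10, left=20)
```

In this sketch a perturbation attack makes a small, bounded change to the whole image, while a patch attack makes an unbounded change to a small region. For the attacks actually evaluated here, refer to the READMEs under `attack/`.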

- DSGN: please follow attack/DSGN/README.md to perform adversarial attacks.

- Stereo R-CNN: please refer to attack/Stereo-RCNN/README.md to launch adversarial attacks.

- Please follow evaluation/README.md to launch the driving safety evaluation framework.

- Adapt the evaluation framework to the latest version of CommonRoad-Search (coming soon)

If you find our work useful in your research, please consider citing:

@article{zhang2021evaluating,
  title={Evaluating Adversarial Attacks on Driving Safety in Vision-Based Autonomous Vehicles},
  author={Zhang, Jindi and Lou, Yang and Wang, Jianping and Wu, Kui and Lu, Kejie and Jia, Xiaohua},
  journal={arXiv preprint arXiv:2108.02940},
  year={2021}
}

- We thank the following repositories, whose code is used in this repo:

- DSGN implementation is taken from Jia-Research-Lab.

- Stereo R-CNN implementation is taken from HKUST-Aerial-Robotics Stereo-RCNN.

- We use CommonRoad-Search at commit 0d434310 rather than the latest version.

If you have any questions or suggestions about this repo, please feel free to contact me (kyrie.louy@gmail.com).