The NLPContributionGraph (NCG) challenge aims to structure scholarly NLP contributions in the Open Research Knowledge Graph (ORKG). It is posited as a solution to the problem of keeping track of research progress. The dataset for this task is defined in a specific structure so that it can be integrated into KG infrastructures such as the ORKG. It consists of the following information:

- Contribution sentences: sentences from papers that describe their contributions.
- Scientific terms and relations: a set of scientific terms or phrases from the contribution sentences.
- Triples: subject-predicate-object statements for KG construction. The triples are organized under three mandatory information units (IUs) (Research Problem, Approach, Model) and, optionally, further IUs (Code, Dataset, Experimental Setup, Hyperparameters, Baselines, Results, Tasks, Experiments, and Ablation Analysis).

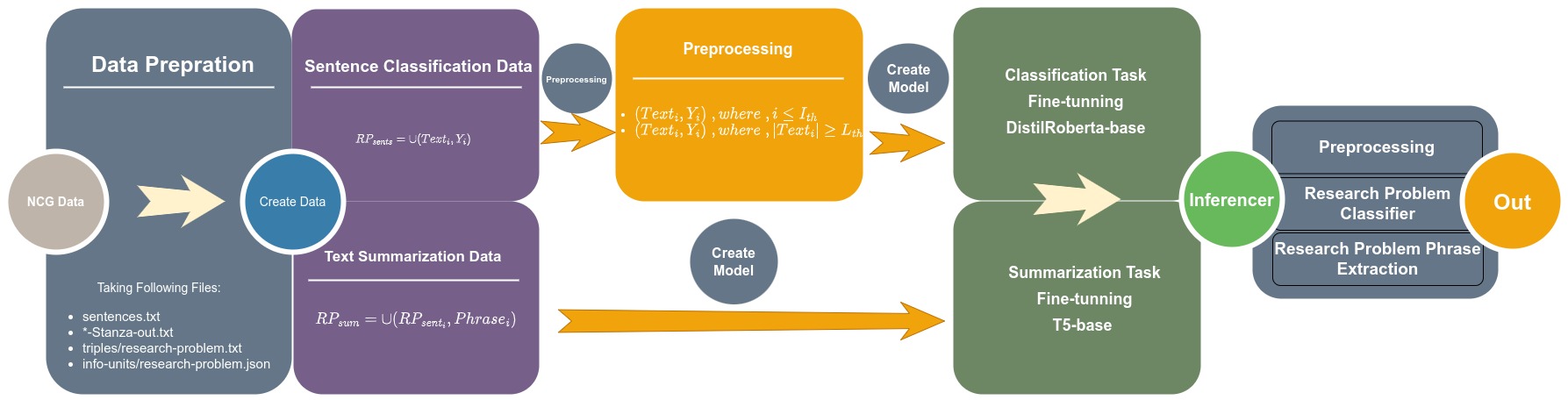
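To make the triple structure concrete, here is a minimal Python sketch that parses triples and groups them by IU. It assumes each triple is stored as one `(subject||predicate||object)` line and that the lines have already been read per IU; the `Triple` class and the helper names are hypothetical, not part of the dataset tooling.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Triple:
    """One subject-predicate-object statement."""
    subject: str
    predicate: str
    obj: str


def parse_triple(line: str) -> Triple:
    """Parse a line in the assumed '(subject||predicate||object)' format."""
    body = line.strip()
    if body.startswith("(") and body.endswith(")"):
        body = body[1:-1]  # drop the surrounding parentheses
    parts = [part.strip() for part in body.split("||")]
    if len(parts) != 3:
        raise ValueError(f"not a triple: {line!r}")
    return Triple(*parts)


def group_by_iu(lines_by_iu: Dict[str, List[str]]) -> Dict[str, List[Triple]]:
    """Map each information-unit name to its parsed triples, skipping blank lines."""
    return {
        iu: [parse_triple(line) for line in lines if line.strip()]
        for iu, lines in lines_by_iu.items()
    }
```

For example, `group_by_iu({"research-problem": ["(Contribution||has research problem||machine translation)"]})` yields one `Triple` under the `research-problem` key.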

In this work, we focus on Research Problem (RP) extraction. This requires first finding the contribution sentences that contain RPs and then extracting the RPs from them. To do this, we designed a classifier that identifies contribution sentences containing RPs, and we feed the identified sentences into a text summarizer that extracts the RP phrases.

The proposed method is two-fold: (1) an RP classifier that identifies RP sentences, and (2) a text summarizer that extracts RP phrases. Since we are only interested in extracting RPs, the rest of the data, such as phrases and triples related to other IUs, is not relevant to this task. To keep only the metadata needed for this task, the original dataset, provided as NCG task training-data and NCG task test-data, was converted into the following format. The transformed data are stored in the dataset/preprocessed directory.

```
[task-name-folder]/
├── [article-counter-folder]/
│   ├── [articlename]-Stanza-out.txt
│   ├── sentences.txt
│   ├── info-units/
│   │   └── research-problem.json
│   ├── triples/
│   │   └── research-problem.txt
│   └── ...
└── ...
```
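A minimal sketch of reading one task folder in the layout above. The helper names are hypothetical, and two details are assumptions: that `sentences.txt` holds 1-based line numbers into the `-Stanza-out.txt` file, and that `research-problem.json` can be loaded as plain JSON of unspecified shape.

```python
import json
from pathlib import Path
from typing import Iterator, List, Optional, Tuple

# (Stanza lines, contribution line numbers, parsed research-problem.json or None)
Article = Tuple[List[str], List[int], Optional[object]]


def load_article(article_dir: Path) -> Article:
    """Read one [article-counter-folder] (hypothetical helper)."""
    stanza_files = sorted(article_dir.glob("*-Stanza-out.txt"))
    if not stanza_files:
        raise FileNotFoundError(f"no *-Stanza-out.txt in {article_dir}")
    lines = stanza_files[0].read_text(encoding="utf-8").splitlines()
    # Assumption: sentences.txt lists 1-based line numbers into the Stanza file.
    sent_file = article_dir / "sentences.txt"
    contribution_ids = [int(tok) for tok in sent_file.read_text(encoding="utf-8").split()]
    rp_file = article_dir / "info-units" / "research-problem.json"
    rp_data = json.loads(rp_file.read_text(encoding="utf-8")) if rp_file.exists() else None
    return lines, contribution_ids, rp_data


def _folder_key(path: Path) -> Tuple[int, int, str]:
    # Numeric folder names sort numerically (2 before 10); others sort after, by name.
    return (0, int(path.name), "") if path.name.isdigit() else (1, 0, path.name)


def iter_articles(task_dir: str) -> Iterator[Tuple[str, Article]]:
    """Yield (folder name, article data) for every article folder of a task."""
    folders = [p for p in Path(task_dir).iterdir() if p.is_dir()]
    for article_dir in sorted(folders, key=_folder_key):
        yield article_dir.name, load_article(article_dir)
```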

To train the models, we first list all sentences of the train and test sets for the RP classifier. We then use the info-units/research-problem.json files to build the summarization data, where the RP phrases are the summaries and the RP sentences are the input texts. These datasets are stored in the dataset/preprocessed/experiment-data directory. The statistics of the RP classification and RP summarization datasets are as follows:
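The construction of both datasets can be sketched as follows. This is an illustration, not the code in `build_dataset.py`: it assumes the RP sentences are given as 1-based line numbers with one RP phrase aligned to each, and it labels RP sentences 1 and all other sentences 0 (an assumption about the label convention).

```python
from typing import List, Sequence, Tuple


def build_samples(
    lines: Sequence[str],
    rp_line_ids: Sequence[int],
    rp_phrases: Sequence[str],
) -> Tuple[List[Tuple[str, int]], List[Tuple[str, str]]]:
    """Return (classification samples, summarization pairs) for one article.

    Assumptions: rp_line_ids are 1-based line numbers of RP sentences and
    rp_phrases[k] is the RP phrase annotated for rp_line_ids[k].
    """
    if len(rp_line_ids) != len(rp_phrases):
        raise ValueError("each RP sentence needs exactly one RP phrase")
    rp_set = set(rp_line_ids)
    # Classification: every sentence, labelled 1 if it is an RP sentence, else 0.
    classification = [
        (text, 1 if idx in rp_set else 0) for idx, text in enumerate(lines, start=1)
    ]
    # Summarization: RP sentence as input text, RP phrase as target summary.
    summarization = [
        (lines[idx - 1], phrase) for idx, phrase in zip(rp_line_ids, rp_phrases)
    ]
    return classification, summarization
```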

| Task | Training Class-0 | Training Class-1 | Training All-data | Test Class-0 | Test Class-1 | Test All-data |
|---|---|---|---|---|---|---|
| RP Classification - Raw Data | 607 | 54831 | 55438 | 316 | 33639 | 33955 |
| RP Classification - Preprocessed Data | 607 | 9816 | 10423 | 314 | 6501 | 6815 |
| RP Summarization | - | - | 602 | - | - | 314 |

Table 1: Statistics of the dataset

Note: the build_dataset.py script performs the entire dataset preparation, including the proposed preprocessing method.

Figure 1 presents the proposed system architecture. After data preparation, each task (RP classification and RP summarization) builds its own data. For the RP classification task, we applied preprocessing that removes unwanted samples (statistics in Table 1) before fine-tuning a transformer model for RP sentence identification. For RP summarization, we fine-tuned T5 to summarize RP sentences into RP phrases.

Figure 1: Proposed System Architecture
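The two-stage architecture can be sketched as a small function that chains the classifier and the summarizer. Both stages are passed in as plain callables, so the sketch stays independent of any model library; the function name and the removal of duplicate phrases are assumptions, not necessarily what `runner.py` does.

```python
from typing import Callable, List, Sequence


def extract_research_problems(
    sentences: Sequence[str],
    is_rp_sentence: Callable[[str], bool],
    summarize: Callable[[str], str],
) -> List[str]:
    """Two-stage sketch: classify sentences, then summarize RP sentences into phrases."""
    # Stage 1: the RP classifier keeps only sentences predicted as RP sentences.
    rp_sentences = [s for s in sentences if is_rp_sentence(s)]
    # Stage 2: the summarizer turns each RP sentence into a short RP phrase.
    phrases: List[str] = []
    for sentence in rp_sentences:
        phrase = summarize(sentence).strip()
        if phrase and phrase not in phrases:  # skip empty and duplicate phrases
            phrases.append(phrase)
    return phrases
```

Keeping the stages as callables makes it easy to swap the `xgboost-t5small` and `distilroberta-t5base` components without changing the pipeline.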

The preprocessing step and models are described in the following.

The RP classification task had too many samples. To reduce the size of the dataset, we applied two thresholds to the data, tuned as hyperparameters on the train set.

1) Maximum Index Threshold: According to J. D’Souza and S. Auer, who designed the NCG dataset, most research problems come from the title, abstract, and introduction of a paper. However, since the structure of papers varies, it is hard to isolate these sections in order to reduce the number of unwanted training sentences.

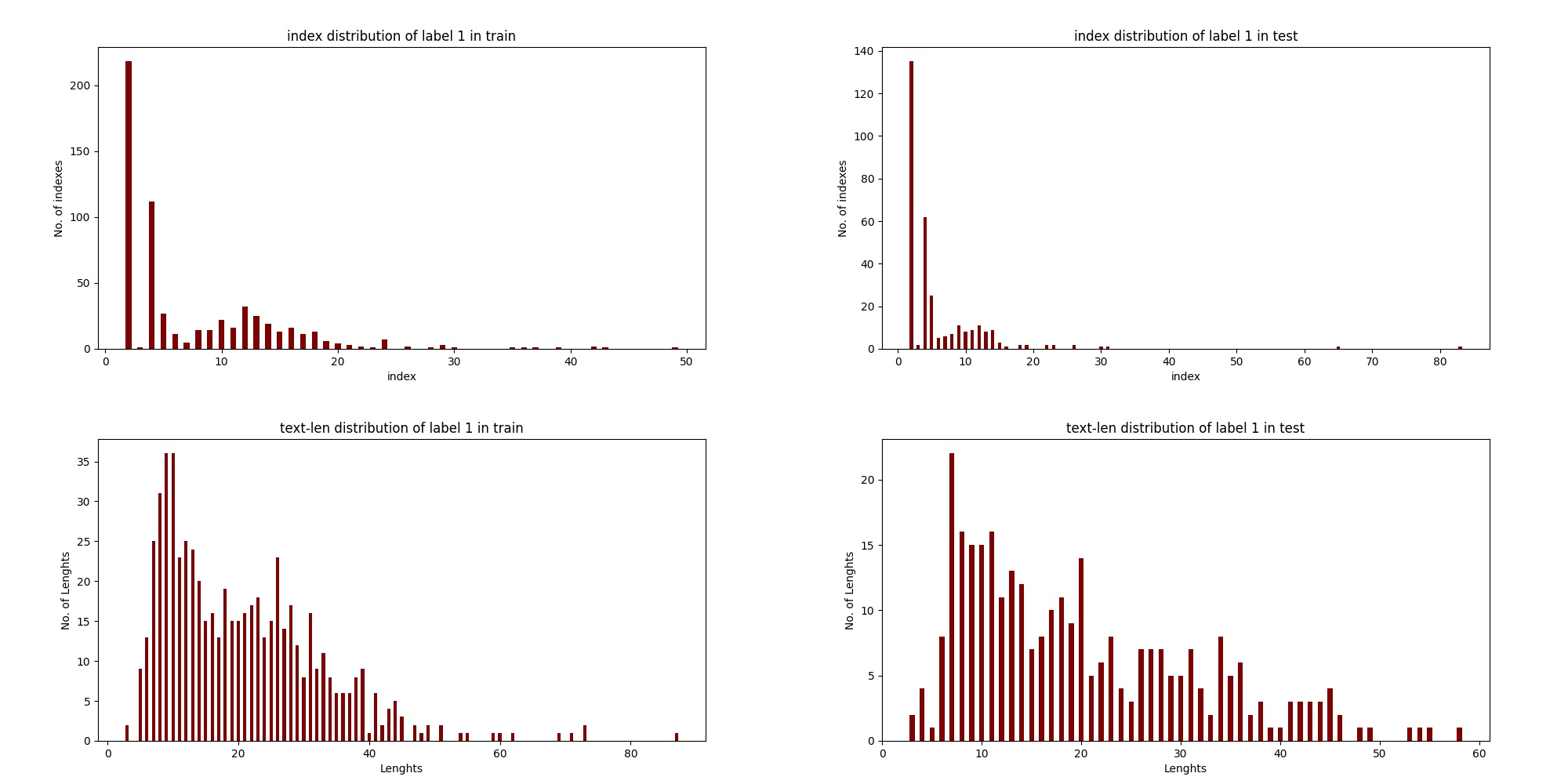

We analyzed the distribution of RP indexes to find an appropriate number of sentences per document to consider for the RP classification task. Here, an RP index is the position (sentence number) at which an RP sentence appears in the paper.

Figure 2 shows the distribution of RP indexes in the train and test sets. To avoid any bias we select

Figure 2: Distributions of RP indexes and lengths
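The index analysis can be expressed as a coverage computation: for a candidate maximum index, what fraction of RP sentences would survive? The sketch below is hypothetical; the target coverage is a free parameter, since the threshold actually selected is not stated here.

```python
from typing import Optional, Sequence


def index_coverage(rp_indices: Sequence[int], max_index: int) -> float:
    """Fraction of RP sentences whose index is at most max_index."""
    if not rp_indices:
        return 0.0
    return sum(1 for i in rp_indices if i <= max_index) / len(rp_indices)


def smallest_max_index(rp_indices: Sequence[int], target: float) -> Optional[int]:
    """Smallest observed index whose coverage reaches the target (None if unreachable)."""
    for candidate in sorted(set(rp_indices)):
        if index_coverage(rp_indices, candidate) >= target:
            return candidate
    return None
```

For example, with RP indexes `[1, 2, 2, 3, 10]`, a maximum index of 3 keeps 80% of the RP sentences.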

2) Minimum Text Length Threshold: Most papers contain sentences consisting of only one, two, or three words, whereas RP sentences are unlikely to be that short. To confirm this observation, we plotted the lengths of RP sentences in the train set. According to these observations, we used

As a result, we obtained a much more compact dataset for the classification task; its statistics are presented in Table 1. We reduced the train set by 82% and the test set by 79%. During the data reduction, we lost only 2 samples from the RP class in the test set (316 to 314). The created datasets are stored in the dataset/preprocessed/experiment-data directory.
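Both thresholds can be applied to one paper's sentences as sketched below. The threshold values are parameters because the chosen values are not given in this section, and labels follow a 0/1 convention with 1 marking RP sentences (an assumption). `count_lost_positives` is a hypothetical helper for checking how many RP sentences a setting drops, as with the 2 test samples mentioned above.

```python
from typing import List, Sequence, Tuple


def apply_thresholds(
    samples: Sequence[Tuple[str, int]],
    max_index: int,
    min_words: int,
) -> List[Tuple[int, str, int]]:
    """Keep (index, text, label) for sentences passing both thresholds.

    samples are one paper's (sentence, label) pairs in document order; the
    index is the 1-based sentence position used by the maximum index threshold.
    """
    kept = []
    for index, (text, label) in enumerate(samples, start=1):
        if index > max_index:
            break  # all later sentences exceed the maximum index threshold
        if len(text.split()) >= min_words:  # minimum text length threshold
            kept.append((index, text, label))
    return kept


def count_lost_positives(
    samples: Sequence[Tuple[str, int]], kept: Sequence[Tuple[int, str, int]]
) -> int:
    """How many label-1 sentences the thresholds removed."""
    return sum(label for _, label in samples) - sum(label for _, _, label in kept)
```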

RP sentence identification is framed as a binary text classification of sentences into class 0 or 1, where class 1 is the category of interest. It is a highly imbalanced text classification problem. For this task, we fine-tuned distilroberta-base with a learning rate of 2e-5, a batch size of 16, and 5 epochs.
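For reference, the stated classifier hyperparameters can be collected into a configuration whose keys mirror keyword arguments of Hugging Face `transformers.TrainingArguments`. This is a sketch: the variable names are hypothetical and `output_dir` is assumed from the `assets/clf-distilroberta` artifact name used in this repository.

```python
# Hyperparameters stated for the RP sentence classifier. Keys mirror keyword
# arguments of transformers.TrainingArguments; output_dir is an assumption.
CLASSIFIER_CHECKPOINT = "distilroberta-base"
CLASSIFIER_NUM_LABELS = 2  # class 0 vs. class 1 (RP sentence)
CLASSIFIER_TRAINING_ARGS = {
    "output_dir": "assets/clf-distilroberta",
    "learning_rate": 2e-5,
    "per_device_train_batch_size": 16,
    "num_train_epochs": 5,
}
```

With `transformers` installed, these would be consumed roughly as `AutoModelForSequenceClassification.from_pretrained(CLASSIFIER_CHECKPOINT, num_labels=CLASSIFIER_NUM_LABELS)` and `TrainingArguments(**CLASSIFIER_TRAINING_ARGS)`.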

After RP sentence identification, the next step is to extract RP phrases. For this, we take a summarization approach: to build a summarization model specific to this task, we fine-tuned the T5-base model with a learning rate of 2e-5, a batch size of 8, and 5 epochs. In the tokenization step of T5 text summarization, we used max_length=1024 for the input text and max_length=128 for the summary. During inference, we set the output max_length=10 when generating summaries.
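The three length limits correspond to the usual Hugging Face sequence-to-sequence workflow, sketched below under the assumption that the notebook follows it. `tokenizer` and `model` stand for a T5 tokenizer and model; the `text_target` argument is available in recent `transformers` releases, and the function names are hypothetical.

```python
MAX_INPUT_LENGTH = 1024  # tokenizer max_length for the input text (RP sentence)
MAX_TARGET_LENGTH = 128  # tokenizer max_length for the summary (RP phrase)
GEN_MAX_LENGTH = 10      # max_length of generated summaries at inference


def preprocess(tokenizer, sentences, phrases):
    """Tokenize inputs and targets for seq2seq fine-tuning of T5."""
    model_inputs = tokenizer(sentences, max_length=MAX_INPUT_LENGTH, truncation=True)
    targets = tokenizer(text_target=phrases, max_length=MAX_TARGET_LENGTH, truncation=True)
    model_inputs["labels"] = targets["input_ids"]  # decoder targets
    return model_inputs


def generate_phrase(model, tokenizer, sentence):
    """Summarize one RP sentence into a short RP phrase."""
    inputs = tokenizer(
        sentence, max_length=MAX_INPUT_LENGTH, truncation=True, return_tensors="pt"
    )
    output_ids = model.generate(**inputs, max_length=GEN_MAX_LENGTH)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

The short generation limit (10) fits the task, since RP phrases are typically a few words long, while the generous input limit avoids truncating long sentences.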

Metrics: For the whole system, the NCG task scoring program calculates F1, precision, and recall for Sentences, Information Units, and Triples. Since we are only interested in one IU, the results for triples are the F1, precision, and recall of the research-problem IU triples. For text summarization, we additionally computed Rouge1, Rouge2, RougeL, and RougeLsum in our own evaluations.
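At the level of individual items (sentence ids, IU names, or triples), precision, recall, and F1 reduce to set overlap. The sketch below is a simplified exact-match version for one paper; the official scoring program may normalize strings or aggregate over papers differently.

```python
from typing import Iterable, Tuple


def prf1(predicted: Iterable[str], gold: Iterable[str]) -> Tuple[float, float, float]:
    """Exact-match precision, recall, and F1 between predicted and gold items."""
    pred, ref = set(predicted), set(gold)
    true_positives = len(pred & ref)
    precision = true_positives / len(pred) if pred else 0.0
    recall = true_positives / len(ref) if ref else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1
```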

Baseline Model: For comparison with the proposed method, we built the simplest model for the task, called xgboost-t5small. This model uses TF-IDF features with an XGBoost classifier for RP sentence identification, and T5-Small for text summarization. In our analysis, XGBoost performed better than the other classifiers we tried with TF-IDF features.
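To illustrate what the baseline's TF-IDF features measure, here is a plain-Python TF-IDF. It is not the baseline's actual vectorizer, whose settings are not given here; for instance, scikit-learn's `TfidfVectorizer` smooths the IDF and L2-normalizes rows by default. A term that appears in every sentence gets weight 0, which is one way to see why words shared by RP and non-RP sentences contribute little.

```python
import math
from collections import Counter
from typing import Dict, List, Sequence


def tfidf(docs: Sequence[str]) -> List[Dict[str, float]]:
    """Plain TF-IDF: term frequency times log(N / document frequency)."""
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    # Document frequency: in how many sentences each term occurs.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    vectors = []
    for tokens in tokenized:
        counts = Counter(tokens)
        vectors.append(
            {term: (c / len(tokens)) * math.log(n_docs / df[term]) for term, c in counts.items()}
        )
    return vectors
```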

Table 2 presents the experimental evaluations on the test sets. The RP classification results are macro-averaged scores. According to these results, even fine-tuning a transformer model in the simplest way performs quite well on this task, although the data reduction technique may also contribute to this.
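Macro averaging computes precision, recall, and F1 per class and then takes their unweighted mean, so the small RP class counts as much as the large one. The sketch below follows that convention (the same one used by scikit-learn's `average="macro"`); the function name is hypothetical.

```python
from typing import Sequence, Tuple


def macro_scores(
    y_true: Sequence[int], y_pred: Sequence[int], labels: Sequence[int] = (0, 1)
) -> Tuple[float, float, float]:
    """Return (macro F1, macro precision, macro recall) over the given labels."""
    f1s, precisions, recalls = [], [], []
    for c in labels:
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != c and p == c)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == c and p != c)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        f1s.append(f1)
        precisions.append(precision)
        recalls.append(recall)
    k = len(labels)
    return sum(f1s) / k, sum(precisions) / k, sum(recalls) / k
```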

For RP summarization, T5 models appear to be an appropriate choice: fine-tuning different versions of T5 in the simplest way, without any hyperparameter tuning, gives very promising results.

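| Model | RP Classification F1 | RP Classification P | RP Classification R | RP Summarization Rouge1 | RP Summarization Rouge2 | RP Summarization RougeL | RP Summarization RougeLsum |
|---|---|---|---|---|---|---|---|
| xgboost-t5small | 0.63 | 0.78 | 0.59 | 75.12 | 55.04 | 64.86 | 64.86 |
| distilroberta-t5base | 0.75 | 0.77 | 0.74 | 79.56 | 62.95 | 71.42 | 71.42 |

Table 2: Experimental evaluations on the test sets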
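The Rouge1 and Rouge2 columns measure n-gram overlap between a generated phrase and the reference phrase. A simplified ROUGE-N F-measure is sketched below; it uses lowercased whitespace tokens and no stemming, unlike standard implementations such as the `rouge_score` package, and it does not cover RougeL/RougeLsum, which are based on longest common subsequences. Table 2 reports the scores multiplied by 100.

```python
from collections import Counter


def ngrams(tokens, n):
    """Multiset of the n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def rouge_n(candidate: str, reference: str, n: int = 1) -> float:
    """Simplified ROUGE-N F-measure between a candidate and a reference string."""
    cand = ngrams(candidate.lower().split(), n)
    ref = ngrams(reference.lower().split(), n)
    overlap = sum((cand & ref).values())  # clipped n-gram matches
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)
```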

In conclusion, the distilroberta-t5base model performs well, and introducing more complexity into this model may further boost its performance.

| Model | Sentences F1 | Sentences P | Sentences R | Information Units F1 | Information Units P | Information Units R | Triples F1 | Triples P | Triples R | Average F1 |
|---|---|---|---|---|---|---|---|---|---|---|
| xgboost-t5small | 0.281 | 0.604 | 0.183 | 0.609 | 1.0 | 0.438 | 0.173 | 0.385 | 0.112 | 0.355 |
| distilroberta-t5base | 0.524 | 0.568 | 0.487 | 0.941 | 1.0 | 0.890 | 0.326 | 0.362 | 0.297 | 0.597 |

Table 3: Final results on the test set using the NCG task metrics

Table 3 presents the final results on the test set using the NCG task metrics. The main quantitative findings are:

- The proposed method, `distilroberta-t5base`, achieved an averaged F1 score of 0.597 and beat the baseline model by a large margin. The averaged F1 score is calculated as $(F1_{sentences} + F1_{IU} + F1_{triples})/3$.
- The research-problem IU is identified in 94% of the cases (IU F1 of 0.941). This percentage does not reflect whether the identified sentences are correct RPs.
- RP sentences are now identified with an F1 score of 0.524 (52%), nearly twice that of the baseline model (0.281).
- The weakness of the system lies in the triples, where the `distilroberta-t5base` model still has a low F1 score (0.326). This weakness may be addressed by giving more attention to the summarization module, since we did not put much effort into tuning its hyperparameters.
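The averaged F1 values can be recomputed from Table 3 with the sketch below, which also covers the variant that drops the IU term. Only the table values are taken from this document; the names are hypothetical.

```python
# F1 scores copied from Table 3.
TABLE3_F1 = {
    "xgboost-t5small": {"sentences": 0.281, "iu": 0.609, "triples": 0.173},
    "distilroberta-t5base": {"sentences": 0.524, "iu": 0.941, "triples": 0.326},
}


def averaged_f1(scores: dict, include_iu: bool = True) -> float:
    """(F1_sentences + F1_IU + F1_triples) / 3, or the two-term variant without the IU."""
    keys = ["sentences", "iu", "triples"] if include_iu else ["sentences", "triples"]
    return sum(scores[k] for k in keys) / len(keys)


if __name__ == "__main__":
    for model, scores in TABLE3_F1.items():
        print(f"{model}: {averaged_f1(scores):.3f} (3 terms), "
              f"{averaged_f1(scores, include_iu=False):.3f} (without IU)")
```

Run as a script, it prints 0.597 and 0.425 for `distilroberta-t5base`, and 0.354 and 0.227 for `xgboost-t5small`. The small gap to the reported baseline average of 0.355 presumably comes from averaging unrounded scores.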

Based on the experiments on the test set, we chose the distilroberta-base model for the final system. Table 2 and Table 3 show the experimental and final results, respectively. Our quantitative analysis is as follows:

- Recall is the most important metric for the RP classification task, because every RP sentence the classifier recalls gives the summarizer a chance to extract its RP phrase. The best model still falls short in this respect. Compared with the baseline model, however, its sentence recall improved by about 30 percentage points (from 0.183 to 0.487), which explains why the summarization module was able to extract triples at roughly twice the baseline rate (triples F1 of 0.326, about 32%, versus 0.173).
- The `xgboost-t5small` model shows that TF-IDF features are not well suited to this task. A possible reason is the high word overlap between RP sentences and sentences of other IUs, which leads to poorly discriminative features for the RP class.
- The summarization model works well in development, but its results drop in the final evaluation phase, where only the predicted RP sentences are used for phrase extraction. Given an RP sentence detection F1 score of 52.4%, the summarizer produced correct summaries 62.2% of the time (32.6% / 52.4% = 62.2%). Therefore, improving the accuracy of the RP sentence detection module should also improve the triple (RP phrase) extraction module.
- The system's averaged F1 score of 59.7% is inflated by the IU score, which is calculated regardless of how accurate the content is: if a paper has `research-problem.txt` triples, it counts as a true positive for the IU. Ignoring this metric and computing $(F1_{sentences} + F1_{triples})/2$ instead, the averaged F1 scores are 0.425 and 0.227 for the `distilroberta-t5base` and `xgboost-t5small` models, respectively. On this measure, the baseline reaches only about 53% of the proposed method's score (0.227 / 0.425), i.e. the proposed method improves on the baseline by about 87% (0.425 / 0.227 ≈ 1.87).
- Our investigation showed that in a few cases, sentences with RP labels are repeated several times in a paper, but the repeated occurrences do not appear among the original contribution sentences. For example, in the `training-set/Passage_re-ranking/1` paper, the first item of `info-units/research-problem.json` appears once in the title and once more in the body of the paper, but the index of the body sentence does not appear in `sentences.txt`.
- Many of the `info-units/research-problem.json` files have inconsistent data structures; we resolved this issue with hard-coded fixes when building the dataset. For example, in `training-set/natural-language-inference/`, papers 50, 45, and 14 have a data structure that is inconsistent with the other papers, and this scenario is repeated many times. For consistency, the RPs in these papers should be stored inside a list, as in the other papers.
- We found that the scoring program evaluates only two papers per task. We fixed this by letting the evaluation go through all the papers, changing the following line of code from `for i in range(2):` to `for i in range(len(os.listdir(os.path.join(gold_dir, task)))):`.
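As a generic alternative to hard-coded fixes, the inconsistent `research-problem.json` layouts described above could be normalized into a flat list when loading. The sketch below is hypothetical: beyond the observation that RPs should sit inside a list, the file layouts it accepts (bare strings, nested lists, or dicts whose values hold RPs) are assumptions.

```python
import json
from typing import Any, List


def normalize_rp_entries(data: Any) -> List[str]:
    """Flatten a research-problem.json payload into a list of RP strings.

    Hypothetical normalizer: a bare string is wrapped in a list, nested lists
    are flattened, and for a dict every value is normalized in file order.
    """
    if isinstance(data, str):
        return [data]
    if isinstance(data, list):
        return [rp for item in data for rp in normalize_rp_entries(item)]
    if isinstance(data, dict):
        return [rp for value in data.values() for rp in normalize_rp_entries(value)]
    return []  # numbers, null, etc. carry no RP text


def load_research_problems(path: str) -> List[str]:
    """Read a research-problem.json file and return its RPs as a flat list."""
    with open(path, encoding="utf-8") as handle:
        return normalize_rp_entries(json.load(handle))
```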

```
├── [assets]/          # model artifacts directory
├── [configuration]/   # configs of the model, data, and evaluations
├── [datahandler]/     # data loader/saver modules to load/save files
├── [dataset]/         # dataset directory containing the created data and experimental data
├── [images]/          # repository image directory
├── [notebooks]/       # experimental Jupyter notebooks, e.g. training text summarization (section 3.2) and classification (section 3.3)
├── [outputs]/         # model outputs and evaluation results
├── [report]/          # dataset-building and dataset-statistics reports
├── [src]/             # classification, evaluation, and summarizer scripts
├── .gitignore
├── README.md
├── __init__.py
├── build_dataset.py   # builds the datasets described in section 2
├── requirements.txt   # project requirements
├── runner.py          # final inference: combines the models and runs the evaluations
└── train_sentence_classifier_bs.py   # XGBoost baseline model
```

- Build the dataset: `python3 build_dataset.py`
- Train the text-summarization and text-classification models using the Jupyter notebooks in the `notebooks` directory, and save the artifacts in the `assets` directory under the names `clf-distilroberta` and `sum-t5base`.
- Run the following script to produce the outputs and evaluations in the `outputs/distilroberta-t5base` directory: `python3 runner.py`

Requirements:

- Python3
- Packages in requirements.txt