(non-distributed & distributed)

Use TensorFlow for image classification, covering data preparation, training, and testing (single machine with a single GPU, single machine with multiple GPUs, and multiple machines with multiple GPUs).

Blog post (in Chinese): http://blog.csdn.net/renhanchi/article/details/79570665

All parameters are defined in arg_parsing.py, so read it carefully before you run this program!

STEPS:

-

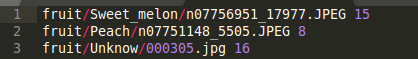

Put the images of each class in a separate directory. Then run img2list.sh to create a txt file containing the paths and labels of all images.
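As a rough illustration of what such a list file could look like, here is a minimal Python sketch. It is not the author's img2list.sh: the `build_image_list` helper, the one-directory-per-class layout, the space-separated `path label` line format, and labels assigned by sorted directory name are all assumptions made for the example.

```python
import os

# File extensions treated as images (an assumption for this sketch).
IMAGE_EXTS = (".jpg", ".jpeg", ".png", ".bmp")


def build_image_list(root_dir, list_path):
    """Write one 'path label' line per image; return the class-to-label map."""
    # Each subdirectory of root_dir is treated as one class.
    classes = sorted(
        d for d in os.listdir(root_dir)
        if os.path.isdir(os.path.join(root_dir, d))
    )
    class_to_label = {name: idx for idx, name in enumerate(classes)}
    with open(list_path, "w") as f:
        for name in classes:
            class_dir = os.path.join(root_dir, name)
            for fname in sorted(os.listdir(class_dir)):
                if fname.lower().endswith(IMAGE_EXTS):
                    path = os.path.join(class_dir, fname)
                    f.write("%s %d\n" % (path, class_to_label[name]))
    return class_to_label
```

Calling `build_image_list("data", "train.txt")` on a hypothetical `data/` directory would write one line per image and return the mapping from directory name to integer label.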

-

Run list2bin.py to convert the RGB images into TFRecords files.
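The conversion step can be sketched roughly as follows. This is not the code in list2bin.py: the helper names, the `path label` line format, and the feature keys `"image"` and `"label"` are assumptions, and the sketch stores the encoded file bytes rather than decoded RGB arrays. It uses `tf.io.TFRecordWriter`; older TensorFlow 1.x releases expose the same writer as `tf.python_io.TFRecordWriter`.

```python
def parse_list_line(line):
    """Split one 'path label' line into (path, int label)."""
    path, label = line.strip().rsplit(" ", 1)
    return path, int(label)


def write_tfrecords(list_path, record_path):
    """Serialize every image named in list_path into one TFRecord file."""
    # Imported here so that parse_list_line works without TensorFlow installed.
    import tensorflow as tf

    with open(list_path) as f, tf.io.TFRecordWriter(record_path) as writer:
        for line in f:
            if not line.strip():
                continue  # skip blank lines
            path, label = parse_list_line(line)
            with open(path, "rb") as img:
                image_bytes = img.read()  # encoded file bytes, not decoded RGB
            example = tf.train.Example(features=tf.train.Features(feature={
                "image": tf.train.Feature(
                    bytes_list=tf.train.BytesList(value=[image_bytes])),
                "label": tf.train.Feature(
                    int64_list=tf.train.Int64List(value=[label])),
            }))
            writer.write(example.SerializeToString())
```

Storing encoded bytes keeps the record file small; the reader then has to decode each image when parsing the examples.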

-

For a single machine, with one GPU or several, just run:

python main.py

For distributed training, first modify PS_HOSTS and WORKER_HOSTS in arg_parsing.py. Then copy the whole dataset and all code to every server.
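To make the role of the two host lists concrete, the sketch below turns them into a cluster dictionary. The comma-separated `host:port` string format, the `build_cluster` helper, and the addresses are assumptions for illustration; check arg_parsing.py for the format the program actually expects.

```python
def build_cluster(ps_hosts, worker_hosts):
    """Turn comma-separated 'host:port' strings into a cluster dictionary."""
    def split(hosts):
        return [h.strip() for h in hosts.split(",") if h.strip()]

    return {"ps": split(ps_hosts), "worker": split(worker_hosts)}


# Hypothetical addresses; replace them with the real servers.
PS_HOSTS = "192.168.0.1:2222"
WORKER_HOSTS = "192.168.0.2:2222,192.168.0.3:2222"

cluster = build_cluster(PS_HOSTS, WORKER_HOSTS)
print(cluster)
```

In TensorFlow 1.x, a dictionary of this shape can be passed to `tf.train.ClusterSpec`, which is why every server needs the same host lists.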

On the ps (parameter server) host, run:

CUDA_VISIBLE_DEVICES='' python src/main.py --job_name=ps --task_index=0

CUDA_VISIBLE_DEVICES='' hides all GPUs from the process, so the ps host uses the CPU to aggregate parameters.

On each worker host, run:

python src/main.py --job_name=worker --task_index=0

Remember to increase task_index by one on each additional server.
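The numbering can be sketched as below, assuming that task_index is the position of a server in its host list (the usual TensorFlow cluster convention). The `launch_commands` helper and the host names are hypothetical; only the command lines themselves come from the steps above.

```python
def launch_commands(ps_hosts, worker_hosts):
    """Return (host, command) pairs, one per server in the cluster."""
    commands = []
    for index, host in enumerate(ps_hosts):
        # Parameter servers hide all GPUs and run on the CPU.
        commands.append((host, "CUDA_VISIBLE_DEVICES='' python src/main.py "
                               "--job_name=ps --task_index=%d" % index))
    for index, host in enumerate(worker_hosts):
        commands.append((host, "python src/main.py "
                               "--job_name=worker --task_index=%d" % index))
    return commands


# Hypothetical host names, used only to label the output.
for host, command in launch_commands(["ps0"], ["worker0", "worker1"]):
    print("%s: %s" % (host, command))
```

Each job has its own counter, so the first ps host and the first worker host both use task_index 0.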

-

All checkpoint (ckpt) and event files are saved in MODEL_DIR.
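To check training progress from the saved files, a small sketch like the following could report the newest checkpoint step. It assumes checkpoints are written in TensorFlow's default V2 format, which produces files named `<prefix>-<step>.index`; the `latest_checkpoint_step` helper is hypothetical and not part of this program.

```python
import os
import re


def latest_checkpoint_step(model_dir):
    """Return the highest step among '<prefix>-<step>.index' files, or None."""
    pattern = re.compile(r"-(\d+)\.index$")
    steps = []
    for fname in os.listdir(model_dir):
        match = pattern.search(fname)
        if match:
            steps.append(int(match.group(1)))
    return max(steps) if steps else None
```

When TensorFlow is available, `tf.train.latest_checkpoint(model_dir)` returns the path prefix of the newest checkpoint and is the more direct choice.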

-

For testing, just run:

python src/main.py --mode=testing

Notes

-

DO READ arg_parsing.py again and again to understand and control this program.

-

Use CUDA_VISIBLE_DEVICES to choose which GPUs the program may use. For example, CUDA_VISIBLE_DEVICES=0,2 exposes only GPUs 0 and 2.
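The same selection can be made from inside Python, as in this minimal sketch. The `select_gpus` helper is hypothetical; it has to run before any CUDA library is initialized, so in practice before TensorFlow is imported.

```python
import os


def select_gpus(gpu_ids):
    """Expose only the given GPU indices to CUDA libraries loaded afterwards."""
    value = ",".join(str(i) for i in gpu_ids)
    os.environ["CUDA_VISIBLE_DEVICES"] = value
    return value


select_gpus([0, 2])  # same effect as CUDA_VISIBLE_DEVICES=0,2 on the command line
# select_gpus([])    # an empty value hides all GPUs, as used for the ps host
```

Inside the process, the visible GPUs are renumbered from zero, so physical GPUs 0 and 2 appear as devices 0 and 1.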

-

For visualization, run:

tensorboard --logdir=models/

For more details, please see the blog post linked above.