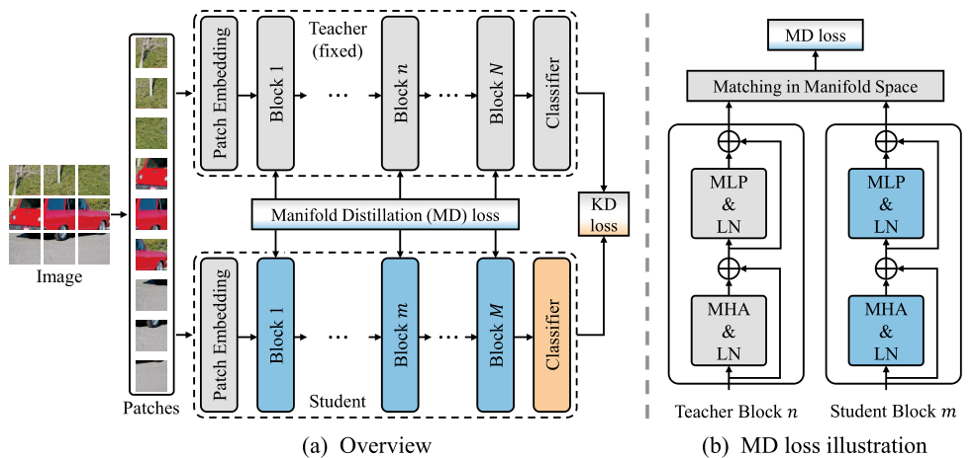

Implementation of the NeurIPS 2022 paper: Learning Efficient Vision Transformers via Fine-Grained Manifold Distillation.

This paper exploits patch-level information and proposes a fine-grained manifold distillation method for transformer-based networks.
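To make the idea concrete, here is a minimal PyTorch sketch of a patch-level manifold (relation-map) loss. It is an illustration, not the repository's code. It assumes that student and teacher features are patch tokens of shape (B, N, D) with the same number of patches N, which holds here because both models use 16x16 patches on 224x224 inputs. It compares cosine-similarity maps so that the student and teacher widths may differ, and it splits the loss into intra-image, inter-image and randomly sampled terms. The function names, and the mapping of the weights onto the training command's `--w-patch`, `--w-sample`, `--w-rand` and `--K` flags, are hypothetical.

```python
import torch
import torch.nn.functional as F


def relation_loss(f_s: torch.Tensor, f_t: torch.Tensor) -> torch.Tensor:
    """Mean squared Frobenius distance between student and teacher relation maps.

    f_s: (G, M, D_s), f_t: (G, M, D_t). Each of the G groups gives an M x M
    cosine-similarity map, so the feature widths D_s and D_t may differ.
    """
    f_s = F.normalize(f_s, dim=-1)
    f_t = F.normalize(f_t, dim=-1)
    m_s = f_s @ f_s.transpose(-1, -2)  # (G, M, M)
    m_t = f_t @ f_t.transpose(-1, -2)  # (G, M, M)
    return ((m_t - m_s) ** 2).sum(dim=(-1, -2)).mean()


def manifold_loss(f_s, f_t, k=192, w_patch=4.0, w_sample=0.1, w_rand=0.2):
    """Hypothetical patch-level manifold loss for one student/teacher layer pair.

    f_s: (B, N, D_s) student patch tokens; f_t: (B, N, D_t) teacher patch tokens.
    Default weights copy the values in the training command; the mapping of
    flags to terms is an assumption.
    """
    b, n, _ = f_s.shape
    # Intra-image: relations among the N patches of each image (B maps of N x N).
    loss_patch = relation_loss(f_s, f_t)
    # Inter-image: relations among the B images at each patch position (N maps of B x B).
    loss_sample = relation_loss(f_s.transpose(0, 1), f_t.transpose(0, 1))
    # Random: k patches drawn from the whole batch, shared by student and teacher (one k x k map).
    idx = torch.randperm(b * n, device=f_s.device)[:k]
    loss_rand = relation_loss(f_s.reshape(b * n, -1)[idx].unsqueeze(0),
                              f_t.reshape(b * n, -1)[idx].unsqueeze(0))
    return w_patch * loss_patch + w_sample * loss_sample + w_rand * loss_rand
```

Because only N x N, B x B and K x K similarity maps are compared, DeiT-Tiny's 192-dimensional tokens can be matched against CaiT-S24's 384-dimensional tokens without a projection layer.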

- `pytorch==1.8.0`
- `timm==0.5.4`

Download and extract the ImageNet train and val images from http://image-net.org/. The expected directory structure is:

    path/to/imagenet/
    ├── train/
    │   ├── n01440764
    │   │   ├── n01440764_10026.JPEG
    │   │   ├── n01440764_10027.JPEG
    │   │   ├── ......
    │   ├── ......
    ├── val/
    │   ├── n01440764
    │   │   ├── ILSVRC2012_val_00000293.JPEG
    │   │   ├── ILSVRC2012_val_00002138.JPEG
    │   │   ├── ......
    │   ├── ......
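Before launching training, a quick standard-library check of this layout can catch a common mistake: the official ILSVRC2012 validation archive extracts into a single flat folder, whereas the tree above expects the val images sorted into per-class folders, like train. The sketch below (the function name is hypothetical and not part of this repository) verifies that both splits exist with identical class folders and counts the images. A complete ImageNet-1k copy should report 1000 classes, 1,281,167 training images and 50,000 validation images.

```python
from pathlib import Path


def check_imagenet_layout(root):
    """Return (num_classes, num_train_images, num_val_images) for an ImageNet folder.

    Assumes root/train/<wnid>/*.JPEG and root/val/<wnid>/*.JPEG.
    Raises ValueError if a split is missing or the class folders differ.
    """
    root = Path(root)
    classes, counts = {}, {}
    for split in ("train", "val"):
        split_dir = root / split
        if not split_dir.is_dir():
            raise ValueError(f"missing split directory: {split_dir}")
        class_dirs = sorted(p for p in split_dir.iterdir() if p.is_dir())
        classes[split] = [p.name for p in class_dirs]
        # Count image files (ImageNet uses the .JPEG extension) inside class folders.
        counts[split] = sum(1 for d in class_dirs for f in d.iterdir()
                            if f.is_file() and f.suffix.lower() in (".jpeg", ".jpg"))
    if classes["train"] != classes["val"]:
        raise ValueError("train/ and val/ contain different class folders")
    return len(classes["train"]), counts["train"], counts["val"]
```

For example, `check_imagenet_layout("path/to/imagenet")` returns a tuple that can be compared against the expected ImageNet-1k counts.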

To train a DeiT-Tiny student with a CaiT-S24 teacher, run:

    python -m torch.distributed.launch --nproc_per_node=8 main.py --distributed \
        --output_dir <output-dir> --data-path <dataset-dir> \
        --teacher-path <path-of-teacher-checkpoint> \
        --model deit_tiny_patch16_224 --teacher-model cait_s24_224 \
        --distillation-type soft --distillation-alpha 1 --distillation-beta 1 \
        --w-sample 0.1 --w-patch 4 --w-rand 0.2 --K 192 \
        --s-id 0 1 2 3 8 9 10 11 --t-id 0 1 2 3 20 21 22 23 \
        --drop-path 0

Note: the pretrained cait_s24_224 teacher checkpoint can be downloaded from DeiT.
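The command distills eight student blocks into eight teacher blocks. As we read it, the j-th entry of `--s-id` is paired with the j-th entry of `--t-id` (0 with 0, ..., 8 with 20, 11 with 23). One way to collect those intermediate outputs without editing the model code is a forward hook per block, sketched below. This is not necessarily how the repository does it; the helper names are hypothetical, and the sketch assumes that both models expose their transformer blocks as an indexable `model.blocks` (as timm's ViT/DeiT and CaiT classes do), that DeiT-Tiny prepends one class token, and that CaiT's self-attention blocks carry patch tokens only.

```python
import torch.nn as nn


def capture_block_outputs(model: nn.Module, layer_ids):
    """Hook model.blocks[i] for each i in layer_ids; outputs[i] keeps its latest output.

    Assumes the transformer blocks are exposed as an indexable `model.blocks`
    (as in timm's VisionTransformer/DeiT and CaiT classes).
    """
    outputs, handles = {}, []
    for i in layer_ids:
        def hook(module, inputs, output, i=i):  # default arg binds the current i
            outputs[i] = output
        handles.append(model.blocks[i].register_forward_hook(hook))
    return outputs, handles


def paired_features(s_outputs, t_outputs, s_id, t_id, student_prefix_tokens=1):
    """Pair the j-th --s-id layer with the j-th --t-id layer.

    Drops `student_prefix_tokens` leading tokens (assumed: DeiT-Tiny's class
    token) so both sides hold only patch tokens; CaiT's self-attention blocks
    are assumed to carry patch tokens only.
    """
    if len(s_id) != len(t_id):
        raise ValueError("--s-id and --t-id must list the same number of layers")
    return [(s_outputs[s][:, student_prefix_tokens:], t_outputs[t])
            for s, t in zip(s_id, t_id)]
```

Each returned pair can then be fed to a per-layer distillation loss. In a real training loop the teacher forward would typically run under `torch.no_grad()`, and the hooks can be detached with `handle.remove()` once they are no longer needed.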

| Teacher | Student | ImageNet Top-1 Acc. (%) | Checkpoint & log |
|---|---|---|---|
| CaiT-S24 | DeiT-Tiny | 76.4 | checkpoint / log |

If you find this project useful in your research, please consider citing:

    @inproceedings{hao2022manifold,
      author    = {Zhiwei Hao and Jianyuan Guo and Ding Jia and Kai Han and Yehui Tang and Chao Zhang and Han Hu and Yunhe Wang},
      title     = {Learning Efficient Vision Transformers via Fine-Grained Manifold Distillation},
      booktitle = {Advances in Neural Information Processing Systems},
      year      = {2022}
    }

This repo is based on DeiT and pytorch-image-models.