Interactive Toolbox for Precise Data Annotation and Robust Vision Learning

This repository is for end users. For source code, please visit our source code repository.

For a user-oriented introduction, please refer to our documentation and tutorial pages.

For a more academic-style introduction, one can also refer to our recently released workshop paper.

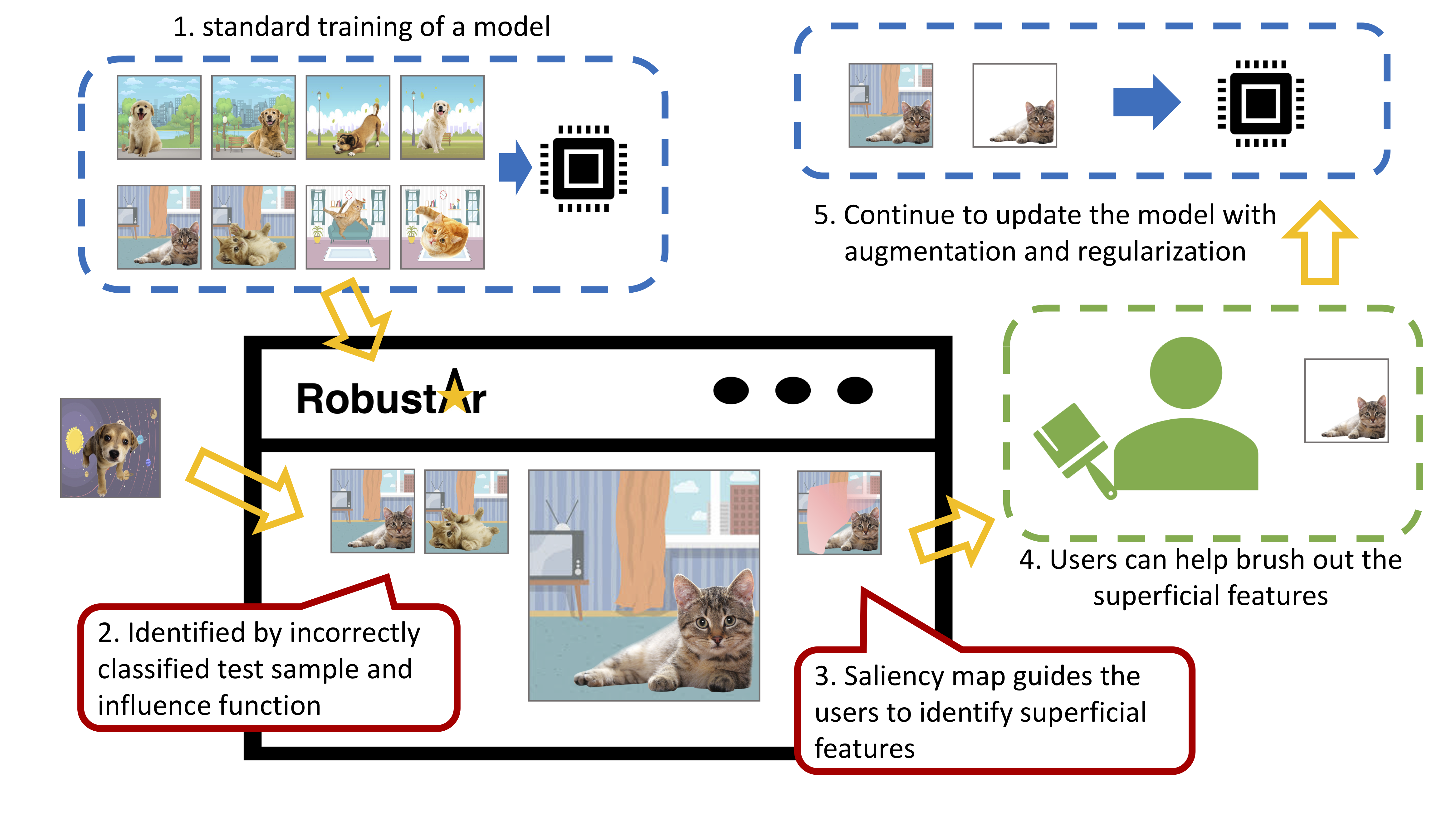

- The model can be trained elsewhere and then loaded into the software;

- Given new test samples, the software can help identify the training samples most responsible for each prediction through influence functions;

- The software offers saliency maps that show the user which parts of the input the model is paying attention to;

- Users can use the drawing tools to brush out superficial pixels;

- The newly annotated images then serve as augmented images for continued training.
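To make the last two steps more concrete, here is a minimal sketch of how a brushed-out region could become an augmented training image. It is not Robustar's implementation: it assumes, hypothetically, that the brush strokes are available as a boolean mask with the same height and width as the image, that brushed pixels are painted with a constant fill color, and that each augmented copy keeps the original label. The helper names `apply_brush_mask` and `build_augmented_dataset` are illustrative only.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[int, int, int]   # an RGB pixel
Image = List[List[Pixel]]      # rows of pixels
Mask = List[List[bool]]        # True where the user brushed


def apply_brush_mask(image: Image, mask: Mask, fill: Pixel = (0, 0, 0)) -> Image:
    """Return a copy of `image` with every brushed pixel replaced by `fill`.

    Hypothetical helper: assumes the brushed region is a boolean mask with
    exactly the same height and width as the image.
    """
    if len(image) != len(mask) or any(
        len(img_row) != len(mask_row) for img_row, mask_row in zip(image, mask)
    ):
        raise ValueError("image and mask must have the same shape")
    return [
        [fill if brushed else pixel for pixel, brushed in zip(img_row, mask_row)]
        for img_row, mask_row in zip(image, mask)
    ]


def build_augmented_dataset(
    samples: Sequence[Tuple[Image, str]],
    masks: Sequence[Mask],
    fill: Pixel = (0, 0, 0),
) -> List[Tuple[Image, str]]:
    """Keep the original (image, label) pairs and append one brushed copy of
    each, reusing the original label (an assumption: removing superficial
    pixels should not change the class)."""
    if len(samples) != len(masks):
        raise ValueError("need exactly one mask per sample")
    augmented = [
        (apply_brush_mask(image, mask, fill), label)
        for (image, label), mask in zip(samples, masks)
    ]
    return list(samples) + augmented


if __name__ == "__main__":
    img = [[(255, 255, 255), (10, 20, 30)],
           [(1, 2, 3), (4, 5, 6)]]
    mask = [[True, False],
            [False, True]]
    print(apply_brush_mask(img, mask))
    # [[(0, 0, 0), (10, 20, 30)], [(1, 2, 3), (0, 0, 0)]]
```

Returning a new image rather than editing in place keeps the original sample intact, so both versions can be used together for continued training.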


- To install Robustar, please first install Docker locally. If you wish to use a GPU, please also install a few more dependencies with `install-nvidia-toolkit.sh`.

- Then, one can start Robustar with `robustar.sh`, which is included in this repository. You may need to use `sudo` if you are on a UNIX system, or run a terminal as system administrator on Windows.

  - First-time users should run `./robustar.sh -m setup -a <version>` to pull the Docker image. Please visit our DockerHub page for a complete list of versions.

  - One can run `./robustar.sh -m run <options>` to start Robustar. Please read the next section for detailed options. You can also refer to `example.sh` for sample running configurations.
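If you prefer to launch Robustar from a Python script on a UNIX-like system, the setup and run steps can be wrapped as in the minimal sketch below. It is not part of Robustar: the helper names `setup_command`, `run_command` and `launch` are hypothetical, the only flags used are those listed in the built-in help message (`-m`, `-a`, `-p`, `-n`, `-t`, `-e`, `-i`, `-c`, `-o`), and the defaults mirror the ones documented there (port 8000, tag `latest`, container name `robustar`, config file `configs.json`). Passing those defaults explicitly is assumed to be equivalent to omitting them.

```python
import subprocess
from typing import List, Optional

# Assumes the script is invoked from the root of this repository.
SCRIPT = "./robustar.sh"


def setup_command(version: str = "latest") -> List[str]:
    """Build `./robustar.sh -m setup -a <version>`, which pulls the Docker image."""
    return [SCRIPT, "-m", "setup", "-a", version]


def run_command(
    port: int = 8000,
    tag: str = "latest",
    name: str = "robustar",
    train_dir: Optional[str] = None,
    test_dir: Optional[str] = None,
    influence_path: Optional[str] = None,
    checkpoint_dir: Optional[str] = None,
    config: str = "configs.json",
) -> List[str]:
    """Build `./robustar.sh -m run <options>`; path options left as None are omitted."""
    cmd = [SCRIPT, "-m", "run", "-p", str(port), "-a", tag, "-n", name]
    for flag, value in (
        ("-t", train_dir),       # training images folder
        ("-e", test_dir),        # testing images folder
        ("-i", influence_path),  # influence-function results
        ("-c", checkpoint_dir),  # model checkpoints folder
    ):
        if value is not None:
            cmd += [flag, value]
    cmd += ["-o", config]
    return cmd


def launch(cmd: List[str], use_sudo: bool = False) -> None:
    """Execute a command built above (requires Docker and robustar.sh)."""
    if use_sudo:  # may be needed on UNIX systems
        cmd = ["sudo", *cmd]
    subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Hypothetical dataset paths; replace them with your own folders.
    print(" ".join(run_command(train_dir="/data/images", test_dir="/data/test")))
    # ./robustar.sh -m run -p 8000 -a latest -n robustar -t /data/images -e /data/test -o configs.json
```

Building the command as a list (rather than one shell string) lets `subprocess.run` pass paths containing spaces without extra quoting. A first run would be `launch(setup_command("<version>"))` followed by `launch(run_command(...))`.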


Running `./robustar.sh` without any arguments displays the help message.

Built-in help message:

Help documentation for robustar.

Basic usage: robustar -m [command] [options]

[command] can be one of the following: setup, run.

setup will prepare and pull the docker image, and create a new container for it.

run will start to run the system.

Command line switches [options] are optional. The following switches are recognized.

-p --Sets the value for the port docker forwards to. Default is 8000.

-a --Sets the value for the tag of the image. Default is latest.

-n --Sets the value for the name of the docker container. Default is robustar.

-t --Sets the path of training images folder. Currently only supports the PyTorch DataLoader folder structure as following

-----images/

----------dogs/

---------------1.png

---------------2.png

----------cats/

---------------adc.png

---------------eqx.png

-e --Sets the path of testing images folder. Currently only supports the PyTorch DataLoader folder structure

-i --Sets the path of the calculation result of the influence function.

-c --Sets the path of model check points folder.

-o --Sets the path of configuration file. Default is configs.json.

-h --Displays this help message. No further functions are performed.
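Before passing a folder to `-t` or `-e`, it can help to check that it follows the layout shown in the help message: one subfolder per class, each containing that class's images. This is the same convention used by torchvision's `ImageFolder` dataset. The standard-library sketch below is not part of Robustar; the function name `summarize_image_folder` and the accepted extension list are assumptions, so adjust them to the formats you actually use.

```python
import tempfile
from pathlib import Path
from typing import Dict

# Assumed set of image extensions; extend it to match your data.
IMAGE_EXTENSIONS = {".png", ".jpg", ".jpeg", ".bmp"}


def summarize_image_folder(root: str) -> Dict[str, int]:
    """Return {class_name: image_count} for a root/<class>/<image> layout.

    Hidden entries (names starting with ".") are skipped. Raises ValueError
    if `root` is not a directory, holds loose files at the top level, or has
    a class folder without any recognised images.
    """
    root_path = Path(root)
    if not root_path.is_dir():
        raise ValueError(f"{root} is not a directory")
    counts: Dict[str, int] = {}
    for entry in sorted(root_path.iterdir()):
        if entry.name.startswith("."):
            continue
        if not entry.is_dir():
            raise ValueError(f"unexpected file at the top level: {entry.name}")
        images = [
            p for p in entry.iterdir()
            if p.is_file() and p.suffix.lower() in IMAGE_EXTENSIONS
        ]
        if not images:
            raise ValueError(f"class folder {entry.name!r} contains no images")
        counts[entry.name] = len(images)
    if not counts:
        raise ValueError(f"{root} contains no class folders")
    return counts


if __name__ == "__main__":
    # Recreate the example tree from the help message in a temporary folder.
    with tempfile.TemporaryDirectory() as tmp:
        for rel in ["dogs/1.png", "dogs/2.png", "cats/adc.png", "cats/eqx.png"]:
            path = Path(tmp, "images", rel)
            path.parent.mkdir(parents=True, exist_ok=True)
            path.touch()
        print(summarize_image_folder(str(Path(tmp, "images"))))
        # {'cats': 2, 'dogs': 2}
```

The per-class counts also make it easy to spot a heavily imbalanced or accidentally empty class before starting a long training run.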

Please visit our source code repository.

Also, feel free to check out our Trello board to stay tuned for our latest updates!

Robustar has finally released v0.0.1-beta! ✨

We are grateful for the community's interest in our system; please bear with us, as some features are still at an early stage.

We welcome feedback of all kinds!

Chonghan Chen · Haohan Wang · Leyang Hu · Linjing Sun · Yuhao Zhang · Xinnuo Li