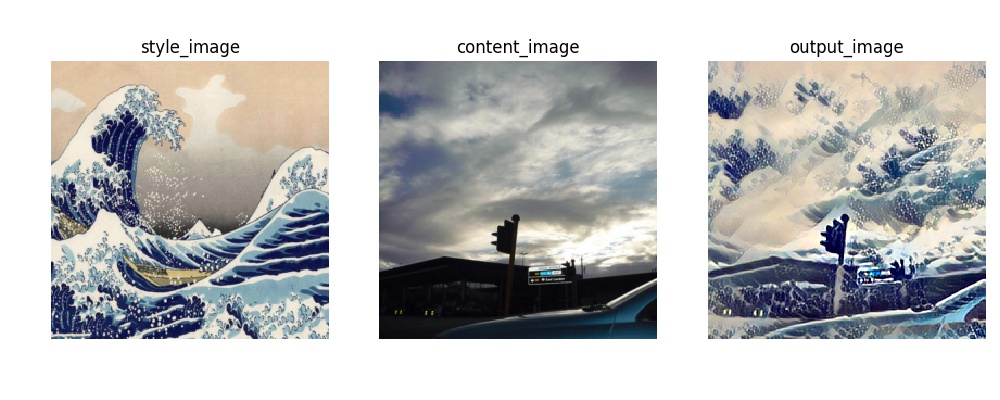

Image stylization combines a real image, which supplies the content, with the perceptual characteristics (the style) of a style image to produce a final stylized image.

So far, I have tried two methods for stylizing images.

The second method is trained on the COCO 2014 dataset.

| Package | Version |
|---|---|
| python | 3.5.2 |
| torch | 1.0.1 |
| torchvision | 0.2.2 |
| tqdm | 4.19.9 |

```bash
# train with the first method
python train.py

# train with the second method
python train2.py

# test on specified images
python test.py
```

An argument parser is coming soon.

The first method is an implementation of A Neural Algorithm of Artistic Style (Gatys et al.).

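The core idea is to use Gram matrices of a network's feature maps as the representation of style. The style loss compares the Gram matrices of the generated image with those of the style image, and it is traded off against a content loss that compares the generated image's features with those of the original content image.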
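A minimal PyTorch sketch of these losses, assuming the feature maps have already been extracted from a pretrained network (the paper uses VGG). The Gram normalization by `c * h * w` and the weights `alpha` and `beta` are illustrative choices, not necessarily what `train.py` uses.

```python
import torch
import torch.nn.functional as F

def gram_matrix(features):
    """Gram matrix of a (batch, channels, height, width) feature map."""
    b, c, h, w = features.size()
    flat = features.view(b, c, h * w)
    # Entry (i, j) is the inner product of channel maps i and j: it records which
    # features co-occur, independent of where they occur in the image.
    return torch.bmm(flat, flat.transpose(1, 2)) / (c * h * w)

def style_loss(gen_feats, style_feats):
    """Sum over the chosen layers of the MSE between Gram matrices."""
    loss = 0.0
    for g, s in zip(gen_feats, style_feats):
        loss = loss + F.mse_loss(gram_matrix(g), gram_matrix(s))
    return loss

def content_loss(gen_feat, content_feat):
    """MSE between the generated and content feature maps at one layer."""
    return F.mse_loss(gen_feat, content_feat)

def total_loss(gen_feats, style_feats, gen_content_feat, content_feat,
               alpha=1.0, beta=1e4):
    # alpha/beta set the content-vs-style tradeoff (hypothetical values)
    return (alpha * content_loss(gen_content_feat, content_feat)
            + beta * style_loss(gen_feats, style_feats))
```

Raising `beta` relative to `alpha` pushes the result toward the style image's textures; lowering it preserves more of the content image's structure.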

The only trainable parameters are the pixel values of the output image; the network's weights stay fixed.
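To illustrate that only the pixels are optimized, here is a sketch in which a small, randomly initialized, frozen convolution stands in for the pretrained VGG feature extractor (a hypothetical simplification). Adam is used as the optimizer here as an assumption; many implementations use L-BFGS instead.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical stand-in for the pretrained VGG feature extractor: one small conv layer.
extractor = nn.Sequential(nn.Conv2d(3, 8, kernel_size=3, padding=1), nn.ReLU())
for p in extractor.parameters():
    p.requires_grad_(False)  # the network is fixed and never updated

def gram(f):
    b, c, h, w = f.size()
    f = f.view(b, c, h * w)
    return torch.bmm(f, f.transpose(1, 2)) / (c * h * w)

content = torch.rand(1, 3, 32, 32)
style = torch.rand(1, 3, 32, 32)
with torch.no_grad():
    content_target = extractor(content)
    style_target = gram(extractor(style))

# The only trainable tensor: the output image's pixels, initialized from the content image.
image = content.clone().requires_grad_(True)
optimizer = torch.optim.Adam([image], lr=0.01)
alpha, beta = 1.0, 1e3  # hypothetical content/style weights

def step():
    optimizer.zero_grad()
    feats = extractor(image)
    c_loss = torch.mean((feats - content_target) ** 2)
    s_loss = torch.mean((gram(feats) - style_target) ** 2)
    loss = alpha * c_loss + beta * s_loss
    loss.backward()  # gradients reach `image` only
    optimizer.step()
    return loss.item()

losses = [step() for _ in range(50)]
```

In a real setup the extractor would return activations from several VGG layers, and the image is commonly clamped to the valid pixel range between steps.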


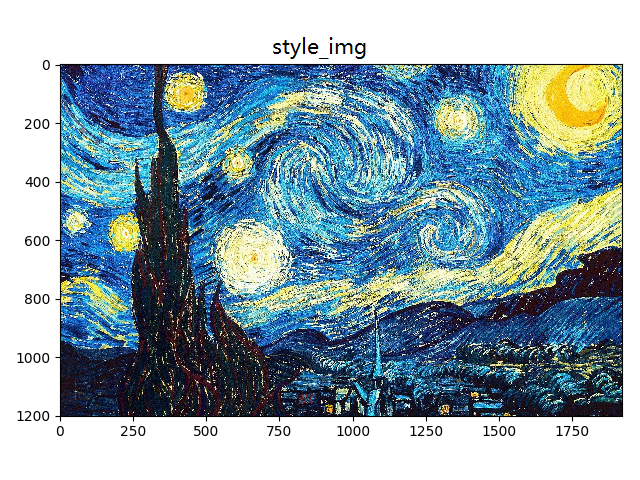

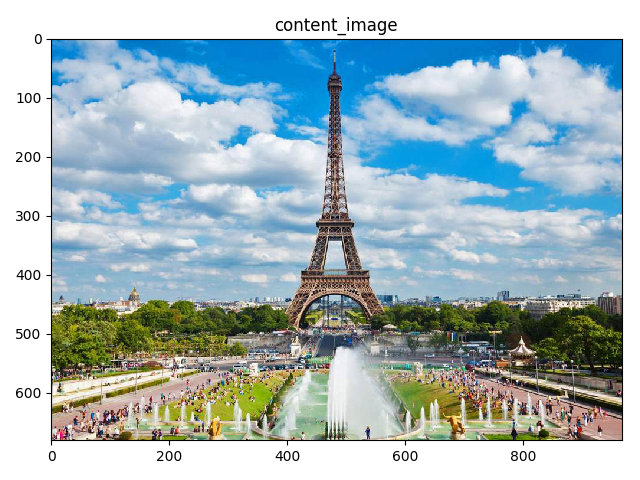

The second method is an implementation of Perceptual Losses for Real-Time Style Transfer and Super-Resolution (Johnson et al.).

Unlike the first method, this one generates the output with a feed-forward transform network. The network consists of a convolutional downsampling stage, a stack of residual blocks, and an upsampling stage that uses nearest-neighbor upsampling. Because a trained network stylizes any new content image in a single forward pass, it can run in real time.
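A compact sketch of a transform network with these three parts. The layer widths, kernel sizes, the number of residual blocks (5) and the use of instance normalization follow common re-implementations of Johnson et al. and are assumptions, not necessarily this repository's exact layers.

```python
import torch
import torch.nn as nn

class ConvLayer(nn.Module):
    """Reflection padding + convolution; keeps spatial size when stride=1."""
    def __init__(self, in_ch, out_ch, kernel_size, stride=1):
        super(ConvLayer, self).__init__()
        self.pad = nn.ReflectionPad2d(kernel_size // 2)
        self.conv = nn.Conv2d(in_ch, out_ch, kernel_size, stride)

    def forward(self, x):
        return self.conv(self.pad(x))

class ResidualBlock(nn.Module):
    """Two 3x3 convolutions with a skip connection."""
    def __init__(self, ch):
        super(ResidualBlock, self).__init__()
        self.body = nn.Sequential(
            ConvLayer(ch, ch, 3), nn.InstanceNorm2d(ch, affine=True), nn.ReLU(),
            ConvLayer(ch, ch, 3), nn.InstanceNorm2d(ch, affine=True),
        )

    def forward(self, x):
        return x + self.body(x)

class UpsampleConv(nn.Module):
    """Nearest-neighbor upsampling followed by a convolution."""
    def __init__(self, in_ch, out_ch, kernel_size, scale=2):
        super(UpsampleConv, self).__init__()
        self.up = nn.Upsample(scale_factor=scale, mode='nearest')
        self.conv = ConvLayer(in_ch, out_ch, kernel_size)

    def forward(self, x):
        return self.conv(self.up(x))

def norm_relu(ch):
    return [nn.InstanceNorm2d(ch, affine=True), nn.ReLU()]

class TransformNet(nn.Module):
    def __init__(self, n_res=5):
        super(TransformNet, self).__init__()
        # Downsampling: 9x9 conv, then two stride-2 convs (H, W -> H/4, W/4)
        down = ([ConvLayer(3, 32, 9)] + norm_relu(32)
                + [ConvLayer(32, 64, 3, stride=2)] + norm_relu(64)
                + [ConvLayer(64, 128, 3, stride=2)] + norm_relu(128))
        res = [ResidualBlock(128) for _ in range(n_res)]
        # Upsampling: two x2 nearest-neighbor stages back to H, W, then a 9x9 output conv
        up = ([UpsampleConv(128, 64, 3)] + norm_relu(64)
              + [UpsampleConv(64, 32, 3)] + norm_relu(32)
              + [ConvLayer(32, 3, 9)])
        self.model = nn.Sequential(*(down + res + up))

    def forward(self, x):
        return self.model(x)
```

With input height and width divisible by 4, the output has the same size as the input. Upsampling followed by a convolution is a common alternative to transposed convolutions because it tends to avoid checkerboard artifacts.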

Furthermore, a total variation loss is added to smooth the final image.
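One common form of the total variation loss penalizes squared differences between neighboring pixels, as sketched below; some implementations use absolute differences instead, and the exact form used in `train2.py` is not stated here.

```python
import torch

def total_variation_loss(img):
    """Sum of squared differences between vertically and horizontally adjacent pixels.

    img: tensor of shape (batch, channels, height, width).
    """
    dh = img[:, :, 1:, :] - img[:, :, :-1, :]  # vertical neighbors
    dw = img[:, :, :, 1:] - img[:, :, :, :-1]  # horizontal neighbors
    return torch.sum(dh ** 2) + torch.sum(dw ** 2)
```

During training it would be added to the other terms with its own weight, e.g. `loss = content + style + tv_weight * total_variation_loss(output)`, where `tv_weight` is a hypothetical hyperparameter.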

Training for one epoch of 32,000 images with a batch size of 4 takes about 2 hours and 40 minutes on a single K80 (12 GB) GPU.
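For reference, these figures work out to the following per-iteration cost:

$$
\frac{32000\ \text{images}}{4\ \text{images/iteration}} = 8000\ \text{iterations},\qquad
\frac{9600\ \text{s}}{8000\ \text{iterations}} = 1.2\ \text{s/iteration} \approx 3.3\ \text{images/s}
$$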

The model's `state_dict` (weights) can be downloaded from my Google Drive.
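A minimal sketch of loading such a `state_dict`. `build_model` is a hypothetical stand-in; in practice it would construct this repository's transform network, and the weights would be read from the downloaded file rather than the in-memory buffer used here for the demo.

```python
import io
import torch
import torch.nn as nn

def build_model():
    # Hypothetical stand-in: replace with the repository's transform network class
    return nn.Sequential(nn.Conv2d(3, 8, 3, padding=1), nn.ReLU(),
                         nn.Conv2d(8, 3, 3, padding=1))

def load_weights(model, f):
    # map_location='cpu' lets weights saved on a GPU load on a CPU-only machine
    state = torch.load(f, map_location='cpu')
    model.load_state_dict(state)
    model.eval()  # inference mode for any normalization/dropout layers
    return model

# Demo: save a state_dict to an in-memory buffer, then restore it into a fresh model.
trained = build_model()
buf = io.BytesIO()
torch.save(trained.state_dict(), buf)
buf.seek(0)
restored = load_weights(build_model(), buf)

with torch.no_grad():
    x = torch.rand(1, 3, 16, 16)
    same = torch.allclose(trained(x), restored(x))
```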