A PyTorch implementation of the Pix2Pix architecture for image-to-image translation, tailored for depth estimation from dashboard camera images. The model consists of a U-Net-based generator that learns a mapping from RGB input images to their corresponding depth maps, and a PatchGAN discriminator that enforces local realism by evaluating image patches rather than the entire image. The system is trained using a combination of adversarial loss (to encourage realistic outputs) and L1 loss (to ensure pixel-wise similarity to the ground truth). Input-target image pairs are extracted from composite images where the input RGB image is on the right half and the target depth/thermal image is on the left.
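The composite layout described above can be illustrated with a short sketch. This is not the repository's `data.py` or `loader.py`; the function name `split_composite` and the synthetic test image are hypothetical, and the sketch assumes the composite is an even-width `(H, W, C)` NumPy array.

```python
import numpy as np


def split_composite(composite: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Split a side-by-side composite (H, W, C) into (rgb_input, target).

    Layout as described in the text: the target sits on the left half,
    the RGB input on the right half. The name is illustrative only.
    """
    width = composite.shape[1]
    if width % 2 != 0:
        raise ValueError(f"composite width must be even, got {width}")
    half = width // 2
    target = composite[:, :half]      # left half: depth target
    rgb_input = composite[:, half:]   # right half: RGB input
    return rgb_input, target


# Synthetic 4x8 composite: left half filled with 1, right half with 2.
demo = np.concatenate(
    [
        np.full((4, 4, 3), 1, dtype=np.uint8),
        np.full((4, 4, 3), 2, dtype=np.uint8),
    ],
    axis=1,
)
rgb_input, target = split_composite(demo)
```

Slicing returns views rather than copies, so call `.copy()` on each half if the composite buffer is reused or modified later.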

- Generator: U-Net architecture with skip connections

- Discriminator: PatchGAN discriminator for realistic image generation

- Loss: Combined adversarial loss and L1 loss for better pixel-wise accuracy
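As a rough illustration of how the pieces listed above fit together, the following sketch combines an adversarial term computed over a grid of patch scores with a weighted L1 term. It is not the code in `train/train.py`. The function names, the L1 weight of 100, the 0.5 factor on the discriminator loss, and the assumption that the discriminator outputs raw logits are all assumptions borrowed from common Pix2Pix setups, not values confirmed by this repository.

```python
import torch
import torch.nn.functional as F

L1_WEIGHT = 100.0  # assumed weighting; not taken from this repository


def generator_loss(patch_logits_fake, fake, target, l1_weight=L1_WEIGHT):
    """Adversarial loss over patch scores plus weighted pixel-wise L1 loss."""
    # The generator wants every patch of its output to be scored as real (1).
    adversarial = F.binary_cross_entropy_with_logits(
        patch_logits_fake, torch.ones_like(patch_logits_fake)
    )
    # L1 term keeps the generated depth map close to the ground truth.
    pixel = F.l1_loss(fake, target)
    return adversarial + l1_weight * pixel


def discriminator_loss(patch_logits_real, patch_logits_fake):
    """Real patches should score 1, generated patches 0 (averaged over patches)."""
    real_term = F.binary_cross_entropy_with_logits(
        patch_logits_real, torch.ones_like(patch_logits_real)
    )
    fake_term = F.binary_cross_entropy_with_logits(
        patch_logits_fake, torch.zeros_like(patch_logits_fake)
    )
    return 0.5 * (real_term + fake_term)


# Toy tensors standing in for real model outputs (shapes are arbitrary).
torch.manual_seed(0)
fake = torch.rand(2, 1, 64, 64)        # generated depth maps
target = torch.rand(2, 1, 64, 64)      # ground-truth depth maps
logits_fake = torch.randn(2, 1, 6, 6)  # one score per image patch
logits_real = torch.randn(2, 1, 6, 6)

g_loss = generator_loss(logits_fake, fake, target)
d_loss = discriminator_loss(logits_real, logits_fake)
```

Because the discriminator returns one score per patch instead of one score per image, the label tensors are built with `torch.ones_like` and `torch.zeros_like` so that they match whatever patch grid the discriminator produces.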

Pix2Pix/

├── data/

│ ├── loader.py # Data loading utilities

│ └── __init__.py

├── model/

│ ├── generator.py # U-Net Generator

│ ├── discriminator.py # PatchGAN Discriminator

│ └── __init__.py

├── train/

│ └── train.py # Training script

├── inference/

│ └── test.py # Inference script

├── output/ # Generated results

└── data.py # Data preprocessing

- PyTorch

- NumPy

- OpenCV

- Matplotlib

- imageio

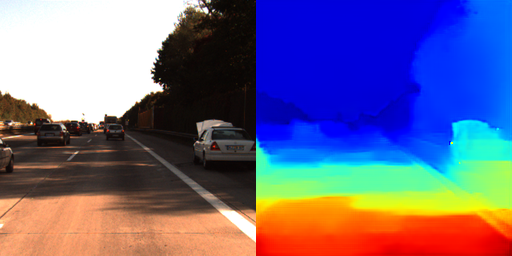

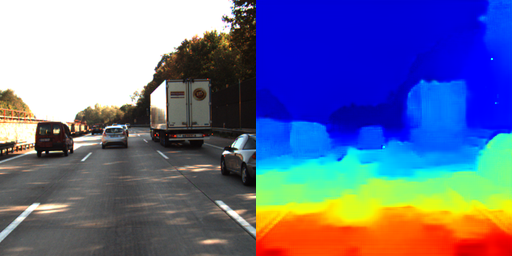

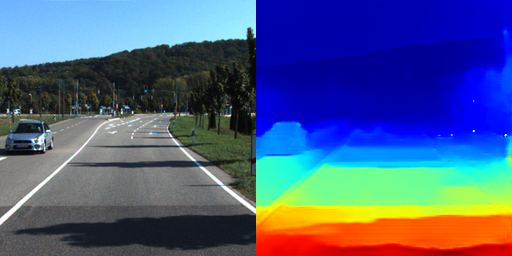

python train/train.pypython inference/test.pyThe model generates depth maps from dashboard camera images. Here are some example results:

| Input → Output |

|---|

|

|

|

The model is trained on the pix2pix-depth dataset containing paired RGB and depth images from dashboard cameras.

Dataset used: https://www.kaggle.com/datasets/greg115/pix2pix-depth

This project is licensed under the Apache License - see the LICENSE file for details.