Taha Koleilat, Hojat Asgariandehkordi, Hassan Rivaz, Yiming Xiao


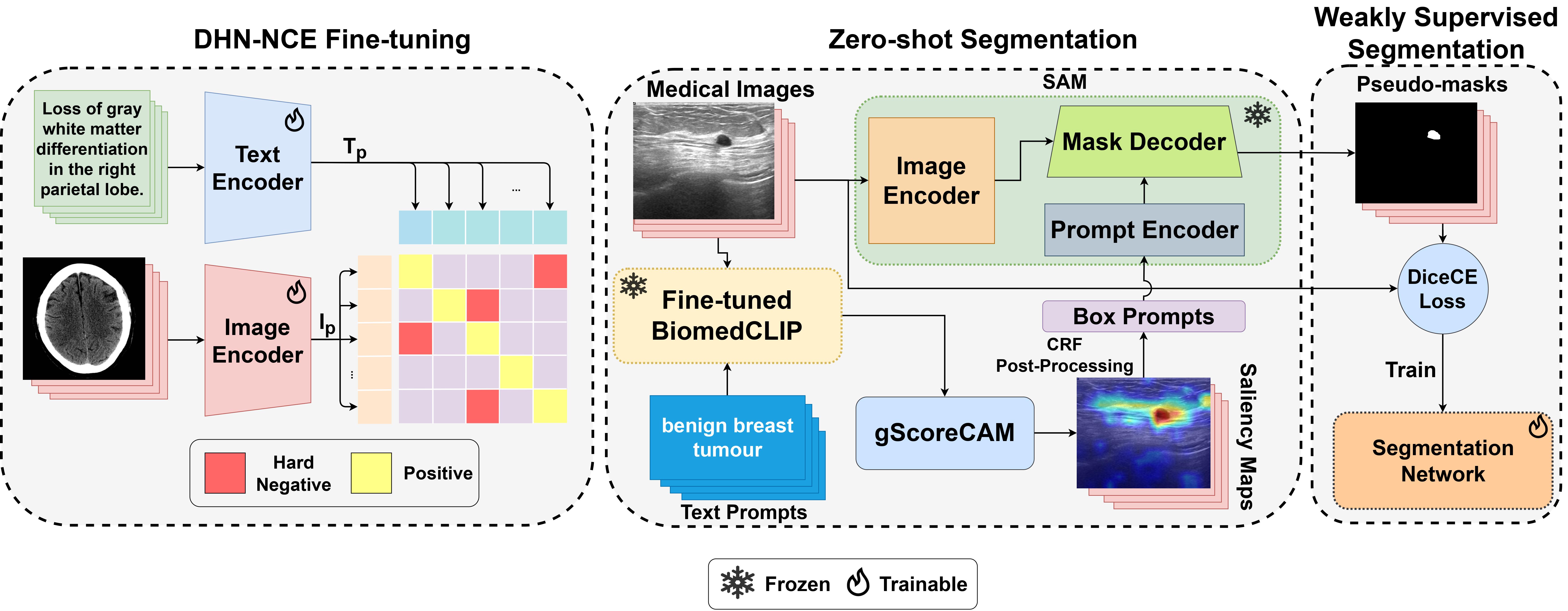

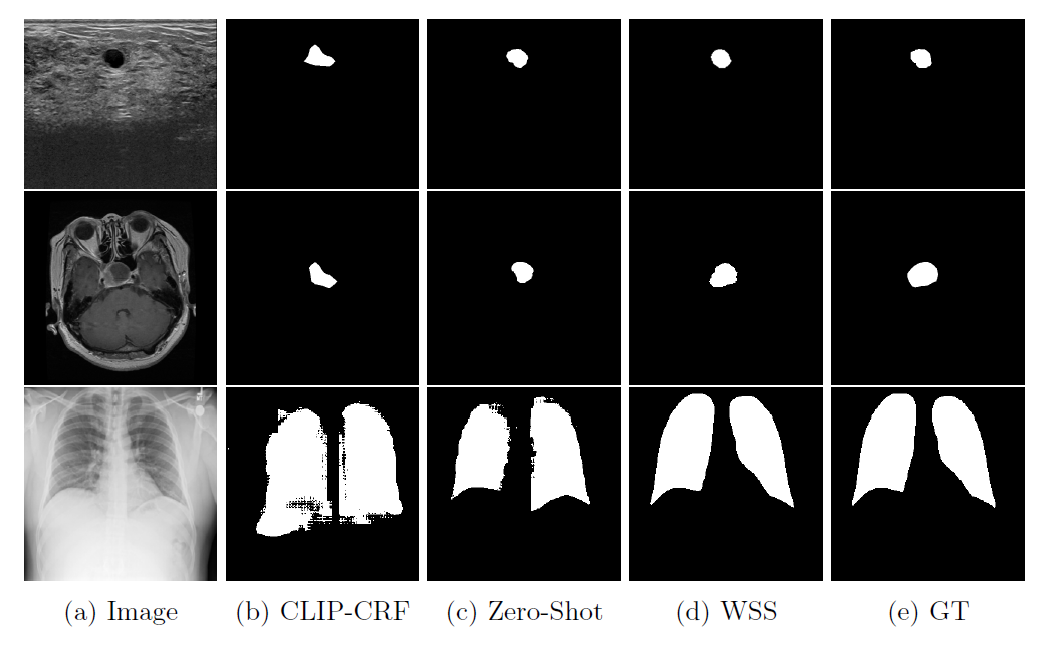

Abstract: Medical image segmentation of anatomical structures and pathology is crucial in modern clinical diagnosis, disease study, and treatment planning. To date, great progress has been made in deep learning-based segmentation techniques, but most methods still lack data efficiency, generalizability, and interactivity. Consequently, the development of new, precise segmentation methods that require less labeled data is of utmost importance in medical image analysis. Recently, the emergence of foundation models, such as CLIP and Segment-Anything-Model (SAM), with comprehensive cross-domain representation has opened the door for interactive and universal image segmentation. However, exploration of these models for data-efficient medical image segmentation remains limited, even though it is highly necessary. In this paper, we propose a novel framework, called MedCLIP-SAM, which combines CLIP and SAM models to generate segmentations of clinical scans using text prompts in both zero-shot and weakly supervised settings. To achieve this, we employed a new Decoupled Hard Negative Noise Contrastive Estimation (DHN-NCE) loss to fine-tune the BiomedCLIP model, and the recent gScoreCAM to generate prompts from which SAM produces segmentation masks in a zero-shot setting. Additionally, we explored the use of the zero-shot segmentation labels in a weakly supervised paradigm to further improve segmentation quality. In extensive tests on three diverse segmentation tasks and medical image modalities (breast tumor ultrasound, brain tumor MRI, and lung X-ray), our proposed framework has demonstrated excellent accuracy.

Public datasets used in our study:

- Radiology Objects in COntext (ROCO)

- MedPix

- Breast UltraSound Images (BUSI)

- UDIAT

- COVID-QU-Ex

- Brain Tumors

Install Anaconda by following the Anaconda installation documentation, then create an environment with all required packages and activate it:

conda env create -f medclipsam_env.yml

conda activate medclipsam

Then set up the segment-anything library:

cd segment-anything

pip install -e .

cd ..

Download the model checkpoints for SAM and place them in the segment-anything directory.

Three versions of the SAM model are available, with different backbone sizes. Each version is selected by its model type when the model is instantiated. Download the checkpoint that corresponds to the model type you want to use:

- default or vit_h: ViT-H SAM model.

- vit_l: ViT-L SAM model.

- vit_b: ViT-B SAM model.
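The model types listed above are the keys of the `sam_model_registry` dictionary exposed by the segment-anything package. A minimal sketch of loading a checkpoint, assuming the library has been installed as described earlier; `load_sam` is an illustrative helper that is not part of this repository, and the checkpoint path is a placeholder:

```python
# Model-type keys accepted by segment-anything's sam_model_registry.
SAM_MODEL_TYPES = ("default", "vit_h", "vit_l", "vit_b")


def load_sam(model_type: str, checkpoint: str):
    """Build a SAM model of the given type from a downloaded checkpoint.

    `load_sam` is a hypothetical helper; only `sam_model_registry` comes
    from the segment-anything library.
    """
    if model_type not in SAM_MODEL_TYPES:
        raise ValueError(
            f"unknown model type {model_type!r}; expected one of {SAM_MODEL_TYPES}"
        )
    # Imported lazily so the validation above works before installation.
    from segment_anything import sam_model_registry

    return sam_model_registry[model_type](checkpoint=checkpoint)


# Example call (placeholder path, replace with your downloaded checkpoint):
# sam = load_sam("vit_b", "<path/to/checkpoint>")
```

The checkpoint file must match the chosen model type; a ViT-B checkpoint, for example, should be loaded with `vit_b`.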

Finally, create a directory in the main working directory for the data you want to work with.

Step 1: generate saliency maps for your images with gScoreCAM and BiomedCLIP:

python saliency_maps/visualize_cam.py --cam-version gscorecam --image-folder <path/to/data/dir> --model-domain biomedclip --save-folder <path/to/save/dir>

You can also enter a different text prompt for each image, one by one:

python saliency_maps/visualize_cam.py --cam-version gscorecam --image-folder <path/to/data/dir> --model-domain biomedclip --save-folder <path/to/save/dir> --one-by-one

Step 2: refine the saliency maps with a dense CRF. Pass the Step 1 output directory (the generated saliency maps) as --sal-path:

python crf/densecrf_sal.py --input-path <path/to/data/dir> --sal-path <path/to/saliency/maps> --output-path <path/to/output/dir>

You can also change the CRF settings:

python crf/densecrf_sal.py --input-path <path/to/data/dir> --sal-path <path/to/saliency/maps> --output-path <path/to/output/dir> --gaussian-sxy <gsxy> --bilateral-sxy <bsxy> --bilateral-srgb <bsrgb> --epsilon <epsilon> --m <m> --tau <tau>

Step 3: prompt SAM with the CRF labels. Pass the Step 2 output directory (the produced CRF labels) as --mask-input. In our experiments, we used the ViT-B checkpoint:

python segment-anything/prompt_sam.py --checkpoint <path/to/checkpoint> --model-type vit_b --input <path/to/data/dir> --output <path/to/output/dir> --mask-input <path/to/CRF/masks>

Multi-contour evaluation is also implemented; enable it with --multi-contour:

python segment-anything/prompt_sam.py --checkpoint <path/to/checkpoint> --model-type vit_b --input <path/to/data/dir> --output <path/to/output/dir> --mask-input <path/to/CRF/masks> --multi-contour
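The three commands above feed into one another: the saliency maps go to the CRF step, and the CRF labels go to SAM. A minimal sketch of chaining them from Python, using only the scripts and flags shown above. The helper names (`build_pipeline`, `run_pipeline`) and the `saliency`, `crf`, and `sam` sub-directory names are assumptions made for this example, not part of the repository:

```python
import subprocess
from pathlib import Path


def build_pipeline(data_dir, work_dir, checkpoint,
                   model_type="vit_b", multi_contour=False):
    """Return the three documented commands as argument lists.

    The sub-directories created under `work_dir` are hypothetical names
    chosen for this sketch.
    """
    work = Path(work_dir)
    saliency_dir = str(work / "saliency")
    crf_dir = str(work / "crf")
    sam_dir = str(work / "sam")

    # Step 1: saliency maps from gScoreCAM + BiomedCLIP.
    step1 = ["python", "saliency_maps/visualize_cam.py",
             "--cam-version", "gscorecam",
             "--image-folder", str(data_dir),
             "--model-domain", "biomedclip",
             "--save-folder", saliency_dir]
    # Step 2: CRF post-processing of the saliency maps.
    step2 = ["python", "crf/densecrf_sal.py",
             "--input-path", str(data_dir),
             "--sal-path", saliency_dir,
             "--output-path", crf_dir]
    # Step 3: prompt SAM with the CRF labels.
    step3 = ["python", "segment-anything/prompt_sam.py",
             "--checkpoint", str(checkpoint),
             "--model-type", model_type,
             "--input", str(data_dir),
             "--output", sam_dir,
             "--mask-input", crf_dir]
    if multi_contour:
        step3.append("--multi-contour")
    return [step1, step2, step3]


def run_pipeline(*args, **kwargs):
    """Run the steps in order from the repository root, stopping on failure."""
    for cmd in build_pipeline(*args, **kwargs):
        subprocess.run(cmd, check=True)
```

Calling `run_pipeline("<path/to/data/dir>", "<path/to/output/dir>", "<path/to/checkpoint>")` from the repository root with the `medclipsam` environment active would run all three steps in sequence.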

You can optionally fine-tune the pre-trained BiomedCLIP model using our DHN-NCE loss.
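For intuition, the sketch below shows one way to combine the two ideas named in the loss: decoupling (the positive pair is left out of the contrastive denominator) and hard-negative weighting (negatives that are more similar to the anchor count more). It is written in plain Python for readability and is an illustration under these assumptions, not the repository's implementation; the authoritative definition is in the MedCLIP-SAM paper and the fine-tuning code. The function names and the default `temperature` and `beta` values are illustrative:

```python
import math


def _dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def _one_direction(sim, beta):
    """Decoupled, hardness-weighted contrastive term for one direction.

    sim[i][j] is the temperature-scaled similarity between anchor i and
    candidate j; sim[i][i] is the matched (positive) pair.
    """
    n = len(sim)
    total = 0.0
    for i in range(n):
        negatives = [sim[i][j] for j in range(n) if j != i]
        # Hardness weights: softmax over beta * similarity, rescaled so the
        # weights average to 1. beta = 0 gives uniform weights.
        raw = [math.exp(beta * s) for s in negatives]
        norm = sum(raw)
        weights = [(n - 1) * r / norm for r in raw]
        # Decoupling: the positive pair is excluded from the denominator.
        neg_term = math.log(
            sum(w * math.exp(s) for w, s in zip(weights, negatives))
        )
        total += -sim[i][i] + neg_term
    return total / n  # averaged over the batch in this sketch


def dhn_nce_sketch(image_emb, text_emb, temperature=0.5, beta=0.5):
    """Symmetric image-to-text plus text-to-image loss.

    Row i of `image_emb` and `text_emb` is assumed to be a matched pair of
    L2-normalised embedding vectors.
    """
    if len(image_emb) != len(text_emb) or len(image_emb) < 2:
        raise ValueError("need at least two matched image/text pairs")
    sim = [[_dot(img, txt) / temperature for txt in text_emb]
           for img in image_emb]
    sim_t = [list(col) for col in zip(*sim)]  # text-to-image direction
    return _one_direction(sim, beta) + _one_direction(sim_t, beta)
```

With correctly matched pairs the loss is lower than with mismatched ones, which is the behaviour fine-tuning relies on; a practical version would operate on batched tensors rather than Python lists.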

Special thanks to open_clip, gScoreCAM, pydensecrf, and segment-anything for making their valuable code publicly available.

If you use MedCLIP-SAM, please consider citing:

@article{koleilat2024medclip,

title={MedCLIP-SAM: Bridging Text and Image Towards Universal Medical Image Segmentation},

author={Koleilat, Taha and Asgariandehkordi, Hojat and Rivaz, Hassan and Xiao, Yiming},

journal={arXiv preprint arXiv:2403.20253},

year={2024}

}