AI-powered system for detecting whether a person is wearing a mask and, if not, reading their facial expression

-

Samuel Mohebban (B.A. Psychology - George Washington University)

-

I outline how to build this in my blog.

- In the age of COVID-19, wearing a mask has been a vital tool for stopping the spread of the virus. However, there has been much debate over whether people should be required to wear one.

- Many businesses require all customers to wear a face mask and enforce this rule by refusing entry to anyone not wearing a face covering.

- Even though these rules are clear, many people around the United States have refused to follow them and have caused disruptions at local businesses after being told they cannot enter without a mask.

- This project is meant to address this issue by detecting whether a person is wearing a mask and, if they are not, reading their facial expression to determine whether they are disgruntled.

- Convolutional neural networks analyze each frame of a video to make these detections.

- keras (PlaidML backend, GPU: RX 580 8GB)

- numpy

- opencv

- matplotlib
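Because Keras runs on the PlaidML backend here, the backend has to be selected before Keras is first imported. A minimal sketch, assuming PlaidML is installed and has already been configured for the GPU with its `plaidml-setup` command:

```python
import os

# Select PlaidML as the Keras backend. This must run before the first
# `import keras` in the process; setting it afterwards has no effect.
os.environ["KERAS_BACKEND"] = "plaidml.keras.backend"

# Any `import keras` placed after this point loads the PlaidML backend
# instead of the default one.
```

Setting the variable in the shell (`export KERAS_BACKEND=plaidml.keras.backend`) works just as well and keeps the choice out of the code.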

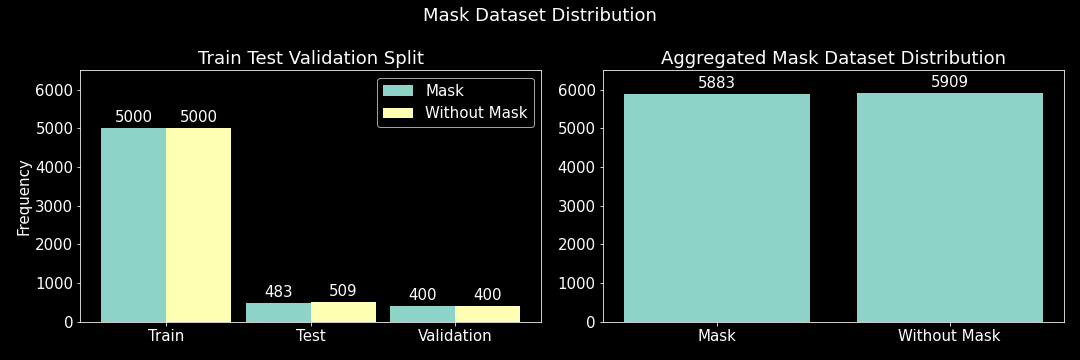

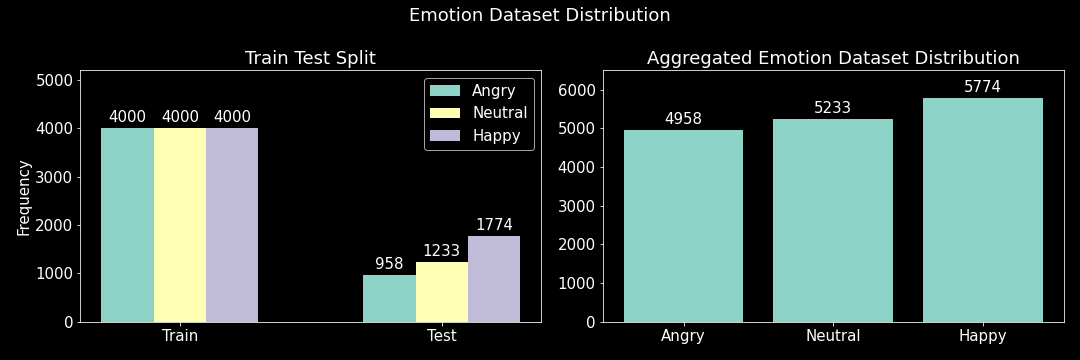

- Two datasets were used to train two neural networks

1. Face Mask: ~12K Images Dataset (Kaggle)

2. Emotion: ~30K Images Dataset (Kaggle)

1. Face Mask Dataset

2. Emotion Dataset

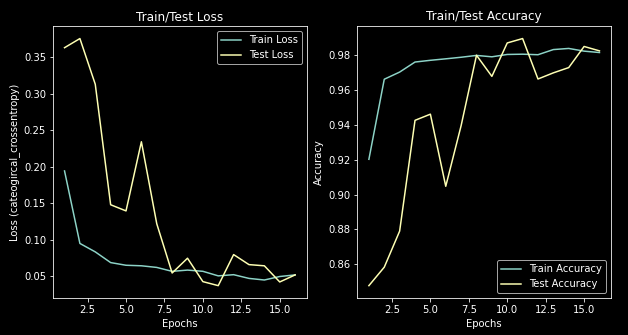

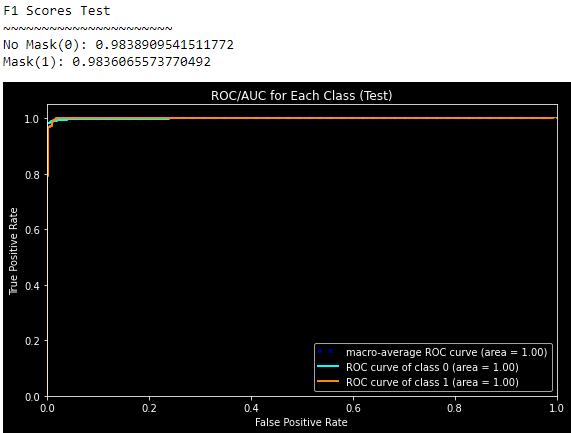

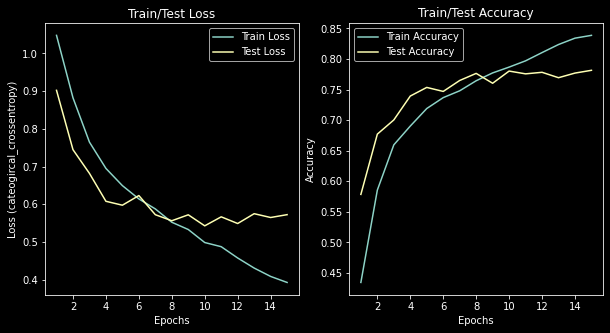

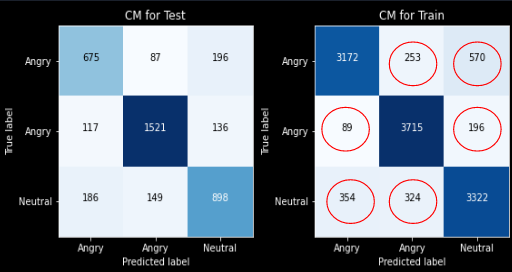

- Early stopping was applied to each model to prevent overfitting

- The data for the models can be loaded with the functions in the Functions.py file

- Visualization functions for the confusion matrix, loss/accuracy curves, and ROC curve can be found in the Viz.py file
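Early stopping in Keras is normally configured as a callback passed to `model.fit`. A minimal sketch; the monitored metric and patience value are assumptions, since the project's settings are not listed:

```python
from keras.callbacks import EarlyStopping

# Stop training once validation loss has not improved for `patience`
# consecutive epochs, then roll the model back to its best epoch's weights.
# monitor="val_loss" and patience=5 are assumed values.
early_stopping = EarlyStopping(
    monitor="val_loss",
    patience=5,
    restore_best_weights=True,
)
```

It would then be used as `model.fit(x_train, y_train, validation_data=(x_val, y_val), epochs=50, callbacks=[early_stopping])`, where the data names and epoch count are placeholders. Monitoring validation loss rather than training loss is what makes the callback guard against overfitting.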

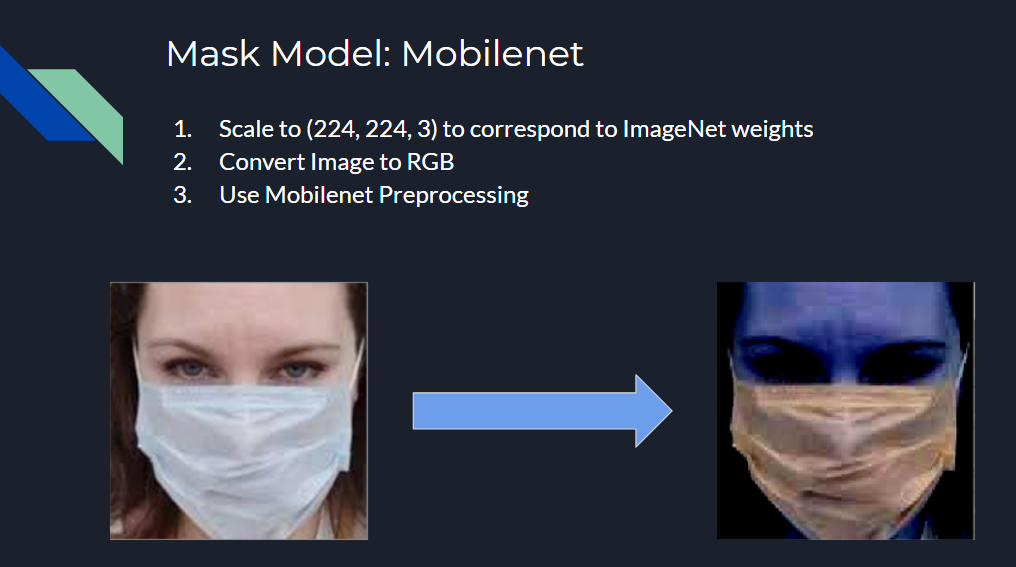

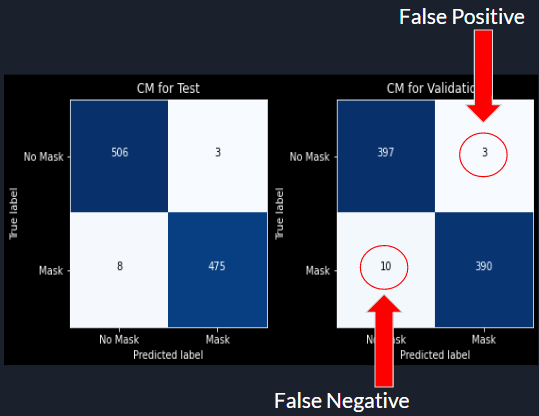
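Viz.py itself is not reproduced here. As a rough stand-in (not the project's code), the numbers behind two of those plots can be computed with plain NumPy: a confusion matrix for either model, and ROC points plus the area under the curve for the mask model's sigmoid scores. The results can then be drawn with matplotlib, e.g. `plt.imshow(matrix)` or `plt.plot(fpr, tpr)`.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, n_classes):
    """Count predictions: rows are true classes, columns are predicted classes."""
    matrix = np.zeros((n_classes, n_classes), dtype=int)
    for true_label, predicted_label in zip(y_true, y_pred):
        matrix[true_label, predicted_label] += 1
    return matrix


def roc_curve_points(y_true, scores):
    """False and true positive rates as the decision threshold is lowered.

    y_true holds 0/1 labels (1 = Mask); scores are the model's sigmoid outputs.
    """
    y_true = np.asarray(y_true)
    scores = np.asarray(scores, dtype=float)
    order = np.argsort(-scores, kind="mergesort")  # highest score first
    y_sorted, s_sorted = y_true[order], scores[order]
    # One ROC point per distinct score: keep the last index of each tied run.
    last = np.r_[np.nonzero(np.diff(s_sorted))[0], len(s_sorted) - 1]
    tps = np.cumsum(y_sorted)[last]
    fps = np.cumsum(1 - y_sorted)[last]
    tpr = np.r_[0.0, tps / y_true.sum()]
    fpr = np.r_[0.0, fps / (len(y_true) - y_true.sum())]
    return fpr, tpr


def auc(fpr, tpr):
    """Area under the ROC curve via the trapezoidal rule."""
    return float(np.sum(np.diff(fpr) * (tpr[1:] + tpr[:-1]) / 2.0))
```

For the emotion model, `y_pred` would be the `argmax` of each softmax output, and `n_classes` would be 3.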

1. Face Mask Detection: MobileNet

- Notebook

- Images were resized to (224, 224)

- The model was initialized with ImageNet weights, uses a sigmoid activation on the output layer, and is trained with binary cross-entropy loss

- MobileNet was trained on the mask dataset

- Data augmentation was applied to the training images to help the model generalize to unseen data

- Predicted classes: No Mask (0) and Mask (1)
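A minimal sketch of a mask model matching the description above, written with the Keras functional API. The global-average-pooling head and the Adam optimizer are assumptions; the source only specifies MobileNet with ImageNet weights, 224x224 inputs, a sigmoid output, and binary cross-entropy.

```python
from keras.applications import MobileNet
from keras.layers import Dense, GlobalAveragePooling2D
from keras.models import Model


def build_mask_model(weights="imagenet"):
    """MobileNet backbone with a single sigmoid unit: 0 = No Mask, 1 = Mask."""
    base = MobileNet(
        weights=weights,
        include_top=False,  # drop the 1000-class ImageNet classifier
        input_shape=(224, 224, 3),
    )
    # Assumed head: pool the final feature maps into one vector per image.
    features = GlobalAveragePooling2D()(base.output)
    output = Dense(1, activation="sigmoid")(features)
    model = Model(inputs=base.input, outputs=output)
    model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])
    return model
```

The backbone is left trainable here, so the ImageNet weights act as a starting point that is fine-tuned on the mask images. Passing `weights=None` builds the same architecture without downloading the pretrained weights.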

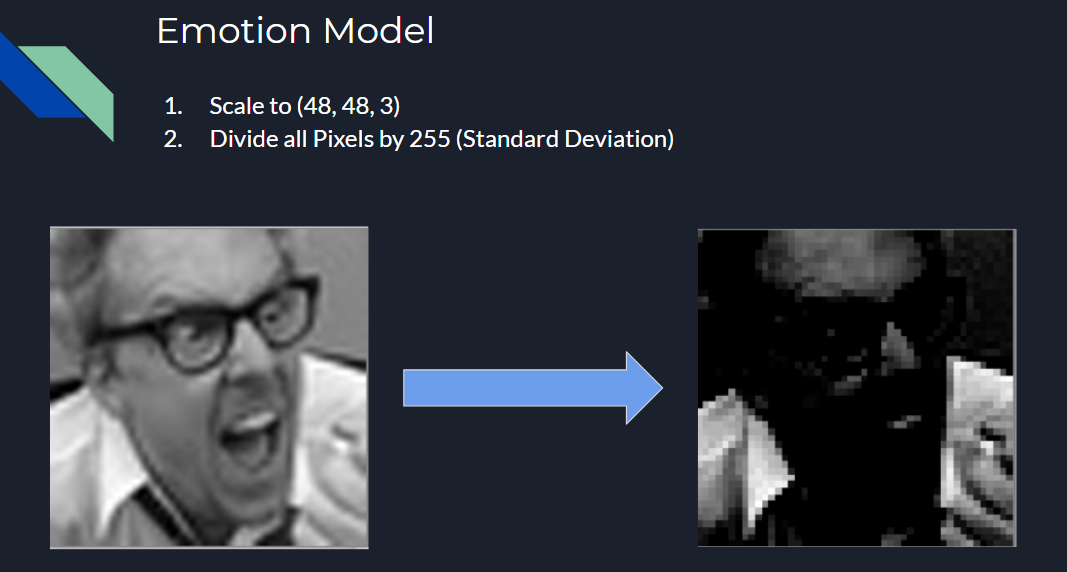

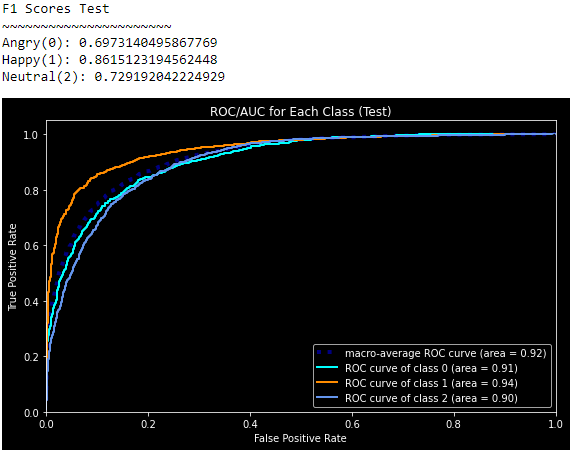
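The augmentation settings used for MobileNet are not listed. As a swapped-in illustration (Keras' `ImageDataGenerator` or its preprocessing layers would usually handle this), random horizontal flips and brightness shifts can be written directly in NumPy; the chosen transforms and the shift size are assumptions:

```python
import numpy as np


def augment_batch(images, rng, max_brightness_shift=0.1):
    """Randomly flip and brighten a batch of images scaled to [0, 1].

    images: float array of shape (batch, height, width, channels).
    The input array is not modified; a new array is returned.
    """
    augmented = images.copy()
    # Mirror roughly half of the images left to right; a mirrored face is
    # still a valid example of the same class.
    flip = rng.random(len(images)) < 0.5
    augmented[flip] = augmented[flip, :, ::-1, :]
    # Shift each image's brightness by its own random amount, then clip
    # back into the valid [0, 1] pixel range.
    shifts = rng.uniform(
        -max_brightness_shift, max_brightness_shift, size=(len(images), 1, 1, 1)
    )
    return np.clip(augmented + shifts, 0.0, 1.0)
```

Calling it on every training batch, e.g. `augment_batch(x_batch, np.random.default_rng())`, shows the model a slightly different version of each image every epoch.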

2. Emotion Detection: Convolutional Neural Network

- Notebook

- Images were resized to (48, 48)

- The output layer uses softmax activation, and the model is trained with categorical cross-entropy loss

- Predicted classes: Angry (0), Happy (1), Neutral (2)

- Notebook
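The emotion model described above can be sketched as a small Keras CNN for 48x48 grayscale faces with a three-way softmax output. The number and size of the layers are illustrative assumptions, not the project's architecture:

```python
from keras.layers import Conv2D, Dense, Dropout, Flatten, MaxPooling2D
from keras.models import Sequential


def build_emotion_model(n_classes=3):
    """Small CNN for 48x48 grayscale faces: Angry(0), Happy(1), Neutral(2).

    The layer sizes are illustrative assumptions, not the project's model.
    """
    model = Sequential([
        Conv2D(32, (3, 3), activation="relu", padding="same",
               input_shape=(48, 48, 1)),
        MaxPooling2D((2, 2)),  # 48 -> 24
        Conv2D(64, (3, 3), activation="relu", padding="same"),
        MaxPooling2D((2, 2)),  # 24 -> 12
        Conv2D(128, (3, 3), activation="relu", padding="same"),
        MaxPooling2D((2, 2)),  # 12 -> 6
        Flatten(),
        Dense(128, activation="relu"),
        Dropout(0.5),
        Dense(n_classes, activation="softmax"),  # one probability per class
    ])
    model.compile(optimizer="adam", loss="categorical_crossentropy",
                  metrics=["accuracy"])
    return model
```

Categorical cross-entropy expects one-hot targets, so integer labels would first be converted with `keras.utils.to_categorical(labels, 3)`.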

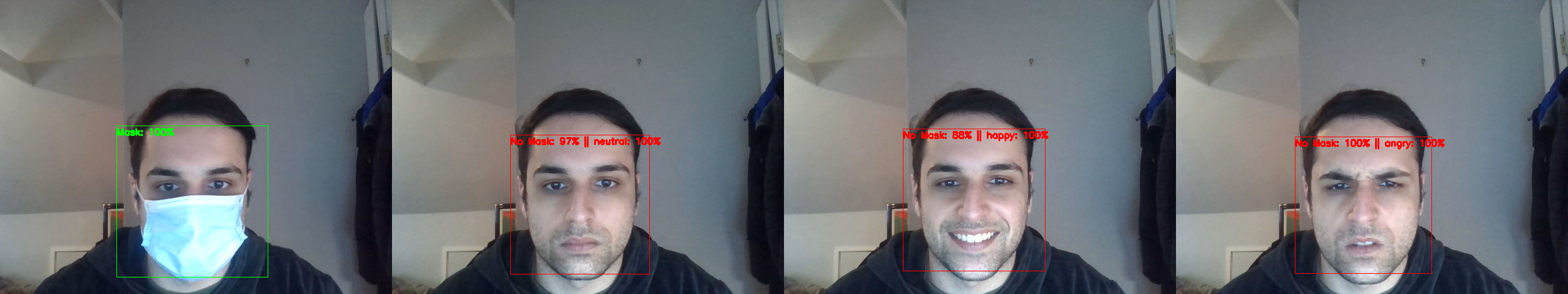

- In this section, the models were applied to live video

- The steps for applying the models to live video were as follows:

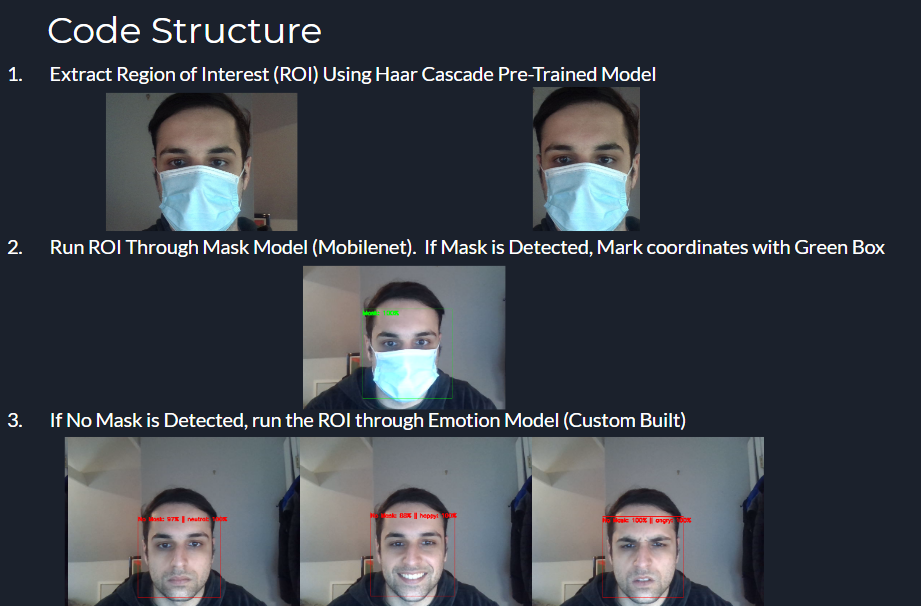

- Use a Haar feature-based cascade classifier to locate the coordinates of each face within a frame

- Extract the ROI (region of interest) of the face using the coordinates given by the classifier

- Make two copies of the ROI, one for the mask model and another for the emotion model

- Resize each copy to the input dimensions used by the corresponding model

- Run the mask model first

- If the model detects a mask, no further prediction is made and a green box is drawn around the face

- If the model does not detect a mask, the algorithm moves on to the emotion model

- Below you can see a .gif of how it works on my local machine

- Use more models (InceptionNet, VGG16, etc.)

- Use a thermal camera to detect heat, which could help determine whether someone has a fever.

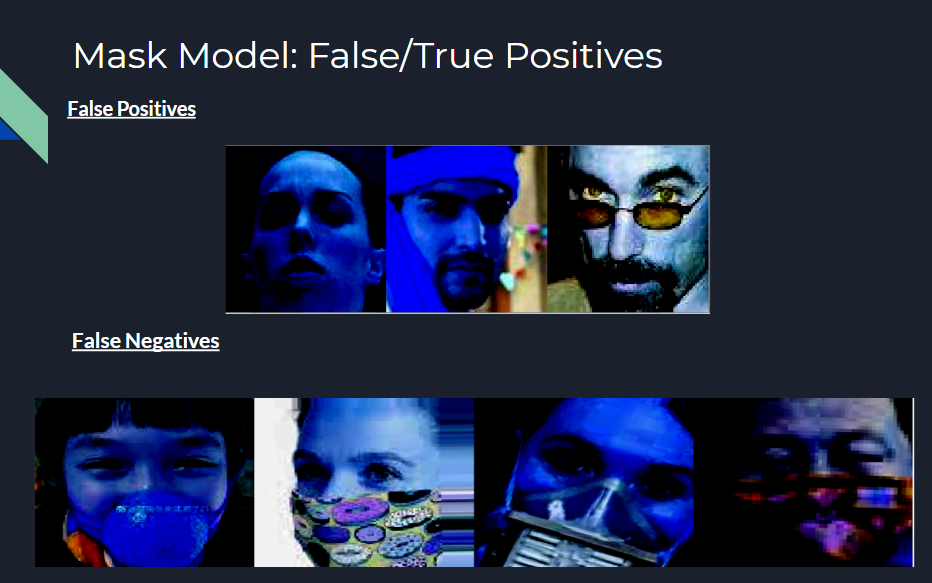

- More data. So far, the mask model has trouble detecting a mask when a person is wearing glasses. Adding more images of people wearing glasses should improve results.