Similarity-weighted Construction of Contextualized Commonsense Knowledge Graphs for Knowledge-intense Argumentation Tasks

This repository provides the code and resources to construct CCKGs as described in our paper Similarity-weighted Construction of Contextualized Commonsense Knowledge Graphs for Knowledge-intense Argumentation Tasks.

Please feel free to send us an email (plenz@cl.uni-heidelberg.de) if you have any questions, comments or feedback.

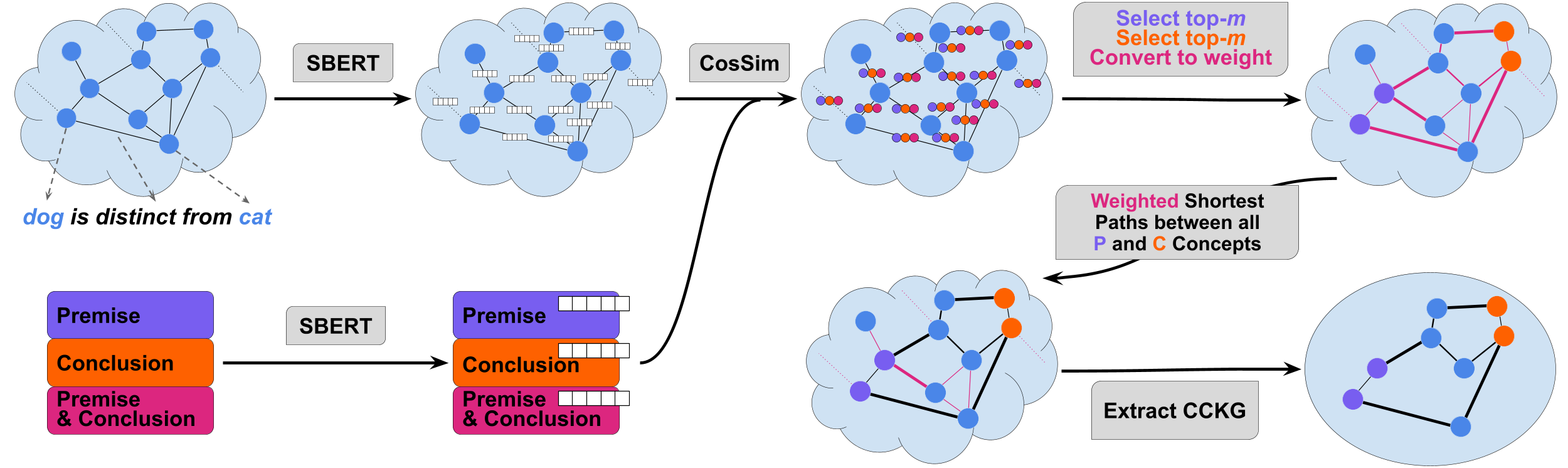

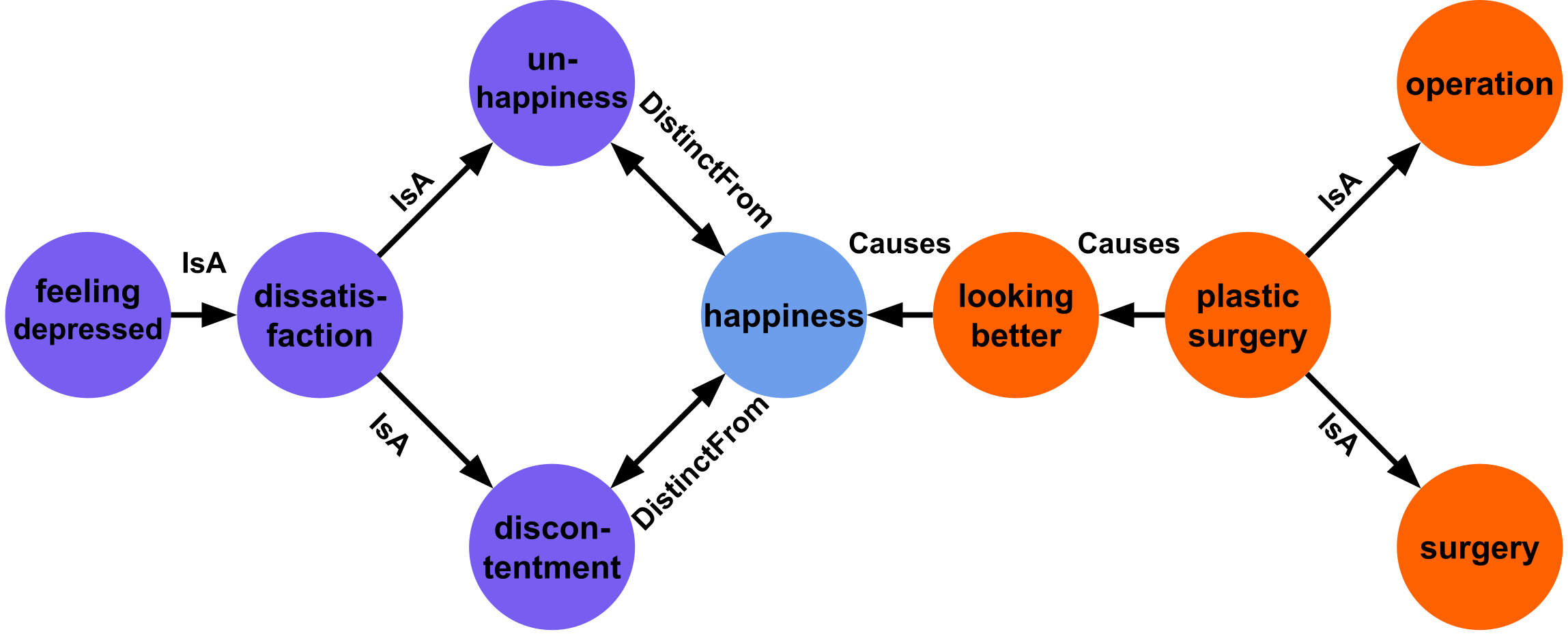

We propose a method to construct Contextualized Commonsense Knowledge Graphs (CCKGs). CCKGs are extracted from a given knowledge graph by constructing similarity-weighted shortest paths, which increase contextualization.
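Here is a minimal, self-contained sketch of a similarity-weighted shortest path search that illustrates the idea. It is not the repository's implementation. It assumes unit-length embeddings, so that the dot product equals cosine similarity. It also assumes that an edge's weight is one minus the similarity between its triplet embedding and the text embedding, which keeps all weights non-negative as Dijkstra's algorithm requires. All names are hypothetical.

```python
import heapq
from typing import Dict, List, Optional, Sequence, Tuple

Vector = Sequence[float]


def dot(a: Vector, b: Vector) -> float:
    """Dot product; equals cosine similarity for unit-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def edge_weight(triplet_emb: Vector, text_emb: Vector) -> float:
    """Assumed weighting: dissimilarity = 1 - cosine similarity (clamped at 0)."""
    return max(0.0, 1.0 - dot(triplet_emb, text_emb))


def weighted_shortest_path(
    edges: Dict[Tuple[str, str], Vector],  # (head, tail) -> triplet embedding
    text_emb: Vector,
    source: str,
    target: str,
) -> Optional[List[str]]:
    """Dijkstra over a directed graph whose edge weights depend on the input text."""
    adjacency: Dict[str, List[Tuple[str, float]]] = {}
    for (head, tail), emb in edges.items():
        adjacency.setdefault(head, []).append((tail, edge_weight(emb, text_emb)))

    dist = {source: 0.0}
    prev: Dict[str, str] = {}
    queue = [(0.0, source)]
    while queue:
        d, node = heapq.heappop(queue)
        if node == target:
            break
        if d > dist.get(node, float("inf")):
            continue  # stale queue entry
        for neighbor, w in adjacency.get(node, []):
            nd = d + w
            if nd < dist.get(neighbor, float("inf")):
                dist[neighbor] = nd
                prev[neighbor] = node
                heapq.heappush(queue, (nd, neighbor))

    if target not in dist:
        return None  # target not reachable from source
    path = [target]
    while path[-1] != source:
        path.append(prev[path[-1]])
    return path[::-1]
```

With this weighting, edges whose triplets are semantically close to the input text become cheap. The search therefore prefers contextually relevant paths over paths that are merely short.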

CCKGs can be used in various domains and tasks. In principle, they can be used whenever text-conditioned subgraphs need to be extracted from a larger graph. However, we have only evaluated our approach on commonsense knowledge graphs and in argumentative applications.

We tested the code with Python 3.8. The required packages are listed in requirements.txt.

If you use Conda, you can run the following commands to create a new environment:

conda create -y -n CCKG python=3.8

conda activate CCKG

pip install -r requirements.txt

For ConceptNet and ExplaKnow, you can download the preprocessed knowledge graphs by running

wget https://www.cl.uni-heidelberg.de/~plenz/CCKG/knowledgegraph.tar.gz

mv knowledgegraph.tar.gz data/

tar -xvzf data/knowledgegraph.tar.gz

rm data/knowledgegraph.tar.gz

If you want to use another knowledge graph, please refer to the instructions for constructing CCKGs from a different knowledge graph below.
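If wget or tar is not available, you can approximate the download and extraction commands above with Python's standard library. This is an illustrative sketch, not a script shipped with the repository, and the function names are hypothetical. Like the shell commands, it extracts the archive relative to the current working directory, so run it from the repository root.

```python
import os
import tarfile
import urllib.request
from typing import List

KG_URL = "https://www.cl.uni-heidelberg.de/~plenz/CCKG/knowledgegraph.tar.gz"


def extract_archive(archive_path: str, extract_to: str = ".") -> List[str]:
    """Extract a .tar.gz archive (like `tar -xvzf`) and return its member names."""
    with tarfile.open(archive_path, "r:gz") as tar:
        names = tar.getnames()
        if hasattr(tarfile, "data_filter"):  # safer extraction where supported
            tar.extractall(path=extract_to, filter="data")
        else:
            tar.extractall(path=extract_to)
    return names


def download_knowledge_graphs(url: str = KG_URL, data_dir: str = "data") -> List[str]:
    """Download the archive into data_dir, extract it relative to the cwd, then delete it."""
    os.makedirs(data_dir, exist_ok=True)
    archive_path = os.path.join(data_dir, os.path.basename(url))
    urllib.request.urlretrieve(url, archive_path)  # wget + mv
    try:
        return extract_archive(archive_path)  # tar -xvzf data/knowledgegraph.tar.gz
    finally:
        os.remove(archive_path)  # rm data/knowledgegraph.tar.gz
```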

You can extract concepts and save them to lookup dictionaries by running

bash_scripts/concept_extractions.sh

In the script, you can adjust the settings, e.g. which task, data split and/or knowledge graph you want to use. The script yields lookup dictionaries that record which concepts belong to which sentences. The keys of the dictionaries are the lower-cased sentences.
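The README does not describe how concepts are matched. The following sketch therefore only illustrates the shape of the resulting lookup: keys are lower-cased sentences and values are lists of concept names. The naive n-gram string matching, the max_ngram parameter and the function names are assumptions for illustration, not the method used by concept_extractions.sh.

```python
import re
from typing import Dict, Iterable, List, Set


def extract_concepts(sentence: str, concept_names: Set[str], max_ngram: int = 3) -> List[str]:
    """Hypothetical extractor: return all word n-grams that are known concept names."""
    tokens = re.findall(r"[a-z0-9']+", sentence.lower())
    found: List[str] = []
    for n in range(max_ngram, 0, -1):  # try longer n-grams first
        for i in range(len(tokens) - n + 1):
            candidate = " ".join(tokens[i:i + n])
            if candidate in concept_names and candidate not in found:
                found.append(candidate)
    return found


def build_lookup(sentences: Iterable[str], concept_names: Set[str]) -> Dict[str, List[str]]:
    """Map each lower-cased sentence to the concepts found in it."""
    return {s.lower(): extract_concepts(s, concept_names) for s in sentences}
```

For example, with the concepts {"nuclear energy", "energy", "pollution"}, the sentence "Nuclear energy reduces pollution." is stored under the key "nuclear energy reduces pollution." with the value ["nuclear energy", "energy", "pollution"].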

Afterwards, run

python python_scripts/combine_lookup_dicts.py

to combine the lookups from the different data splits into a single lookup. Note that you might have to adjust the arguments in python_scripts/combine_lookup_dicts.py.
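Conceptually, combining the lookups is a dictionary merge. The sketch below is hypothetical and does not reproduce combine_lookup_dicts.py. It assumes the per-split lookups are already loaded as Python dictionaries, and it treats a sentence that maps to different concepts in different splits as an error.

```python
from typing import Dict, Iterable, List


def combine_lookup_dicts(lookups: Iterable[Dict[str, List[str]]]) -> Dict[str, List[str]]:
    """Merge per-split lookups; a sentence seen in several splits must map to the same concepts."""
    combined: Dict[str, List[str]] = {}
    for lookup in lookups:
        for sentence, concepts in lookup.items():
            if sentence in combined and combined[sentence] != concepts:
                raise ValueError(f"Conflicting concepts for sentence: {sentence!r}")
            combined[sentence] = concepts
    return combined
```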

Note that if you want to test different settings of CCKGs, you do not have to compute the shortest paths for each of them individually. For example, if you want to experiment with different values of

We first compute the shortest paths and save them as a dictionary in JSONL format. You can compute the shortest paths with

bash_scripts/compute_shortest_paths.sh

Note that this script benefits only marginally from GPUs, so it can be run on CPUs. If you have multiple CPU cores available, you can process different parts of your data in parallel by setting start_index and end_index. Afterwards, you need to combine the resulting files into one file while maintaining the order of lines. This can be done, for example, by

cat fn_index{?,??,???,????,?????}.jsonl > fn.jsonl

where fn needs to be replaced by the correct file name. If you compute all shortest paths in one go (i.e. without creating multiple files), we still advise renaming the file so that its name does not include the index.
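The brace pattern in the cat command is needed because shell globs sort lexicographically. A plain fn_index*.jsonl would place fn_index10.jsonl before fn_index2.jsonl. The brace expansion instead concatenates the one-digit indices first, then the two-digit indices, and so on. The Python sketch below achieves the same by sorting numerically, and it also shows one way to split the data into (start_index, end_index) ranges. It assumes, hypothetically, that each part is named fn_index<N>.jsonl with a numeric N and that end_index is exclusive.

```python
import glob
import re
from typing import List, Tuple


def chunk_ranges(n_instances: int, n_workers: int) -> List[Tuple[int, int]]:
    """Split [0, n_instances) into contiguous (start_index, end_index) ranges."""
    if n_workers < 1:
        raise ValueError("n_workers must be positive")
    if n_instances <= 0:
        return []
    size = -(-n_instances // n_workers)  # ceiling division
    return [(s, min(s + size, n_instances)) for s in range(0, n_instances, size)]


def merge_jsonl_parts(prefix: str, output_path: str) -> int:
    """Concatenate <prefix>_index<N>.jsonl files in numeric order of N; return the line count."""
    pattern = re.compile(re.escape(prefix) + r"_index(\d+)\.jsonl$")
    parts = []
    for path in glob.glob(glob.escape(prefix) + "_index*.jsonl"):
        match = pattern.search(path)
        if match:
            parts.append((int(match.group(1)), path))

    n_lines = 0
    with open(output_path, "w", encoding="utf-8") as out:
        for _, path in sorted(parts):  # numeric order: 2 comes before 10
            with open(path, encoding="utf-8") as part:
                for line in part:
                    out.write(line if line.endswith("\n") else line + "\n")
                    n_lines += 1
    return n_lines
```

For example, chunk_ranges(10, 3) yields [(0, 4), (4, 8), (8, 10)], i.e. three ranges that together cover every instance exactly once.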

You can prune the graphs by running

bash_scripts/prune_graphs.sh

Note that in the script you have to provide the path to the shortest path dict computed in the previous step, as well as several parameters that you already used to compute it, for example the number of shortest paths.

As with the shortest path computation, you can prune graphs in parallel by setting a start_index. In that case, you need to combine or rename the output files accordingly.

An example script for loading CCKGs into igraph is provided in bash_scripts/load_graphs.sh and load_shortest_path_dict.py.
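A CCKG can be thought of as the union of the computed paths. The following sketch turns a list of paths into vertex names and a deduplicated directed edge list. The input format, with each path given as a list of concept names, is an assumption and not necessarily the structure of the stored shortest path dict. See load_shortest_path_dict.py for the actual loading code.

```python
from typing import Dict, List, Sequence, Set, Tuple


def paths_to_edge_list(paths: Sequence[Sequence[str]]) -> Tuple[List[str], List[Tuple[int, int]]]:
    """Union of paths -> (vertex names, deduplicated directed edges as index pairs)."""
    index: Dict[str, int] = {}
    edges: List[Tuple[int, int]] = []
    seen: Set[Tuple[int, int]] = set()
    for path in paths:
        for concept in path:
            index.setdefault(concept, len(index))  # assign ids in order of appearance
        for head, tail in zip(path, path[1:]):
            edge = (index[head], index[tail])
            if edge not in seen:  # keep a single edge per concept pair
                seen.add(edge)
                edges.append(edge)
    names = list(index)  # dicts preserve insertion order, so names[i] has id i
    return names, edges
```

With python-igraph installed, the result could then be turned into a graph with ig.Graph(n=len(names), edges=edges, directed=True, vertex_attrs={"name": names}).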

To construct CCKGs on different data, you need to implement your own datahandler and add it to the argparse options for the datahandler. A template is provided at the end of methods/data_handler.py. Parts that need to be changed are marked with # TDND, which stands for # ToDo New Data. You need to implement __init__, which defines the relevant paths; load_raw_data, which loads the data from disk into RAM as a list; and get_premise_and_conclusion, which returns the premise and the conclusion given the index of a data instance. The comments describe how to deal with (non-argumentative) data that has no premise and conclusion. Finally, you need to add your new datahandler to str_to_datahandler.
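Below is a hypothetical minimal datahandler illustrating the three methods described above. It assumes a tab-separated file per data split with premise and conclusion columns. The class name, constructor arguments and file layout are placeholders, and the real template in methods/data_handler.py may require additional methods or a base class. The sketch also assumes that str_to_datahandler is a dictionary from names to classes.

```python
import csv
import os
from typing import Dict, List, Tuple


class MyDataHandler:
    """Hypothetical datahandler for a new dataset (see the template in methods/data_handler.py)."""

    def __init__(self, datasplit: str, data_dir: str = "data/my_dataset"):
        # TDND: define the relevant paths (here: one TSV file per data split)
        self.data_path = os.path.join(data_dir, f"{datasplit}.tsv")
        self.data: List[Dict[str, str]] = []

    def load_raw_data(self) -> List[Dict[str, str]]:
        # TDND: load the data from disk into RAM as a list
        with open(self.data_path, encoding="utf-8", newline="") as f:
            self.data = list(csv.DictReader(f, delimiter="\t"))
        return self.data

    def get_premise_and_conclusion(self, index: int) -> Tuple[str, str]:
        # TDND: return the premise and the conclusion of the instance at `index`
        instance = self.data[index]
        return instance["premise"], instance["conclusion"]


# Hypothetical registration so that the handler can be selected by name
str_to_datahandler = {"my_dataset": MyDataHandler}
```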

Afterwards, you can pass your new datahandler to the scripts for concept extraction, shortest path computation, pruning and loading.

To construct CCKGs from a different knowledge graph, you first need to create the knowledge graph and then specify it in the datahandler(s).

The knowledge graph has to be saved as a directed igraph graph. Multiple edges between two concepts should be merged into a single edge in the graph. The graph has the following features:

- graph feature
  - sBERT_uri(*): URI of the SBERT model that was used
- node features
  - name: name of the concept
  - embedding(*): SBERT embedding of the concept (not always necessary)
- edge features
  - relation: list of all relations between the two concepts
  - nl_relation(*): list of the verbalizations of the triplets
  - embedding(*): list of the SBERT embeddings of the triplets

Features marked with (*) can be added with add_embedding_to_knowledgegraph.py. Make sure that the embeddings have an L2 norm of 1, and normalize them if necessary.
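When embeddings have unit L2 norm, the dot product of two embeddings equals their cosine similarity, which is presumably why the code expects normalized embeddings. A minimal pure-Python sketch of the normalization:

```python
import math
from typing import List, Sequence


def l2_normalize(vector: Sequence[float], eps: float = 1e-12) -> List[float]:
    """Scale a vector to unit L2 norm; near-zero vectors are returned unchanged."""
    norm = math.sqrt(sum(x * x for x in vector))
    if norm < eps:
        return list(vector)
    return [x / norm for x in vector]
```

If the embeddings are computed with sentence-transformers, SentenceTransformer.encode(..., normalize_embeddings=True) returns unit-length vectors directly. For NumPy arrays, dividing by np.linalg.norm(emb, axis=-1, keepdims=True) has the same effect.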

Please refer to the provided knowledge graphs for an example.
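The requirement that multiple edges between two concepts become a single edge, with relation and nl_relation stored as lists, can be sketched as follows. The triplet input format and the verbalization templates are hypothetical and may differ from those in the provided knowledge graphs.

```python
from typing import Dict, Iterable, List, Tuple

Triplet = Tuple[str, str, str]  # (head concept, relation, tail concept)

# Hypothetical verbalization templates; unknown relations fall back to their raw name
VERBALIZE = {"IsA": "is a", "RelatedTo": "is related to", "CapableOf": "is capable of"}


def merge_triplets(triplets: Iterable[Triplet]) -> Dict[Tuple[str, str], Dict[str, List[str]]]:
    """Collapse all triplets between the same (head, tail) pair into one directed edge."""
    edges: Dict[Tuple[str, str], Dict[str, List[str]]] = {}
    for head, relation, tail in triplets:
        edge = edges.setdefault((head, tail), {"relation": [], "nl_relation": []})
        if relation not in edge["relation"]:  # skip duplicate triplets
            edge["relation"].append(relation)
            verb = VERBALIZE.get(relation, relation)
            edge["nl_relation"].append(f"{head} {verb} {tail}")
    return edges
```

Each merged entry corresponds to one edge in the directed graph, with its relation and nl_relation lists as edge features. The SBERT-based (*) features would then be added on top, e.g. with add_embedding_to_knowledgegraph.py.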

Please consider citing our paper if it or this repository was beneficial to your work:

@inproceedings{plenz-etal-2023-similarity,
    title = "Similarity-weighted Construction of Contextualized Commonsense Knowledge Graphs for Knowledge-intense Argumentation Tasks",
    author = "Plenz, Moritz and
      Opitz, Juri and
      Heinisch, Philipp and
      Cimiano, Philipp and
      Frank, Anette",
    booktitle = "Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics",
    year = "2023",
    address = "Toronto, Canada",
    publisher = "Association for Computational Linguistics",
}