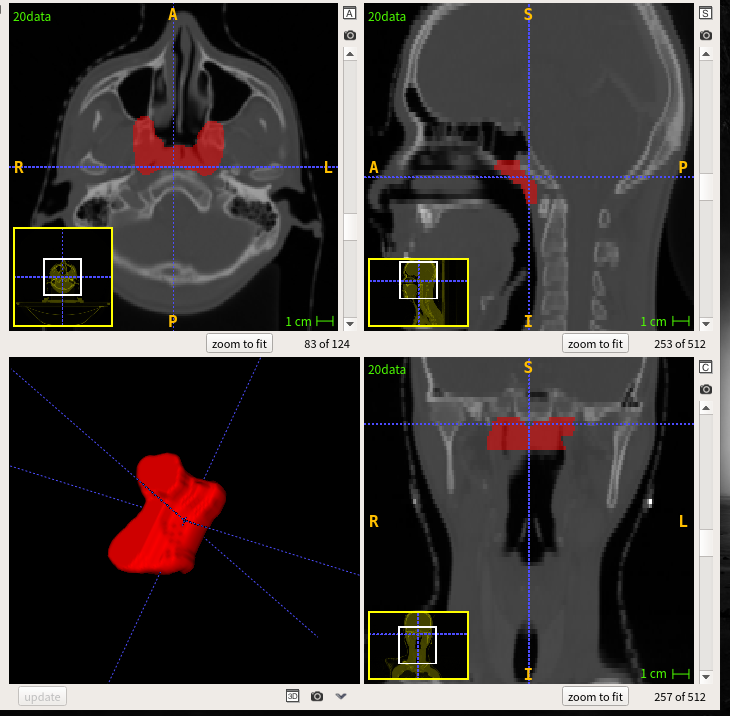

This repository provides source code for automatic segmentation of the Gross Target Volume (GTV) of Nasopharynx Cancer (NPC) from CT images, as described in the following paper:

- [1] Haochen Mei, Wenhui Lei, Ran Gu, Shan Ye, Zhengwentai Sun, Shichuan Zhang and Guotai Wang. "Automatic Segmentation of Gross Target Volume of Nasopharynx Cancer using Ensemble of Multiscale Deep Neural Networks with Spatial Attention." Neurocomputing, accepted, 2020.

The code requires the following:

- PyTorch version 0.4.1

- TensorboardX to visualize training performance

- Common Python packages such as NumPy, Pandas, and SimpleITK

In this repository, we use a 2.5D U-Net to segment the Gross Target Volume (GTV) of Nasopharynx Cancer (NPC) from CT images. First, we download the images and edit the configuration file for training and testing. During training, we use TensorBoard to observe the performance of the network at different iterations. We then apply the trained model to the testing images and obtain quantitative evaluation results.

- The dataset can be downloaded from the StructSeg2019 Challenge. It consists of 50 CT images of GTV. Download the images and save them into a single folder, such as `/origindata`.

- Create two folders in your `saveroot`, such as `saveroot/data` and `saveroot/label`. Then set `dataroot` and `saveroot` and run `python movefiles.py` in the `Data_preprocessing` folder to save the images and the annotations into these two folders, respectively.

- Create three folders in your `saveroot`, one for each image scale, and create two folders in each of them, such as `saveroot/small_sacle/data` and `saveroot/small_sacle/label`. Run `python preprocess.py` in the `Data_preprocessing` folder to preprocess the images and annotations and save them into the corresponding folders.

- Set `saveroot` according to your computer in `examples/miccai/write_csv_files.py` and run `python write_csv_files.py` to randomly split the 50 images into training (40), validation (10) and testing (10) sets. The validation set and the testing set are the same in our experimental setting, so the same 10 images are counted in both. The output CSV files are saved in `config`.
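The random split described above can be sketched as follows. This is a minimal illustration, not the contents of `write_csv_files.py`: the function names, the case file names, the CSV column names, and the seed are all assumptions made for the example.

```python
import csv
import random


def split_cases(case_names, n_valid=10, seed=2019):
    """Shuffle the case names and hold out n_valid of them.

    The held-out cases serve as both the validation and the testing set,
    mirroring the experimental setting described above.
    Returns (training_cases, held_out_cases).
    """
    names = sorted(case_names)   # fixed starting order for reproducibility
    rng = random.Random(seed)    # local RNG, leaves the global state untouched
    rng.shuffle(names)
    return names[n_valid:], names[:n_valid]


def write_csv(path, case_names, data_dir="data", label_dir="label"):
    """Write one row per case: relative image path, relative label path."""
    with open(path, "w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(["image", "label"])  # hypothetical column names
        for name in case_names:
            writer.writerow([f"{data_dir}/{name}", f"{label_dir}/{name}"])


if __name__ == "__main__":
    # Hypothetical file names; the real names come from your saveroot.
    cases = [f"case_{i:02d}.nii.gz" for i in range(1, 51)]
    train, valid = split_cases(cases)
    write_csv("train.csv", train)
    write_csv("valid.csv", valid)
    write_csv("test.csv", valid)  # testing set is the same as the validation set
```

Fixing the seed keeps the split reproducible across runs, which matters when comparing models trained at different times.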

- Set the value of `root_dir` to your `GTV_root` in `config/train_test.cfg`. Add the path of `PyMIC` to the `PYTHONPATH` environment variable (if you haven't done this already). Then start training by running the following commands:

      export PYTHONPATH=$PYTHONPATH:your_path_of_PyMIC
      python ../../pymic/train_infer/train_infer.py train config/train_test.cfg

- During or after training, run `tensorboard --logdir model/2D5unet`. The output contains a link such as `http://your-computer:6006`. Open the link in a browser to observe the average Dice score and the loss during the training stage.
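If you prefer to script the `root_dir` change from the training step rather than edit the file by hand, something like the following may work. It is only a sketch: it assumes `config/train_test.cfg` is an INI-style file that Python's `configparser` can read and that `root_dir` lives in a section named `dataset`. The helper name `set_root_dir` is hypothetical, so check the section name against your own config file before using it.

```python
import configparser


def set_root_dir(cfg_path, root_dir, section="dataset", option="root_dir"):
    """Rewrite one option of an INI-style config file in place.

    The section and option names are assumptions; adjust them to your file.
    """
    parser = configparser.ConfigParser(interpolation=None)
    parser.optionxform = str  # preserve the case of option names
    if not parser.read(cfg_path):
        raise FileNotFoundError(cfg_path)
    if not parser.has_section(section):
        raise KeyError(f"no [{section}] section in {cfg_path}")
    parser.set(section, option, root_dir)
    with open(cfg_path, "w") as f:
        parser.write(f)  # note: comment lines in the file are not preserved


if __name__ == "__main__":
    # Hypothetical path; replace it with your own GTV_root.
    set_root_dir("config/train_test.cfg", "/path/to/GTV_root")
```

Because `configparser` drops comment lines when writing, keep a backup of the original file if its comments matter to you.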

- After training, run the following commands to obtain segmentation results for your testing images:

      mkdir result
      python ../../pymic/train_infer/train_infer.py test config/train_test.cfg

  Alternatively, you can directly download the pre-trained models: Unet2D5 (https://pan.baidu.com/s/1RCHojd0MXM1NBoBtA1plQw, extraction code: 5u2i) and our proposed model (https://pan.baidu.com/s/14UIRIHdsI8pFbIjv2GyKgw, extraction code: ax2p). Put the weights in `examples/miccai/model/` and run the testing phase as above. These two models achieve Dice scores of 0.6216 and 0.6504, respectively. Model ensembling is not used in either of them.

- Then replace `ground_truth_folder` with your own `GTV_root/label` in `config/evaluation.cfg`, and run the following command to obtain quantitative evaluation results in terms of Dice:

      python ../../pymic/util/evaluation.py config/evaluation.cfg

  You can also set `metric = assd` in `config/evaluation.cfg` and run the evaluation command again to obtain average symmetric surface distance (ASSD) results.
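For readers unfamiliar with the Dice metric reported above, the sketch below computes it for two binary masks with NumPy, using the standard definition Dice = 2|P ∩ G| / (|P| + |G|). It is an illustration, not the code in `pymic/util/evaluation.py`. The function name and the toy volumes are made up for the example, and returning 1.0 when both masks are empty is one possible convention.

```python
import numpy as np


def dice_score(prediction, ground_truth):
    """Dice = 2 * |P ∩ G| / (|P| + |G|) for two binary masks of equal shape."""
    p = np.asarray(prediction).astype(bool)
    g = np.asarray(ground_truth).astype(bool)
    if p.shape != g.shape:
        raise ValueError("masks must have the same shape")
    denom = p.sum() + g.sum()
    if denom == 0:
        return 1.0  # both masks empty: treated here as perfect agreement
    return float(2.0 * np.logical_and(p, g).sum() / denom)


if __name__ == "__main__":
    # Toy 4x4x4 volumes: the prediction has 8 voxels, the ground truth has 12,
    # and they overlap in 8 voxels, so Dice = 2 * 8 / (8 + 12) = 0.8.
    pred = np.zeros((4, 4, 4), dtype=np.uint8)
    gt = np.zeros((4, 4, 4), dtype=np.uint8)
    pred[1:3, 1:3, 1:3] = 1
    gt[1:3, 1:3, 1:4] = 1
    print(dice_score(pred, gt))
```

A Dice score of 1 means the predicted and annotated volumes coincide exactly, and 0 means they do not overlap at all.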