This neural system for image captioning is roughly based on the paper "Show, Attend and Tell: Neural Image Caption Generation with Visual Attention" by Xu et al. (ICML 2015). The input is an image, and the output is a sentence describing the content of the image. It uses a convolutional neural network to extract visual features from the image and an LSTM recurrent neural network to decode these features into a sentence. A soft attention mechanism is incorporated to improve the quality of the captions. This project is implemented with the TensorFlow library and allows end-to-end training of both the CNN and RNN parts.
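The soft attention step can be sketched in NumPy as follows. It follows the additive attention formulation of Xu et al.: score each image region against the previous LSTM hidden state, normalize the scores with a softmax, and take the weighted average of the region features as the context vector. This is an illustration only, not this project's TensorFlow code. The parameter names (`W_f`, `W_h`, `b`, `w`) and the hidden and attention sizes are assumptions. The 100 × 2048 feature shape mirrors the region-proposal features produced during dataset preparation.

```python
import numpy as np


def soft_attention(features, hidden, W_f, W_h, b, w):
    """Additive soft attention over image regions (illustrative sketch).

    features: (L, D) array, one D-dim feature vector per region.
    hidden:   (H,) previous LSTM hidden state.
    W_f: (D, K), W_h: (H, K), b: (K,), w: (K,) are hypothetical learned parameters.
    Returns (context, alpha): context has shape (D,), alpha has shape (L,) and sums to 1.
    """
    # Score every region against the current decoder state.
    scores = np.tanh(features @ W_f + hidden @ W_h + b) @ w  # (L,)
    # Numerically stable softmax turns the scores into attention weights.
    exp_scores = np.exp(scores - scores.max())
    alpha = exp_scores / exp_scores.sum()
    # The context vector is the attention-weighted average of the region features.
    context = alpha @ features  # (D,)
    return context, alpha


rng = np.random.default_rng(0)
L, D, H, K = 100, 2048, 512, 256  # 100 regions x 2048-dim features; H and K are assumed
features = rng.standard_normal((L, D))
hidden = rng.standard_normal(H)
W_f = rng.standard_normal((D, K)) * 0.01
W_h = rng.standard_normal((H, K)) * 0.01
b = np.zeros(K)
w = rng.standard_normal(K) * 0.01

context, alpha = soft_attention(features, hidden, W_f, W_h, b, w)
print(context.shape, alpha.shape)
```

At each decoding step the context vector is fed to the LSTM together with the previous word, and `alpha` can be plotted over the regions to see which parts of the image the model attends to for each word.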

- TensorFlow
- NumPy
- OpenCV
- Natural Language Toolkit (NLTK)
- Pandas
- Matplotlib
- tqdm

- Tips:

- Delete all `__pycache__` folders under the current directory:

find . -name '__pycache__' -type d -prune -exec rm -rf {} +

- Dataset preparation:

- Download the Faster R-CNN (ResNet-50, COCO) checkpoint:

cd data

wget http://download.tensorflow.org/models/object_detection/faster_rcnn_resnet50_coco_2018_01_28.tar.gz

tar -xzf faster_rcnn_resnet50_coco_2018_01_28.tar.gz

- Export a frozen inference graph from the checkpoint:

cd ../code/

export PYTHONPATH=$PYTHONPATH:./object_detection/

python ./object_detection/export_inference_graph.py \
  --input_type image_tensor \
  --pipeline_config_path ../data/faster_rcnn_resnet50_coco_2018_01_28/pipeline.config \
  --trained_checkpoint_prefix ../data/faster_rcnn_resnet50_coco_2018_01_28/model.ckpt \
  --output_directory ../data/faster_rcnn_resnet50_coco_2018_01_28/exported_graphs

cp ../data/faster_rcnn_resnet50_coco_2018_01_28/exported_graphs/frozen_inference_graph.pb ../data

- Download the COCO dataset (skip this step if you already have it):

OUTPUT_DIR="/home/zisang/im2txt"

sh ./dataset/download_mscoco.sh ../data/coco

- Extract a feature for each region proposal (100 × 2048):

- For COCO, run the following command:

DATASET_DIR="/home/zisang/Documents/code/data/mscoco/raw-data"

OUTPUT_DIR="/home/zisang/im2txt/data/coco"

python ./dataset/build_data.py \
  --graph_path="../data/frozen_inference_graph.pb" \
  --dataset "coco" \
  --train_image_dir="${DATASET_DIR}/train2014" \
  --val_image_dir="${DATASET_DIR}/val2014" \
  --train_captions_file="${DATASET_DIR}/annotations/captions_train2014.json" \
  --val_captions_file="${DATASET_DIR}/annotations/captions_val2014.json" \
  --output_dir="${OUTPUT_DIR}" \
  --word_counts_output_file="${OUTPUT_DIR}/word_counts.txt"

- For Flickr8k:

DATASET_DIR="/home/zisang/Documents/code/data/Flicker8k"

OUTPUT_DIR="/home/zisang/im2txt/data/flickr8k"

python ./dataset/build_data.py \
  --graph_path "../data/frozen_inference_graph.pb" \
  --dataset "flickr8k" \
  --min_word_count 2 \
  --image_dir "${DATASET_DIR}/Flicker8k_Dataset/" \
  --text_path "${DATASET_DIR}/" \
  --output_dir "${OUTPUT_DIR}" \
  --train_shards 32 \
  --num_threads 8

- Training:

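First make sure you are in the `code` folder and have set the parameters in `config.py`. Make sure `--input_file_pattern` matches the shard files that `build_data.py` actually wrote (the Flickr8k command above uses `--train_shards 32`). Then run a command like this: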

python train.py --input_file_pattern='../data/flickr8k/train-?????-of-00016' \
  --number_of_steps=10000 \
  --attention='bias' \
  --optimizer='Adam' \
  --train_dir='../output/model'

To monitor the progress of training, run the following command:

tensorboard --logdir='../output/model'

- Evaluation: To evaluate a trained model on the Flickr8k validation data, run a command like this:

python eval.py --input_file_pattern='../data/flickr8k/val-?????-of-00008' \
  --checkpoint_dir='../output/model' \
  --eval_dir='../output/eval' \
  --min_global_step=10 \
  --num_eval_examples=32 \
  --beam_size=3

The results are printed to stdout. In addition, the generated captions are saved in the file `output/val/flickr30_results.json`.
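Both `train.py` and `eval.py` select their input through a glob such as `val-?????-of-00008`, where each `?` matches exactly one character. The sketch below assumes that `build_data.py` names its output files `<split>-NNNNN-of-MMMMM`, as these patterns suggest, and uses Python's `fnmatch` to emulate the shell-style glob. `shard_names` and `matching_shards` are hypothetical helpers for illustration, not part of this project.

```python
import fnmatch


def shard_names(prefix, num_shards):
    """Hypothetical file names following the '<prefix>-NNNNN-of-MMMMM' convention."""
    return [f"{prefix}-{i:05d}-of-{num_shards:05d}" for i in range(num_shards)]


def matching_shards(pattern, names):
    """Return the names that a shell-style glob pattern would select."""
    return [name for name in names if fnmatch.fnmatch(name, pattern)]


# Assume 32 training shards were written (as with --train_shards 32).
written = shard_names("train", 32)

print(len(matching_shards("train-?????-of-00016", written)))  # pattern expects 16 shards
print(len(matching_shards("train-?????-of-00032", written)))  # matches the written count
print(len(matching_shards("train-*", written)))               # looser pattern
```

Under this naming assumption, 32 written shards are not selected by an `-of-00016` pattern at all, while `-of-00032` or a looser `train-*` selects every shard.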

- Inference:

You can use the trained model to generate captions for any JPEG image. Put the images in the folder `test/images` and run a command like this:

python main.py --phase=test \
  --model_file='../output/models/xxxxxx.npy' \
  --beam_size=3

The generated captions will be saved in the folder `test/results`.
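`--beam_size` sets how many partial captions the decoder keeps at each step. The plain-Python sketch below shows a generic beam search. It assumes a hypothetical `step_fn(prefix)` that returns next-word log-probabilities; in the real model these would come from the attention LSTM. The toy probability table stands in for that model and is invented purely for illustration. This is not the project's decoder code.

```python
import math


def beam_search(step_fn, start, end, beam_size=3, max_len=20):
    """Generic beam search over token sequences.

    step_fn(prefix) -> dict mapping each next token to its log-probability.
    Keeps the `beam_size` highest-scoring partial captions at every step.
    """
    beams = [([start], 0.0)]  # (tokens, cumulative log-probability)
    finished = []
    for _ in range(max_len):
        candidates = []
        for tokens, score in beams:
            for token, logp in step_fn(tokens).items():
                candidates.append((tokens + [token], score + logp))
        # Keep only the best `beam_size` expansions; completed ones leave the beam.
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = []
        for tokens, score in candidates[:beam_size]:
            if tokens[-1] == end:
                finished.append((tokens, score))
            else:
                beams.append((tokens, score))
        if not beams:
            break
    finished.extend(beams)  # fall back to unfinished beams if max_len is reached
    return max(finished, key=lambda c: c[1])


# Toy "language model": a fixed table of next-token probabilities.
TABLE = {
    "<s>": {"a": 0.6, "the": 0.4},
    "a": {"dog": 0.55, "cat": 0.45},
    "the": {"dog": 0.9, "cat": 0.1},
    "dog": {"runs": 0.7, "</s>": 0.3},
    "cat": {"</s>": 1.0},
    "runs": {"</s>": 1.0},
}


def toy_step(prefix):
    return {token: math.log(p) for token, p in TABLE[prefix[-1]].items()}


tokens, score = beam_search(toy_step, "<s>", "</s>", beam_size=3)
greedy_tokens, greedy_score = beam_search(toy_step, "<s>", "</s>", beam_size=1)
print(" ".join(tokens[1:-1]), round(math.exp(score), 3))
print(" ".join(greedy_tokens[1:-1]), round(math.exp(greedy_score), 3))
```

With `beam_size=1` the search reduces to greedy decoding, which here commits early to "a dog" and ends with a lower-probability caption than the one found with a beam of 3. This sketch scores captions by raw log-probability; many captioning decoders also apply length normalization, which it omits.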

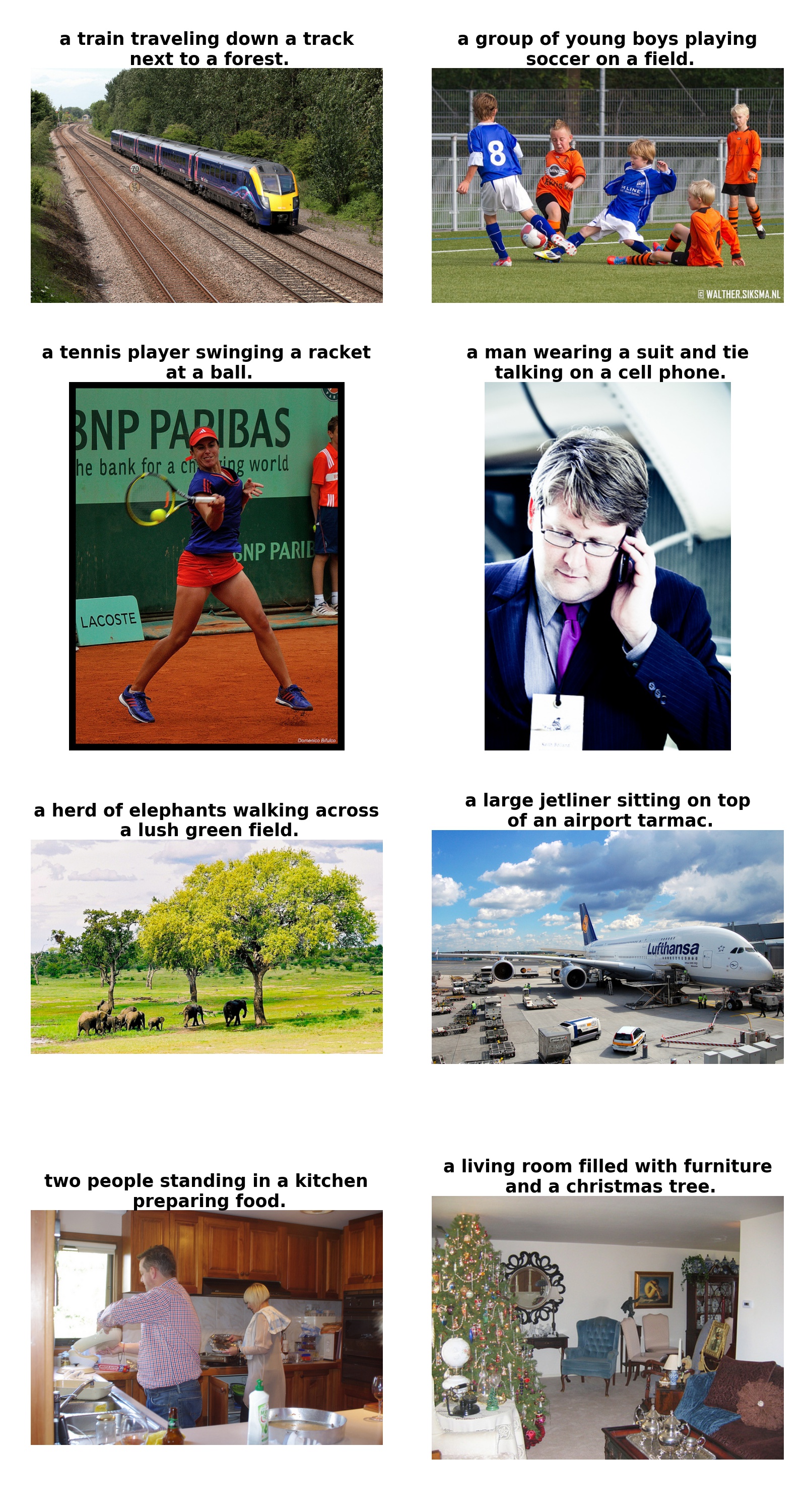

A pretrained model with default configuration can be downloaded here. This model was trained solely on the COCO train2014 data. It achieves the following BLEU scores on the COCO val2014 data (with beam size=3):

- BLEU-1 = 70.3%

- BLEU-2 = 53.6%

- BLEU-3 = 39.8%

- BLEU-4 = 29.5%
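BLEU-n is the geometric mean of the clipped n-gram precisions for orders 1 through n, multiplied by a brevity penalty for captions shorter than their references. The pure-Python sketch below implements the standard corpus-level formula with uniform weights and no smoothing. It only illustrates the metric: the scores above may have been computed with a different implementation (for example NLTK's `corpus_bleu` or the COCO caption evaluation code), and tokenization or smoothing differences can change the numbers. The example captions are made up.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Count the n-grams (as tuples) in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def corpus_bleu(hypotheses, references, max_n=4):
    """Cumulative corpus-level BLEU-max_n with uniform weights and no smoothing.

    hypotheses: list of token lists, one per image.
    references: list of lists of token lists (several reference captions per image).
    """
    matches = [0] * max_n
    totals = [0] * max_n
    hyp_len = ref_len = 0
    for hyp, refs in zip(hypotheses, references):
        hyp_len += len(hyp)
        # Closest reference length (ties broken toward the shorter reference).
        ref_len += min((abs(len(r) - len(hyp)), len(r)) for r in refs)[1]
        for n in range(1, max_n + 1):
            hyp_counts = ngrams(hyp, n)
            # Clip each n-gram count by its maximum count in any single reference.
            max_ref = Counter()
            for r in refs:
                max_ref |= ngrams(r, n)
            matches[n - 1] += sum(min(c, max_ref[g]) for g, c in hyp_counts.items())
            totals[n - 1] += max(len(hyp) - n + 1, 0)
    if min(matches) == 0:
        return 0.0  # without smoothing, any zero precision gives BLEU = 0
    log_precision = sum(math.log(m / t) for m, t in zip(matches, totals)) / max_n
    brevity_penalty = 1.0 if hyp_len > ref_len else math.exp(1 - ref_len / hyp_len)
    return brevity_penalty * math.exp(log_precision)


hyps = [["a", "dog", "runs", "on", "grass"]]
refs = [[["a", "dog", "runs", "on", "the", "grass"],
         ["a", "dog", "is", "running", "outside"]]]
for n in range(1, 5):
    print(f"BLEU-{n} = {corpus_bleu(hyps, refs, max_n=n):.1%}")
```

Precisions are summed over the whole corpus before taking the geometric mean, which is why corpus BLEU is not the average of per-caption BLEU scores.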

Here are some captions generated by this model:

- Show, Attend and Tell: Neural Image Caption Generation with Visual Attention. Kelvin Xu, Jimmy Ba, Ryan Kiros, Kyunghyun Cho, Aaron Courville, Ruslan Salakhutdinov, Richard Zemel, Yoshua Bengio. ICML 2015.

- The original implementation in Theano

- An earlier implementation in TensorFlow

- Microsoft COCO dataset