Contact: nmajeske@iu.edu

HydroLearn contains submodules (other repositories) which must be cloned for full functionality. This repository and all submodules may be cloned at once using the --recurse-submodules flag. See examples below:

git clone https://github.com/HipGraph/HydroLearn_Dev.git --recurse-submodules

git clone git@github.com:HipGraph/HydroLearn_Dev.git --recurse-submodules

HydroLearn and its dependencies are best handled using Anaconda and Pip. The script Setup.py is meant to install all dependencies and integrate all submodules (correcting import statements, etc.). First, users will need to create their conda environment:

conda create -n HydroLearn python=3.9

Once created, activate this environment and install/integrate all dependencies using the following:

conda activate HydroLearn

python Setup.py install_dependencies <pytorch_version(1.12.0)> <cuda_version(11.3)>

python Setup.py integrate_submodules

Dependency installation with Setup.py defaults to PyTorch 1.12.0 and CUDA 11.3, but other versions may be supplied as the second and third arguments. HydroLearn is implemented in the default versions, and conflicts are likely to arise with other PyTorch and CUDA versions.

HydroLearn may be executed simply by invoking the Execute script:

python Execute.py

This will execute HydroLearn using all default settings defined in Variables.py. To change the execution of HydroLearn, users can provide command-line arguments similar to the example below:

python Execute.py --training:train true --evaluation:evaluate true --evaluation:evaluated_checkpoint \"Best\"

This invocation executes HydroLearn in train and evaluation modes and evaluates the checkpoint named "Best". HydroLearn uses a custom argument parser (implemented in Arguments.py) to convert command-line arguments to an equivalent Container instance (more on Containers below). This parser uses a custom command-line argument syntax, which is defined below. The example command-line arguments given above will be parsed into a container object of the following structure:

-> training

train = True

-> evaluating

evaluate = True

evaluated_checkpoint = "Best"

In this case, the parsed container consists of two child containers named "training" and "evaluating", which hold the variable names and values specified by our command-line arguments. Command-line arguments take the following syntactic form:

--<sub-container_path:><partition__>arg_name arg_value

where <> indicates an optional field and elements include:

- The argument flag "--"

- The sub-container path for hierarchical arguments. For example, --child:grandchild:arg_name arg_value denotes an argument by name "arg_name" and value "arg_value" located in a sub-container "grandchild" of sub-container "child". Note that this path is colon-separated and must include a trailing colon if used. The equivalent non-hierarchical argument form is --arg_name arg_value

- The partition this argument name-value pair is assigned to

- The argument name (arg_name)

- The argument value (arg_value)
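The syntax above can be illustrated with a small sketch. This is not the parser from Arguments.py: `parse_args` is a hypothetical helper that uses plain nested dictionaries instead of Container objects, keeps every value as a raw string, and assumes that argument names themselves never contain a double underscore.

```python
def parse_args(argv):
    """Parse ['--path:partition__name', 'value', ...] into nested dicts.

    Leaves are stored under (name, partition) keys; partition is None when omitted.
    """
    if len(argv) % 2 != 0:
        raise ValueError("arguments must come in name-value pairs")
    root = {}
    for flag, value in zip(argv[0::2], argv[1::2]):
        if not flag.startswith("--"):
            raise ValueError(f"expected a '--' flag, got {flag!r}")
        # Everything before the last colon is the sub-container path.
        *path, name = flag[2:].split(":")
        partition = None
        if "__" in name:
            partition, name = name.split("__", 1)
        node = root
        for key in path:
            node = node.setdefault(key, {})
        node[(name, partition)] = value
    return root


parsed = parse_args(["--training:train", "true", "--child:grandchild:train__a", "1"])
print(parsed)
```

A non-hierarchical argument such as `--a 1` ends up at the top level of the returned dictionary, which mirrors the equivalent non-hierarchical form described above.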

After parsing command-line arguments to an equivalent container, HydroLearn merges this container into the default variables container (defined in Variables.py) and executes on this new variable set. Note that merging container B into container A consists of replacing the values of all common variables in A with the values of variables in container B. By default, hierarchical variables are merged in a one-to-one fashion but non-hierarchical variables are merged recursively. More specifically, non-hierarchical variables are propagated down all sub-containers, replacing the value for each common variable name. This functionality allows users to set the value for all instances of a variable by providing a single non-hierarchical argument rather than repeating the argument for each hierarchical instance.
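The merge behavior described above can be sketched with plain nested dictionaries standing in for containers. The function names are hypothetical and this is not HydroLearn's implementation. It also makes two assumptions the text does not settle: variables missing from A are ignored, and hierarchical values are applied after propagated ones so that the more specific argument wins.

```python
def merge(a, b):
    """Merge dict b into dict a in place, following the rules sketched above."""
    flat = {k: v for k, v in b.items() if not isinstance(v, dict)}
    children = {k: v for k, v in b.items() if isinstance(v, dict)}
    _propagate(a, flat)        # non-hierarchical: applied at every depth of a
    _merge_exact(a, children)  # hierarchical: applied only at the matching path
    return a


def _propagate(node, flat):
    for key, value in node.items():
        if isinstance(value, dict):
            _propagate(value, flat)
        elif key in flat:
            node[key] = flat[key]


def _merge_exact(a, b):
    for key, value in b.items():
        if key not in a:
            continue  # assumption: only variables common to both are replaced
        if isinstance(value, dict) and isinstance(a[key], dict):
            _merge_exact(a[key], value)
        elif not isinstance(value, dict) and not isinstance(a[key], dict):
            a[key] = value


defaults = {
    "seed": 0,
    "training": {"train": False, "seed": 0},
    "evaluation": {"evaluate": False, "inner": {"seed": 0}},
}
merged = merge(defaults, {"seed": 7, "training": {"train": True}})
print(merged)
```

Here the single top-level `seed` replaces every `seed` in the hierarchy, while `train` is changed only inside `training`.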

The Driver.py script supplies additional functionality for execution of HydroLearn including distributed, experiment, and analysis execution.

Manually entering command-line arguments is time-consuming and impractical for complex settings. To this end, the Experimentation package allows users to define experiments (argument sets for multiple invocations) as a module. With an experiment module Experimentation/MyExperiments.py, Driver.py can execute HydroLearn for each argument set defined in an experiment using:

python Driver.py --E \"MyExperiments\" --e 1

Here --E specifies the experiment module and --e specifies the experiment of that module to execute. Consider using the example module Experimentation/ExperimentTemplate.py to get started. Users may wish to view the argument sets of their experiment without execution of the pipeline. This may be achieved by the following command:

python Driver.py --E \"MyExperiments\" --e 1 --driver:debug true

It is common for the results of an experiment to require some form of processing to produce a final product (plots, tables, etc). To this end, the Analysis package allows users to define all post-processing routines for an experiment as a module. With an analysis module Analysis/MyAnalysis.py, Driver.py can execute all post-processing routines for an experiment using:

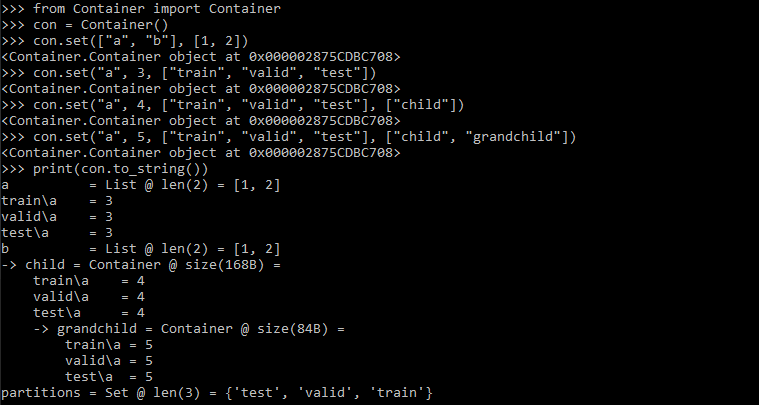

python Driver.py --A \"MyAnalysis\" --a 1

Here --A specifies the analysis module and --a specifies the post-processing routine of that module to execute. Consider using the example module Analysis/AnalysisTemplate.py to get started.

To maintain flexibility and manage the namespace, HydroLearn relies heavily on the Container class to store runtime data. Simply put, containers are Python dictionaries with added functionality. Specifically, this added functionality facilitates the creation of hierarchical data structures with built-in partitioning. Below are just a few examples of working with the Container class:

# Basic operations for var "a" with value 1

con = Container()

con.set("a", 1)

a = con.get("a")

con.rem("a")

# Basic operations for var "a" with value 1 under partition "train"

con = Container()

con.set("a", 1, partition="train")

train_a = con.get("a", partition="train")

con.rem("a", "train")

# Basic operations for var "a" with value 1 under partition "train" in child container "child"

con = Container()

con.set("a", 1, partition="train", context="child")

train_a = con.get("a", partition="train", context="child")

con.rem("a", partition="train", context="child")

# Operations on lists of vars and on partition wildcards

con = Container()

con.set(["a", "b"], 1, "train") # Vars with same value & partition

a, b = con.get(["a", "b"], "train")

con.rem(["a", "b"], "train")

con.set(["a", "b"], 1, ["train", "test"]) # Vars with same value but different partitions

a, b = con.get(["a", "b"], ["train", "test"])

con.rem(["a", "b"], ["train", "test"])

con.set("a", 1, "*") # Var with any existing partition

a_vals = con.get("a", "*")

con.rem("a", "*")
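To make the idea of a partitioned, hierarchical dictionary more concrete, the sketch below shows one way such a structure could be built. `ToyContainer` is a hypothetical stand-in, not the class from Container.py. It borrows only the `set`/`get`/`rem` names and the `partition`/`context` keywords from the examples above, and it does not model list arguments or the "*" wildcard.

```python
class ToyContainer:
    """Toy illustration: variables keyed by (name, partition), children keyed by name."""

    def __init__(self):
        self._vars = {}      # (name, partition) -> value
        self._children = {}  # child name -> ToyContainer

    def _target(self, context, create=False):
        # Resolve which container an operation applies to.
        if context is None:
            return self
        if create:
            return self._children.setdefault(context, ToyContainer())
        return self._children[context]

    def set(self, name, value, partition=None, context=None):
        self._target(context, create=True)._vars[(name, partition)] = value
        return self

    def get(self, name, partition=None, context=None):
        return self._target(context)._vars[(name, partition)]

    def rem(self, name, partition=None, context=None):
        del self._target(context)._vars[(name, partition)]


con = ToyContainer()
con.set("a", 1)
con.set("a", 2, partition="train")
con.set("a", 3, partition="train", context="child")
values = (
    con.get("a"),
    con.get("a", partition="train"),
    con.get("a", partition="train", context="child"),
)
print(values)
```

Keying each variable by both name and partition is what lets the same name hold different values for, say, training and testing without the two colliding.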


In order to feed new data into HydroLearn, users will need to complete the following steps:

- Verify data format

Dataset loading is currently implemented for spatial and spatiotemporal data. Loading assumes each data file is comma-separated (.csv) and requires the following format:

- Spatial Data

For spatial data containing S spatial elements and F spatial features, loading requires the file to contain S lines of F comma-separated features.

- Spatiotemporal Data

For spatiotemporal data containing T time-steps, S spatial elements, and F spatiotemporal features, loading requires the file to contain T x S lines of F comma-separated features.

For both spatial and spatiotemporal data, spatial elements must be listed contiguously (see Data/WabashRiver/Observed/Spatiotemporal.csv). Finally, labels for each time-step and spatial element are required.
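Before adding a spatiotemporal file, it can help to confirm that it really contains one row per combination of time-step and spatial element. The sketch below is a standalone check, not part of HydroLearn. It assumes the file has a header row and two label columns, and the column names "date" and "site" are made up for illustration. It does not check row ordering.

```python
import csv
import io


def check_spatiotemporal(rows, time_col, space_col):
    """Return (T, S, F) for label-keyed rows, or raise ValueError if the grid is incomplete."""
    if not rows:
        raise ValueError("no data rows")
    times = {row[time_col] for row in rows}
    spaces = {row[space_col] for row in rows}
    pairs = {(row[time_col], row[space_col]) for row in rows}
    # T x S lines are required, one per (time-step, spatial element) pair.
    if len(rows) != len(times) * len(spaces) or len(pairs) != len(rows):
        raise ValueError(
            f"expected {len(times)} x {len(spaces)} unique rows, found {len(rows)}"
        )
    n_features = len(rows[0]) - 2  # every column except the two label columns
    return len(times), len(spaces), n_features


# Hypothetical file contents used only for this example.
sample = """date,site,flow,precip
2000-01-01,1,0.5,0.0
2000-01-02,1,0.6,1.2
2000-01-01,2,1.5,0.0
2000-01-02,2,1.4,1.1
"""
rows = list(csv.DictReader(io.StringIO(sample)))
print(check_spatiotemporal(rows, "date", "site"))  # (2, 2, 2)
```

For a real file, `io.StringIO(sample)` would be replaced by an open file handle, and the label column names by those used in the dataset.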


- Create a dataset directory and add data files

All datasets are stored in their own sub-directory under Data. Simply create a new directory under Data and add all data files to it.

- Implement a DatasetVariables module

The pipeline recognizes datasets by searching the Data directory (recursively) for all instances of DatasetVariables.py. Users must implement this module and place the script file at the root of their dataset directory. As an example, the Wabash River ground truth dataset is set up with its DatasetVariables.py module in Data/WabashRiver/Observed/. To facilitate user implementation of the DatasetVariables module, a template is included under Data/DatasetVariablesTemplate.py and lists all variables that must be defined. It is recommended that users start with this template and follow the Wabash River DatasetVariables module as an example.

In order to add new models to HydroLearn, users will need to complete the following steps:

- Implement a Model module

The pipeline recognizes models by searching the Models directory (non-recursively) for all modules with the exception of __init__.py, Model.py and ModelTemplate.py. Model operations including initialization, optimization, prediction, etc., are defined and operated by the model itself while HydroLearn simply calls a select few functions. Currently, HydroLearn is designed assuming models are implemented in PyTorch but is flexible enough to allow the incorporation of models implemented in TensorFlow (see Models/GEOMAN.py). As a result, models currently implemented for HydroLearn inherit from a Model class implemented in the Models/Model.py module and this class inherits from PyTorch's torch.nn.Module. To facilitate user implementation of model modules, a template is included under Models/ModelTemplate.py. It is recommended that users start with this template and follow the LSTM model module under Models/LSTM.py as an example.
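The discovery rule described above (a non-recursive search that skips three reserved files) can be approximated in a few lines. This is only a sketch of the rule as stated, not the pipeline's actual lookup code. `find_model_modules` is a hypothetical name, and the example builds a temporary directory rather than touching a real Models folder.

```python
import tempfile
from pathlib import Path

EXCLUDED = {"__init__.py", "Model.py", "ModelTemplate.py"}


def find_model_modules(models_dir):
    """Return sorted module names for .py files directly inside models_dir."""
    return sorted(
        path.stem
        for path in Path(models_dir).glob("*.py")  # glob("*.py") does not descend
        if path.name not in EXCLUDED
    )


with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    for name in ["__init__.py", "Model.py", "ModelTemplate.py", "LSTM.py", "GEOMAN.py"]:
        (root / name).touch()
    (root / "nested").mkdir()
    (root / "nested" / "Ignored.py").touch()
    found = find_model_modules(root)

print(found)  # ['GEOMAN', 'LSTM']
```

A practical consequence of the non-recursive search is that a new model file placed in a sub-directory of Models would not be picked up.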

- Analysis/Analysis.py : Defines the base analysis class from which user-defined analysis classes must inherit.

- Arguments.py : A module defining the argument parser and builder which parses command-line arguments into a Container object or builds command-line arguments from a Container object respectively.

- Container.py : Hierarchical container class to facilitate partitioning of data. Widely used across HydroLearn as most data classes inherit from this class.

- Data/Data.py : The all-encompassing data module that manages loading, caching, and processing of all datasets.

- Data/Graph.py : Defines all routines for loading, pre-processing, partitioning, etc. for graphical/geometrical data.

- Data/SpatialData.py : Defines all routines for loading, pre-processing, partitioning, etc. for data that is only spatially distributed.

- Data/SpatiotemporalData.py : Defines all routines for loading, pre-processing, partitioning, etc. for data that is both spatially and temporally distributed.

- Driver.py : Handles execution of HydroLearn including distributed (or non-distributed) invocation of Execute.py, invocation of experiments, and invocation of analysis.

- Experimentation/Experiment : Defines the base experiment class from which user-defined experiment classes must inherit.

- Execute.py : The pipeline script which defines data loading, pre-processing, model training/evaluation, plotting, and more.

- Models/Model.py : The module for PyTorch machine learning models. All models implemented will inherit from the Model class and, by inheritance, PyTorch's Module class. See Models/LSTM.py for an example.

- Plotting.py : Defines all plotting routines.

- Utility.py : Defines all miscellaneous/common routines.

- Variables.py : Defines all variables and their default values.