Paper 🤗Data 🤗Rating Reward Model 🤗Ranking Reward Model

Authors: Hritik Bansal, John Dang, Aditya Grover

pip install -r requirements.txt

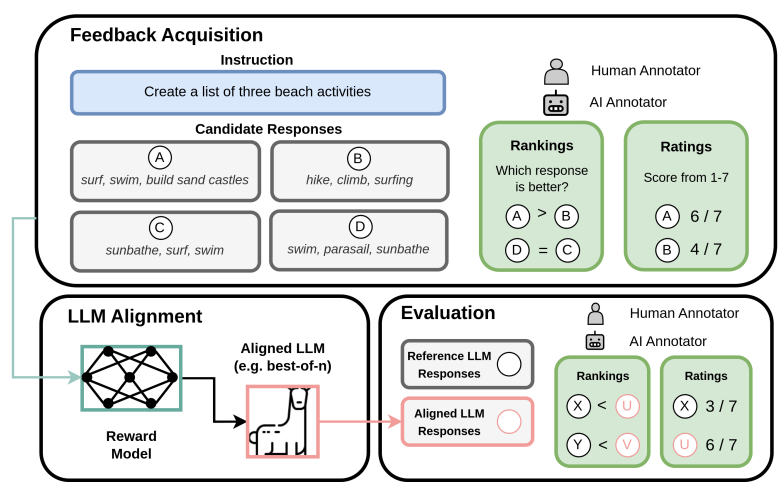

You can access the large-scale ratings and rankings feedback data at 🤗 hf datasets

- The first step is to collect instructions data in the `instructions.json` format.

- The file should be formatted as:

[

{"instruction": instruction_1, "input": input_1 (Optional as many instances may not have any input)},

{"instruction": instruction_2, "input": input_2},

.

.

]

- In our work, we merge three instructions datasets: a subset of Dolly, UserOrient Self Instruct, and a subset of SuperNI-V2. Feel free to create your custom instructions dataset. Our subset of SuperNI-V2 is present here.
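The file format described above can be produced with a few lines of Python. This is a minimal sketch, not the repository's own tooling: the helper name `write_instructions` and the two example records are hypothetical, and the only thing taken from the text is the layout (a JSON list of objects with an `instruction` key and an optional `input` key).

```python
import json

# Hypothetical example records; "input" is optional and left out when there is none.
records = [
    {"instruction": "Summarize the following sentence.",
     "input": "The quick brown fox jumps over the lazy dog."},
    {"instruction": "Name three primary colors."},
]

def write_instructions(records, path):
    """Validate instruction records and write them as a JSON list."""
    cleaned = []
    for rec in records:
        if not rec.get("instruction"):
            raise ValueError("every record needs a non-empty 'instruction'")
        item = {"instruction": rec["instruction"]}
        if rec.get("input"):  # keep "input" only when it is present and non-empty
            item["input"] = rec["input"]
        cleaned.append(item)
    with open(path, "w", encoding="utf-8") as f:
        json.dump(cleaned, f, indent=2, ensure_ascii=False)
    return cleaned
```

Calling `write_instructions(records, "instructions.json")` writes the list to disk and returns the cleaned records.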

- Once the `data.json` is ready, we use the Alpaca-7B model to generate five responses for each instance.

- Use the original Alpaca repo to set up the environment and download the model checkpoints.

- Use the inference_alpaca script to generate a CSV file containing the columns instructions, input, response1, response2, response3, response4, response5.

- Sample Alpaca generation is present here.

- Convert the `json` file to a `csv` file using the conversion code here:

python utils/convert_data_to_csv_ratings.py --input data/alpaca_generation/sample_generation.json --output data/alpaca_generation/ratings_sample_generation.csv

- Run `llm_feedback_ratings.py` using the following sample command:

OPENAI_API_KEY=<Your OAI API KEY> python llm_feedback_ratings.py --input_csv ../data/alpaca_generation/ratings_sample_generation.csv --save_feedback_csv ../data/alpaca_generation/feedback_ratings_sample_generation.csv

- Convert the `json` file to a `csv` file using the conversion code here:

python utils/convert_data_to_csv_rankings.py --input data/alpaca_generation/sample_generation.json --output data/alpaca_generation/rankings_sample_generation.csv

- Run `llm_feedback_rankings.py` using the following sample command:

OPENAI_API_KEY=<Your OAI API KEY> python scripts/llm_feedback_rankings.py --input_csv data/alpaca_generation/rankings_sample_generation.csv --save_feedback_csv data/alpaca_generation/feedback_rankings_sample_generation.csv

Run `consistency.py` with the following command:

python consistency.py --ratings_csv <ratings csv w/ AI feedback> --rankings_csv <rankings csv w/ AI feedback>

We use Alpaca-7B as the backbone for the ratings and rankings reward models. Please set up the Alpaca checkpoints before moving forward. We use a single A6000 GPU to train our reward models. Since we use the HF Trainer, it should be straightforward to extend the code to multiple GPUs.

Some of the code is adapted from Alpaca-LoRA; thanks to its developers!

- Split your ratings feedback data into `train.csv` and `val.csv`.

- Set up a wandb dashboard for logging purposes.

- Sample command to get started with the reward model:

CUDA_VISIBLE_DEVICES=4 python train.py --output_dir <dir> --train_input_csv <train.csv> --test_input_csv <val.csv> --model_name <path to alpaca 7b>

- You should see the trained checkpoints being stored in your `output_dir` after every epoch.
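The train/validation split in the first step of this list is left to the reader. One way to do it with only the standard library is sketched below. The function name `split_csv`, the 10% default validation fraction, and the fixed seed are assumptions for illustration, not values taken from this repository.

```python
import csv
import random

def split_csv(input_csv, train_csv, val_csv, val_fraction=0.1, seed=0):
    """Shuffle the rows of input_csv and write train/val CSVs with the same header."""
    with open(input_csv, newline="", encoding="utf-8") as f:
        reader = csv.reader(f)
        header = next(reader)
        rows = list(reader)

    # Seeded shuffle so the split is reproducible across runs.
    random.Random(seed).shuffle(rows)
    n_val = max(1, int(len(rows) * val_fraction))  # keep at least one validation row
    splits = {val_csv: rows[:n_val], train_csv: rows[n_val:]}

    for path, subset in splits.items():
        with open(path, "w", newline="", encoding="utf-8") as f:
            writer = csv.writer(f)
            writer.writerow(header)
            writer.writerows(subset)
    return len(rows) - n_val, n_val  # (number of train rows, number of val rows)
```

This splits at the row level. If the same instruction appears in several rows, you may prefer to group rows by instruction before splitting so that no instruction ends up in both files.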

Our pretrained Checkpoint is present 🤗 here. Please note that you need to make a few changes to the code to load this checkpoint as mentioned here.

- Firstly, we convert the rankings feedback data into a suitable format for reward model training. Run convert_data_for_ranking_rm.py.

python convert_data_for_ranking_rm.py --input ../data/alpaca_generation/feedback_rankings_sample_generation.csv --output ../data/alpaca_generation/feedback_rankings_for_rm_sample.json

- The output of the above code would be:

[

'i_1': {'sentences': [[a1, a2], [a3, a4]...]}

...

'i_n': {'sentences': [[b1, b2], [b3, b4]...]}

]

where [a1, a2] and [a3, a4] are pairs of responses for the instruction i_1 such that a1 is preferred over a2 and a3 is preferred over a4.
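To make the pair format concrete, the sketch below groups pairwise judgments by instruction and orders each pair as [preferred, rejected]. It is not the code in `convert_data_for_ranking_rm.py`: the function name and the input keys (`instruction`, `response1`, `response2`, `preference`) are hypothetical, and the result is built as a dictionary keyed by instruction, which is an assumption about the notation shown above.

```python
from collections import defaultdict

def build_ranking_rm_data(feedback_rows):
    """Group pairwise judgments by instruction as [preferred, rejected] pairs.

    feedback_rows: iterable of dicts with the hypothetical keys
    'instruction', 'response1', 'response2', and 'preference' (1 or 2).
    """
    grouped = defaultdict(lambda: {"sentences": []})
    for row in feedback_rows:
        first, second = row["response1"], row["response2"]
        if row["preference"] == 1:
            pair = [first, second]   # response1 was preferred
        elif row["preference"] == 2:
            pair = [second, first]   # response2 was preferred
        else:
            continue                 # skip ties or unparsable judgments
        grouped[row["instruction"]]["sentences"].append(pair)
    return dict(grouped)
```

Putting the preferred response first in every pair means the training code never needs a separate label column.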

- Split the created json into `train.json` and `val.json`. Sample command to launch the training:

CUDA_VISIBLE_DEVICES=4 python train.py --per_device_train_batch_size 1 --output_dir <output_dir> --train_input <train.json> --test_input <val.json> --model_name <path to alpaca 7b> --learning_rate 1e-4 --run_name test --gradient_accumulation_steps 4

- You should see the trained checkpoints being stored in your `output_dir` after every epoch.

- Our pretrained checkpoint is present 🤗 here. Please note that you need to make a few changes to the code to load this checkpoint as mentioned here.
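This README does not spell out the objective used to train on [preferred, rejected] pairs. A common choice for pairwise preference data is a Bradley-Terry style loss, `-log(sigmoid(r_preferred - r_rejected))`, and the sketch below shows only that formula on scalar scores. Treat it as an assumption for illustration and check `train.py` for what the code actually does.

```python
import math

def pairwise_ranking_loss(preferred_score, rejected_score):
    """Return -log(sigmoid(preferred_score - rejected_score)), computed stably."""
    diff = preferred_score - rejected_score
    # -log(sigmoid(diff)) equals log(1 + exp(-diff)); branch to avoid overflow in exp.
    if diff >= 0:
        return math.log1p(math.exp(-diff))
    return -diff + math.log1p(math.exp(diff))
```

The loss is small when the reward model scores the preferred response well above the rejected one, and grows roughly linearly when the order is reversed.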

- We use AlpacaEval (thanks to the developers!) as the source of outputs from the reference model `text-davinci-003` on unseen instructions data.

- Firstly, generate `64` responses for the `553` unseen instructions from the Alpaca-7B model. Run this command to do so:

python inference/eval_inference_alpaca.py --device_id 3 --input_data data/inference_model_outputs/davinci003.json --save_generations data/inference_model_outputs/alpaca7b.json --model_path <path to alpaca-7b>

This code should generate a file that looks like alpaca.json.

- We will re-rank the 64 responses from the model using the reward models trained in the previous step. Run this command to do so:

python inference/reranking.py --device_id 3 --input_data data/inference_model_outputs/alpaca7b_temp1_64.json --save_generations data/inference_model_outputs/ratings_alpaca.json --reward_model_path <path to checkpoint-xxx> --alpaca_model_path <path to alpaca 7B> --reward_model_name ratings_alpaca

- Create `csv` files from the `json` of responses using the following commands:

python utils/convert_data_for_ratings_eval.py --input data/inference_model_outputs/davinci003.json --output data/inference_model_outputs/davinci_for_llm_eval.csv

python utils/convert_data_for_ratings_eval.py --input data/inference_model_outputs/ratings_alpaca.json --output data/inference_model_outputs/ratings_for_llm_eval.csv

- Run the following commands to rate the outputs using an LLM:

OPENAI_API_KEY=<YOUR OPENAI KEY> python scripts/llm_feedback_ratings.py --input_csv data/inference_model_outputs/davinci_for_llm_eval.csv --save_feedback_csv data/inference_model_outputs/feedback_ratings_davinci_for_llm_eval.csv

OPENAI_API_KEY=<YOUR OPENAI KEY> python scripts/llm_feedback_ratings.py --input_csv data/inference_model_outputs/ratings_for_llm_eval.csv --save_feedback_csv data/inference_model_outputs/feedback_ratings_ratings_for_llm_eval.csv

- Run the following command to get the win-rate for the aligned LLM against the reference model:

python scripts/calc_win_rate_from_ratings_eval.py --input1 data/inference_model_outputs/feedback_ratings_davinci_for_llm_eval.csv --input2 data/inference_model_outputs/feedback_ratings_ratings_for_llm_eval.csv

- Create a single `csv` file from the `json` files containing the model outputs (reference and aligned LLM):

python utils/convert_data_for_rankings_eval.py --davinci_file data/inference_model_outputs/davinci003.json --alpaca_file data/inference_model_outputs/ratings_alpaca.json --output data/inference_model_outputs/rankings_for_llm_eval.csv

- Run the following command to get the rankings for the pairwise judgments from the LLM:

OPENAI_API_KEY=<YOUR OPENAI API KEY> python scripts/llm_feedback_rankings.py --input_csv data/inference_model_outputs/rankings_for_llm_eval.csv --save_feedback_csv data/inference_model_outputs/feedback_rankings_davincivsratings_for_llm_eval.csv

- Run the following command to get the win-rate for the aligned LLM against the reference model:

python scripts/calc_win_rate_from_rankings_eval.py --input data/inference_model_outputs/feedback_rankings_davincivsratings_for_llm_eval.csv

TODO
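For the ratings-based win-rate step in the evaluation above, the idea can be illustrated by comparing the two score lists instruction by instruction. This is a hedged sketch and not the logic of `calc_win_rate_from_ratings_eval.py`: the function name is hypothetical, and reporting ties as a separate fraction is an assumption, since the README does not say how the script handles them.

```python
def win_rate_from_ratings(reference_scores, aligned_scores):
    """Compare per-instruction ratings of the aligned model against the reference.

    Both lists must be in the same instruction order. Returns the fractions of
    instructions where the aligned model wins, ties, or loses.
    """
    if len(reference_scores) != len(aligned_scores):
        raise ValueError("score lists must have the same length")
    n = len(reference_scores)
    if n == 0:
        raise ValueError("score lists must not be empty")
    pairs = list(zip(reference_scores, aligned_scores))
    wins = sum(1 for ref, ali in pairs if ali > ref)
    ties = sum(1 for ref, ali in pairs if ali == ref)
    return {"win": wins / n, "tie": ties / n, "loss": (n - wins - ties) / n}
```

With pairwise rankings the same computation applies, except that each row already carries the judge's preference, so no score comparison is needed.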