SIMULATION-BASED REINFORCEMENT LEARNING FOR SOCIAL DISTANCING

This is an open-source pandemic simulation environment for reinforcement learning, built with Unity ML-Agents. It was created as a dissertation project for the Advanced Computer Science Master's degree at the University of Sussex.

Open the "Project" folder in Pandemic_Simulation with the Unity Editor, then open and play the scene Assets/PandemicSimulation/Scenes/T3.unity.

- This project uses ML-Agents Release 4 (migrated from Unity ML-Agents 1.1.0, Release 3)

- Unity 2019.4.0f1

- Anaconda environment with Python 3.7

- TensorFlow 2.3.0

The following steps describe how to get a development environment running and train an agent.

To train an agent:

- Go to the config/PPO or config/SAC folder and open trainer_config.yaml (each algorithm has its own configuration file)

- Activate the Anaconda environment

- Start training: run mlagents-learn, then press Play in the Unity Editor when prompted

mlagents-learn is the main training utility provided by the ML-Agents Toolkit. It accepts a number of CLI options in addition to a YAML configuration file that contains all the configurations and hyperparameters used during training. The set of configurations and hyperparameters to include in this file depends on the agents in your environment and the training method you want to use. Keep in mind that hyperparameter values can have a big impact on training performance (i.e. your agent's ability to learn a policy that solves the task). The ML-Agents training configuration documentation reviews the hyperparameters for every training method and gives guidelines on their values.

To view a description of all the CLI options accepted by mlagents-learn, use the --help option:

mlagents-learn --help

The basic command for training, run from the config/PPO or config/SAC folder, is:

mlagents-learn ./trainer_config.yaml --run-id first_run
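If you prefer to launch runs from a script, the command above can be assembled programmatically. The following is a minimal Python sketch, not part of this project: the helpers build_train_command and start_training are hypothetical, and it assumes the PPO and SAC configurations sit at config/PPO/trainer_config.yaml and config/SAC/trainer_config.yaml relative to the working directory. It only uses the --run-id, --resume and --force options of mlagents-learn; check mlagents-learn --help for what your installed version supports.

```python
import subprocess
from pathlib import Path

# Assumption: configs live at config/<ALGORITHM>/trainer_config.yaml,
# relative to the directory this script is run from.
CONFIG_ROOT = Path("config")
ALGORITHMS = ("PPO", "SAC")


def build_train_command(algorithm, run_id, resume=False, force=False):
    """Return the mlagents-learn argument list for one run (hypothetical helper)."""
    algorithm = algorithm.upper()
    if algorithm not in ALGORITHMS:
        raise ValueError("algorithm must be one of %s, got %r" % (ALGORITHMS, algorithm))
    if resume and force:
        # Design choice of this sketch: continuing and overwriting a run conflict.
        raise ValueError("use either resume or force, not both")
    config_path = CONFIG_ROOT / algorithm / "trainer_config.yaml"
    command = ["mlagents-learn", str(config_path), "--run-id", run_id]
    if resume:
        command.append("--resume")  # continue the existing run with this run-id
    if force:
        command.append("--force")   # overwrite the existing run with this run-id
    return command


def start_training(algorithm, run_id, **flags):
    """Launch mlagents-learn (hypothetical helper); needs the activated environment."""
    return subprocess.run(build_train_command(algorithm, run_id, **flags), check=True)


if __name__ == "__main__":
    # Print the command instead of running it, so the script is safe to try.
    print(" ".join(build_train_command("PPO", "first_run")))
```

With the environment activated, start_training("SAC", "sac_run") would launch a run, after which you press Play in the Unity Editor. Rejecting --resume together with --force is a choice of this sketch, since the two flags ask for opposite things (continue versus overwrite a run).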

To view the results, run:

tensorboard --logdir results

This starts a local TensorBoard server (by default at http://localhost:6006) where you can see all the training graphs that ML-Agents records.

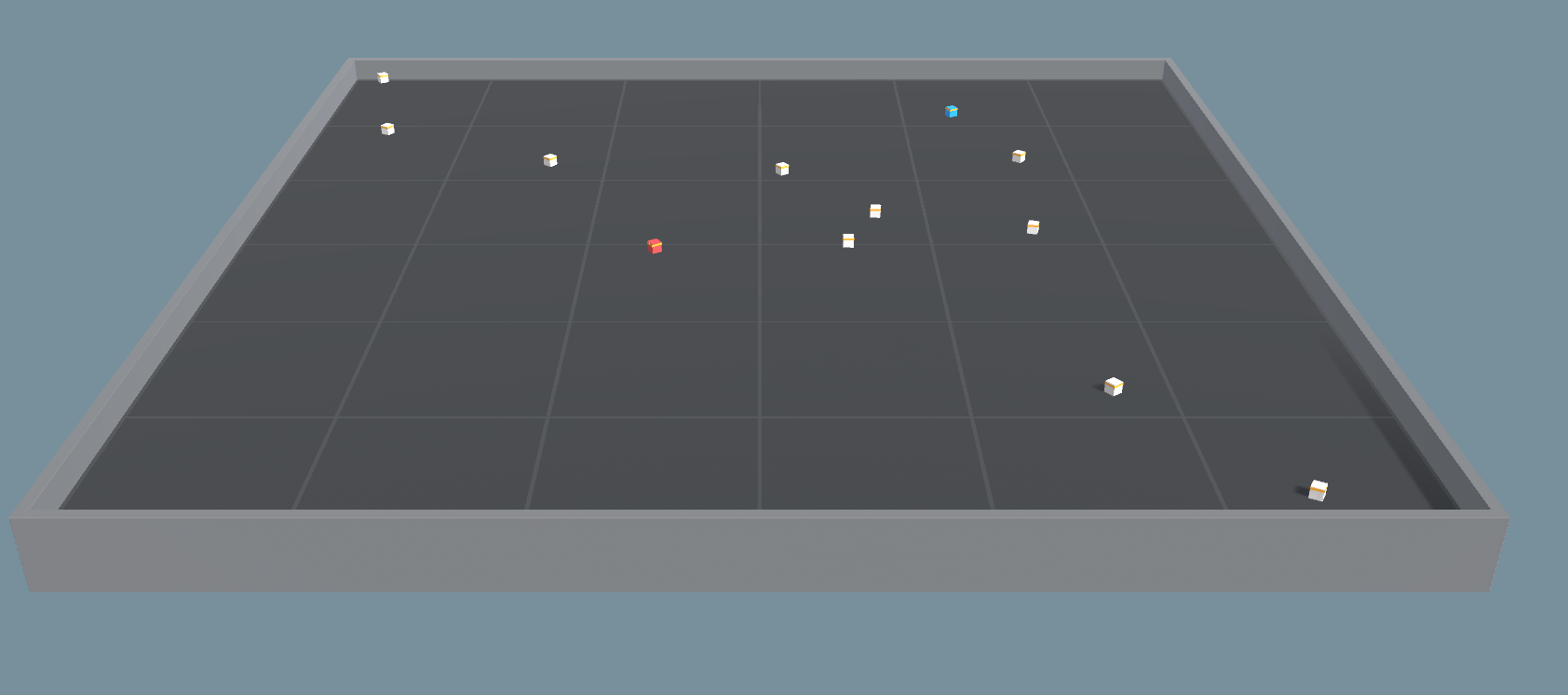

Single-Agent Environment: Surviving in an epidemic outbreak and collecting rewards

Multi-Agent Environment: Social Distancing and discovery of self-quarantine

Different states of the agents

Throughout the simulation, an agent's health status changes. To represent these changes, we use four colors:

- White bots are not controlled by a brain. They only have simple hard-coded behaviors, such as going directly to target locations or bouncing off the walls. They represent individuals in a community who do not act logically.

- Blue bots are controlled by a brain.

- Red bots are infected, whether or not they are controlled by a brain.

- Purple bots are no longer infectious. In SIR models, they correspond to the Recovered/Removed compartment.
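The color rules above can be summarized as a small state model. This is a minimal Python sketch for illustration only, not the project's Unity C# code: the names Health, Bot, infect and recover are hypothetical. It assumes that infection status decides the color first (red or purple for any bot) and that the brain only decides the color of healthy bots (blue or white). It also assumes standard SIR transitions, Susceptible to Infected to Recovered/Removed, with no reinfection.

```python
from dataclasses import dataclass
from enum import Enum


class Health(Enum):
    """Hypothetical health status, named after the SIR compartments."""
    SUSCEPTIBLE = "S"
    INFECTED = "I"
    RECOVERED = "R"  # Recovered/Removed: no longer infectious


@dataclass
class Bot:
    has_brain: bool                      # True if a brain (policy) controls the bot
    health: Health = Health.SUSCEPTIBLE

    def color(self):
        """Display color, assuming infection status takes priority over the brain."""
        if self.health is Health.INFECTED:
            return "red"                 # infected, with or without a brain
        if self.health is Health.RECOVERED:
            return "purple"              # no longer infectious
        return "blue" if self.has_brain else "white"

    def infect(self):
        # Assumption: only susceptible bots can be infected (no reinfection).
        if self.health is Health.SUSCEPTIBLE:
            self.health = Health.INFECTED

    def recover(self):
        # Only an infected bot can move to Recovered/Removed.
        if self.health is Health.INFECTED:
            self.health = Health.RECOVERED
```

For example, Bot(has_brain=False) starts white, turns red after infect(), and turns purple after recover().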

- Ege Hosgungor - Initial work - Hsgngr

See also the list of contributors who participated in this project.

This project is inspired by the following:

If you find this useful for your research, please consider citing this project by Hsgngr. Please contact Ege Hosgungor <hsgngr@gmail.com> with any comments or feedback.