This is the final project for my Digital Image Processing course. It is written in Python with KISS (keep it simple, stupid) in mind. Everything, including error estimation, is included in the code; running it with Python 2.x, OpenCV and NumPy should produce roughly the same results as shown below.

The project is simply Project 12 of the Laboratory Projects for Digital Image Processing by Gonzalez and Woods (Chinese edition); go take a look.

In short, I compute the Fourier descriptors of an arbitrary shape (the shape must be white on black, by the way) and reconstruct its contour using the minimum number of descriptors. Then, based on the descriptors I've selected, I build an SVM classifier, a Bayes classifier, and a minimum distance classifier using descriptors contaminated by Gaussian noise.
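A minimal sketch of the descriptor computation, assuming the boundary is already available as an ordered list of `(x, y)` points. In the project the boundary comes from the white-on-black image, for example via OpenCV's `cv2.findContours`; that step is an assumption here and is omitted. Each point becomes a complex number s(k) = x(k) + j·y(k), and the descriptors are the discrete Fourier transform of that sequence. The plain O(N²) DFT below uses only the standard library to stay self-contained; `numpy.fft.fft` computes the same unnormalized values much faster. The function names and the square boundary are hypothetical.

```python
import cmath

def fourier_descriptors(contour):
    """DFT of a closed contour given as an ordered list of (x, y) points."""
    s = [complex(x, y) for x, y in contour]  # s(k) = x(k) + j*y(k)
    n = len(s)
    return [sum(s[k] * cmath.exp(-2j * cmath.pi * u * k / n) for k in range(n))
            for u in range(n)]

def reconstruct(descriptors):
    """Inverse DFT (with the 1/N factor): map descriptors back to (x, y) points."""
    n = len(descriptors)
    points = []
    for k in range(n):
        z = sum(descriptors[u] * cmath.exp(2j * cmath.pi * u * k / n)
                for u in range(n)) / n
        points.append((z.real, z.imag))
    return points

# A hypothetical 8-point square boundary, traversed in order.
square = [(0, 0), (1, 0), (2, 0), (2, 1), (2, 2), (1, 2), (0, 2), (0, 1)]
a = fourier_descriptors(square)
print(a[0] / len(square))  # (1+1j): a(0)/N is the centroid of the boundary points
```

Keeping all N descriptors reproduces the boundary exactly (up to rounding); the interesting part is how few of them can be kept while the shape stays recognizable.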

It took me a long time to accept that the classifiers work almost too well to believe: in all three cases they achieve a success rate of 100%. I managed to explain why within the program.

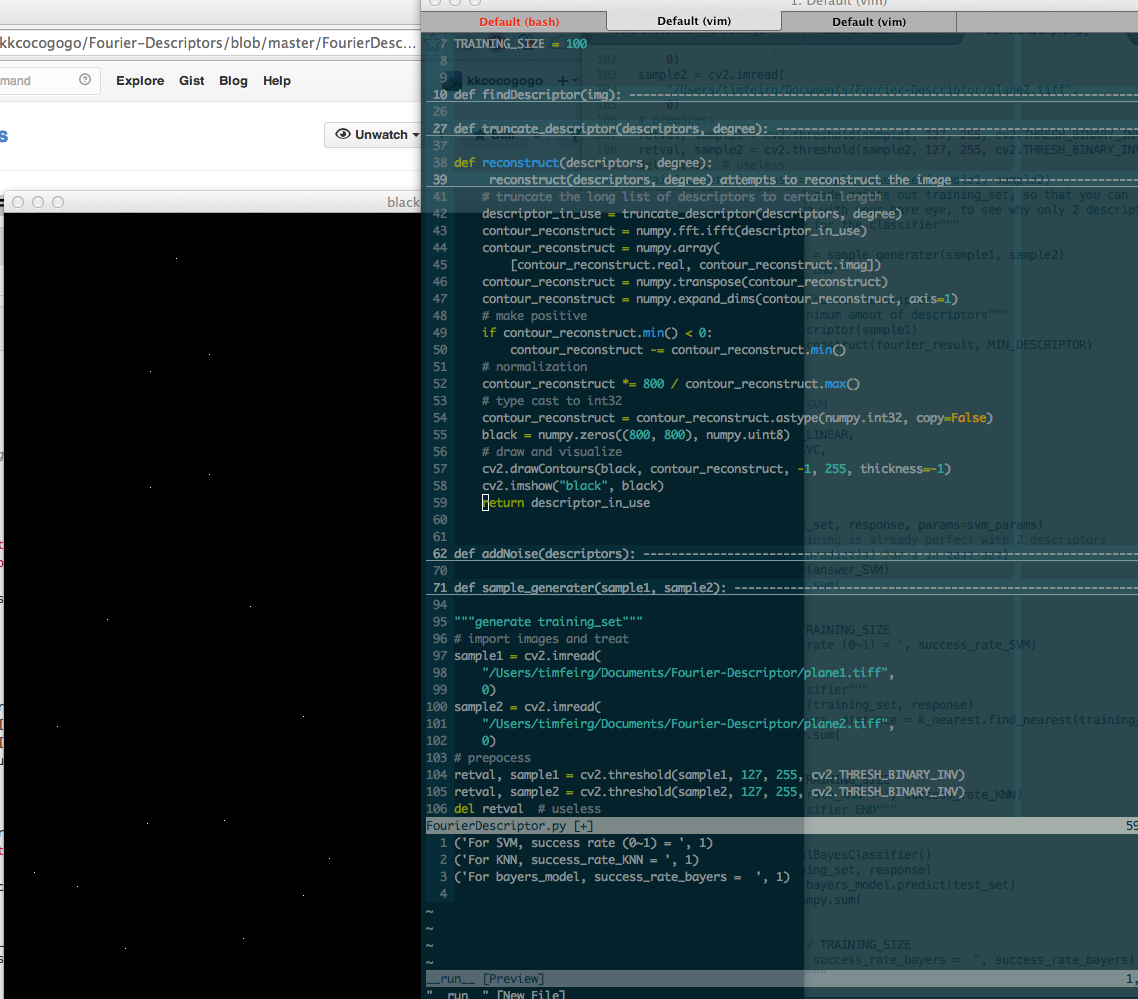

As mentioned, the success rate is 100% for all three classifiers; you can play with the noise level, which ought to make a difference. The screenshot above shows the result using 18 descriptors.
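A minimal sketch of contaminating descriptor features with Gaussian noise to generate samples for the classifiers. It assumes each shape is represented by a short real-valued feature vector (for instance the magnitudes of the kept descriptors); the helper names, feature values and `sigma` levels are hypothetical, and the project's own feature choice may differ. `sigma` is the knob to turn when playing with the noise.

```python
import random

def contaminate(features, count, sigma, rng=None):
    """Return `count` noisy copies of `features`, each entry perturbed by N(0, sigma^2)."""
    if rng is None:
        rng = random.Random()
    return [[f + rng.gauss(0.0, sigma) for f in features] for _ in range(count)]

def mean_deviation(samples, clean):
    """Average Euclidean distance of the noisy samples from the clean vector."""
    return sum(sum((s - c) ** 2 for s, c in zip(x, clean)) ** 0.5
               for x in samples) / len(samples)

clean = [12.0, 3.5]          # hypothetical features of one shape
rng = random.Random(42)      # fixed seed so experiments are repeatable
low = contaminate(clean, 1000, 0.1, rng)
high = contaminate(clean, 1000, 2.0, rng)
print(mean_deviation(low, clean), mean_deviation(high, clean))
```

Larger `sigma` spreads each class's samples further from its clean descriptor, which is what eventually makes the classes overlap and the success rate drop below 100%.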

I tried very hard to keep it simple, so the final code should be quite intuitive. I saw no real reason to optimize it.

- Here's a dangerous trap: when you keep only, say, 20 of the Fourier descriptors, it's vital that those frequencies be symmetric about zero frequency (in an unshifted FFT the negative frequencies sit at the end of the array), or else you'll reconstruct a weird shape.

- Just 2 descriptors are enough for a 100% success rate; print the training set and you'll see why.
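The truncation trap can be shown with a small sketch. It uses an unnormalized DFT with the same ordering as `numpy.fft.fft`, where index u holds frequency u for small u and frequency u − N near the end of the array. The hypothetical helper `keep_symmetric` keeps frequencies −m..m (an odd count, 2m + 1, which is an assumption of this sketch), while `keep_first` falls into the trap by keeping the first entries only. The test shape is an ellipse x = 2cos t, y = sin t, which as a complex signal equals 1.5·e^{jt} + 0.5·e^{−jt}, so it has energy at frequencies +1 and −1.

```python
import cmath
import math

def dft(s):
    """Unnormalized forward DFT (same convention as numpy.fft.fft)."""
    n = len(s)
    return [sum(s[k] * cmath.exp(-2j * cmath.pi * u * k / n) for k in range(n))
            for u in range(n)]

def idft(a):
    """Inverse DFT, including the 1/N factor."""
    n = len(a)
    return [sum(a[u] * cmath.exp(2j * cmath.pi * u * k / n) for u in range(n)) / n
            for k in range(n)]

def keep_symmetric(a, m):
    """Keep frequencies -m..m, i.e. a[0..m] and a[N-m..N-1]; zero the rest."""
    n = len(a)
    return [a[u] if u <= m or u >= n - m else 0j for u in range(n)]

def keep_first(a, count):
    """The trap: keep a[0..count-1], i.e. only non-negative frequencies."""
    return [a[u] if u < count else 0j for u in range(len(a))]

# Hypothetical ellipse sampled at 16 boundary points.
n = 16
s = [complex(2 * math.cos(2 * math.pi * k / n), math.sin(2 * math.pi * k / n))
     for k in range(n)]
a = dft(s)

# Both versions keep 3 coefficients, but only the symmetric one keeps frequency -1.
err_sym = max(abs(z - w) for z, w in zip(idft(keep_symmetric(a, 1)), s))
err_first = max(abs(z - w) for z, w in zip(idft(keep_first(a, 3)), s))
print(err_sym, err_first)  # tiny vs. about 0.5
```

Keeping the first three entries drops the 0.5·e^{−jt} term, so the "reconstruction" collapses into a circle of radius 1.5; the symmetric selection with the same number of coefficients recovers the ellipse.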
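A minimal sketch of a minimum distance classifier illustrating why two features can be enough: when the class means lie many noise standard deviations apart, assigning each sample to the nearest class mean is essentially always right. The class names, feature values, noise level and sample counts are hypothetical, not the project's training set.

```python
import random

def class_means(training):
    """training maps label -> list of feature vectors; returns label -> mean vector."""
    means = {}
    for label, vectors in training.items():
        dim = len(vectors[0])
        means[label] = [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]
    return means

def classify(x, means):
    """Minimum distance rule: the label whose mean is nearest in Euclidean distance."""
    return min(means, key=lambda label: sum((xi - mi) ** 2
                                            for xi, mi in zip(x, means[label])))

def noisy(center, count, sigma, rng):
    """Samples of `center` with i.i.d. Gaussian noise N(0, sigma^2) on each feature."""
    return [[c + rng.gauss(0.0, sigma) for c in center] for _ in range(count)]

# Hypothetical two-descriptor features; the centers are about 8.6 apart, sigma is 0.5.
centers = {"shape_a": [10.0, 2.0], "shape_b": [3.0, 7.0]}
rng = random.Random(1)
training = {label: noisy(c, 50, 0.5, rng) for label, c in centers.items()}
means = class_means(training)

samples = [(label, x) for label, c in centers.items() for x in noisy(c, 200, 0.5, rng)]
success = sum(classify(x, means) == label for label, x in samples) / len(samples)
print(success)
```

Printing `means` next to the raw training vectors shows the same thing the second bullet hints at: the classes are so far apart relative to the noise that any reasonable classifier separates them perfectly.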

The textbook was somewhat difficult to understand, and I learned most of this from the internet, so my approach may not be exactly the same as the one in the textbook.

Check out the other material in this repository to see if there's anything helpful; there are all sorts of PDF files to read.