Paper: https://aclanthology.org/2023.findings-eacl.79.pdf

- Tran Cong Dao

- Pham Nhut Huy

- Nguyen Tuan Anh

- Hy Truong Son (Corresponding author / PI)

- bash: bash scripts to run the pipeline

- config: model configuration (JSON files)

- dataset: datasets folder (stores both the original txt datasets and the saved datasets that are read back with `datasets.load_from_disk`)

- source: main Python files to run pre-training and tokenizer training

- tokenizer: folder to store tokenizers

- Split the original txt datasets into train, validation, and test sets (90%, 5%, and 5%, respectively).

- Segment the datasets with the PyVi library

- Save datasets to disk
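The three data preparation steps above could look roughly like the sketch below. It is not the repository's actual code: the function names, the `"text"` column name, the shuffling seed, and the output directory are all assumptions made for illustration. Word segmentation uses `ViTokenizer.tokenize` from PyVi, and saving uses `DatasetDict.save_to_disk` from the Hugging Face `datasets` library.

```python
import random


def split_lines(lines, train_pct=90, valid_pct=5, seed=0):
    """Shuffle lines and split them into train/validation/test.

    The remainder after train and validation goes to test,
    which gives 90/5/5 with the default percentages.
    """
    lines = list(lines)
    random.Random(seed).shuffle(lines)  # seed is an assumption, for reproducibility
    n_train = len(lines) * train_pct // 100
    n_valid = len(lines) * valid_pct // 100
    return {
        "train": lines[:n_train],
        "validation": lines[n_train:n_train + n_valid],
        "test": lines[n_train + n_valid:],
    }


def segment_and_save(splits, out_dir):
    """Segment every line with PyVi and save all splits to disk."""
    # Third-party imports are local so split_lines works without them installed.
    from pyvi import ViTokenizer
    from datasets import Dataset, DatasetDict

    segmented = DatasetDict({
        name: Dataset.from_dict(
            {"text": [ViTokenizer.tokenize(text) for text in texts]}
        )
        for name, texts in splits.items()
    })
    segmented.save_to_disk(out_dir)  # later read back with datasets.load_from_disk
    return segmented
```

A hypothetical call would be `segment_and_save(split_lines(open("corpus.txt", encoding="utf-8")), "dataset/segmented")`, where both paths are placeholders.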

- Load datasets

- Train the tokenizers with SentencePiece models

- Save tokenizers
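As a rough illustration of the tokenizer steps above, the sketch below loads the saved dataset, writes the training split to a plain-text file, and trains a SentencePiece model on it. The function names, the `"text"` column, the vocabulary size, and the `unigram` model type are assumptions, not values taken from this repository.

```python
def write_corpus(texts, path):
    """Write one non-empty text per line and return how many lines were written."""
    count = 0
    with open(path, "w", encoding="utf-8") as f:
        for text in texts:
            line = " ".join(text.split())  # collapse whitespace and inner newlines
            if line:
                f.write(line + "\n")
                count += 1
    return count


def train_tokenizer(dataset_dir, corpus_path, model_prefix, vocab_size=32000):
    """Train a SentencePiece model on the train split of a saved dataset."""
    # Third-party imports are local so write_corpus works without them installed.
    from datasets import load_from_disk
    import sentencepiece as spm

    train_split = load_from_disk(dataset_dir)["train"]
    write_corpus(train_split["text"], corpus_path)

    # Writes <model_prefix>.model and <model_prefix>.vocab.
    spm.SentencePieceTrainer.train(
        input=corpus_path,
        model_prefix=model_prefix,
        vocab_size=vocab_size,  # assumed value
        model_type="unigram",   # assumed; SentencePiece also supports "bpe"
    )
    return model_prefix + ".model"
```

Writing the corpus to a file first keeps the sketch simple, since `SentencePieceTrainer.train` reads one sentence per line from `input`.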

- Load datasets

- Load tokenizers

- Pre-train DeBERTa-v3
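The two loading steps that precede pre-training might be sketched as follows. This covers only data and tokenizer loading, not the DeBERTa-v3 training loop itself. Packing token ids into fixed-length blocks is a common preprocessing choice and is an assumption here, as are the function names, the `"text"` column, and the block size of 512.

```python
def pack_into_blocks(token_id_lists, block_size=512):
    """Concatenate tokenized texts and cut them into equal-length blocks.

    The final partial block is dropped so every block has block_size ids.
    """
    flat = [tok for ids in token_id_lists for tok in ids]
    usable = (len(flat) // block_size) * block_size
    return [flat[i:i + block_size] for i in range(0, usable, block_size)]


def load_and_encode(dataset_dir, spm_model_file, split="train", block_size=512):
    """Load a saved dataset and a SentencePiece model, then encode one split."""
    # Third-party imports are local so pack_into_blocks works without them installed.
    from datasets import load_from_disk
    import sentencepiece as spm

    dataset = load_from_disk(dataset_dir)
    sp = spm.SentencePieceProcessor(model_file=spm_model_file)

    texts = list(dataset[split]["text"])  # plain list of strings for batch encoding
    ids = sp.encode(texts, out_type=int)  # one list of token ids per text
    return pack_into_blocks(ids, block_size)
```

The resulting blocks would then be fed to whatever pre-training objective the scripts in `source` implement.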

- POS tagging and NER (POS_NER)

- Question Answering (QA and QA2)

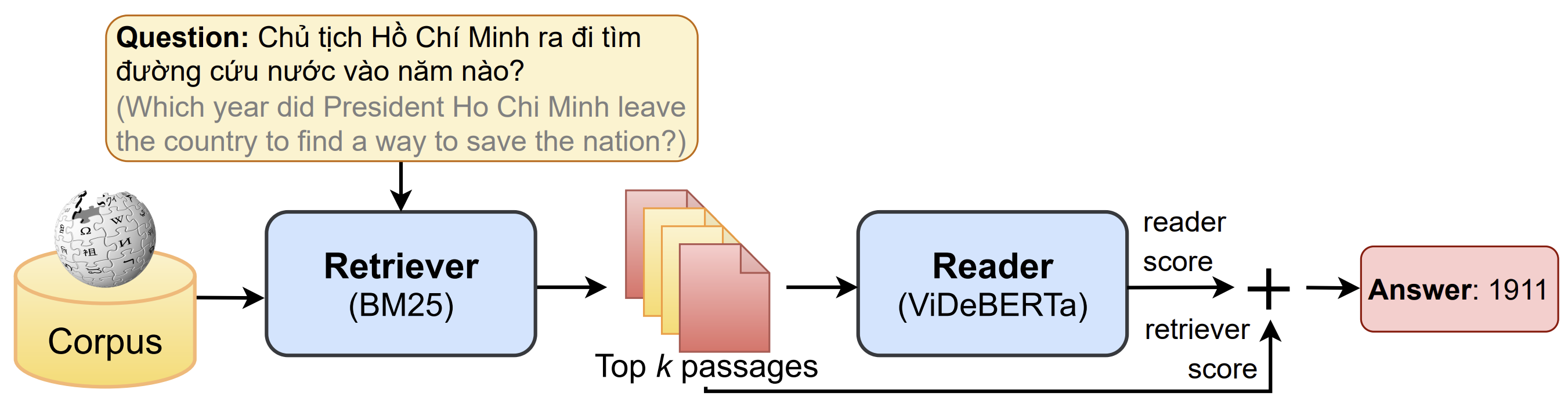

- Open-domain Question Answering (OPQA)