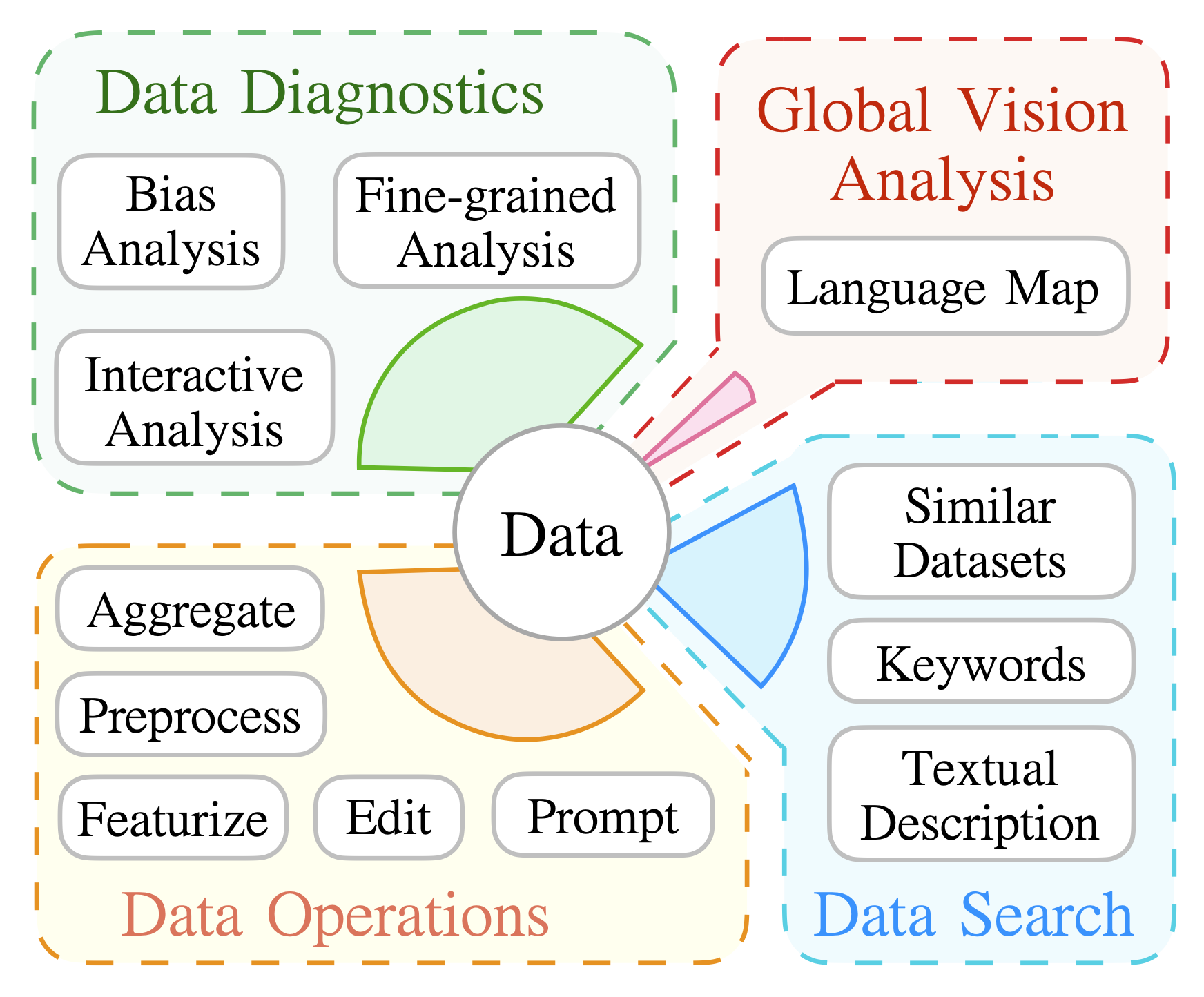

DataLab is a unified platform that allows NLP researchers to perform a number of data-related tasks efficiently and easily. In particular, DataLab supports the following functionalities:

- Data Diagnostics: DataLab allows for analysis and understanding of data to uncover undesirable traits such as hate speech, gender bias, or label imbalance.

- Operation Standardization: DataLab provides and standardizes a large number of data processing operations, including aggregating, preprocessing, featurizing, editing and prompting operations.

- Data Search: DataLab provides a semantic dataset search tool to help identify appropriate datasets given a textual description of an idea.

- Global Analysis: DataLab provides tools to perform global analyses over a variety of datasets.
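As an illustration of the kind of check that Data Diagnostics covers, label imbalance can be measured simply by counting labels. The following is a minimal sketch in plain Python, not DataLab's own implementation: `label_distribution` and `imbalance_ratio` are hypothetical helper names, and the labels are invented for the example.

```python
from collections import Counter


def label_distribution(labels):
    """Return the relative frequency of each label (hypothetical helper)."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {label: count / total for label, count in counts.items()}


def imbalance_ratio(labels):
    """Return the most frequent label count divided by the least frequent one."""
    counts = Counter(labels)
    return max(counts.values()) / min(counts.values())


# Toy labels, invented for illustration only.
toy_labels = ["sports", "sports", "sports", "business"]
print(label_distribution(toy_labels))
print(imbalance_ratio(toy_labels))
```

A ratio close to 1 suggests balanced classes, while a large ratio indicates that one class dominates the dataset.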

DataLab can be installed from PyPI:

    pip install --upgrade pip
    pip install datalabs

or from source:

    # This is suitable for SDK developers
    pip install --upgrade pip
    git clone git@github.com:ExpressAI/DataLab.git
    cd DataLab
    pip install .

Here we give several examples to showcase the usage of DataLab. For more information, please refer to the corresponding sections in our documentation.

    # pip install datalabs
    from datalabs import operations, load_dataset
    from featurize import *

    dataset = load_dataset("ag_news")

    # print the task schema
    print(dataset['test']._info.task_templates)

    # data operators
    res = dataset["test"].apply(get_text_length)
    print(next(res))

    # get entities
    res = dataset["test"].apply(get_entities_spacy)
    print(next(res))

    # get part-of-speech tags
    res = dataset["test"].apply(get_postag_spacy)
    print(next(res))

    from edit import *

    # add typos
    res = dataset["test"].apply(add_typo)
    print(next(res))

    # change person name
    res = dataset["test"].apply(change_person_name)
    print(next(res))
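In the example above, each result of `apply` is read with `next(res)`, so results are consumed one at a time. The sketch below mimics that pattern in plain Python under stated assumptions: `apply_lazily` and `text_length` are hypothetical stand-ins, samples are assumed to be dictionaries with a `text` field, and length is counted in whitespace-separated tokens. None of this is DataLab's actual implementation.

```python
def text_length(sample):
    """Hypothetical featurizer: number of whitespace-separated tokens."""
    return {"text_length": len(sample["text"].split())}


def apply_lazily(samples, operation):
    """Yield the result of `operation` for each sample, one at a time."""
    for sample in samples:
        yield operation(sample)


# Toy samples, invented for illustration only.
toy_samples = [
    {"text": "Stocks rally on strong earnings", "label": 2},
    {"text": "Home team wins", "label": 1},
]

res = apply_lazily(toy_samples, text_length)
first = next(res)   # computed only when requested
second = next(res)
print(first, second)
```

Because the generator computes a result only when it is requested, inspecting the first sample does not require processing the whole split.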

Task schemas and their fields:

- `text-classification`
  - `text`: `str`
  - `label`: `ClassLabel`
- `text-matching`
  - `text1`: `str`
  - `text2`: `str`
  - `label`: `ClassLabel`
- `summarization`
  - `text`: `str`
  - `summary`: `str`
- `sequence-labeling`
  - `tokens`: `List[str]`
  - `tags`: `List[ClassLabel]`
- `question-answering-extractive`
  - `context`: `str`
  - `question`: `str`
  - `answers`: `List[{"text":"","answer_start":""}]`

One can use `dataset[SPLIT]._info.task_templates` to get more task-dependent information, where `SPLIT` can be `train`, `validation`, or `test`.
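The task schemas listed above can be read as a set of required fields per task. The following sketch encodes them as a plain dictionary and reports which fields a sample is missing. `TASK_FIELDS` and `missing_fields` are hypothetical names, not part of DataLab, and only field presence is checked, not field types.

```python
# Required fields per task, copied from the schema list above.
TASK_FIELDS = {
    "text-classification": ["text", "label"],
    "text-matching": ["text1", "text2", "label"],
    "summarization": ["text", "summary"],
    "sequence-labeling": ["tokens", "tags"],
    "question-answering-extractive": ["context", "question", "answers"],
}


def missing_fields(task, sample):
    """Return the schema fields that `sample` lacks for `task`."""
    return [field for field in TASK_FIELDS[task] if field not in sample]


# Toy sample, invented for illustration only.
sample = {"text": "A short news item.", "label": 0}
print(missing_fields("text-classification", sample))
print(missing_fields("summarization", sample))
```

An empty list means the sample carries every field the task expects.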

DataLab originated from a fork of the awesome Hugging Face Datasets and TensorFlow Datasets. We sincerely thank Hugging Face Datasets and TensorFlow Datasets for building these amazing libraries. More details on the differences between DataLab and them can be found in the section